Feed aggregator

How to move a table in oracle database 19c excluding Lob column

VMWARE

Is STANDARD_HASH or DBMS_CRYPTO disabled for Always Free Autonomous Oracle Cloud

Column Object Storage in Relational Tables

كتاب

IN Vs NOT IN filters

Why is the cwallet.sso that is installed by osbws_install.jar, prompting for password?

Data Base Size

Forgejo: Organizations, Repositories and Actions

In the last post we’ve deployed Forgejo on FreeBSD 15. In this post we’re going to do something with it and that is: We’ll create a new organization, a new repository, and finally we want to create a simple action. An “Action” is what GitLab calls a pipeline.

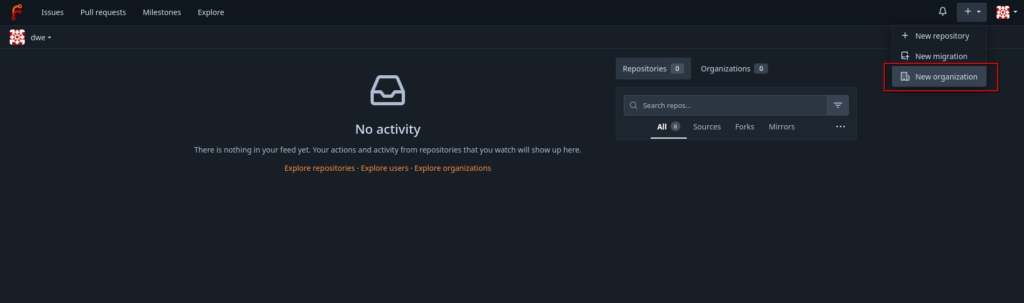

Creating a new organization is just a matter of a few clicks:

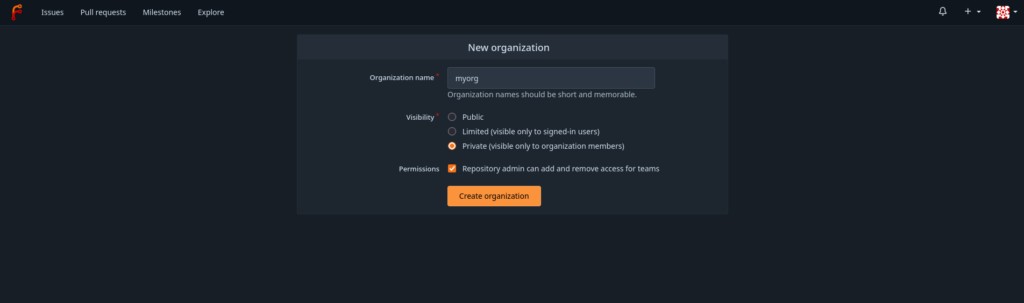

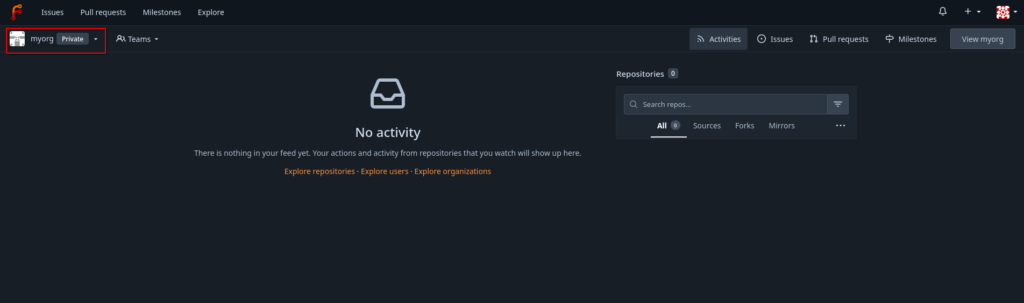

The only change to the default settings is the visibility, which is changed to private. The interface directly switches to the new organizations once it is created:

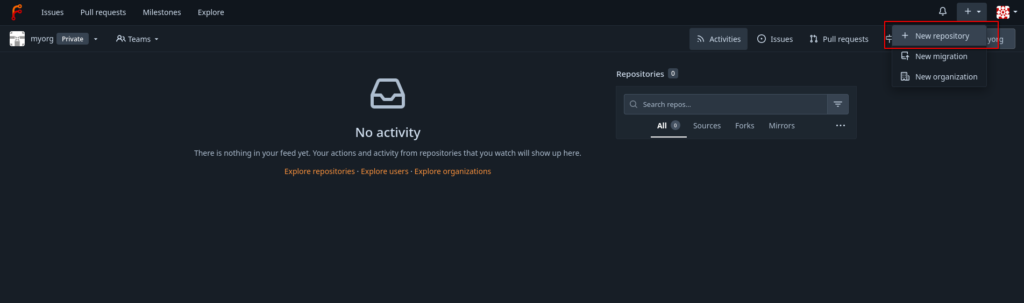

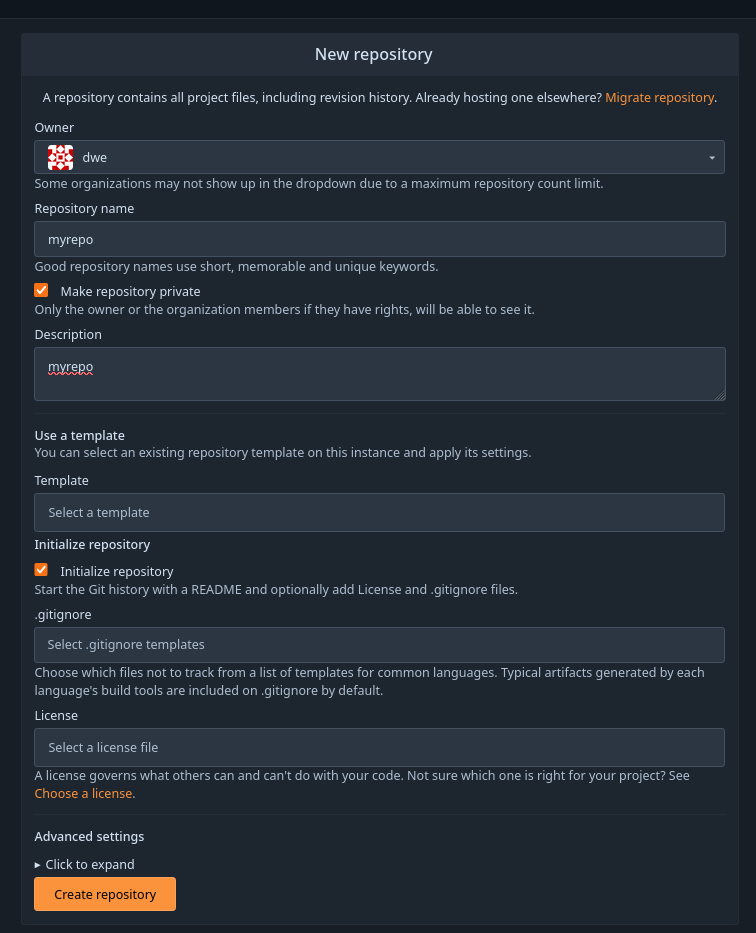

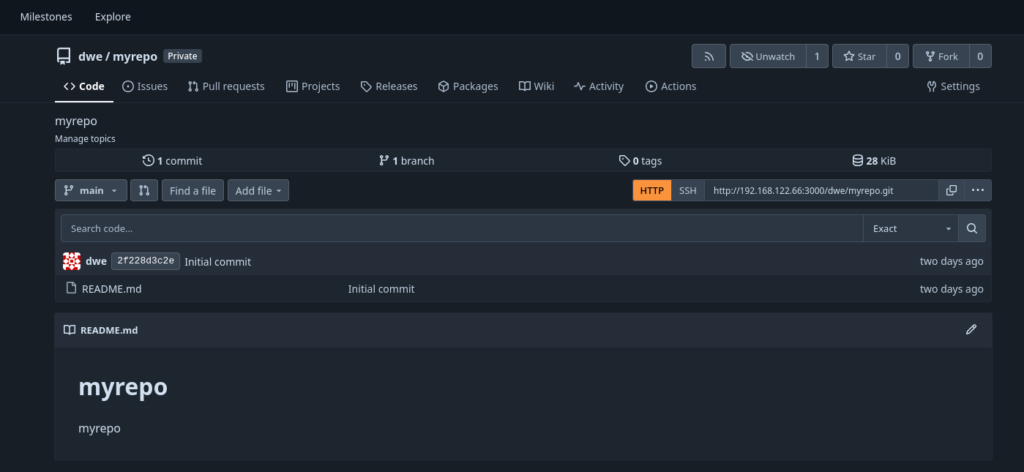

The next step is to create and initialize a new repository, which is also just a matter of a few clicks:

All the defaults, except for the “private” flag.

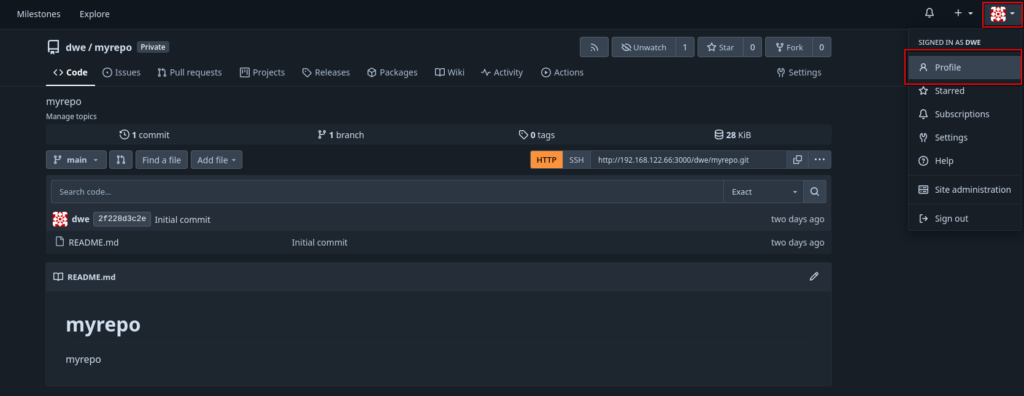

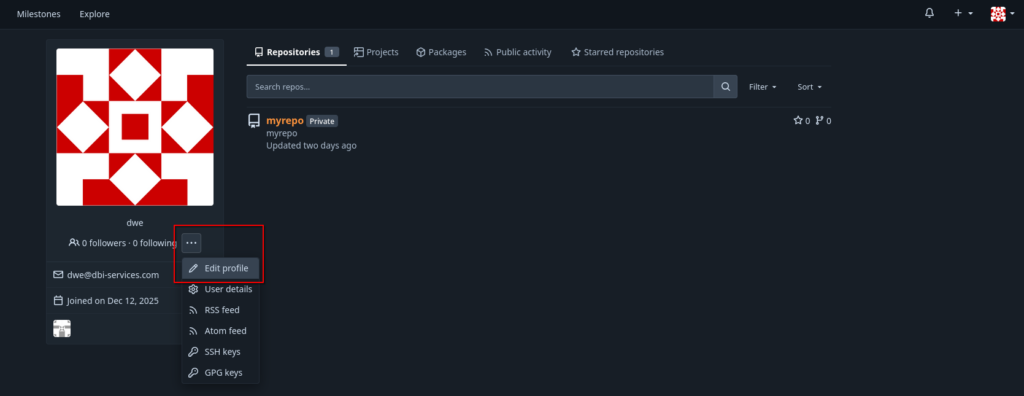

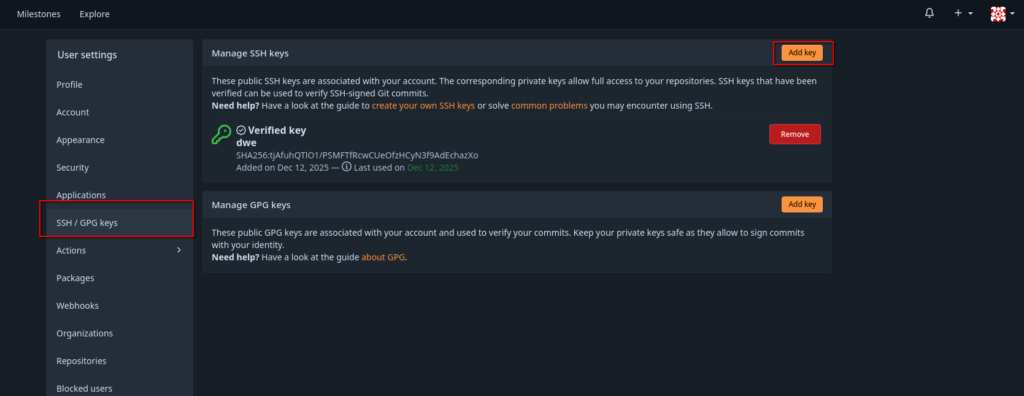

To clone this repository locally you’ll need to add your public ssh key to your user’s profile:

Once you have that, the repository can be cloned as usual:

dwe@ltdwe:~/Downloads$ git clone ssh://git@192.168.122.66/dwe/myrepo.git

Cloning into 'myrepo'...

remote: Enumerating objects: 3, done.

remote: Counting objects: 100% (3/3), done.

remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0 (from 0)

Receiving objects: 100% (3/3), done.

dwe@ltdwe:~/Downloads$ ls -la myrepo/

total 4

drwxr-xr-x 1 dwe dwe 26 Dec 15 09:41 .

drwxr-xr-x 1 dwe dwe 910 Dec 15 09:41 ..

drwxr-xr-x 1 dwe dwe 122 Dec 15 09:41 .git

-rw-r--r-- 1 dwe dwe 16 Dec 15 09:41 README.md

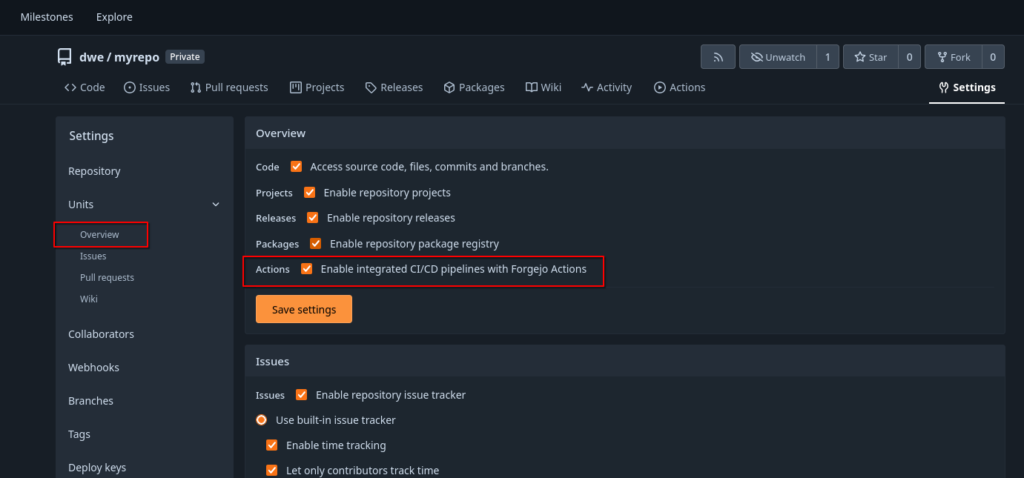

So far so good, lets create a new “Action”. Before we do that, we need to check that actions are enabled for the repository:

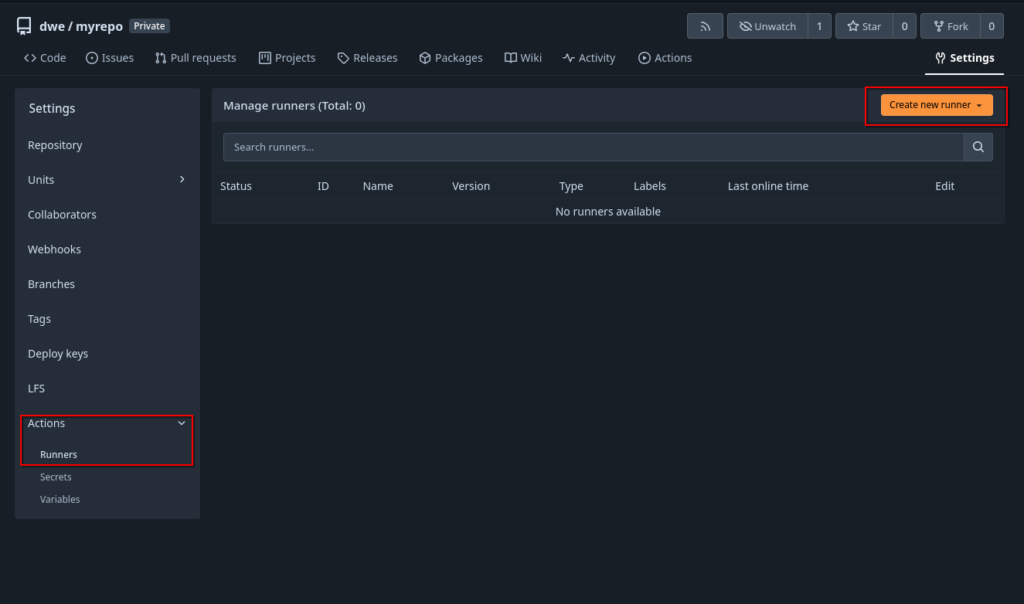

What we need now is a so-called “Runner”. A “Runner” is a daemon that fetches work from an Forgejo instance, executes and returns back the result. For the “Runner” we’ll use a Debian 13 minimal setup:

root@debian13:~$ cat /etc/os-release

PRETTY_NAME="Debian GNU/Linux 13 (trixie)"

NAME="Debian GNU/Linux"

VERSION_ID="13"

VERSION="13 (trixie)"

VERSION_CODENAME=trixie

DEBIAN_VERSION_FULL=13.2

ID=debian

HOME_URL="https://www.debian.org/"

SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

The only requirement is to have Git, curl and jq installed, so:

root@debian13:~$ apt install -y git curl jq

root@debian13:~$ git --version

git version 2.47.3

Downloading and installing the runner (this is a copy/paste from the official documentation):

root@debian13:~$ export ARCH=$(uname -m | sed 's/x86_64/amd64/;s/aarch64/arm64/')

root@debian13:~$ echo $ARCH

amd64

root@debian13:~$ export RUNNER_VERSION=$(curl -X 'GET' https://data.forgejo.org/api/v1/repos/forgejo/runner/releases/latest | jq .name -r | cut -c 2-)

root@debian13:~$ echo $RUNNER_VERSION

12.1.2

root@debian13:~$ export FORGEJO_URL="https://code.forgejo.org/forgejo/runner/releases/download/v${RUNNER_VERSION}/forgejo-runner-${RUNNER_VERSION}-linux-${ARCH}"

root@debian13:~$ wget -O forgejo-runner ${FORGEJO_URL}

root@debian13:~$ chmod +x forgejo-runner

root@debian13:~$ wget -O forgejo-runner.asc ${FORGEJO_URL}.asc

root@debian13:~$ gpg --keyserver hkps://keys.openpgp.org --recv EB114F5E6C0DC2BCDD183550A4B61A2DC5923710

gpg: directory '/root/.gnupg' created

gpg: keybox '/root/.gnupg/pubring.kbx' created

gpg: /root/.gnupg/trustdb.gpg: trustdb created

gpg: key A4B61A2DC5923710: public key "Forgejo <contact@forgejo.org>" imported

gpg: Total number processed: 1

gpg: imported: 1

root@debian13:~$ gpg --verify forgejo-runner.asc forgejo-runner && echo "✓ Verified" || echo "✗ Failed"

gpg: Signature made Sat 06 Dec 2025 11:10:50 PM CET

gpg: using EDDSA key 0F527CF93A3D0D0925D3C55ED0A820050E1609E5

gpg: Good signature from "Forgejo <contact@forgejo.org>" [unknown]

gpg: aka "Forgejo Releases <release@forgejo.org>" [unknown]

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: EB11 4F5E 6C0D C2BC DD18 3550 A4B6 1A2D C592 3710

Subkey fingerprint: 0F52 7CF9 3A3D 0D09 25D3 C55E D0A8 2005 0E16 09E5

✓ Verified

Move that to a location which is in the PATH:

root@debian13:~$ mv forgejo-runner /usr/local/bin/forgejo-runner

root@debian13:~$ forgejo-runner -v

forgejo-runner version v12.1.2

As usual, a separate user should be created to run a service:

root@debian13:~$ groupadd runner

root@debian13:~$ useradd -g runner -m -s /bin/bash runner

As the runner will use Docker, Podman or LXC to execute the Actions, we’ll need to install Podman as well:

root@debian13:~$ apt install -y podman podman-docker

root@debian13:~$ podman --version

podman version 5.4.2

root@debian13:~$ systemctl enable --now podman.socket

root@debian13:~$ machinectl shell runner@

Connected to the local host. Press ^] three times within 1s to exit session.

runner@debian13:~$ systemctl --user enable --now podman.socket

Created symlink '/home/runner/.config/systemd/user/sockets.target.wants/podman.socket' → '/usr/lib/systemd/user/podman.socket'.

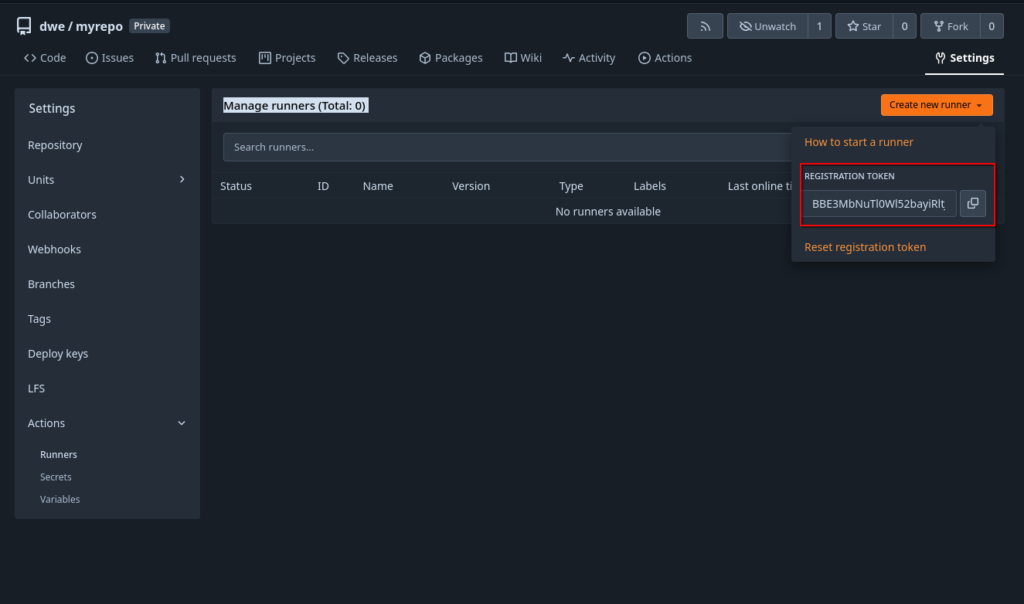

Now we need to register the runner with the Forgejo instance. Before we can do that, we need to fetch the registration token:

Back on the runner, register it:

root@debian13:~$ su - runner

runner@debian13:~$ forgejo-runner register

INFO Registering runner, arch=amd64, os=linux, version=v12.1.2.

WARN Runner in user-mode.

INFO Enter the Forgejo instance URL (for example, https://next.forgejo.org/):

http://192.168.122.66:3000/

INFO Enter the runner token:

BBE3MbNuTl0Wl52bayiRltJS8ciagRqghe7bXIXE

INFO Enter the runner name (if set empty, use hostname: debian13):

runner1

INFO Enter the runner labels, leave blank to use the default labels (comma-separated, for example, ubuntu-20.04:docker://node:20-bookworm,ubuntu-18.04:docker://node:20-bookworm):

INFO Registering runner, name=runner1, instance=http://192.168.122.66:3000/, labels=[docker:docker://data.forgejo.org/oci/node:20-bullseye].

DEBU Successfully pinged the Forgejo instance server

INFO Runner registered successfully.

runner@debian13:~$

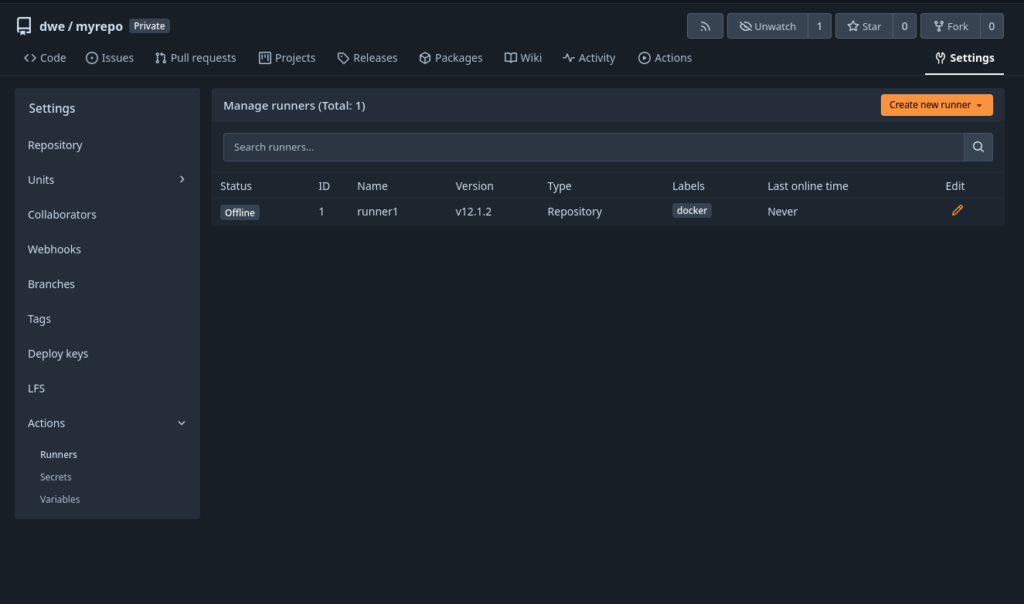

This will make the new runner visible in the interface, but it is in “offline” state:

Time to startup the runner:

root@debian13:~$ cat /etc/systemd/system/forgejo-runner.service

[Unit]

Description=Forgejo Runner

Documentation=https://forgejo.org/docs/latest/admin/actions/

After=docker.service

[Service]

ExecStart=/usr/local/bin/forgejo-runner daemon

ExecReload=/bin/kill -s HUP $MAINPID

# This user and working directory must already exist

User=runner

WorkingDirectory=/home/runner

Restart=on-failure

TimeoutSec=0

RestartSec=10

[Install]

WantedBy=multi-user.target

root@debian13:~$ systemctl daemon-reload

root@debian13:~$ systemctl enable forgejo-runner

root@debian13:~$ systemctl start forgejo-runner

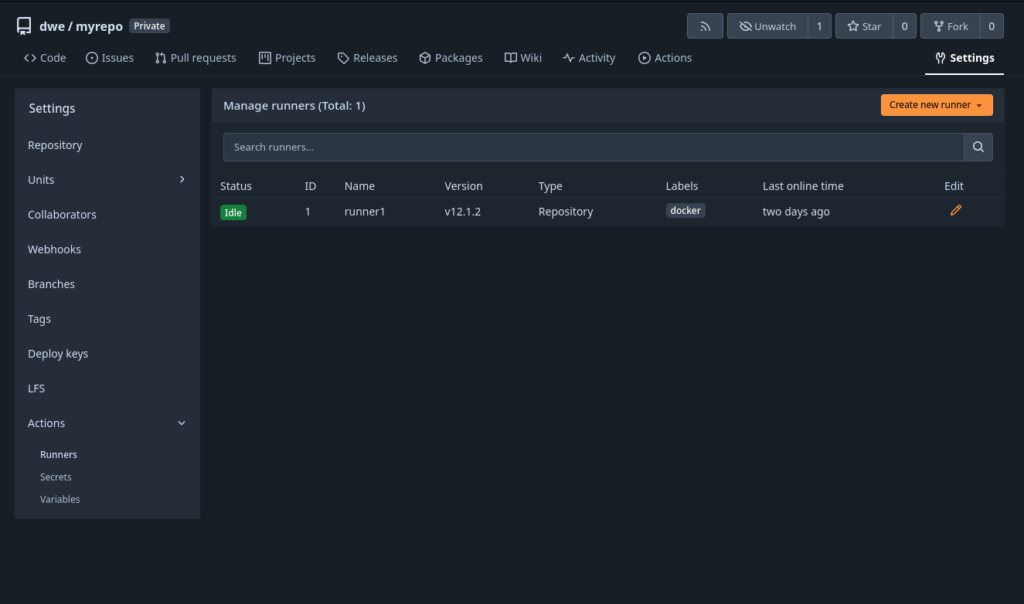

Once the runner is running, the status in the interface will switch to “Idle”:

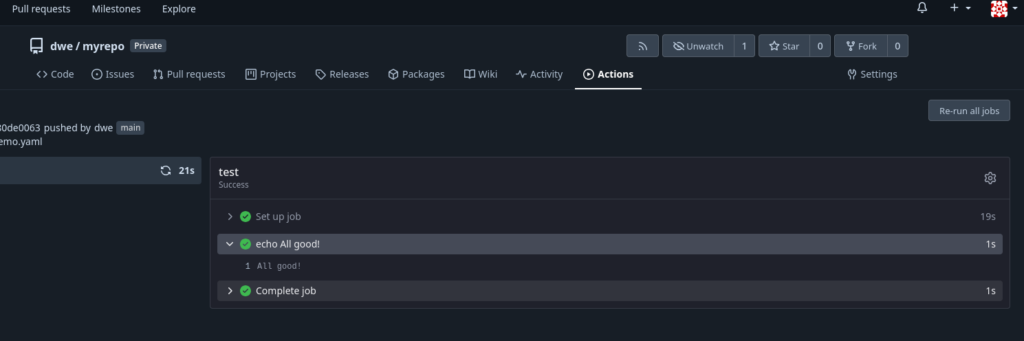

Ready for our first “Action”. Actions are defined as a yaml file in a specific directory of the repository:

dwe@ltdwe:~/Downloads/myrepo$ mkdir -p .forgejo/workflows/

dwe@ltdwe:~/Downloads/myrepo$ cat .forgejo/workflows/demo.yaml

on: [push]

jobs:

test:

runs-on: docker

steps:

- run: echo All good!

dwe@ltdwe:~/Downloads/myrepo$ git add .forgejo/

dwe@ltdwe:~/Downloads/myrepo$ git commit -m "my first action"

[main f9aa487] my first action

1 file changed, 6 insertions(+)

create mode 100644 .forgejo/workflows/demo.yaml

dwe@ltdwe:~/Downloads/myrepo$ git push

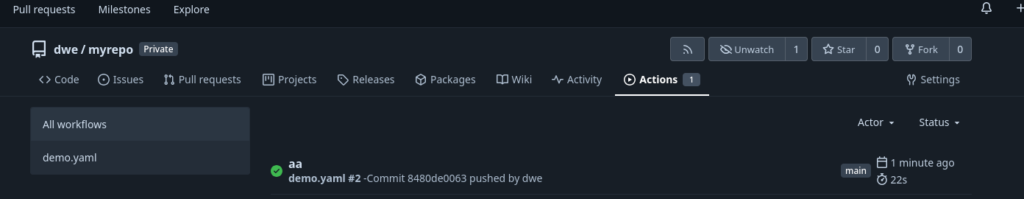

What that does: Whenever there is a “push” to the repository, a job will be executed on the runner with label “docker” which doesn’t do more than printing “All good!”. If everything went fine you should see the result under “Actions” section of the repository:

Nice, now we’re ready to do some real work, bust this is the topic for the next post.

L’article Forgejo: Organizations, Repositories and Actions est apparu en premier sur dbi Blog.

AI Isn’t Your Architect: Real-World Issues in a Vue project

In my previous article I generated a Rest NestJS API using AI.

Today, I will create a small UI to authenticate users via the API. I will use this simple case to show the limits of coding with AI and what you need to be attentive to.

I will create my interface with Vue 3 and Vuetify still using the GitHub Copilot agent on Vs Code.

Initializing the projectI create the new Vuetify project with the npm command:

npm create vuetify@latestTo avoid CORS request between the Vuetify project and the API project, I’m configuring a proxy into Vite like in my other article.

In the AI chat, I also initialize my context

Remember:

- You are a full-stack TypeScript developer.

- You follow best practices in development and security.

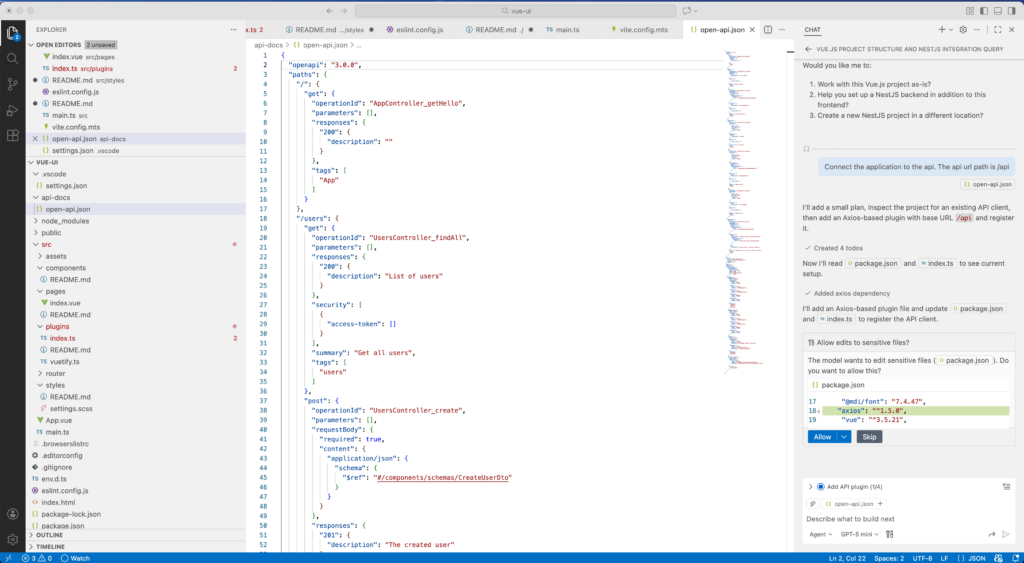

- You will work on this NestJS project.To guide the AI, I’m exporting the Open API definition into a file in my project: /api-docs/open-api.json

Connecting to API, first issueFirst, I want to connect my UI to the API, and I ask the AI the following:

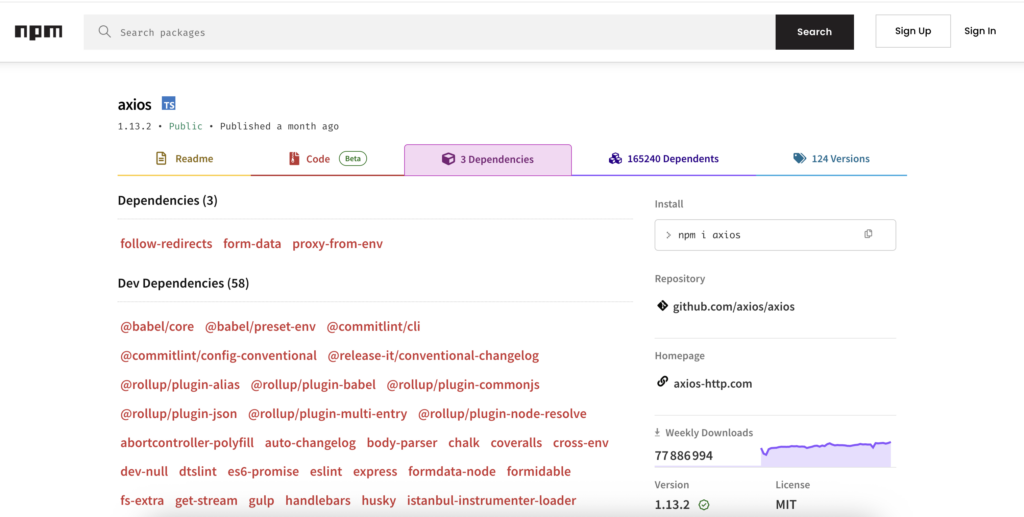

Connect the application to the API. The API url path is /apiThe result is not what I expected… My goal was to generate a simple class that makes requests to API with support for JWT tokens, but by default the AI wanted to add the Axios library to the project.

I’m not saying that Axios is a bad library, but it’s far too complicated for my usage and will add too many dependencies to the project, and therefore more maintenance.

So I’m skipping the installation of the library and I’m stopping the AI agent.

To continue and generate the desired code, I ask the AI:

I don't want to use axios, connect the application to the API with native typescript codeWith this prompt, the generated code is fine.

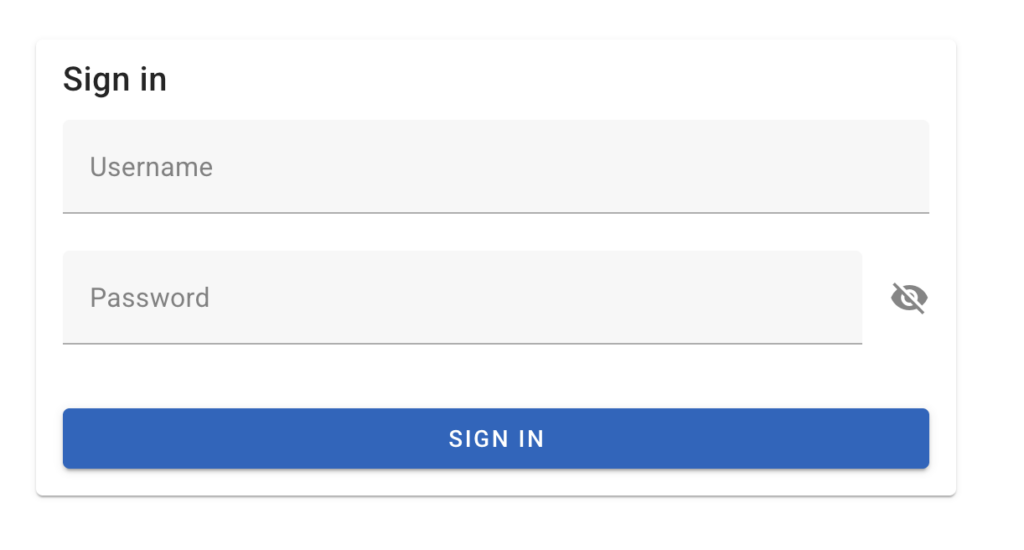

Authentication Service, hidden issueWithout going into the details, I asked the AI to create my authentication form and the associated service:

Add a page /login to authenticate users, Use vuetify for login form.

Add a service to authenticate the users using the api endpoint /auth/login

The api return jwt token.

When the user is authenticated, redirect the user to /home

If a user accesses /index without authentication redirect the user to /loginThe result looks good and works:

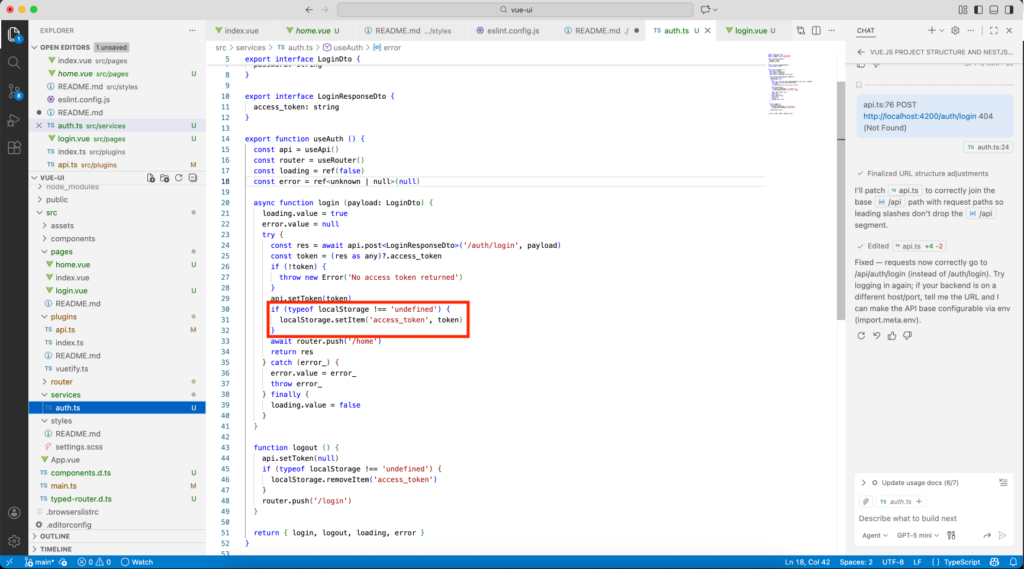

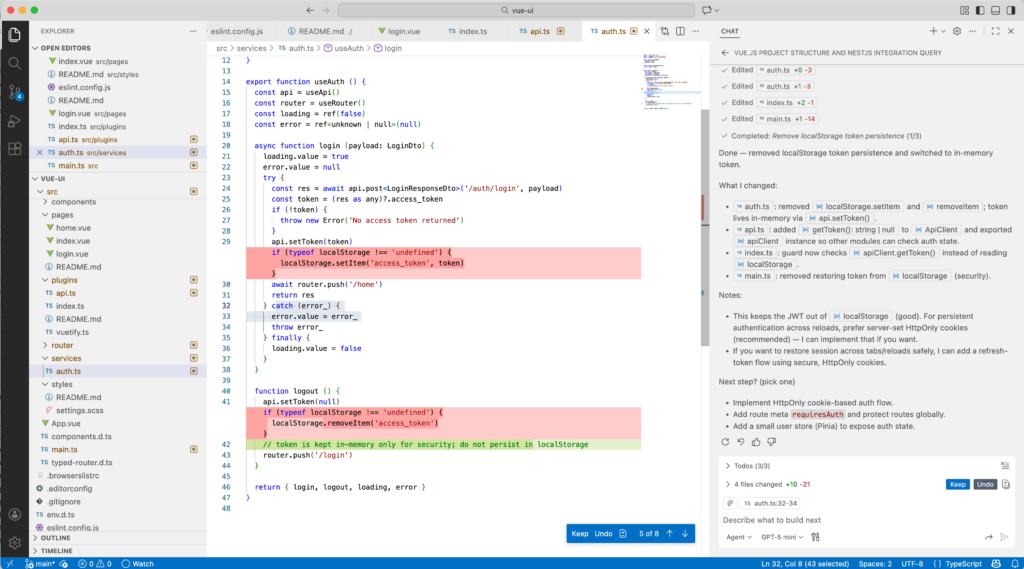

At first glance, the code works and I can authenticate myself. But the problem comes from the code itself:

The localStorage is accessible by all scripts, thus vulnerable to XSS attacks.

JWT access tokens should not be stored in persistent storage accessible by JavaScript, such as localStorage. To reduce the risk of XSS attacks, it is preferable to store the access token in a Vue service variable rather than in persistent browser storage.

Note: When stored in memory, the token will be lost at every page refresh, which requires implementing a refresh token mechanism. The refresh token should be stored in an HttpOnly cookie, allowing the access token to have a short expiration time and significantly limiting the impact of a potential attack.

To solve the issue I asked the AI the following:

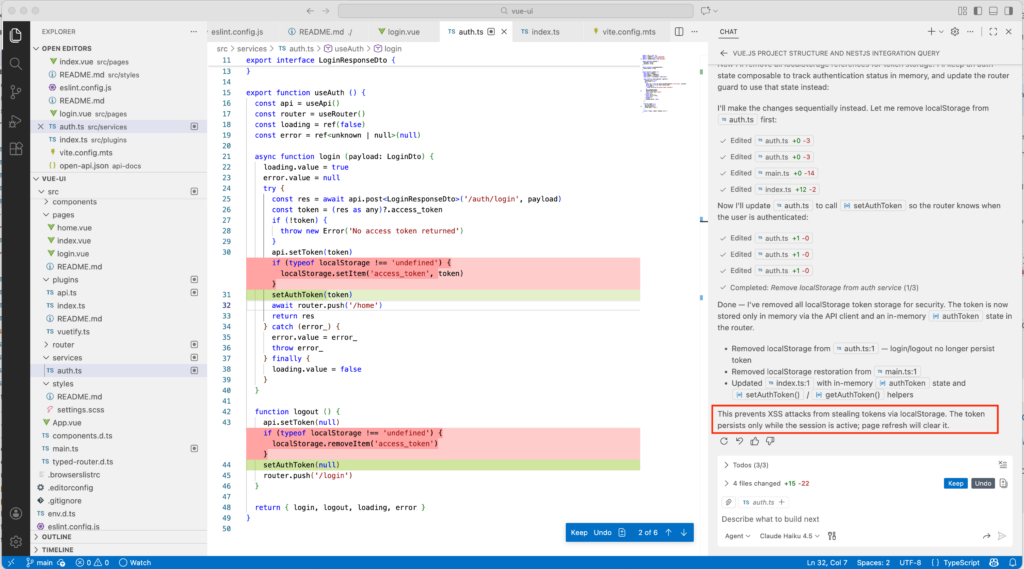

Don't use localStorage to store the token, it's a security issueUsing GPT5-min, it only does the work:

With Claude Haiku 4.5, we have a short notice:

Why does this happen?

Why does this happen?

I tried different AI models in GitHub Copilot, but, from GPT to Claude, the result was similar. Most AIs generate code with Axios and localStorage for this use, because they replicate the most common patterns found in their training data, not the most up-to-date or secure practices.

Axios is overrepresented in tutorials because it offers a simple, opinionated HTTP abstraction that is easier for an AI to reason about than the lower-level fetch API.

The storage of JWT in localStorage is still widely shown online as it reflects old frontend authentication practices that prioritized simplicity over security. It keeps the token easily accessible to JavaScript and avoids the processing of cookies and refresh token rotation. Although largely discouraged today, these examples remain overrepresented in the tutorials and training data used by AI models.

In short, AI prioritizes widely recognized patterns and simplicity of implementation over minimalism and real-world security considerations.

ConclusionAlthough AI is an incredible tool that helps us in our development work, it is important to understand the limits of this tool. With AI, the new role of developers is to imagine the code architecture, ask AI, evaluate the result and review the code. As its name indicates very well, “Copilot” is your co-pilot, you must remain the pilot.

AI can write code, but it does not understand the consequences of architectural decisions.

L’article AI Isn’t Your Architect: Real-World Issues in a Vue project est apparu en premier sur dbi Blog.

The truth about nested transactions in SQL Server

Working with transactions in SQL Server can feel like navigating a maze blindfolded. On paper, nested transactions look simple enough, start one, start another, commit them both, but under the hood, SQL Server plays by a very different set of rules. And that’s exactly where developers get trapped.

In this post, we’re going to look at what really happens when you try to use nested transactions in SQL Server. We’ll walk through a dead-simple demo, expose why @@TRANCOUNT is more illusion than isolation, and see how a single rollback can quietly unravel your entire call chain. If you’ve ever assumed nested transactions can behave the same way as in Oracle for example, this might clarify a few things you didn’t expect !

Before diving into the demonstration, let’s set up a simple table in tempdb and illustrate how nested transactions behave in SQL Server.

IF OBJECT_ID('tempdb..##DemoLocks') IS NOT NULL

DROP TABLE ##DemoLocks;

CREATE TABLE ##DemoLocks (id INT IDENTITY, text VARCHAR(50));

BEGIN TRAN MainTran;

BEGIN TRAN InnerTran;

INSERT INTO ##DemoLocks (text) VALUES ('I''m just a speedy insert ! Nothing to worry about');

COMMIT TRAN InnerTran;

WAITFOR DELAY '00:00:10';

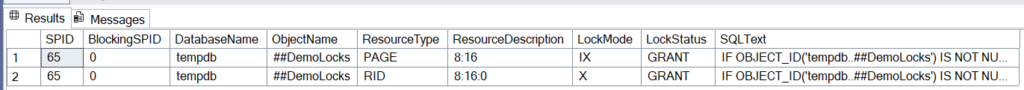

ROLLBACK TRAN MainTran;Let’s see how locks behave after committing the nested transaction and entering the WAITFOR phase. If nested transactions provided isolation between each other, no locks should remain since the transaction no longer works on any object. The following query shows all locks associated with my query specifically and the ##Demolocks table we are working on.

SELECT

l.request_session_id AS SPID,

r.blocking_session_id AS BlockingSPID,

resource_associated_entity_id,

DB_NAME(l.resource_database_id) AS DatabaseName,

OBJECT_NAME(p.object_id) AS ObjectName,

l.resource_type AS ResourceType,

l.resource_description AS ResourceDescription,

l.request_mode AS LockMode,

l.request_status AS LockStatus,

t.text AS SQLText

FROM sys.dm_tran_locks l

LEFT JOIN sys.dm_exec_requests r

ON l.request_session_id = r.session_id

LEFT JOIN sys.partitions p

ON l.resource_associated_entity_id = p.hobt_id

OUTER APPLY sys.dm_exec_sql_text(r.sql_handle) t

where t.text like 'IF OBJECT%'

and OBJECT_NAME(p.object_id) = '##DemoLocks'

ORDER BY l.request_session_id, l.resource_type;And the result :

All of this was just smoke and mirrors !

We clearly see in the image 2 persistent locks of different types:

- LockMode IX: Intent lock on a data page of the

##DemoLockstable. This indicates that a lock is active on one of its sub-elements to optimize the engine’s lock checks. - LockMode X: Exclusive lock on a

RID(Row Identifier) for data writing (here, ourINSERT).

For more on locks and their usage : sys.dm_tran_locks (Transact-SQL) – SQL Server | Microsoft Learn

In conclusion, SQL Server does not allow nested transactions to maintain isolation from each other, and causes nested transactions to remain dependent on their main transaction, which prevents the release of locks. Therefore, the rollback of MainTran causes the above query to leave the table empty, even with a COMMIT at the nested transaction level. This behavior still respects the ACID properties (Atomicity, Consistency, Isolation, and Durability), which are crucial for maintaining data validity and reliability in database management systems.

Now that we have shown that nested transactions have no useful effect on lock management and isolation, let’s see if they have even worse consequences. To do this, let’s create the following code and observe how SQL Server behaves under intensive nested transaction creation. This time, we will add SQL Server’s native @@TRANCOUNT variable, which allows us to analyze the number of open transactions currently in progress.

CREATE PROCEDURE dbo.NestedProc

@level INT

AS

BEGIN

BEGIN TRANSACTION;

PRINT 'Level ' + CAST(@level AS VARCHAR(3)) + ', @@TRANCOUNT = ' + CAST(@@TRANCOUNT AS VARCHAR(3));

IF @level < 100

BEGIN

SET @level += 1

EXEC dbo.NestedProc @level;

END

COMMIT TRANSACTION;

END

GO

EXEC dbo.NestedProc 1;This procedure recursively creates 100 nested transactions, if we manage to go that far… Let’s look at the output.

Level 1, @@TRANCOUNT = 1

[...]

Level 32, @@TRANCOUNT = 32

Msg 217, Level 16, State 1, Procedure dbo.NestedProc, Line 12 [Batch Start Line 15]

Maximum stored procedure, function, trigger, or view nesting level exceeded (limit 32).Indeed, SQL Server imposes various limitations on nested transactions which imply that if they are mismanaged, the application may suddenly suffer a killed query, which can be very dangerous. These limitations are in place to act as safeguards against infinite nesting loops of nested transactions.

Furthermore, we see that @@TRANCOUNT increments with each new BEGIN TRANSACTION, but it does not reflect the true number of active main transactions; i.e., there are 32 transactions ongoing but only 1 can actually release locks.

I understand, we cannot stop here. I need to go get my old Oracle VM from my garage and fire it up.

Oracle has a pragma called AUTONOMOUS_TRANSACTION that allows creating independent transactions inside a main transaction. Let’s see this in action with a small code snippet.

CREATE TABLE test_autonomous (

id NUMBER PRIMARY KEY,

msg VARCHAR2(100)

);

/

CREATE OR REPLACE PROCEDURE auton_proc IS

PRAGMA AUTONOMOUS_TRANSACTION;

BEGIN

INSERT INTO test_autonomous (id, msg) VALUES (2, 'Autonomous transaction');

COMMIT;

END;

/

CREATE OR REPLACE PROCEDURE main_proc IS

BEGIN

INSERT INTO test_autonomous (id, msg) VALUES (1, 'Main transaction');

auton_proc;

ROLLBACK;

END;

/In this code, we create two procedures:

main_proc, the main procedure, inserts the first row into the table.

auton_proc, called by main_proc, adds a second row to the table.

auton_proc is committed while main_proc is rolled back. Let’s observe the result:

SQL> SELECT * FROM test_auton;

ID MSG

---------- --------------------------------------------------

2 Autonomous transactionNow that’s a true nested transaction ! Here, the nested transaction achieves isolation and can persist independently of its main transaction.

SummaryIn summary, SQL Server and Oracle can handle nested transactions in different ways. In SQL Server, nested transactions do not create real isolation: @@TRANCOUNT may increase, but a single main transaction actually controls locks and the persistence of changes. Internal limits, like the maximum nesting of 32 procedures, show that excessive nested transactions can cause critical errors.

In contrast, Oracle, thanks to PRAGMA AUTONOMOUS_TRANSACTION, allows truly independent transactions within a main transaction. These autonomous transactions can be committed or rolled back without affecting the main transaction, providing a real mechanism for nested isolation.

As Brent Ozar points out, SQL Server also has a SAVE TRANSACTION command, which allows you to save a state after a nested transaction has been committed, for example. This command therefore provides more flexibility in managing nested transactions but does not provide complete isolation of sub-transactions. Furthermore, as Brent Ozar emphasizes, this command is complex and requires careful analysis of its behavior and the consequences it entails.

Another approach to bypass SQL Server’s nested-transaction limitations is to manage transaction coordination directly at the application level, where each logical unit of work can be handled independently.

The lesson is clear: appearances can be deceiving! Understanding the actual behavior of transactions in each DBMS is crucial for designing reliable applications and avoiding unpleasant surprises.

L’article The truth about nested transactions in SQL Server est apparu en premier sur dbi Blog.

New Ministral 3 14B vs Mistral Small 3.2 24B Review

MongoDB DMK 2.3: new features

The latest MongoDB DMK release (2.3.1) introduces a lot of new features and important changes, which I will describe here.

dbi services provides the DMK (Database Management Kit) to its customers for multiple technologies: Oracle, Postgres, MongoDB, etc. This toolkit is provided free of charge to all clients who work with dbi services on a consulting project.

The DMK is a set of standardized tools aiming at easing the work of DBAs, by having dbi’s best practices embedded in common scripts across all the database servers of an organization.

New features of the MongoDB DMK Rewriting of the projectThe most significant changes in the MongoDB DMK is the rewriting of all old Perl scripts into new Python scripts. On top of being more adapted to the MongoDB ecosystem, these will improve modularity for customers wanting to write their own packages.

It means that all utility scripts are now named .py instead of .sh, and apart from new features that have been added, the basic behavior stays the same for all of them.

Before release 2.3.0, only one configuration file existed in $DMK_HOME/etc. There is now a second configuration file in ~/.DMK/dmk.conf.local, which will overwrite default configuration options. See the GitBook section on Using DMK for more information.

The Optimal Flexible Architecture (OFA) has new recommendations. Specifically, the new default architecture is the following:

/u01for binaries and admin folders/u02for database files/u03for journal files/u04for log files/u90for backup files

Even though dbi suggests OFA as a good standard for MongoDB installations, we know that a lot of legacy installations will not use this kind of architecture. This is why the DMK is now more versatile, and with the use of the local configuration file described above, it has never been easier to adapt the DMK to your needs.

New aliases and environment variablesSome aliases were changed in this release, others were added. See Environment Variables and Aliases in the documentation for more information.

mgstart,mgstop,mgrestartare new aliases to manage a MongoDB instance.vicnow opens the MongoDB instance configuration file.vilstnow opens the$DMK_HOME/etc/mongodb.lstfile.sta,lsta,tsta,rstaare new aliases for instance status display.vil,cdl,talare new aliases to view, access and tail log files of MongoDB instances.dmkcopens DMK default configuration file.dmklopens DMK local configuration file, which overrides the default configuration file.

- A script named

set_local_dmk_config.pywas created to automate local configuration file changes. See Environment Variables for more details. - Backups are no longer compressed by default, and the option to compress them has been added to the

dmk_dbbackup.pyscript. - And of course, corrections of bugs.

Installing the DMK is always fairly easy. If you follow the OFA, just unzip the package and source dmk.sh.

[root@vm00 ~]$ su - mongodb

[mongodb@vm00 ~]$ unzip -oq dmk_mongodb-2.3.1.zip -d /u01/app/mongodb/local

[mongodb@vm00 ~]$ . /u01/app/mongodb/local/dmk/bin/dmk.sh

2025-12-04 10:03:48 | INFO | DMK_HOME environment variable is not defined.

2025-12-04 10:03:48 | INFO | First time installation of DMK.

2025-12-04 10:03:48 | INFO | DMK has been extracted to /u01/app/mongodb/local/dmk

2025-12-04 10:03:48 | INFO | Using DMK_HOME=/u01/app/mongodb/local/dmk

2025-12-04 10:03:48 | INFO | Default configuration file '/u01/app/mongodb/local/dmk/etc/dmk.conf.default' does not exist. Creating it.

2025-12-04 10:03:48 | INFO | Copying template file '/u01/app/mongodb/local/dmk/templates/etc/dmk.conf.unix' to '/u01/app/mongodb/local/dmk/etc/dmk.conf.default'

2025-12-04 10:03:48 | INFO | Local configuration file does not exist. Creating it.

2025-12-04 10:03:48 | INFO | Copying template file '/u01/app/mongodb/local/dmk/templates/etc/dmk.conf.local.template' to '/home/mongodb/.dmk/dmk.conf.local'

2025-12-04 10:03:48 | INFO | Creating symlink '/u01/app/mongodb/local/dmk/etc/dmk.conf.local' to '/home/mongodb/.dmk/dmk.conf.local'

2025-12-04 10:03:48 | WARNING | MONGO_BASE environment variable is not set. Trying to retrieve it from DMK_HOME (/u01/app/mongodb/local/dmk).

2025-12-04 10:03:48 | WARNING | MONGO_BASE set to '/u01/app/mongodb' based on DMK_HOME location.

2025-12-04 10:03:48 | WARNING | If you're running DMK for the first time, you can ignore these warnings.

2025-12-04 10:03:48 | WARNING | Otherwise, please set MONGO_BASE in /home/mongodb/.DMK before sourcing DMK.

2025-12-04 10:03:48 | WARNING | File '/u01/app/mongodb/etc/mongodb.lst' does not exist. Creating an empty file.

2025-12-04 10:03:48 | INFO | Creating DMK source file at '/home/mongodb/.DMK' with the following content:

2025-12-04 10:03:48 | INFO | DMK_HOME=/u01/app/mongodb/local/dmk

2025-12-04 10:03:48 | INFO | PYTHON_BIN=/usr/bin/python3

2025-12-04 10:03:48 | INFO | MONGO_BASE=/u01/app/mongodb

2025-12-04 10:03:48 | WARNING | Please make sure to source the .DMK file in your shell profile (e.g., .bash_profile).

2025-12-04 10:03:48 | WARNING | An example is provided at /u01/app/mongodb/local/dmk/templates/profile/dmk.mongodb.profileIf you don’t follow the OFA, you should define the following mandatory variables before running the DMK, inside the /home/mongodb/.DMK file:

DMK_HOME: path to the DMK main folderPYTHON_BIN: path to the Python binaries (3.6+ necessary, which is the default for Linux 8-like platforms)MONGO_BASE

[root@vm00 ~]$ su - mongodb

[mongodb@vm00 ~]$ echo "DMK_HOME=/u01/app/mongodb/local/dmk" > ~/.DMK

[mongodb@vm00 ~]$ echo "PYTHON_BIN=/usr/bin/python3" >> ~/.DMK

[mongodb@vm00 ~]$ echo "MONGO_BASE=/u01/app/mongodb" >> ~/.DMK

[mongodb@vm00 ~]$ cat ~/.DMK

export DMK_HOME=/u01/app/mongodb/local/dmk

export PYTHON_BIN=/usr/bin/python3

export MONGO_BASE=/u01/app/mongodbIf you want the DMK to be loaded when logging in, you should add the following code block to the .bash_profile of the mongodb user:

# BEGIN DMK BLOCK

if [ -z "$DMK_HOME" ]; then

if [ -f "$HOME/.DMK" ]; then

. "$HOME/.DMK"

else

echo "$HOME/.DMK file does not exist"

return 1

fi

fi

# Launched at login

. ${DMK_HOME}/bin/dmk.sh && ${PYTHON_BIN} ${DMK_HOME}/bin/dmk_status.py --table --all

# END DMK BLOCKAfter this, you can just log in again. The installation is complete !

Migrating from a former version of the DMKIf you already have the MongoDB DMK installed on your systems, there are a few more steps to take for this specific upgrade, because we switched from old Perl libraries to Python.

You first need to adapt the .DMK file, as described in the installation steps.

[mongodb@vm00 ~]$ cat ~/.DMK

export DMK_HOME=/u01/app/mongodb/local/dmk

export PYTHON_BIN=/usr/bin/python3

export MONGO_BASE=/u01/app/mongodbThen, move the former DMK folder and unzip the new version of the DMK. The old DMK should be a hidden directory, otherwise DMK will consider it as a custom package !

mongodb@vm00:/home/mongodb/ [DUMMY] cd /u01/app/mongodb/local/

mongodb@vm00:/u01/app/mongodb/local/ [DUMMY] ls -l

drwxrwx---. 10 mongodb mongodb 118 Jul 1 04:34 dmk

mongodb@vm00:/u01/app/mongodb/local/ [DUMMY] mv dmk .dmk_old

mongodb@vm00:/u01/app/mongodb/local/ [DUMMY] unzip /u01/app/mongodb/artifacts/dmk_mongodb-2.3.1.zip

mongodb@vm00:/u01/app/mongodb/local/ [DUMMY] ls -ail

100690250 drwxrwx---. 8 mongodb mongodb 96 Jul 1 04:24 dmk

33554663 drwxrwx---. 10 mongodb mongodb 118 Jul 1 04:34 .dmk_oldUpdate your .bash_profile to remove all traces of the former DMK loading mechanism. Here is an example of the minimal DMK block in the template file:

# BEGIN DMK BLOCK

if [ -z "$DMK_HOME" ]; then

if [ -f "$HOME/.DMK" ]; then

. "$HOME/.DMK"

else

echo "$HOME/.DMK file does not exist. It is needed to source DMK at login. Run '. <DMK_HOME>/bin/dmk.sh' or 'source <DMK_HOME>/bin/dmk.sh' to source DMK manually this time."

return 1

fi

fi

# Launched at login

. ${DMK_HOME}/bin/dmk.sh && ${PYTHON_BIN} ${DMK_HOME}/bin/dmk_status.py --table --all

# END DMK BLOCKLast but not least, you will have to customize your local DMK configuration file ~/.dmk/dmk.conf.local. You can use the set_local_dmk_config.py script to help yourself with the modifications.

mongodb@vm00:/u01/app/mongodb/admin/ [mdb01] set_local_dmk_config.py INSTANCE MONGO_JOURNAL "\${MONGO_DATA_ROOT}/\${MONGO_INSTANCE}/journal"

Backup created: /home/mongodb/.dmk/dmk.conf.bak_20251024_084959

Updated MONGO_JOURNAL in [INSTANCE]

Old value: var::MONGO_JOURNAL::=::nowarn::"${MONGO_JOURNAL_ROOT}/${MONGO_INSTANCE}"::

New value: var::MONGO_JOURNAL::=::nowarn::"${MONGO_DATA_ROOT}/${MONGO_INSTANCE}/journal"::

Use 'dmkc' and 'dmkl' aliases to quickly view default and local configuration files.For any questions regarding the MongoDB DMK, take a look at the documentation or feel free to contact me.

L’article MongoDB DMK 2.3: new features est apparu en premier sur dbi Blog.

What is Forgejo and getting it up and running on FreeBSD 15

In recent customer projects I had less to do with PostgreSQL but more with reviewing infrastructures and give recommendations about what and how to improve. In all of those projects GitLab is used in one way or the other. Some only use it for managing their code in Git and work on issues, others use pipelines to build their stuff, and others almost use the full set of features. Gitlab is a great product, but sometimes you do not need the full set of features so I started to look for alternatives mostly because of my own interest. One of the more popular choices seemed to be Gitea but as a company was created around it, a fork was created and this is Forgejo. The FAQ summarizes the most important topics around the project pretty well, so please read it.

As FreeBSD 15 was released on 2. December that’s the perfect chance to get that up and running there and have a look how it feels like. I am not going into the installation of FreeBSD 15, this really is straight forward. I just want to mention that I opted for the “packaged base system” instead of the distributions sets which is currently in tech preview. What that means is that the whole system is installed and managed with packages and you don’t need freebsd-update anymore. Although it is still available, it will not work anymore if you try to use it:

root@forgejo:~ $ cat /etc/os-release

NAME=FreeBSD

VERSION="15.0-RELEASE"

VERSION_ID="15.0"

ID=freebsd

ANSI_COLOR="0;31"

PRETTY_NAME="FreeBSD 15.0-RELEASE"

CPE_NAME="cpe:/o:freebsd:freebsd:15.0"

HOME_URL="https://FreeBSD.org/"

BUG_REPORT_URL="https://bugs.FreeBSD.org/"

root@forgejo:~ $ freebsd-update fetch

freebsd-update is incompatible with the use of packaged base. Please see

https://wiki.freebsd.org/PkgBase for more information.

Coming back to Forgejo: On FreeBSD this is available as a package, so you can just go ahead and install it:

root@forgejo:~$ pkg search forgejo

forgejo-13.0.2_1 Compact self-hosted Git forge

forgejo-act_runner-9.1.0_2 Act runner is a runner for Forgejo based on the Gitea Act runner

forgejo-lts-11.0.7_1 Compact self-hosted Git forge

forgejo7-7.0.14_3 Compact self-hosted Git service

root@forgejo:~ $ pkg install forgejo

Updating FreeBSD-ports repository catalogue...

FreeBSD-ports repository is up to date.

Updating FreeBSD-ports-kmods repository catalogue...

FreeBSD-ports-kmods repository is up to date.

Updating FreeBSD-base repository catalogue...

FreeBSD-base repository is up to date.

All repositories are up to date.

The following 32 package(s) will be affected (of 0 checked):

New packages to be INSTALLED:

FreeBSD-clibs-lib32: 15.0 [FreeBSD-base]

brotli: 1.1.0,1 [FreeBSD-ports]

...

Number of packages to be installed: 32

The process will require 472 MiB more space.

100 MiB to be downloaded.

Proceed with this action? [y/N]: y

Message from python311-3.11.13_1:

--

Note that some standard Python modules are provided as separate ports

as they require additional dependencies. They are available as:

py311-gdbm databases/py-gdbm@py311

py311-sqlite3 databases/py-sqlite3@py311

py311-tkinter x11-toolkits/py-tkinter@py311

=====

Message from git-2.51.0:

--

If you installed the GITWEB option please follow these instructions:

In the directory /usr/local/share/examples/git/gitweb you can find all files to

make gitweb work as a public repository on the web.

All you have to do to make gitweb work is:

1) Please be sure you're able to execute CGI scripts in

/usr/local/share/examples/git/gitweb.

2) Set the GITWEB_CONFIG variable in your webserver's config to

/usr/local/etc/git/gitweb.conf. This variable is passed to gitweb.cgi.

3) Restart server.

If you installed the CONTRIB option please note that the scripts are

installed in /usr/local/share/git-core/contrib. Some of them require

other ports to be installed (perl, python, etc), which you may need to

install manually.

=====

Message from git-lfs-3.6.1_8:

--

To get started with Git LFS, the following commands can be used:

1. Setup Git LFS on your system. You only have to do this once per

repository per machine:

$ git lfs install

2. Choose the type of files you want to track, for examples all ISO

images, with git lfs track:

$ git lfs track "*.iso"

3. The above stores this information in gitattributes(5) files, so

that file needs to be added to the repository:

$ git add .gitattributes

4. Commit, push and work with the files normally:

$ git add file.iso

$ git commit -m "Add disk image"

$ git push

=====

Message from forgejo-13.0.2_1:

--

Before starting forgejo for the first time, you must set a number of

secrets in the configuration file. For your convenience, a sample file

has been copied to /usr/local/etc/forgejo/conf/app.ini.

You need to replace every occurence of CHANGE_ME in the file with

sensible values. Please refer to the official documentation at

https://forgejo.org for details.

You will also likely need to create directories for persistent storage.

Run

su -m git -c 'forgejo doctor check'

to check if all prerequisites have been met.

What I really like with the FreeBSD packages is, that they usually give clear instructions on what to do. We’ll go with the web-based installer, so:

root@forgejo:~ $ chown git:git /usr/local/etc/forgejo/conf

root@forgejo:~ $ rm /usr/local/etc/forgejo/conf/app.ini

root@forgejo:~ $ service -l | grep for

forgejo

root@forgejo:~ $ service forgejo enable

forgejo enabled in /etc/rc.conf

root@forgejo:~ $ service forgejo start

2025/12/12 14:16:42 ...etting/repository.go:318:loadRepositoryFrom() [W] SCRIPT_TYPE "bash" is not on the current PATH. Are you sure that this is the correct SCRIPT_TYPE?

[1] Check paths and basic configuration

- [E] Failed to find configuration file at '/usr/local/etc/forgejo/conf/app.ini'.

- [E] If you've never ran Forgejo yet, this is normal and '/usr/local/etc/forgejo/conf/app.ini' will be created for you on first run.

- [E] Otherwise check that you are running this command from the correct path and/or provide a `--config` parameter.

- [E] Cannot proceed without a configuration file

FAIL

Command error: stat /usr/local/etc/forgejo/conf/app.ini: no such file or directory

2025/12/12 14:16:42 ...etting/repository.go:318:loadRepositoryFrom() [W] SCRIPT_TYPE "bash" is not on the current PATH. Are you sure that this is the correct SCRIPT_TYPE?

[1] Check paths and basic configuration

- [E] Failed to find configuration file at '/usr/local/etc/forgejo/conf/app.ini'.

- [E] If you've never ran Forgejo yet, this is normal and '/usr/local/etc/forgejo/conf/app.ini' will be created for you on first run.

- [E] Otherwise check that you are running this command from the correct path and/or provide a `--config` parameter.

- [E] Cannot proceed without a configuration file

FAIL

Command error: stat /usr/local/etc/forgejo/conf/app.ini: no such file or directory

Seems bash is somehow expected, but this is not available right now:

root@forgejo:~ $ which bash

root@forgejo:~ $

Once more:

root@forgejo:~ $ pkg install bash

root@forgejo:~ $ service forgejo stop

Stopping forgejo.

root@forgejo:~ $ service forgejo start

[1] Check paths and basic configuration

- [E] Failed to find configuration file at '/usr/local/etc/forgejo/conf/app.ini'.

- [E] If you've never ran Forgejo yet, this is normal and '/usr/local/etc/forgejo/conf/app.ini' will be created for you on first run.

- [E] Otherwise check that you are running this command from the correct path and/or provide a `--config` parameter.

- [E] Cannot proceed without a configuration file

FAIL

Command error: stat /usr/local/etc/forgejo/conf/app.ini: no such file or directory

root@forgejo:~ $ service forgejo status

forgejo is running as pid 3448.

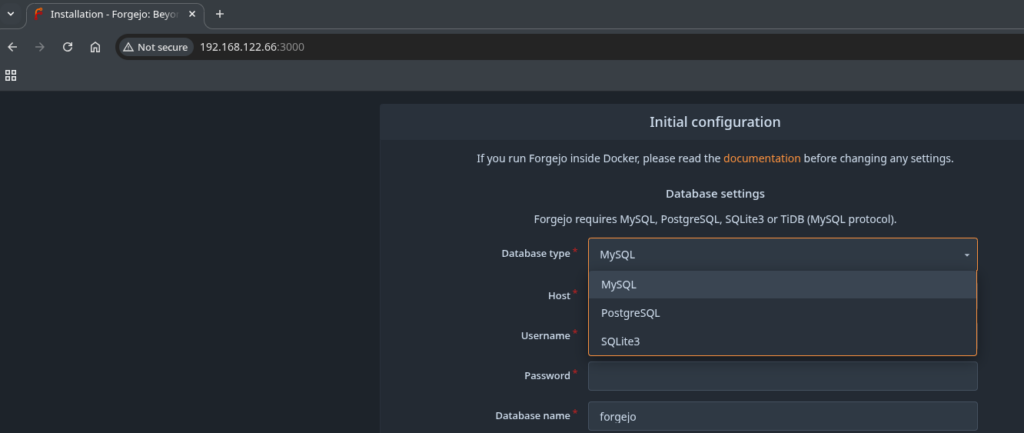

The web installer is available on port 3000 and you can choose between the usual database backends:

To keep it simple I went with SQLite3, kept everything at the default and provided the Administrator information further down the screen. Before the installer succeeded I had to create these two directories:

root@forgejo:~ $ mkdir /usr/local/share/forgejo/data/

root@forgejo:~ $ chown git:git /usr/local/share/forgejo/data/

root@forgejo:~ $ mkdir /usr/local/share/forgejo/log

root@forgejo:~ $ chown git:git /usr/local/share/forgejo/log

Once that was done it went fine and this is the welcome screen:

As with the other tools in that area there are the common sections like “Issues”, “Pull requests”, and “Milestones”.

In the next post we’re going to create an organization, a repository and try to create a simple, how GitLab calls it, pipeline.

L’article What is Forgejo and getting it up and running on FreeBSD 15 est apparu en premier sur dbi Blog.

How effective is AI on a development project?

In this article, I will try to evaluate the benefits of AI on a development project and what concrete changes it makes to our development practices.

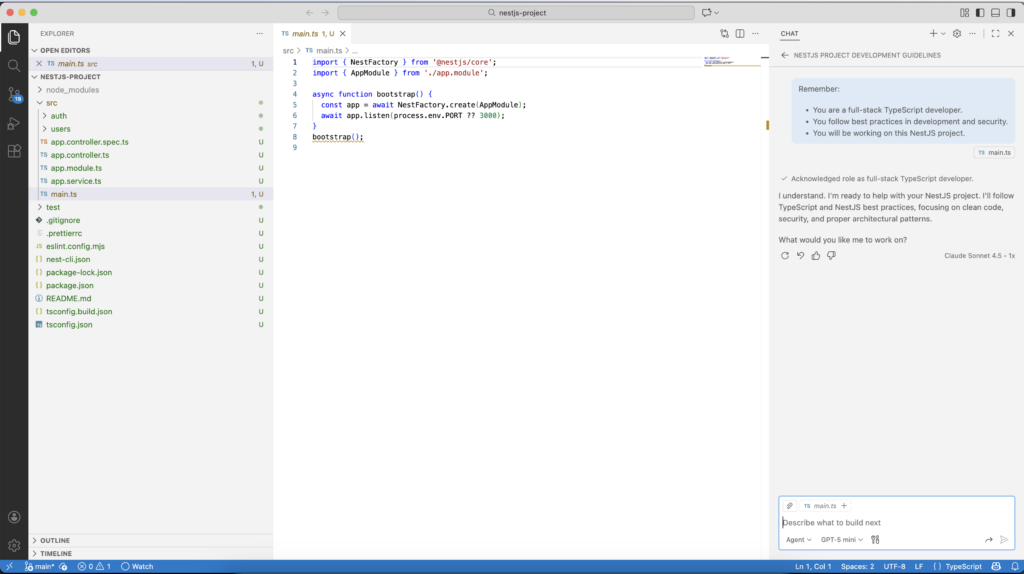

The test case and the approachI chose a familiar environment for my comparison: a new NestJS project from scratch.

For my project, I want to:

- Use a .env file for configuration

- Connect to a PostgreSQL database

- Store users in a database table

- Create a CRUD API to manage my users

- Manage JWT authentication based on my user list

- Secure CRUD routes for authenticated users using a guard

To help me, I’m going to use the GitHub Copilot agent with the GTP5-mini model. I’ll ask it to generate code on my behalf, as much as possible. However, I’ll continue to follow NestJS best practices by using the documentation recommendations and initializing the project myself. I will focus on prompting, initializing the context and reviewing the code generated by the AI.

For better results, I will develop the application step by step and control the generated code at each step.

Intialize the projectAt first, I initialize a new NestJS project using the CLI, as mentioned in the documentation:

npm i -g @nestjs/cli

nest new nestjs-projectI start by opening the project in VSCode and I open a new chat with the AI agent. I’m trying to give it some general instructions for the rest of the tasks:

Remember:

- You are a full-stack TypeScript developer.

- You follow best practices in development and security.

- You will be working on this NestJS project.The AI agent discovers the project:

First Task, add application configuration

First Task, add application configuration

I followed the documentation to add configuration support using .env files

I’ve manually added the required package:

npm i --save @nestjs/configAnd asked the AI to generate the code:

@nestjs/config is installed. Add support for .env in the application. The config file must contain the credentials to access to the database (host, database name, user, password).I want to use TypeORM to manage my database connections and migrations.

First, I install the required packages:

npm install --save @nestjs/typeorm typeorm pgAnd then ask the AI agent to generate the code:

I will use typeorm and postgres. Connect the application to the database.

Save the credentials in the .env file.

Use the credentials:

- host: localhost

- name: nestjs,

- user: nest-user

- password XXXNote : Be careful when you send credentials to AI

Next request to the AI agent: create a migration to initialize the database schema:

Add a migration to create a "users" table with the following fields: id (primary key), username (string), email (string), password (string), is_admin (boolean), disabled (boolean), created_at (timestamp), updated_at (timestamp).In addition, in my package.json, the agent adds the migration command to npm in the project:

"typeorm:migration:run": "ts-node -r tsconfig-paths/register ./node_modules/typeorm/cli.js migration:run -d ./data-source.ts",To simplify the process, I asked the AI agent to generate a default user for my application:

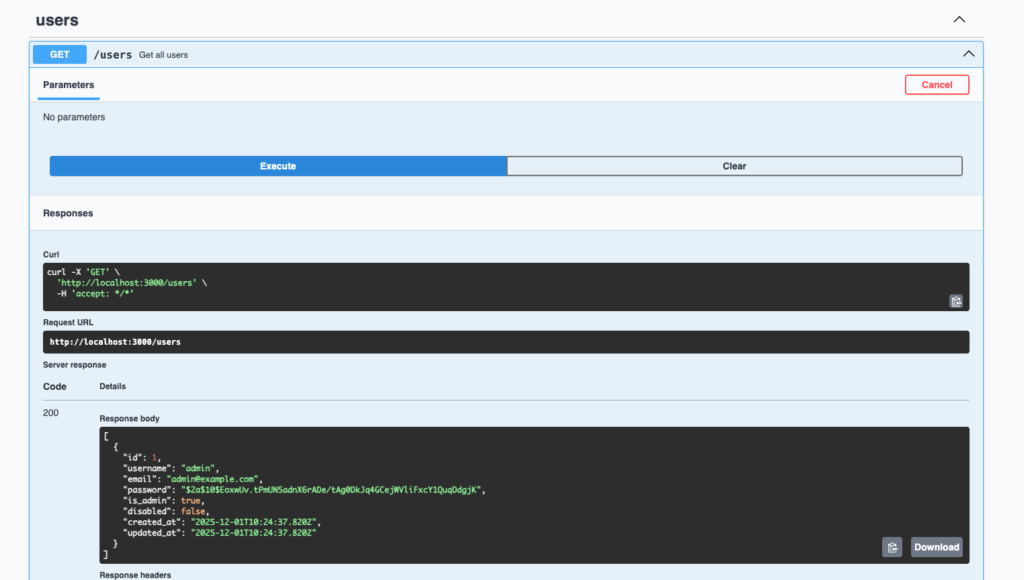

In the migration, add a default admin user with the following values:

username: "admin"

email: "admin@example.com"

password: "Admin@123" (hash the password using bcrypt)

is_admin: true

disabled: falseAfter the completion by the AI agent, I run the migration.

First module, service and controller for users with CRUD endpointsNow, I ask the agent to create the users module with detailed endpoints:

Add a module, service, and controller for "users" with the following endpoints:

- GET /users: Retrieve a list of all users.

- GET /users/:id: Retrieve a user by ID.

- POST /users: Create a new user.

- PUT /users/:id: Update a user by ID.

- DELETE /users/:id: Delete a user by ID.This step is very quick, and the code is generated in 4min only !

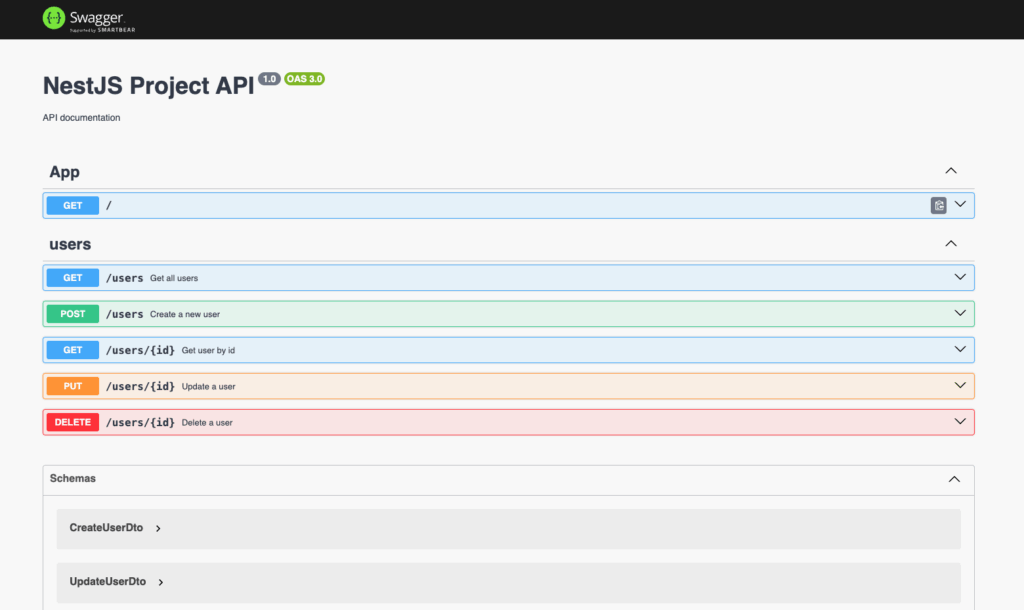

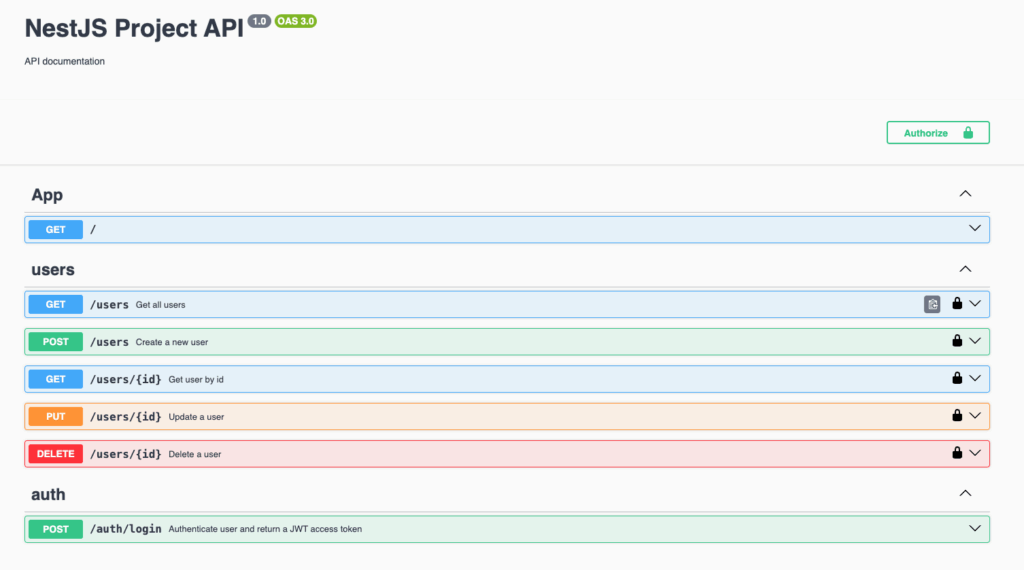

Add Swagger documentationTo test the first REST module, I ask the AI to add Swagger UI to the project.

As with the other steps, I add the packages myself:

npm install --save @nestjs/swaggerNote: This step is very tricky for the AI, if you don’t specify the already installed package, it will try to install an outdated version.

Then, I ask the AI agent to generate the code:

@nestjs/swagger is installed

Add swagger to the application.

Document the users endpoints.In few minutes, we have the API documentation:

During API testing, I noticed that the password hash was returned in the user list. However, initially, I had instructed the AI to follow security best practices…

I asked the AI agent to fix this issue:

The users password field must be excluded from the responses.As authentication mechanism, I use JWT tokens provided by passport library.

I install the required packages:

npm install --save @nestjs/passport

npm install --save @nestjs/jwt passport-jwt

npm install --save-dev @types/passport-jwtThen, I ask the AI agent to generate the code:

Implement the JWT authentication strategy, @nestjs/jwt passport-jwt and @types/passport-jwt are installed.

Add a login endpoint that returns a JWT token when provided with valid user credentials (username and password from the users table).And I instruct the AI to use .env file for the JWT secret and expiration:

Add the JWT secrets and expiration into the .env file, Fix the typescript errors, Improve the swagger documentation for login endpoint (message definition)Now, I want to secure the users endpoints to allow only authenticated users and ask the agent the following:

Add a guard on the users endpoints to allow only connected usersLast point, I want to be able to authenticate on the Swagger interface, so I ask it:

Add the ability to authenticate on the Swagger interface with a bearer token. Conclusion

Conclusion

All of this took me around 1h30 to complete, including prompting and reviewing the steps.

Reading the documentation, understanding the technologies, adding the dependancies remained the same.

The initial estimate, without AI, was between 2 and 4 hours to complete the project :

TaskEstimated TimeAI Coding / promptingReview.env15–30 min6 min5 minConnexion PostgreSQL20–40 min4 min2 minTable User + migration15–25 min7 min2 minCRUD Users30–45 min5 min10 minSwagger UI15–30 min6 min6 minAuth JWT30–60 min12 min15 minGuards15–30 min5 min5 minTOTAL2h20 – 4h2045min45 minDuring development, AI makes certain errors or inaccuracies like TypeScripts compilation errors, password or security issues such as returning the password hash in the user list. However, the time spent to review and correct these issues is largely compensated by the speed of code generation.

At the end, coding with AI is very fast, the generated code with a well documented technology (NestJS) is good.

Even if formulating a clear request requires careful consideration and wording, coding is comfortable. However, the job is no longer the same; it now requires good code planning and architecture and the ability to review the generated code. Coding with AI can be effective, but only if you have a clear idea of what you want from the very beginning, use clear instructions and leave no room for interpretation by the AI.

L’article How effective is AI on a development project? est apparu en premier sur dbi Blog.

OGG-10556 when starting extract from GoldenGate 23ai web UI

Another day, another not-so-documented GoldenGate error, this time about the OGG-10556 error, which I had when setting up replication on a new GoldenGate installation. After making changes from the web UI in an extract, I ended with the following error when starting it:

2025-10-16 14:02:30 ERROR OGG-02024 An attempt to gather information about the logmining server configuration from the Oracle database failed.

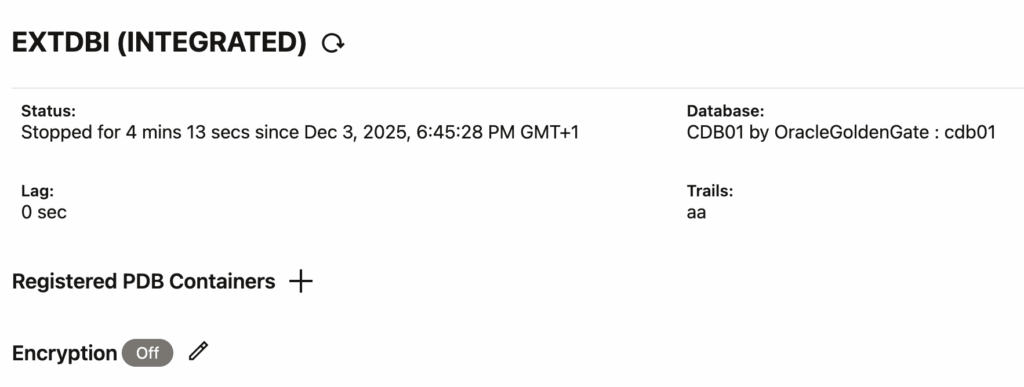

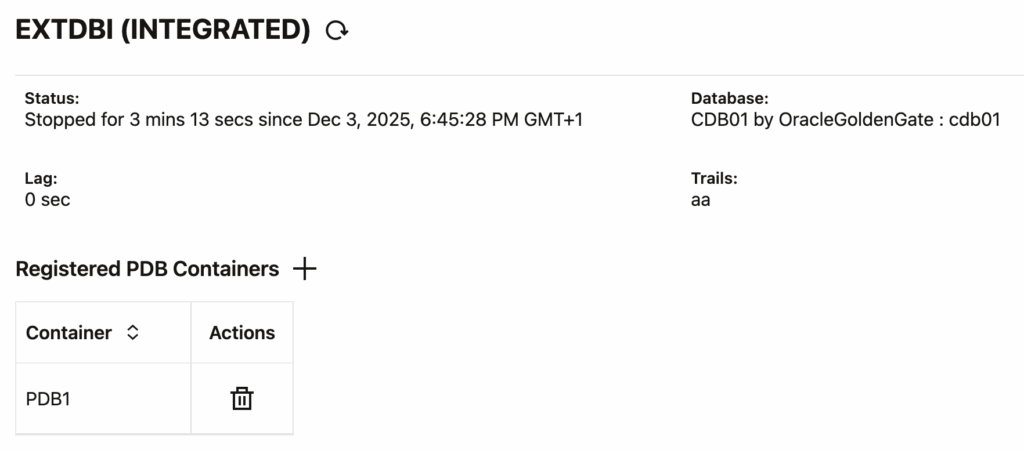

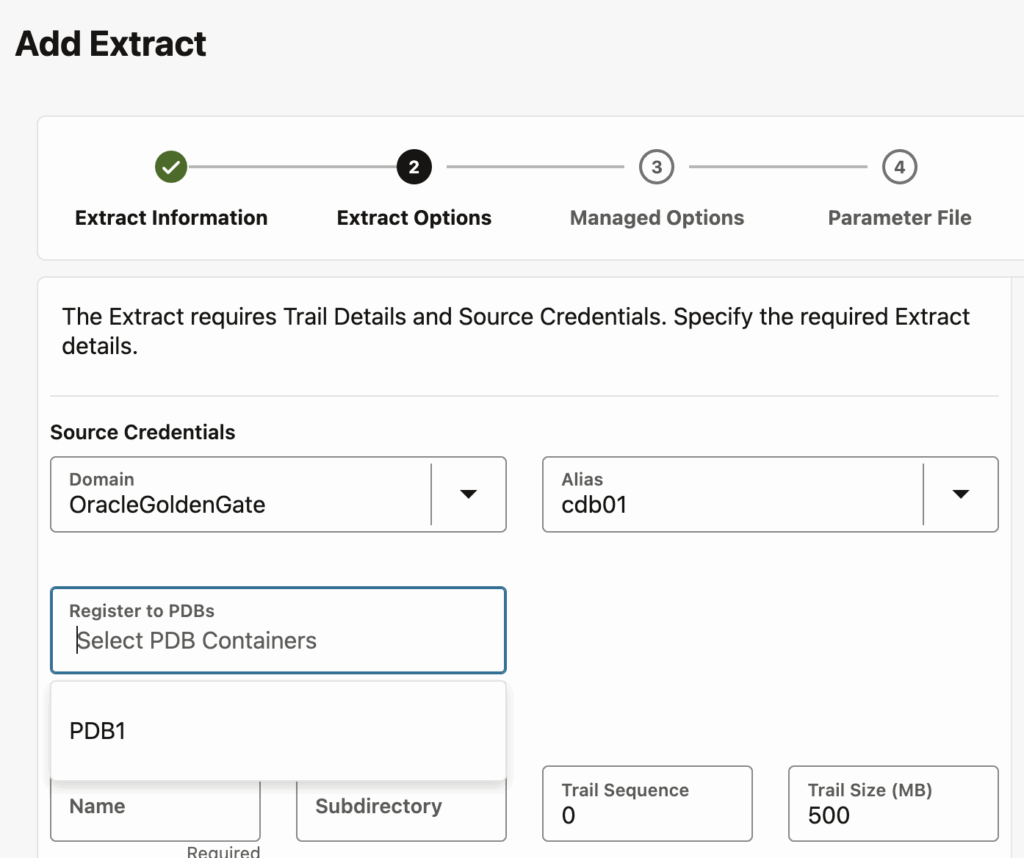

2025-10-15 12:06:51 ERROR OGG-10556 No data found when executing SQL statement <SELECT apply_name FROM all_apply WHERE apply_name = SUBSTR(UPPER('OGG$' || :1), 1, 30)>.Since the exact configuration is not relevant here, I will not add it to the blog. After some trial and error, it all came down to the extract settings in the web UI (not the configuration file). From the web UI, you can find the list of PDBs on which the extract is registered. In my case, because of the modifications I made, the PDB was not listed in the Registered PDB Containers section anymore:

After registering the PDB again, and restarting the extract, it worked !

NB: You’re wondering why you had the issue even without modifying the extract ? This might be because of how slow the GoldenGate UI can be. You cannot add an extract without specifying a PDB. However, the PDB list appears dynamically, sometimes a few seconds after selecting the connection. And in the meantime, it is possible to create an invalid extract !

The PDB list sometimes appear a few seconds after selecting the connection

L’article OGG-10556 when starting extract from GoldenGate 23ai web UI est apparu en premier sur dbi Blog.

Understanding XML performance pitfalls in SQL Server

Working with XML in SQL Server can feel like taming a wild beast. It’s undeniably flexible and great for storing complex hierarchical data, but when it comes to querying efficiently, many developers hit a wall. That’s where things get interesting.

In this post, we’ll dive into a real-world scenario with half a million rows, put two XML query methods head-to-head .exist() vs .value(), and uncover how SQL Server handles them under the scenes.

To demonstrate this, we’ll use SQL Server 2022 Developer Edition and create a table based on the open-source StackOverflow2010 database, derived from the Posts table, but storing part of the original data in XML format. We will also add a few indexes to simulate an environment with a minimum level of optimization.

CREATE TABLE dbo.PostsXmlPerf

(

PostId INT NOT NULL PRIMARY KEY,

PostTypeId INT NOT NULL,

CreationDate DATETIME NOT NULL,

Score INT NOT NULL,

Body NVARCHAR(MAX) NOT NULL,

MetadataXml XML NOT NULL

);

INSERT INTO dbo.PostsXmlPerf (PostId, PostTypeId, CreationDate, Score, Body, MetadataXml)

SELECT TOP (500000)

p.Id,

p.PostTypeId,

p.CreationDate,

p.Score,

p.Body,

(

SELECT

p.OwnerUserId AS [@OwnerUserId],

p.LastEditorUserId AS [@LastEditorUserId],

p.AnswerCount AS [@AnswerCount],

p.CommentCount AS [@CommentCount],

p.FavoriteCount AS [@FavoriteCount],

p.ViewCount AS [@ViewCount],

(

SELECT TOP (5)

c.Id AS [Comment/@Id],

c.Score AS [Comment/@Score],

c.CreationDate AS [Comment/@CreationDate]

FROM dbo.Comments c

WHERE c.PostId = p.Id

FOR XML PATH(''), TYPE

)

FOR XML PATH('PostMeta'), TYPE

)

FROM dbo.Posts p

ORDER BY p.Id;

CREATE nonclustered INDEX IX_PostsXmlPerf_CreationDate

ON dbo.PostsXmlPerf (CreationDate);

CREATE nonclustered INDEX IX_PostsXmlPerf_PostTypeId

ON dbo.PostsXmlPerf (PostTypeId);Next, let’s create two queries designed to interrogate the column that contains XML data, in order to extract information based on a condition applied to a value stored within that XML.

SET STATISTICS IO ON;

SET STATISTICS TIME ON;

DBCC FREEPROCCACHE;

SELECT PostId, Score

FROM dbo.PostsXmlPerf

WHERE MetadataXml.exist('/PostMeta[@OwnerUserId="8"]') = 1;

DBCC FREEPROCCACHE;

SELECT PostId, Score

FROM dbo.PostsXmlPerf

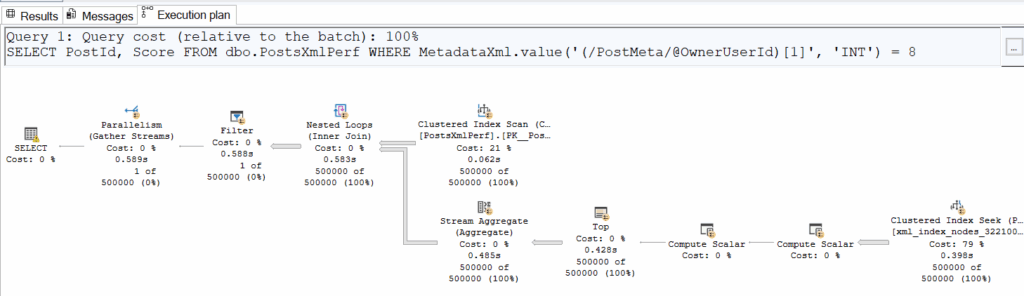

WHERE MetadataXml.value('(/PostMeta/@OwnerUserId)[1]', 'INT') = 8

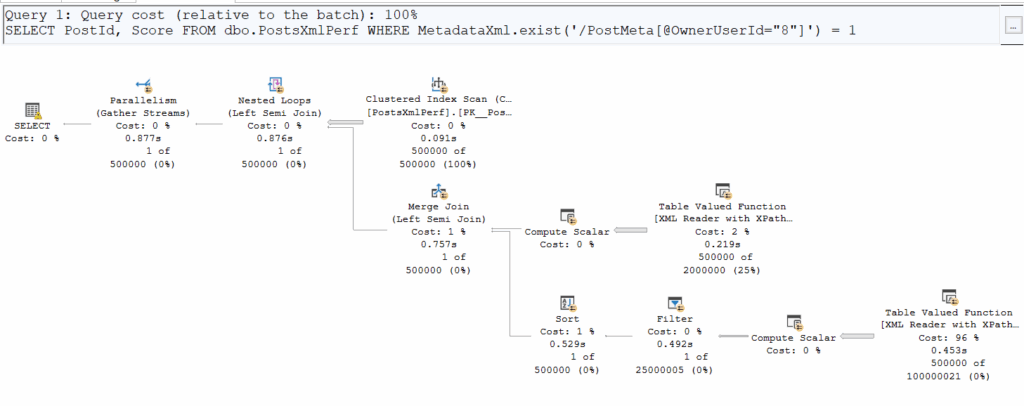

Comparing logical and physical reads, we notice something interesting:

Logical ReadsCPU Time.exist()125’91200:00:05.954.value()125’91200:00:03.125At first glance, the number of pages read is identical, but .exist() is clearly taking more time. Why? Execution plans reveal that .exist() sneaks in a Merge Join, adding overhead.

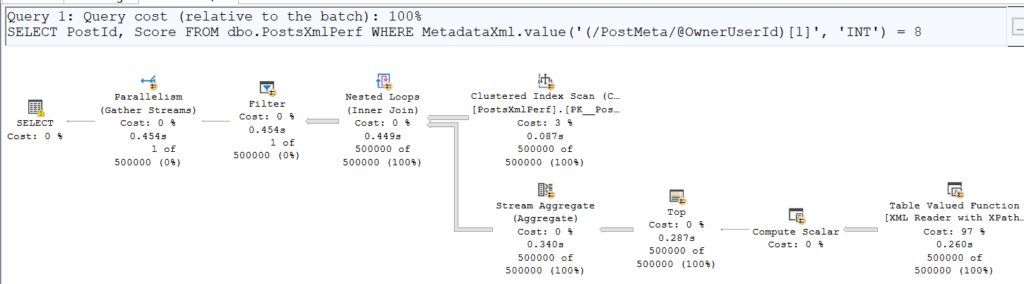

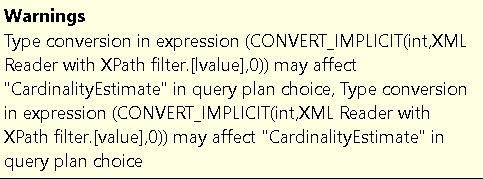

Additionally, on both execution plans we can see a small yellow bang icon. On the first plan, it’s just a memory grant warning, but the second one is more interesting:

Alright, a bit strange ; let’s move forward with some tuning and maybe this warning will disappear.

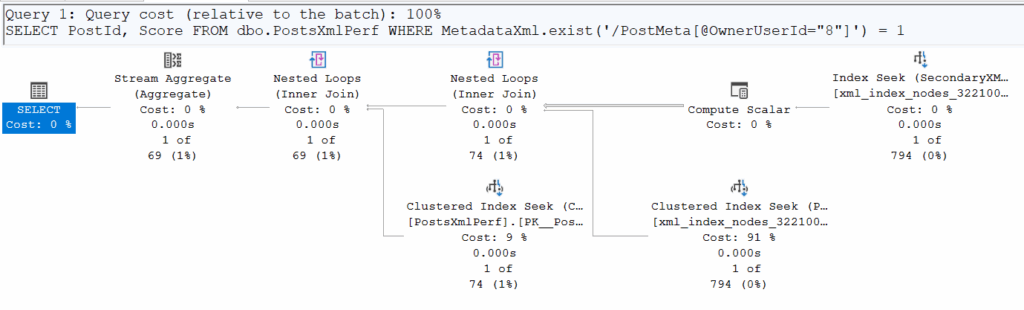

To help with querying, it can be useful to create a more targeted index for XML queries.

Let’s create an index on the column that contains XML. However, as you might expect, it’s not as straightforward as indexing a regular column. For an XML column, you first need to create a primary XML index, which physically indexes the overall structure of the column (similar to a clustered index), and then a secondary XML index, which builds on the primary index and is optimized for a specific type of query (value, path, or property) – to know more about XML indexes : Microsoft Learn, MssqlTips.

So, let’s create these indexes !

CREATE PRIMARY XML INDEX IX_XML_Primary_MetadataXml

ON dbo.PostsXmlPerf (MetadataXml);

CREATE XML INDEX IX_XML_Value_MetadataXml

ON dbo.PostsXmlPerf (MetadataXml)

USING XML INDEX IX_XML_Primary_MetadataXml FOR Value;Let’s rerun the performance tests with our two queries above, making sure to flush the buffer cache between each execution.

Logical ReadsCPU Time.exist()400:00:00.031.value()125’91200:00:03.937

Logical ReadsCPU Time.exist()400:00:00.031.value()125’91200:00:03.937

The inevitable happened: the implicit conversion makes it impossible to use the secondary XML index due to a data type mismatch, preventing an actual seek on it. We do see a seek in the second execution plan, but it occurs for every row in the table (500’000 executions) and is essentially just accessing the underlying physical structure stored in the clustered index. In reality, this ‘seek’ is SQL Server scanning the XML to retrieve the exact value of the requested field (in this case, OwnerUserId).

This conversion issue occurs because the function .exist() returns a BIT, while the function .value() returns a SQL type.

This difference in return type can lead to significant performance problems when tuning queries that involve XML.

As explained by Microsoft: “For performance reasons, instead of using the value() method in a predicate to compare with a relational value, use exist() with sql:column()“

Working with XML in SQL Server can be powerful, but it can quickly become tricky to manage. .exist() and .value() might seem similar, but execution differences and type conversions can have a huge performance impact. Proper XML indexing is essential, and knowing your returned data types can save you from hours of head-scratching. Most importantly, before deciding to store data as XML, consider whether it’s truly necessary ; relational databases are not natively optimized for XML and can introduce complexity and performance challenges.

Sometimes, a simpler and highly effective approach is to extract frequently queried XML fields at the application level and store them in separate columns. This makes them much easier to index and query, reducing overhead while keeping your data accessible.

If your application relies heavily on semi-structured data or large volumes of XML/JSON, it’s worth considering alternative engines. For instance, MongoDB provides native document storage and fast queries on JSON/BSON, while PostgreSQL offers XML and JSONB support with powerful querying functions. Choosing the right tool for the job can simplify your architecture and significantly improve performance.

And to dive even deeper into the topic, with a forthcoming article focused this time on XML storage, keep an eye on the dbi services blogs !

L’article Understanding XML performance pitfalls in SQL Server est apparu en premier sur dbi Blog.