Yann Neuhaus

Customer experience – How to change ip address in a fully clustered environment

If you have a clustered environment already up and running, but you want or need to change all the IP addresses of your environment with minimal downtime (total downtime during the operation: 3 min), you’re in the right place.

I will show you how we can do that.

Context

Two-node clustered environment running SQL Server 2022 on Windows Server 2022. One node hosts a standalone instance as well as an Always On instance shared with the other node. If you only have a standalone instance, it will be unavailable during the changes.

Here’s the step-by-step procedure to modify the IP addresses of a complete clustered environment:

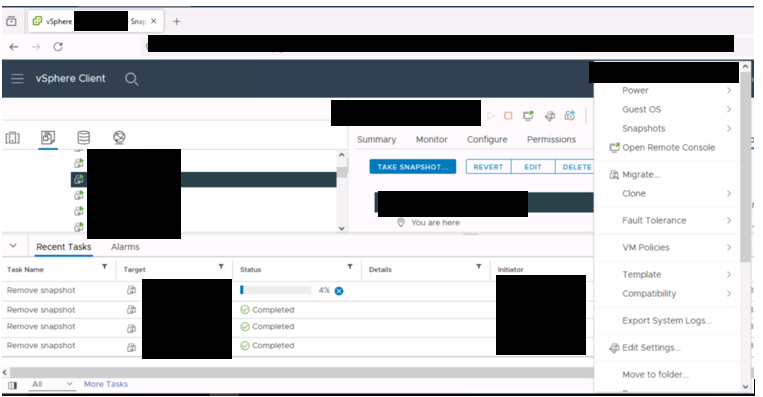

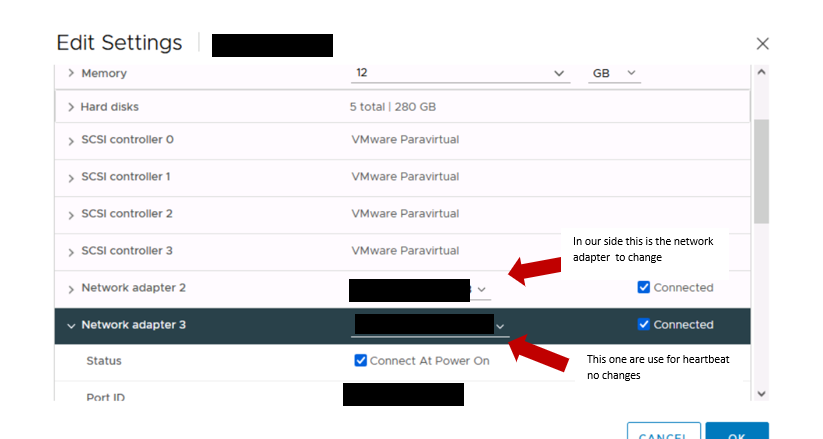

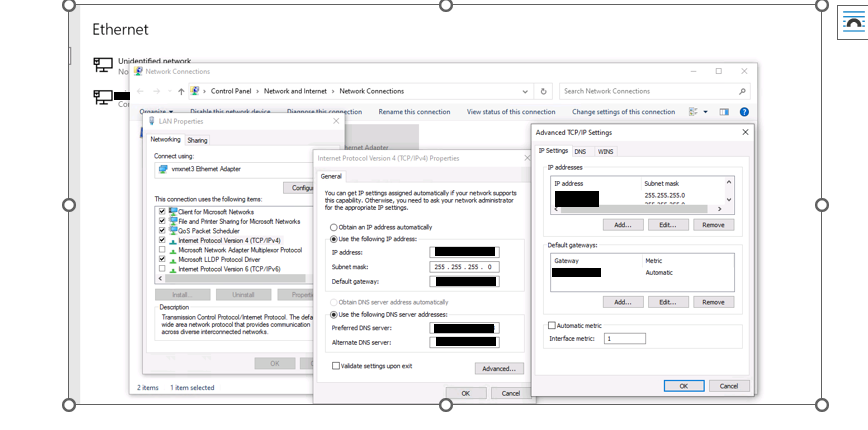

1) Change the IP of the two virtual machines

Change the network parameters such as VLAN settings and MAC address (in vSphere).

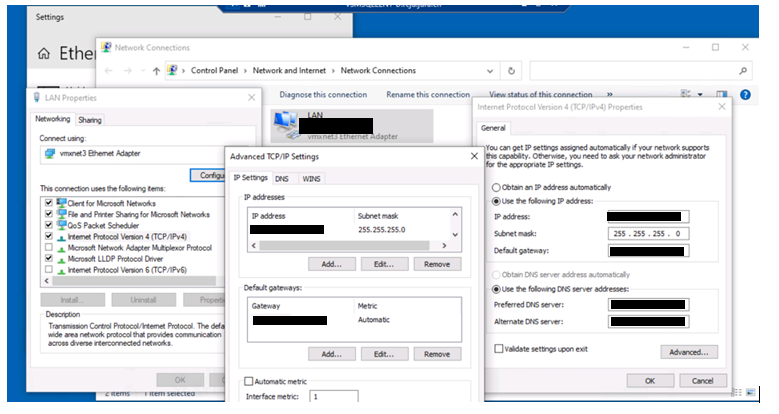

Then check the IP on the network card.

Access the network interface card on the respective nodes and make the change.

2) Change the IP of the cluster card on each VM

Modify it on the network card and change the DNS if necessary.

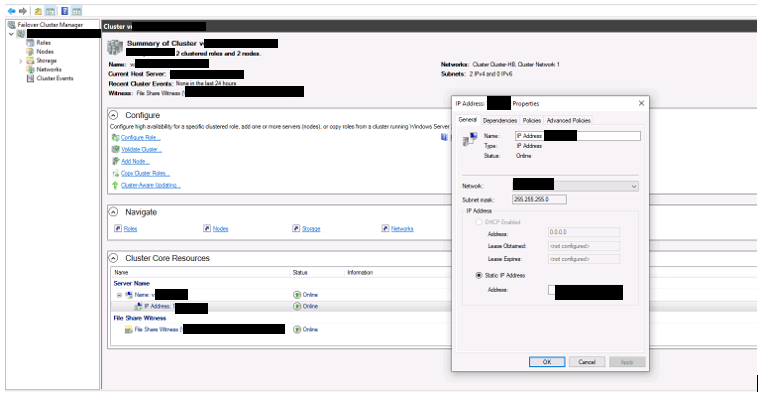

3) Change the IP of the cluster (in Windows Failover Cluster)

In the Failover Cluster Manager pane, select your cluster and expand Cluster Core Resources.

Right-click the cluster, and select Properties > IP address.

Change the IP address of the failover cluster using the Edit option and click OK.

Click Apply.

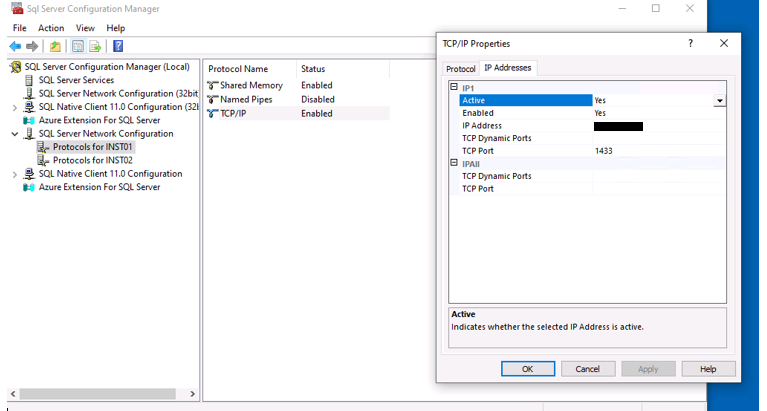

4) Change the IP of the SQL instance

If you have a specific IP for your instance, add the new IP on the network card.

Otherwise, you can just change the IP in SQL Server Configuration Manager.

Restart the instance.

Check that connections work after the changes.

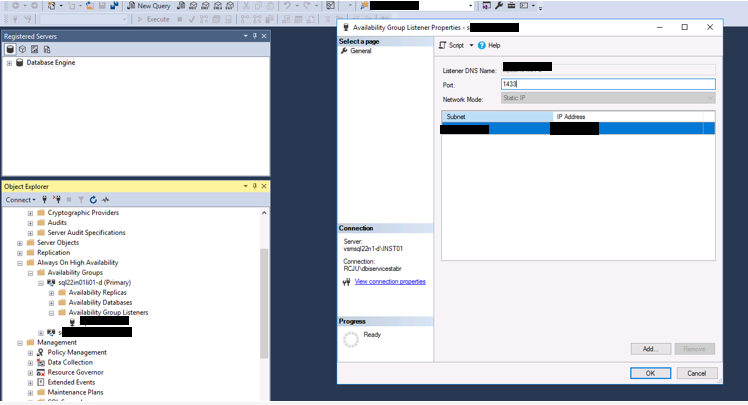

5) Change the IP of the listener

Go to the AG in Failover Cluster Manager, locate the server name in the bottom panel, right-click it, open Properties, and change the static IP address.

Problem we encountered


In our case, the two IP ranges did not have the same firewall rules. This initially blocked the hardware part of the system, as well as the AG witness, which was unable to check the state of the two nodes. The network team then applied the same rules to both ranges, and all was well.
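Before changing each layer, it can help to check that every planned address really belongs to the new range. Here is a minimal sketch in Python using the standard `ipaddress` module. The subnet, the addresses, and the function name are made-up placeholders, not values from the customer environment:

```python
import ipaddress

def addresses_outside_subnet(subnet, addresses):
    """Return the names whose IP address is not inside the given subnet."""
    network = ipaddress.ip_network(subnet)
    return sorted(
        name for name, ip in addresses.items()
        if ipaddress.ip_address(ip) not in network
    )

# Hypothetical new range and planned addresses (placeholders only).
new_subnet = "10.20.30.0/24"
planned = {
    "node1": "10.20.30.11",
    "node2": "10.20.30.12",
    "cluster": "10.20.30.20",
    "sql_instance": "10.20.30.21",
    "ag_listener": "10.20.31.22",  # deliberately wrong to show the check
}

print(addresses_outside_subnet(new_subnet, planned))
```

Any name returned by the function is an address that would end up in a different range, and possibly behind different firewall rules, than the rest of the cluster.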

The article Customer experience – How to change ip address in a fully clustered environment first appeared on dbi Blog.

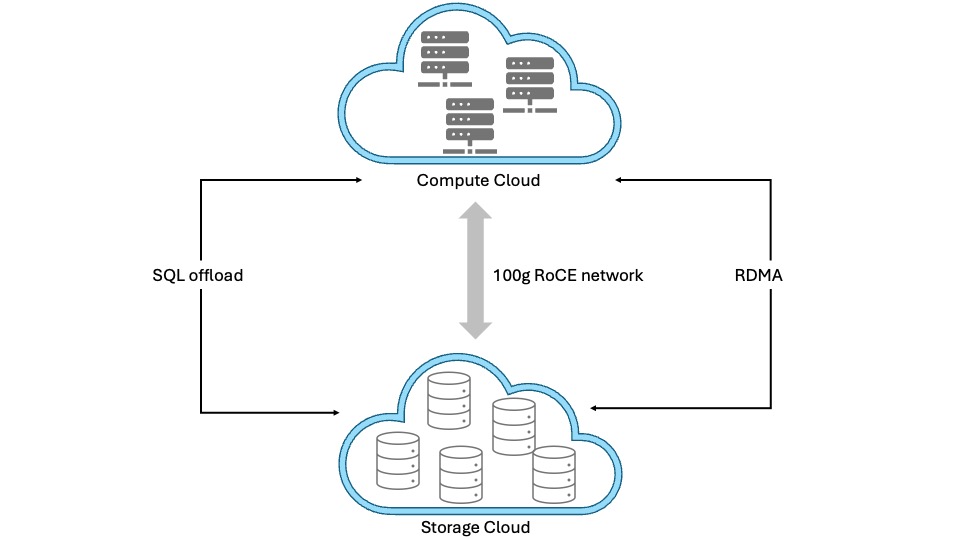

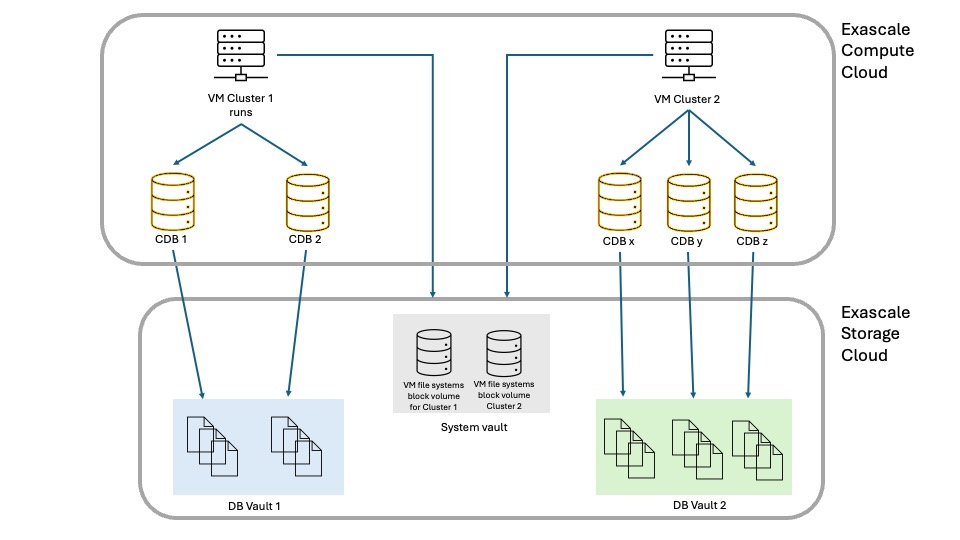

Exascale Infrastructure : new flavor of Exadata Database Service

Exadata on Exascale Infrastructure is a new deployment option for Exadata Database Service. It comes in addition to the well known Exadata Cloud@Customer or Exadata on Dedicated Infrastructure options already available. It is based on new storage management technology decoupling database and Grid Infrastructure clusters from the Exadata underlying storage servers by integrating the database kernel directly with the Exascale storage structures.

What is Exascale Infrastructure?

Simply put, Exascale is the next generation of Oracle Exadata Database Services. It combines a cloud storage approach for flexibility and hyper-elasticity with the performance of Exadata Infrastructure. It introduces a loosely-coupled shared and multitenant architecture where Database and Grid Infrastructure clusters are decoupled from the underlying Exadata storage servers which become a pool of shared storage resources available for multiple Grid Infrastructure clusters and databases.

Strict data isolation provides secure storage sharing while storage pooling enables flexible and dynamic provisioning combined with better storage space and processing utilization.

Advanced snapshot and cloning features, leveraging Redirect-On-Write technology instead of Copy-On-Write, enable space-efficient thin clones from any read/write database or pluggable database. Read-only test masters are therefore a thing of the past. These features alone make Exascale a game-changer for database refreshes, CI/CD workflows and pipelines, and the provisioning of development environments, all done with single SQL commands and much faster than before.

Block storage services allow the creation of arbitrary-sized block volumes for use by numerous applications. Exascale block volumes are also used to store Exadata database server virtual machine images, enabling:

- creation of more virtual machines

- removal of local storage dependency inside the Exadata compute nodes

- seamless migration of virtual machines between different compute nodes

Finally, the following hardware and software considerations complete this brief presentation of Exascale:

- runs on 2-socket Oracle Exadata system hardware with RoCE Network Fabric (X8M-2 or later)

- Database 23ai release 23.5.0 or later is required for full-featured native Database file storage in Exascale

- Exascale block volumes support databases using older Database software releases back to Oracle Database 19c

- Exascale is built into Exadata System Software (since release 24.1)

The main point with Exascale architecture is cloud-scale, multi-tenant resource pooling, both for storage and compute.

Storage pooling


Exascale is based on pooling storage servers which provide services such as Storage Pools, Vaults and Volume Management. Vaults, which can be considered an equivalent of ASM diskgroups, are directly accessed by the database kernel.

File and extent management is done by Exadata System Software, thus freeing the compute layer from ASM processes and memory structures for database files access and extents management (with Database 23ai or 26ai; for Database 19c, ASM is still required). With storage management moving to storage servers, resource management becomes more flexible and more memory and CPU resources are available on compute nodes to process database tasks.

Exascale also provides redundancy, caching, file metadata management, snapshots and clones as well as security and data integrity features.

Of course, since Exascale is built on top of Exadata, you benefit from features like RDMA, RoCE, Smart Flash Cache, XRMEM, Smart Scan, Storage Indexes, Columnar Caching.

Compute pooling

On the compute side, we have database-optimized servers which run Database 23ai or newer and Grid Infrastructure Cluster management software. The physical database servers host the VM Clusters and are managed by Oracle. Unlike Exadata on Dedicated Infrastructure, there is no need to provision an infrastructure before going ahead with VM Cluster creation and configuration: in Exascale, you only deal with VM Clusters.

VM file systems are centrally hosted by Oracle on RDMA-enabled block volumes in a system vault. VM images used by the VM Clusters are no longer hosted on local storage on the database servers. This allows the number of VMs running on the database servers to rise from 12 to 50.

Each VM Cluster accesses a Database Vault storing the database files with strict isolation from other VM Clusters database files.

Virtual Cloud Network

Client and backup connectivity is provided by Virtual Cloud Network (VCN) services.

This loosely-coupled, shared and multitenant architecture enables far greater flexibility than what was possible with ASM or even Exadata on Dedicated Infrastructure. Exascale’s hyper-elasticity makes it possible to start with very small VM Clusters and then scale as the workloads increase, with Oracle managing the infrastructure automatically. You can start as small as 1 VM per cluster (up to 10), 8 eCPUs per VM (up to 200), 22GB of memory per VM, 220GB file system storage per VM and 300GB Vault storage per VM Cluster (up to 100TB). Memory is tightly coupled to the eCPU configuration, with 2.75GB per eCPU, and thus does not scale independently from the number of eCPUs.
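The memory figures follow directly from the 2.75GB-per-eCPU ratio, which a short Python sketch makes explicit. The function name is made up for illustration, and the 8 to 200 bounds are simply the per-VM limits quoted above (any stepping rules for eCPU counts are not covered here):

```python
GB_PER_ECPU = 2.75  # memory ratio quoted in the text

def vm_memory_gb(ecpus):
    """Memory allocated to one VM for a given number of eCPUs."""
    if not 8 <= ecpus <= 200:
        raise ValueError("eCPUs per VM must be between 8 and 200")
    return ecpus * GB_PER_ECPU

for ecpus in (8, 16, 200):
    print(ecpus, "eCPUs ->", vm_memory_gb(ecpus), "GB")
```

The smallest VM (8 eCPUs) gets 22GB, which matches the minimum memory stated above, and the largest (200 eCPUs) gets 550GB.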

For those new to eCPU, it is a standard billing metric based on the number of cores per hour elastically allocated from a pool of compute and storage servers. eCPUs are not tied to the make, model or clock speed of the underlying processor. By contrast, an OCPU is the equivalent of one physical core with hyper-threading enabled.

To summarize

To best understand what Exascale Infrastructure is and introduces, here is the wording which best describes this new flavor of Exadata Database Service:

- loose coupling of compute and storage

- hyper-elasticity

- shared and multi-tenant service model

- ASM-less (for 23ai or newer)

- Exadata performance, reliability, availability and security at any scale

- CI/CD friendly

- pay-per-use model

Stay tuned for more on Exascale …

The article Exascale Infrastructure : new flavor of Exadata Database Service first appeared on dbi Blog.

OGG-00423 when performing initial load with GoldenGate 23ai

A very quick blog post today to tackle the OGG-00423 error. There is not much information online on the matter, and the official Oracle documentation doesn’t help. If you ever stumble upon an OGG-00423 error when setting up GoldenGate replication, remember that it’s most likely related to grants given to the GoldenGate user. An example of the error is given below, after starting an initial load:

2025-10-27 09:52:40 ERROR OGG-00423 Could not find definition for pdb_source.app_source.t1.

This error happened when doing an initial load with the following configuration:

extract extini

useridalias cdb01

extfile aa

SOURCECATALOG pdb_source

table app_source.t1, SQLPredicate "As of SCN 3899696";

This error should be present whether you use SOURCECATALOG or the full three-part TABLE name.

For replicats, a common solution for OGG-00423 is to use the ASSUMETARGETDEFS parameter in the configuration file, but this is a replication-only parameter, and there is no such thing for the initial load. In this case, the error was due to the user defined in the cdb01 alias lacking select on the specified t1 table:

[oracle@vmogg ~]$ sqlplus c##ggadmin

Enter password:

SQL> alter session set container=pdb_source;

Session altered.

SQL> select * from app_source.t1;

select * from app_source.t1

*

ERROR at line 1:

ORA-00942: table or view does not exist

After granting the correct SELECT privilege to the GoldenGate user, it works! Here is an example of how to grant this privilege. You might want to grant it differently, depending on the security requirements of your deployments:

sqlplus / as sysdba

ALTER SESSION SET CONTAINER=PDB_SOURCE;

GRANT SELECT ANY TABLE TO C##GGADMIN CONTAINER=CURRENT;

NB: When testing GoldenGate setups, a good practice to debug OGG-errors if you do not use a DBA user for replication is to temporarily grant DBA (or a DBA role) to the GoldenGate user. This way, you can quickly track down the root cause of your problem, at least if it’s related to grants.

The article OGG-00423 when performing initial load with GoldenGate 23ai first appeared on dbi Blog.

SQL Server 2025 release

Microsoft has announced the release of SQL Server 2025. The solution can be downloaded using the following link: https://www.microsoft.com/en-us/sql-server/

Among the new features available, we have:

- The introduction of the Standard Developer Edition, which offers the same features as the Standard Edition, but is free when used in a non-production environment, similar to the Enterprise Developer Edition (formerly Developer Edition).

- The removal of the Web Edition.

- The Express Edition can now host databases of up to 50 GB. In practice, it is quite rare to see our customers use the Express Edition. It generally serves as a toolbox or for very specific scenarios where the previous 10 GB limit was not an issue. Therefore, lifting this limit will not have a major impact due to the many other restrictions it still has.

- The introduction of AI capabilities within the database engine, including vector indexes, vector data types, and the corresponding functions.

- For development purposes, SQL Server introduces a native JSON data type and adds support for regular expressions (regex).

- Improvements to Availability Groups, such as the ability to offload full, differential, and transaction log backups to a secondary replica.

- The introduction of optimized locking and a new ZSTD algorithm for backup compression.

We also note the release of SQL Server Management Studio 22.

References :

https://learn.microsoft.com/en-us/sql/sql-server/what-s-new-in-sql-server-2025?view=sql-server-ver17

https://techcommunity.microsoft.com/blog/sqlserver/sql-server-2025-is-now-generally-available/4470570

https://learn.microsoft.com/en-us/ssms/release-notes-22

Thank you, Amine Haloui.

The article SQL Server 2025 release first appeared on dbi Blog.

ORA-44001 when setting up GoldenGate privileges on a CDB

I was recently setting up GoldenGate for a client when I was struck by an ORA-44001 error. I definitely wasn’t the first one to come across this while playing with grants on GoldenGate users, but nowhere could I find the exact reason for the issue. Not a single question or comment on that matter offered a solution.

The problem occurs when running the DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE procedure described in the documentation. An example given by the documentation is the following:

EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(GRANTEE => 'c##ggadmin', CONTAINER => 'ALL');

And the main complaint mentioned regarding this command was the following ORA-44001 error:

SQL> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee => 'c##ggadmin', container=>'ALL');

*

ERROR at line 1:

ORA-44001: invalid schema

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3652

ORA-06512: at "SYS.DBMS_ASSERT", line 410

ORA-06512: at "SYS.DBMS_XSTREAM_ADM_INTERNAL", line 50

ORA-06512: at "SYS.DBMS_XSTREAM_ADM_INTERNAL", line 3082

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3632

ORA-06512: at line 1

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3812

ORA-06512: at "SYS.DBMS_GOLDENGATE_AUTH", line 63

ORA-06512: at line 2

The solution is in fact quite simple. But I decided to investigate it a bit further, playing with the multitenant architecture. In this blog, I will use an Oracle 19c CDB with a single pluggable database named PDB1.

For me, the open mode of the PDBs was really the only thing that mattered when encountering this error. On a CDB with tens of PDBs, you might have some PDBs in read-only mode, whether it’s to keep templates aside or for temporary restrictions on a specific PDB. Let’s try to replicate the error.

First example: PDB in read-write, grant operation succeeds

If you first try to grant the admin privileges with a PDB in read-write, it succeeds:

SQL> alter pluggable database pdb1 open read write;

Pluggable database altered.

SQL> create user c##oggadmin identified by ogg;

User created.

SQL> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee => 'c##oggadmin', container=>'ALL');

PL/SQL procedure successfully completed.

ORA-44001

If you first put the PDB in read-only mode and then create the user, the user cannot be created in that PDB, so it does not exist there, and you get the ORA-44001 when granting privileges.

SQL> drop user c##oggadmin;

User dropped.

SQL> alter pluggable database pdb1 close immediate;

Pluggable database altered.

SQL> alter pluggable database pdb1 open read only;

Pluggable database altered.

SQL> create user c##oggadmin identified by ogg;

User created.

SQL> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee => 'c##oggadmin', container=>'ALL');

*

ERROR at line 1:

ORA-44001: invalid schema

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3652

ORA-06512: at "SYS.DBMS_ASSERT", line 410

ORA-06512: at "SYS.DBMS_XSTREAM_ADM_INTERNAL", line 50

ORA-06512: at "SYS.DBMS_XSTREAM_ADM_INTERNAL", line 3082

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3632

ORA-06512: at line 1

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3812

ORA-06512: at "SYS.DBMS_GOLDENGATE_AUTH", line 63

ORA-06512: at line 2

ORA-16000

Where this gets tricky is the order in which you run the statements. If you create the user before putting the PDB in read-only mode, you get another error, because the user actually exists:

SQL> drop user c##oggadmin;

User dropped.

SQL> alter pluggable database pdb1 close immediate;

Pluggable database altered.

SQL> alter pluggable database pdb1 open read write;

Pluggable database altered.

SQL> create user c##oggadmin identified by ogg;

User created.

SQL> alter pluggable database pdb1 close immediate;

Pluggable database altered.

SQL> alter pluggable database pdb1 open read only;

Pluggable database altered.

SQL> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee => 'c##oggadmin', container=>'ALL');

*

ERROR at line 1:

ORA-16000: database or pluggable database open for read-only access

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3652

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 93

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 84

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 123

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3635

ORA-06512: at line 1

ORA-06512: at "SYS.DBMS_XSTREAM_AUTH_IVK", line 3812

ORA-06512: at "SYS.DBMS_GOLDENGATE_AUTH", line 63

ORA-06512: at line 2

As often with Oracle, the error messages can be misleading. The third example clearly points to the issue, while the second one is tricky to debug (even though it is completely valid).
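Since both errors come down to a PDB that is not open read-write, a pre-check on the open modes before running the grant can save some debugging. The sketch below is in Python and only shows the filtering logic. It assumes rows shaped like the result of `select name, open_mode from v$pdbs`, the sample rows are invented, and the function name is hypothetical. The seed is skipped because it is always read-only and did not prevent the grant in the first example:

```python
def pdbs_to_review(pdb_rows):
    """Return PDB names that are not open READ WRITE (the seed is ignored)."""
    return [
        name for name, open_mode in pdb_rows
        if name != "PDB$SEED" and open_mode != "READ WRITE"
    ]

# Invented sample rows, in the shape (name, open_mode).
rows = [
    ("PDB$SEED", "READ ONLY"),
    ("PDB1", "READ ONLY"),
    ("PDB2", "READ WRITE"),
]

print(pdbs_to_review(rows))
```

With these sample rows, only PDB1 is reported, which is the situation reproduced in the second and third examples.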

Should I create a GoldenGate user at the CDB level?

Depending on your replication configuration, you might need to create a common user instead of multiple users per PDB. For instance, this is strictly required when setting up a downstream extract. However, in general, it might be a bad idea to create a common C##GGADMIN user and grant it privileges with CONTAINER => ALL, because you might not want such a privileged user to exist on all your PDBs.

The article ORA-44001 when setting up GoldenGate privileges on a CDB first appeared on dbi Blog.

Alfresco – Solr search result inconsistencies

We recently encountered an error at a customer’s site. Their Alfresco environment was behaving strangely.

Sometimes searches returned the expected results, and sometimes they did not.

The environment is composed of two Alfresco 7 nodes in a cluster and two load-balanced Solr 6.6 nodes (in active-active mode).

Sometimes the customer isn’t able to retrieve a document they created recently.

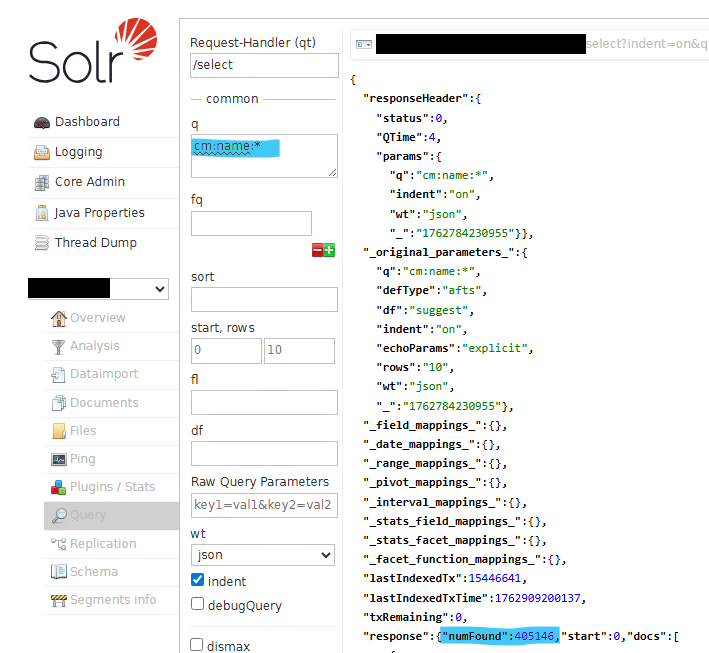

Investigation steps

Since we have load balancing in place, the first step is to confirm that everything is okay on the two nodes.

- I checked that Alfresco is running as expected. Nothing out of the ordinary; the processes are there and there are no errors in the log files and everything is green in the admin console.

- Then, I checked the alfresco-global.properties on both nodes to ensure the configuration is the same. We never know. I also checked the way we connect to Solr and confirmed that the load-balanced URL is being used.

- At this point, it is almost certain that the problem is with Solr. We will start by checking the administration console. Because we have load balancing, we must connect to each node individually and cannot use the URL in alfresco-global.properties.

- At first glance, everything seems fine, but a closer inspection of the Core Admin panel reveals a difference of several thousand “NumDocs” between the two nodes. These values may differ because they are internal Solr files. However, the discrepancy is too high in my opinion.

- How can this assumption be verified? Move to any core and run a query to list all the files (cm:name:*). On the first node, the query returns an error. On the second node, I received an answer similar to the one below:

- Now moving to the server where I have the error, in the logs there are errors like:

2025-11-10 15:33:32.466 ERROR (searcherExecutor-137-thread-1-processing-x:alfresco-3) [ x:alfresco-3] o.a.s.c.SolrCore null:org.alfresco.service.cmr.dictionary.DictionaryException10100009 d_dictionary.model.err.parse.failure

at org.alfresco.repo.dictionary.M2Model.createModel(M2Model.java:113)

at org.alfresco.repo.dictionary.M2Model.createModel(M2Model.java:99)

at org.alfresco.solr.tracker.ModelTracker.loadPersistedModels(ModelTracker.java:181)

at org.alfresco.solr.tracker.ModelTracker.<init>(ModelTracker.java:142)

at org.alfresco.solr.lifecycle.SolrCoreLoadListener.createModelTracker(SolrCoreLoadListener.java:341)

at org.alfresco.solr.lifecycle.SolrCoreLoadListener.newSearcher(SolrCoreLoadListener.java:139)

at org.apache.solr.core.SolrCore.lambda$getSearcher$15(SolrCore.java:2249)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at org.apache.solr.common.util.ExecutorUtil$MDCAwareThreadPoolExecutor.lambda$execute$0(ExecutorUtil.java:229)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:829)

Caused by: org.jibx.runtime.JiBXException: Error accessing document

at org.jibx.runtime.impl.XMLPullReaderFactory$XMLPullReader.next(XMLPullReaderFactory.java:293)

at org.jibx.runtime.impl.UnmarshallingContext.toStart(UnmarshallingContext.java:446)

at org.jibx.runtime.impl.UnmarshallingContext.unmarshalElement(UnmarshallingContext.java:2750)

at org.jibx.runtime.impl.UnmarshallingContext.unmarshalDocument(UnmarshallingContext.java:2900)

at org.alfresco.repo.dictionary.M2Model.createModel(M2Model.java:108)

... 11 more

Caused by: java.io.EOFException: input contained no data

at org.xmlpull.mxp1.MXParser.fillBuf(MXParser.java:3003)

at org.xmlpull.mxp1.MXParser.more(MXParser.java:3046)

at org.xmlpull.mxp1.MXParser.parseProlog(MXParser.java:1410)

at org.xmlpull.mxp1.MXParser.nextImpl(MXParser.java:1395)

at org.xmlpull.mxp1.MXParser.next(MXParser.java:1093)

at org.jibx.runtime.impl.XMLPullReaderFactory$XMLPullReader.next(XMLPullReaderFactory.java:291)

... 15 more

- It looks like the problem is related to the model definition. We need to check if the models are still there in ../solr_data/models. The models are still in place, but one of them is 0 KB.

- So we need to force delete the empty file and restart Solr to force the model to be reimported.

After taking these actions, we reimported the model file and the errors in the logs disappeared. In the admin console, we can see NumDocs increasing again. When we re-run the query, we get a result.
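Spotting the empty model file can be scripted. The following Python sketch lists zero-byte files in a directory. It runs against a temporary directory with two invented file names so that it is self-contained. On a real Solr node, you would point it at the models directory mentioned above instead:

```python
import tempfile
from pathlib import Path

def empty_files(directory):
    """Return the names of zero-byte regular files in a directory, sorted."""
    return sorted(
        p.name for p in Path(directory).iterdir()
        if p.is_file() and p.stat().st_size == 0
    )

# Demo on a throwaway directory; the file names are placeholders.
with tempfile.TemporaryDirectory() as models_dir:
    Path(models_dir, "good_model.xml").write_text("<model/>")
    Path(models_dir, "broken_model.xml").touch()  # 0-byte file
    print(empty_files(models_dir))
```

The function only reports the files. Deleting them and restarting Solr remains a manual, deliberate step.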

The article Alfresco – Solr search result inconsistencies first appeared on dbi Blog.

PostgreSQL 19: Logical replication of sequences

Logical replication in PostgreSQL got a lot of features and performance improvements over the last releases. It was introduced in PostgreSQL 10 back in 2017, and PostgreSQL 9.6 (in 2016) introduced logical decoding which is the basis for logical replication. Today logical replication is really mature and from my point of view only two major features are missing: DDL replication and the replication of sequences. The latter is now possible with the upcoming PostgreSQL 19 next year, and this is what this post is about.

Before we can see how this works we need a logical replication setup. An easy method to set this up is to create a physical replica and then transform that into a logical replica using pg_createsubscriber:

postgres@:/home/postgres/ [pgdev] psql -c "create table t ( a int primary key generated always as identity, b text)"

CREATE TABLE

postgres@:/home/postgres/ [pgdev] psql -c "insert into t (b) values ('aaaa')"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] psql -c "insert into t (b) values ('bbbb')"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] pg_basebackup --pgdata=/var/tmp/dummy --write-recovery-conf --checkpoint=fast

postgres@:/home/postgres/ [pgdev] echo "port=8888" >> /var/tmp/dummy/postgresql.auto.conf

postgres@:/home/postgres/ [pgdev] pg_createsubscriber --all --pgdata=/var/tmp/dummy --subscriber-port=8888 --publisher-server="host=localhost port=5432"

postgres@:/home/postgres/ [pgdev] pg_ctl --pgdata=/var/tmp/dummy start

2025-11-11 13:21:20.818 CET - 1 - 9669 - - @ - 0LOG: redirecting log output to logging collector process

2025-11-11 13:21:20.818 CET - 2 - 9669 - - @ - 0HINT: Future log output will appear in directory "pg_log".

2025-11-11 13:21:21.250 CET - 1 - 9684 - - @ - 0LOG: redirecting log output to logging collector process

2025-11-11 13:21:21.250 CET - 2 - 9684 - - @ - 0HINT: Future log output will appear in directory "pg_log".

Once this is done we have a logical replica and the data is synchronized:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select * from t"

a | b

---+------

1 | aaaa

2 | bbbb

(2 rows)

A quick check that replication is ongoing:

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "insert into t (b) values('cccc');"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select * from t"

a | b

---+------

1 | aaaa

2 | bbbb

3 | cccc

(3 rows)

The “generated always as identity” we used above to create the table automatically created a sequence for us:

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "\x" -c "select * from pg_sequences;"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 3

Checking the same sequence on the replica clearly shows that the sequence is not synchronized (last_value is still a 2):

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "\x" -c "select * from pg_sequences;"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 2

The reason is that sequences are not synchronized automatically:

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "\x" -c "select * from pg_publication;"

Expanded display is on.

-[ RECORD 1 ]---+-------------------------------

oid | 16397

pubname | pg_createsubscriber_5_f58e9acd

pubowner | 10

puballtables | t

puballsequences | f

pubinsert | t

pubupdate | t

pubdelete | t

pubtruncate | t

pubviaroot | f

pubgencols | n

As there is currently no way to enable sequence synchronization for an existing publication, we can either drop and re-create it or add an additional publication and subscription just for the sequences:

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "create publication pubseq for all sequences;"

CREATE PUBLICATION

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "\x" -c "select * from pg_publication;"

Expanded display is on.

-[ RECORD 1 ]---+-------------------------------

oid | 16397

pubname | pg_createsubscriber_5_f58e9acd

pubowner | 10

puballtables | t

puballsequences | f

pubinsert | t

pubupdate | t

pubdelete | t

pubtruncate | t

pubviaroot | f

pubgencols | n

-[ RECORD 2 ]---+-------------------------------

oid | 16398

pubname | pubseq

pubowner | 10

puballtables | f

puballsequences | t

pubinsert | t

pubupdate | t

pubdelete | t

pubtruncate | t

pubviaroot | f

pubgencols | n

The publication has sequence replication enabled and the subscription to consume this can be created like this:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "create subscription subseq connection 'host=localhost port=5432' publication pubseq"

CREATE SUBSCRIPTION

Now the sequence is visible in pg_subscription_rel on the subscriber:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select * from pg_subscription_rel;"

srsubid | srrelid | srsubstate | srsublsn

---------+---------+------------+------------

24589 | 16385 | r |

24590 | 16384 | r | 0/04004780 -- sequence

(2 rows)

State “r” means ready, so the sequence should have synchronized, and indeed:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "\x" -c "select * from pg_sequences"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 3

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "\x" -c "select * from pg_sequences"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 3

Adding new rows to the table, which also increases the last_value of the sequence, should also synchronize the sequences:

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "insert into t (b) values ('eeee')"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "insert into t (b) values ('ffff')"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] psql -p 5432 -c "\x" -c "select * from pg_sequences"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 6

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "\x" -c "select * from pg_sequences"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 4

… but is not happening automatically. To get them synchronized you need to refresh the subscription:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "\x" -c "alter subscription subseq refresh sequences"

Expanded display is on.

ALTER SUBSCRIPTION

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "\x" -c "select * from pg_sequences"

Expanded display is on.

-[ RECORD 1 ]-+-----------

schemaname | public

sequencename | t_a_seq

sequenceowner | postgres

data_type | integer

start_value | 1

min_value | 1

max_value | 2147483647

increment_by | 1

cycle | f

cache_size | 1

last_value | 6
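To decide when such a refresh is needed, the last_value of each sequence can be compared between publisher and subscriber. The Python sketch below only shows the comparison. The two dictionaries are filled by hand with the values seen before the refresh, whereas in practice they would be read from pg_sequences on each side. The function name is made up for illustration:

```python
def sequences_behind(publisher, subscriber):
    """Map sequence name -> (publisher value, subscriber value) where they differ."""
    return {
        name: (value, subscriber.get(name))
        for name, value in publisher.items()
        if subscriber.get(name) != value
    }

# last_value as seen on each side before the refresh.
publisher = {"public.t_a_seq": 6}
subscriber = {"public.t_a_seq": 4}

print(sequences_behind(publisher, subscriber))
```

An empty result means the sequences are in sync and no refresh is required.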

Great, this reduces the work to fix the sequences quite a bit and is really helpful. As usual, thanks to all involved.

The article PostgreSQL 19: Logical replication of sequences first appeared on dbi Blog.

PostgreSQL 19: The “WAIT FOR” command

When you go for replication and you don’t use synchronous replication there is always a window when data written on the primary is not yet available in the replica. This is known as “replication lag” and can be monitored using the pg_stat_replication catalog view. A recent commit to PostgreSQL 19 implements a way to wait for data to be visible on the replica without switching to synchronous replication, and this is what the “WAIT FOR” command is for.

Before we can see how that works we need a replica, because when you try to execute this command on a primary you’ll get this:

postgres=# select version();

version

---------------------------------------------------------------------------------------

PostgreSQL 19devel on x86_64-linux, compiled by gcc-15.1.1, 64-bit

(1 row)

postgres=# WAIT FOR LSN '0/306EE20';

ERROR: recovery is not in progress

HINT: Waiting for the replay LSN can only be executed during recovery.

postgres=#

So, let’s create a replica and start it up:

postgres@:/home/postgres/ [pgdev] mkdir /var/tmp/dummy

postgres@:/home/postgres/ [pgdev] pg_basebackup --pgdata=/var/tmp/dummy --write-recovery-conf --checkpoint=fast

postgres@:/home/postgres/ [pgdev] echo "port=8888" >> /var/tmp/dummy/postgresql.auto.conf

postgres@:/home/postgres/ [pgdev] chmod 700 /var/tmp/dummy

postgres@:/home/postgres/ [pgdev] pg_ctl --pgdata=/var/tmp/dummy start

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select pg_is_in_recovery()"

pg_is_in_recovery

-------------------

t

(1 row)

As nothing is happening on the primary right now, data on the primary and the replica is exactly the same:

postgres=# select usename,sent_lsn,write_lsn,flush_lsn,replay_lsn from pg_stat_replication;

usename | sent_lsn | write_lsn | flush_lsn | replay_lsn

----------+------------+------------+------------+------------

postgres | 0/03000060 | 0/03000060 | 0/03000060 | 0/03000060

To see how “WAIT FOR” behaves we need to cheat a little and pause WAL replay on the replica (we could also cut the network between the primary and the replica):

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select * from pg_wal_replay_pause();"

pg_wal_replay_pause

---------------------

(1 row)

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "select * from pg_is_wal_replay_paused();"

pg_is_wal_replay_paused

-------------------------

t

(1 row)

On the primary, create a table and get the current LSN:

postgres@:/home/postgres/ [pgdev] psql -c "create table t(a int)"

CREATE TABLE

postgres@:/home/postgres/ [pgdev] psql -c "insert into t values(1)"

INSERT 0 1

postgres@:/home/postgres/ [pgdev] psql -c "select pg_current_wal_insert_lsn();"

pg_current_wal_insert_lsn

---------------------------

0/03018CA8

(1 row)

As WAL replay on the replica is paused, the “WAIT FOR” command will now block:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "WAIT FOR LSN '0/03018CA8'"

This is the intended behavior as we want to make sure that we can see all the data up to this LSN. Once we resume WAL replay on the replica the “WAIT FOR” command will return success as all the data reached the replica:

postgres@:/home/postgres/ [DEV] psql -p 8888 -c "select * from pg_wal_replay_resume();"

pg_wal_replay_resume

----------------------

(1 row)

… the other session will unblock:

postgres@:/home/postgres/ [pgdev] psql -p 8888 -c "WAIT FOR LSN '0/03018CA8'"

status

---------

success

(1 row)

So, starting with PostgreSQL 19 next year, there is a way for applications to make sure that a replica reached all the data up to a specific LSN by blocking until the data is there.
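To make the blocking condition a bit more concrete, here is a minimal Python sketch of how LSNs like the ones above compare. It assumes the usual textual LSN format (two hexadecimal numbers separated by a slash, the high and low 32 bits of a 64-bit WAL position). The helper names lsn_to_int and replica_has_reached are made up for this illustration and are not part of PostgreSQL.

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a textual LSN such as '0/03018CA8' into a 64-bit integer."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replica_has_reached(replay_lsn: str, target_lsn: str) -> bool:
    """True once the replica has replayed WAL up to (or past) target_lsn."""
    return lsn_to_int(replay_lsn) >= lsn_to_int(target_lsn)


# Values taken from the session above: the last replay_lsn shown was
# 0/03000060, and the insert on the primary ended at 0/03018CA8.
target = "0/03018CA8"
print(replica_has_reached("0/03000060", target))      # False: WAIT FOR would block
print(replica_has_reached("0/03018CA8", target))      # True: WAIT FOR would return
print(lsn_to_int(target) - lsn_to_int("0/03000060"))  # 101448 bytes of WAL still to replay
```

This only mirrors the comparison conceptually; the server of course tracks the replay position itself while the session waits.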

The article PostgreSQL 19: The “WAIT FOR” command first appeared on dbi Blog.

Setting up TLS encryption and authentication in MongoDB

When securing a MongoDB deployment, protecting sensitive data is paramount. MongoDB supports encryption throughout the lifecycle of the data, with three primary types of data encryption:

- Encryption in transit

- Encryption at rest

- Encryption in use

Among these, encryption in transit is fundamental: it protects data as it moves between your application and the database. In MongoDB, this is achieved through TLS (Transport Layer Security), which ensures that communication remains private and secure. You have two options when it comes to using TLS for your database:

- Using TLS for encryption only.

- Using TLS both for encryption and authentication to the database.

We’ll first create a Certificate Authority. These certificates will be self-signed, which is fine for testing, but you shouldn’t use self-signed certificates in a production environment! On Linux, use the openssl command-line tool to generate the certificates.

openssl req -newkey rsa:4096 -nodes -x509 -days 365 -keyout ca.key -out ca.pem -subj "/C=CH/ST=ZH/L=Zurich/O=dbi/OU=MongoDBA/CN=vm.domain.com"

Here is a description of some important parameters of the command:

- -newkey rsa:4096: Generates a new private key and a certificate request using RSA with a 4096-bit key size.

- -nodes: Skips password encryption of the private key. Without it, OpenSSL would prompt you to set a passphrase.

- -x509: Generates a self-signed certificate. x509 is supported by MongoDB.

- -days 365: Validity of the certificate in days.

- -keyout ca.key: Filename for the private key.

- -out ca.pem: Filename for the certificate.

- -subj "...": Provides the subject’s Distinguished Name (DN). If you don’t specify it, OpenSSL will prompt for each field.

Then, we’ll create the server certificate for the MongoDB instance. In the openssl-server.cnf file below, you should change the req_distinguished_name fields to match what you used while creating the Certificate Authority, and replace myVM with the name of your machine.

If you only have an IP and no DNS entry for your VM, use IP.1 instead of DNS.1 in the alt_names section.
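The choice between DNS.1 and IP.1 can be sketched in a few lines of Python. This is only an illustration: the function name alt_names_section is hypothetical, and it handles a single host entry, which is all this setup uses.

```python
import ipaddress


def alt_names_section(host: str) -> str:
    """Render the [ alt_names ] section for a single host name or IP address."""
    try:
        ipaddress.ip_address(host)
        key = "IP.1"    # the value parses as an IPv4 or IPv6 address
    except ValueError:
        key = "DNS.1"   # anything else is treated as a DNS name
    return f"[ alt_names ]\n{key} = {host}\n"


print(alt_names_section("myVM"))
print(alt_names_section("192.0.2.10"))
```

The address 192.0.2.10 is just a documentation placeholder; use the address your clients actually connect to, since that is the value they will compare against the certificate.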

cat > openssl-server.cnf <<EOF

[ req ]

distinguished_name = req_distinguished_name

req_extensions = v3_req

prompt = no

[ req_distinguished_name ]

C = CH

ST = ZH

L = Zurich

O = dbi

OU = MongoDBA

CN = myVM

[ v3_req ]

keyUsage = digitalSignature, keyEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[ alt_names ]

DNS.1 = myVM

EOF

Then, generate the certificate with these commands:

openssl req -newkey rsa:4096 -nodes -keyout mongodb-server.key -out mongodb-server.csr -config openssl-server.cnf

openssl x509 -req -in mongodb-server.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out mongodb-server.crt -days 365 -extensions v3_req -extfile openssl-server.cnf

cat mongodb-server.key mongodb-server.crt > mongodb-server.pem

Finally, we’ll create a client certificate. The process is the same, with a few tweaks:

- OU should be different from the one in the server certificate. It is not mandatory for the communication, but it will be for the authentication if you decide to enable it.

- CN should also be different.

- extendedKeyUsage should be set to clientAuth instead of serverAuth.

cat > openssl-client.cnf <<EOF

[ req ]

distinguished_name = req_distinguished_name

req_extensions = v3_req

prompt = no

[ req_distinguished_name ]

C = CH

ST = ZH

L = Zurich

O = dbi

OU = MongoDBAClient

CN = userApp

[ v3_req ]

keyUsage = digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

EOF

The creation of the certificate is the same.

openssl req -newkey rsa:4096 -nodes -keyout mongodb-client.key -out mongodb-client.csr -config openssl-client.cnf

openssl x509 -req -in mongodb-client.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out mongodb-client.crt -days 365 -extensions v3_req -extfile openssl-client.cnf

cat mongodb-client.key mongodb-client.crt > mongodb-client.pem

Make sure to set permissions correctly for your certificates.

chmod 600 ca.pem mongodb-server.pem mongodb-client.pem

chown mongod: ca.pem mongodb-server.pem mongodb-client.pem

Now, you can change your MongoDB configuration file to include the certificates. Simply add the net.tls part to your mongod.conf file.

net:

  bindIp: yourIP

  port: 27017

  tls:

    mode: requireTLS

    certificateKeyFile: /path/to/mongodb-server.pem

    CAFile: /path/to/ca.pem

You can now restart your MongoDB instance with systemctl restart mongod (or whatever you’re using), and then try to connect to your instance from your client. Of course, the port mentioned in the net.port field of your configuration file shouldn’t be blocked by your firewall.

> mongosh --host myVM --port 27017 --tls --tlsCertificateKeyFile mongodb-client.pem --tlsCAFile ca.pem

Current Mongosh Log ID: 682c9641bbe4593252ee7c8c

Connecting to: mongodb://vmIP:27017/?directConnection=true&tls=true&tlsCertificateKeyFile=Fclient.pem&tlsCAFile=ca.pem&appName=mongosh+2.5.1

Using MongoDB: 8.0.9

Using Mongosh: 2.5.1

For mongosh info see: https://www.mongodb.com/docs/mongodb-shell/

test>

You’re now connected to your MongoDB instance through TLS! And if you’re not using the certificate, the requireTLS mode prevents the connection from being established, and generates this error message in your logs:

{"t":{"$date":"2025-05-21T04:43:55.277+00:00"},"s":"I", "c":"EXECUTOR", "id":22988, "ctx":"conn52","msg":"Error receiving request from client. Ending connection from remote","attr":{"error":{"code":141,"codeName":"SSLHandshakeFailed","errmsg":"The server is configured to only allow SSL connections"},"remote":"IP:50100","connectionId":52}}

If you want to learn more about MongoDB logs, I wrote a blog on this topic: MongoDB Log Analysis : A Comprehensive Guide.
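Since mongod writes its log as one JSON document per line, spotting these rejected connections is easy to script. Here is a small Python sketch using the log line above as sample data; the function name handshake_failure is hypothetical, and the field names are simply the ones visible in that line.

```python
import json

# Log line copied from the example above.
LOG_LINE = '{"t":{"$date":"2025-05-21T04:43:55.277+00:00"},"s":"I", "c":"EXECUTOR", "id":22988, "ctx":"conn52","msg":"Error receiving request from client. Ending connection from remote","attr":{"error":{"code":141,"codeName":"SSLHandshakeFailed","errmsg":"The server is configured to only allow SSL connections"},"remote":"IP:50100","connectionId":52}}'


def handshake_failure(line: str):
    """Return (timestamp, remote) if the line reports a failed TLS handshake, else None."""
    entry = json.loads(line)
    error = entry.get("attr", {}).get("error", {})
    if error.get("codeName") != "SSLHandshakeFailed":
        return None
    return entry["t"]["$date"], entry["attr"].get("remote")


print(handshake_failure(LOG_LINE))
```

In practice you would loop over the lines of your mongod log file and keep the non-None results, for instance to see which clients still try to connect without TLS.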

Setting up TLS authentication

Now that you’re connected, we will set up authentication so that you can connect to MongoDB as a specific user. Using the already established connection, create a user in the $external database. Each client certificate that you create can be mapped to one MongoDB user. Retrieve the username that you will use:

> openssl x509 -in mongodb-client.pem -inform PEM -subject -nameopt RFC2253 | grep subject

subject=CN=userApp,OU=MongoDBA,O=dbi,L=Zurich,ST=ZH,C=CH

And then create the user in the $external database, using the existing MongoDB connection:

test> db.getSiblingDB("$external").runCommand({

createUser: "CN=userApp,OU=MongoDBA,O=dbi,L=Zurich,ST=ZH,C=CH",

roles: [

{ role: "userAdminAnyDatabase", db: "admin" }

]

});

To check that everything works as intended, you can try to display collections in the admin database. For the moment, there is no error because you have all the rights to do it (no authorization is enforced).

test> use admin

switched to db admin

admin> show collections;

system.users

system.version

You can now edit the MongoDB configuration file by setting net.tls.allowConnectionsWithoutCertificates to false (so that clients must present a certificate) and the security.authorization flag to enabled. The mongod.conf file should look like this:

net:

  bindIp: X.X.X.X

  port: XXXXX

  tls:

    mode: requireTLS

    certificateKeyFile: /path/to/mongodb-server.pem

    CAFile: /path/to/ca.pem

    allowConnectionsWithoutCertificates: false

security:

  authorization: enabled

After restarting with systemctl restart mongod, you can now connect again. If you use the same command as before, you will log in without authentication, and get the error below whenever you try to do anything:

MongoServerError[Unauthorized]: Command listCollections requires authentication

So you should now connect via this command:

mongosh --host vmIP --port 27017 --tls --tlsCertificateKeyFile mongodb-client.pem --tlsCAFile ca.pem --authenticationDatabase '$external' --authenticationMechanism MONGODB-X509

If you want to show the admin collections, you will now get an error, because your user only has the userAdminAnyDatabase role granted (this role was chosen during the user creation, see above).

admin> show collections

MongoServerError[Unauthorized]: not authorized on admin to execute command { listCollections: 1, filter: {}, cursor: {}, nameOnly: true, authorizedCollections: false, lsid: { id: UUID("9c48b4c4-7702-49ce-a97c-52763b2ad6b3") }, $db: "admin" }

But it’s fine, you can grant yourself more roles (readWriteAnyDatabase, for instance) and create new users if you want.

The communication between the client and the server is now fully secured. Congratulations !
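If your application connects with a connection string rather than command-line flags, the same settings can be expressed as URI options. The sketch below builds such a string with the Python standard library only. To my understanding tls, tlsCertificateKeyFile, tlsCAFile, authMechanism and authSource are the standard connection string options matching the mongosh flags used above, but check that your driver accepts all of them; the function name x509_uri is hypothetical.

```python
from urllib.parse import urlencode


def x509_uri(host: str, port: int, key_file: str, ca_file: str) -> str:
    """Build a connection string equivalent to the mongosh options used above."""
    options = {
        "tls": "true",
        "tlsCertificateKeyFile": key_file,
        "tlsCAFile": ca_file,
        "authMechanism": "MONGODB-X509",
        "authSource": "$external",   # urlencode escapes the "$" as %24
    }
    return f"mongodb://{host}:{port}/?{urlencode(options)}"


print(x509_uri("myVM", 27017, "mongodb-client.pem", "ca.pem"))
```

Note that no user name or password appears in the string: with MONGODB-X509, the identity comes from the subject of the client certificate.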

MongoServerError[BadValue]

Side note: if you ever encounter this error:

MongoServerError[BadValue]: Cannot create an x.509 user with a subjectname that would be recognized as an internal cluster member

… make sure to follow the RFC-2253 standards. For instance, you could have this error if one of the fields is too long. Also, as a reminder, the client certificate should have a different Distinguished Name (DN) than the server certificate (see documentation for more information).

The article Setting up TLS encryption and authentication in MongoDB first appeared on dbi Blog.

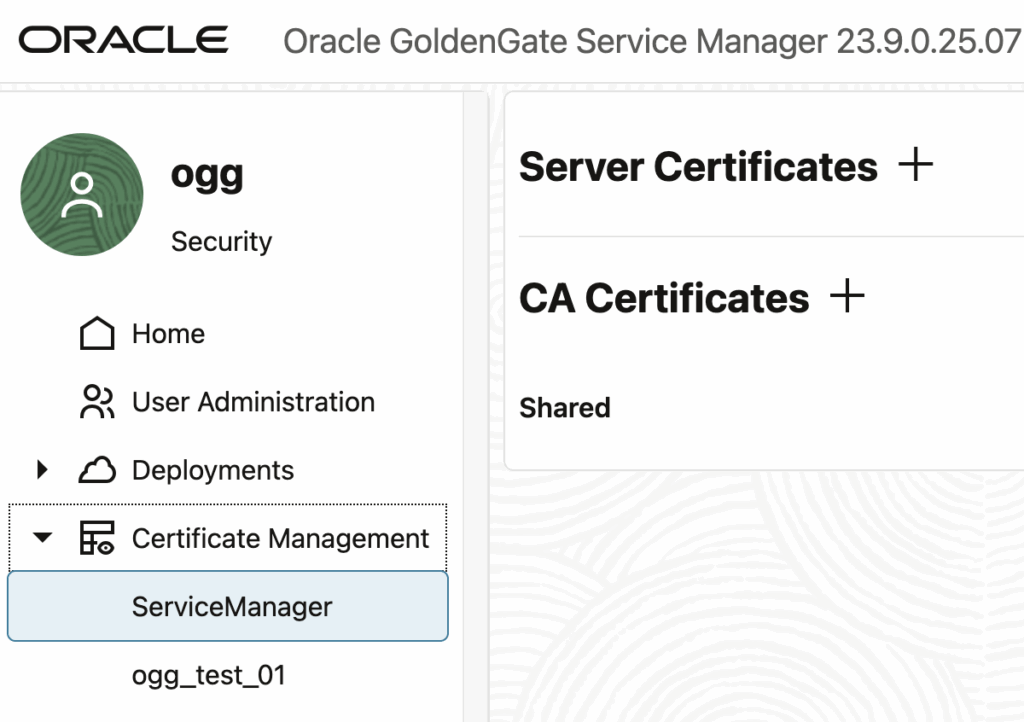

Securing an Existing Unsecure GoldenGate Installation

You might have an existing unsecure GoldenGate installation that you would like to secure, whether it’s for security reasons or because you would like to separate the installation from the securing process. After searching everywhere in the Oracle documentation for how to proceed, I decided to try, investigate and eventually even ask Oracle directly. Here is the answer.

For a TL;DR version of the answer, please go to the end of the blog, but in the meantime, here was my reasoning.

Setup differences between a secure and unsecure GoldenGate installation

Installation differences

From an installation perspective, the difference between a secure and unsecure installation is narrow. I talked earlier about graphic and silent GoldenGate installations, and for the silent installation, the following response file parameters are the only ones involved in this security aspect:

# SECTION C - SERVICE MANAGER

SECURITY_ENABLED=false

# SECTION H - SECURITY

TLS_1_2_ENABLED=false

TLS_1_3_ENABLED=false

FIPS_ENABLED=false

SERVER_CERTIFICATE=

SERVER_CERTIFICATE_KEY_FILE=

SERVER_CA_CERTIFICATES_FILE=

CLIENT_CERTIFICATE=

CLIENT_CERTIFICATE_KEY_FILE=

CLIENT_CA_CERTIFICATES_FILE=

The *_ENABLED parameters are just flags that should be set to true to secure the installation (at least for SECURITY_ENABLED and one TLS parameter), and then you need to provide the certificate files (client and server, three for each).

To summarize, there is not much you have to do to configure a secure GoldenGate setup. So it shouldn’t be that difficult to enable these security features after installation: one flag, and a few certificates.
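To make the rule explicit, here is a small Python sketch that reads these parameters from a response file and lists what is still missing for a secure setup. It only encodes the rule stated above (SECURITY_ENABLED, at least one TLS flag, and the six certificate files); the function names are hypothetical and this is not an Oracle tool.

```python
def parse_response_file(text: str) -> dict:
    """Parse KEY=VALUE lines, ignoring blank lines and '#' comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


def security_problems(settings: dict) -> list:
    """List the reasons why the settings do not describe a secure installation."""
    problems = []
    if settings.get("SECURITY_ENABLED", "false").lower() != "true":
        problems.append("SECURITY_ENABLED is not true")
    tls_flags = ("TLS_1_2_ENABLED", "TLS_1_3_ENABLED")
    if not any(settings.get(flag, "false").lower() == "true" for flag in tls_flags):
        problems.append("no TLS version is enabled")
    for side in ("SERVER", "CLIENT"):
        for suffix in ("CERTIFICATE", "CERTIFICATE_KEY_FILE", "CA_CERTIFICATES_FILE"):
            key = f"{side}_{suffix}"
            if not settings.get(key):
                problems.append(f"{key} is empty")
    return problems


sample = """
# SECTION H - SECURITY
SECURITY_ENABLED=false
TLS_1_2_ENABLED=false
TLS_1_3_ENABLED=false
SERVER_CERTIFICATE=
"""
for problem in security_problems(parse_response_file(sample)):
    print(problem)
```

Run against the unsecure values shown above, every check fails, which is exactly the list of things to fill in for a secure installation.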

Configuration differences

From a configuration perspective, there are not many differences either. Looking at the deploymentConfiguration.dat file for both secure and unsecure service managers, the only difference lies in the SecurityManager.config.securityDetails section. After cleaning what is similar, here are the differences:

# Secure installation

"securityDetails": {

"network": {

"common": {

"fipsEnabled": false,

},

"inbound": {

"authMode": "clientOptional_server",

"cipherSuites": [

"TLS_AES_256_GCM_SHA384",

"TLS_AES_128_GCM_SHA256",

"TLS_CHACHA20_POLY1305_SHA256"

],

"protocolVersion": "TLS_ALL"

},

"outbound": {

"authMode": "clientOptional_server",

}

}

},

# Unsecure installation

"securityDetails": {

"network": {

"common": {

"fipsEnabled": false,

},

"inbound": {

"authMode": "clientOptional_server",

"cipherSuites": "^((?!anon|RC4|NULL|3DES).)*$",

},

"outbound": {

"authMode": "client_server",

}

}

},

Basically, securityDetails.network.outbound.authMode is set to clientOptional_server on one side, and client_server on the other. The unsecure configuration also has a different securityDetails.network.inbound.cipherSuites parameter, and lacks the securityDetails.network.inbound.protocolVersion parameter.
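If you want to run this kind of comparison yourself, a few lines of Python are enough. The sketch below flattens two nested structures and prints the paths that differ. The two dictionaries are typed in by hand from the excerpts above; I am not parsing the real deploymentConfiguration.dat files here, and the helper names are hypothetical.

```python
def flatten(node: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into {'a.b.c': value} pairs."""
    flat = {}
    for key, value in node.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def differing_paths(left: dict, right: dict) -> list:
    """Return the sorted paths whose values differ or exist on one side only."""
    a, b = flatten(left), flatten(right)
    return sorted(path for path in a.keys() | b.keys() if a.get(path) != b.get(path))


secure = {"network": {
    "common": {"fipsEnabled": False},
    "inbound": {"authMode": "clientOptional_server",
                "cipherSuites": ["TLS_AES_256_GCM_SHA384",
                                 "TLS_AES_128_GCM_SHA256",
                                 "TLS_CHACHA20_POLY1305_SHA256"],
                "protocolVersion": "TLS_ALL"},
    "outbound": {"authMode": "clientOptional_server"}}}
unsecure = {"network": {
    "common": {"fipsEnabled": False},
    "inbound": {"authMode": "clientOptional_server",
                "cipherSuites": "^((?!anon|RC4|NULL|3DES).)*$"},
    "outbound": {"authMode": "client_server"}}}

for path in differing_paths(secure, unsecure):
    print(path)
```

With the values above, it prints network.inbound.cipherSuites, network.inbound.protocolVersion and network.outbound.authMode, the three differences discussed here.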

But nothing in the configuration points to the wallet files, located in $OGG_ETC_HOME/ssl. So, how can we add them here?

When connecting to an unsecure GoldenGate service manager, you still have the ability to add and manage certificates from the UI, the same way you would do on a secure installation:

It is unfortunate, but just adding the certificates from the UI doesn’t make your installation secure. In fact, it still doesn’t work even after modifying the deploymentConfiguration.dat files, which, as described above, are the last missing piece of the configuration. You will only end up with a broken installation, even when doing the same with all your deployments and restarting everything.

So, is there any way to secure an existing installation? Unfortunately, not at this point. This was confirmed earlier this week on the MOSC forums by Gopal Gaur, Senior Principal Software Engineer working on GoldenGate at Oracle.

You can not convert non secure deployment into secure deployment, you will need a new service manager that supports sever side SSL/TLS.

You can not convert non secure deployment into secure deployment at this stage, we have an opened enhancement for this.

To wrap up, bad news: it is not possible to secure an existing GoldenGate installation, but good news, Oracle is apparently working on it. In the meantime, just re-install GoldenGate…

The article Securing an Existing Unsecure GoldenGate Installation first appeared on dbi Blog.

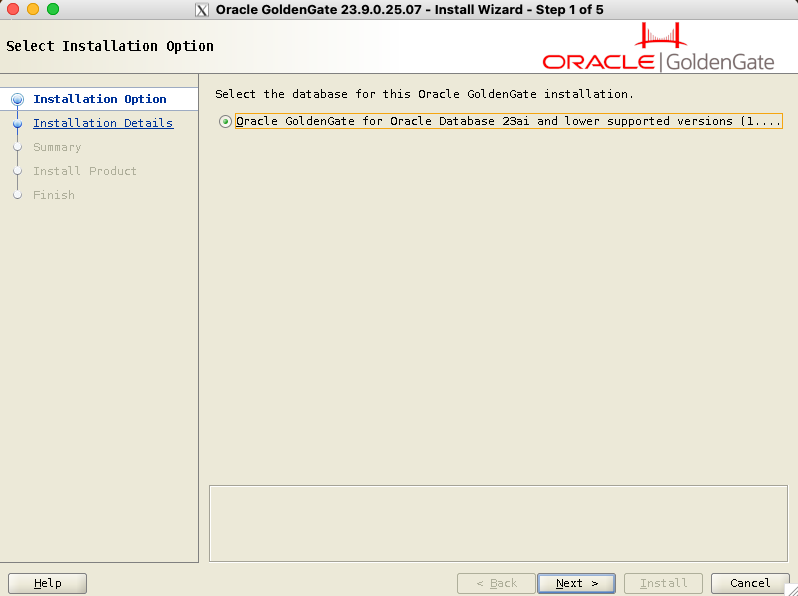

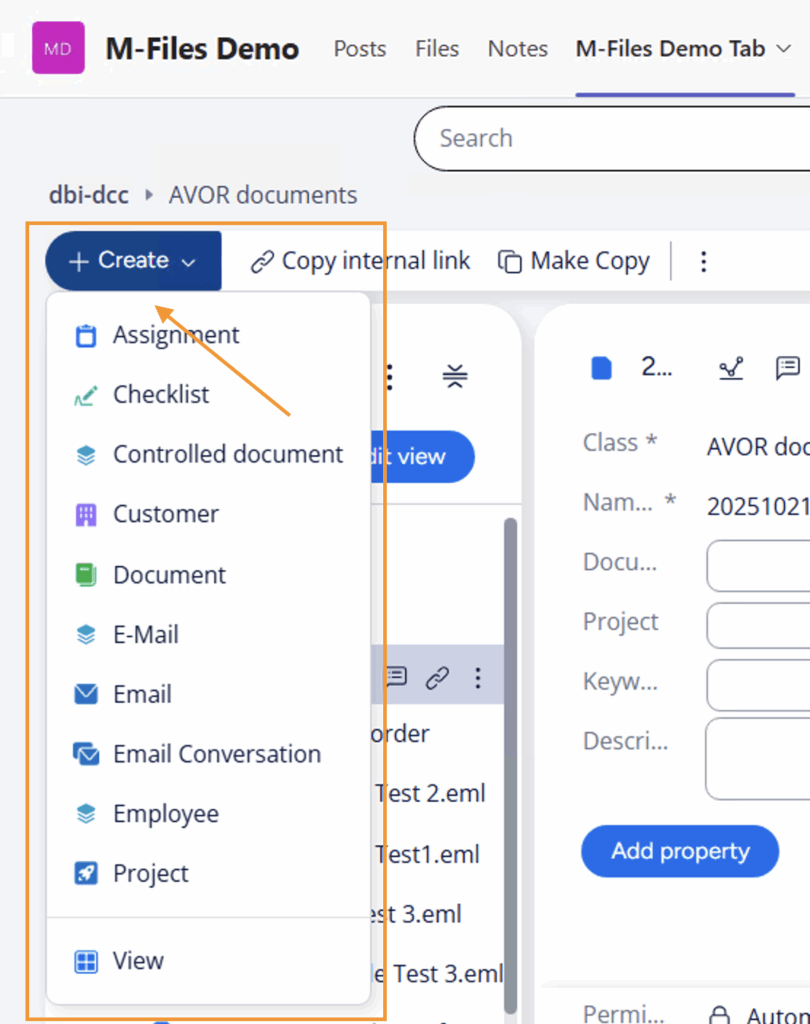

GoldenGate 23ai Installation: Graphic and Silent Mode Comparison for Automation

Automating Oracle installations can sometimes be daunting, given the long list of parameters available. We’ll compare both graphic and silent installations of GoldenGate 23ai, focusing on building minimalist response files for automation purposes.

You can set up GoldenGate in two different ways:

- From the base archive, available on eDelivery (V1042871-01.zip for Linux x86-64, for instance)

- From the patched archive, updated quarterly and available on the Oracle Support. At the time of writing of this blog, GoldenGate 23.9 is the latest version available (23.10, now called 23.26, was announced but not released yet). You can find the MOS Document 3093376.1 on the subject, or 2193391.1 for general patching information on GoldenGate. Patch 38139663 is the completely patched installation (we will use this one in the blog), while patch 38139662 is the patch-only archive, applied on an existing GoldenGate installation.

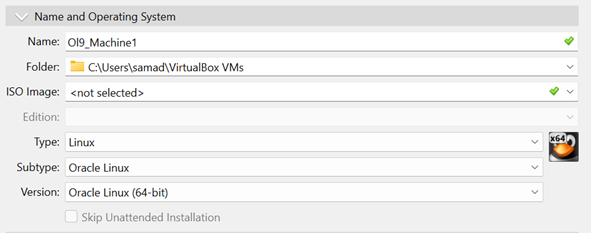

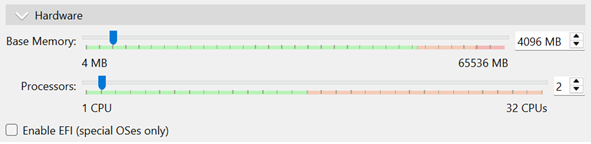

For the purpose of this installation, we will use the oracle-database-preinstall rpm, even if we don’t need all the things it brings. If you plan on installing GoldenGate on an existing Oracle database server, Oracle recommends using a separate user. We will keep oracle here.

[root@vmogg ~] dnf install -y oracle-database-preinstall-23ai

[root@vmogg ~] mkdir -p /u01/stage

[root@vmogg ~] chown oracle:oinstall -R /u01

With the oracle user created through the rpm installation, unzip the GoldenGate source file into a stage area:

[root@vmogg ~] su - oracle

[oracle@vmogg ~] cd /u01/stage

[oracle@vmogg stage] unzip -oq p38139663_23902507OGGRU_Linux-x86-64.zip -d /u01/stage/

Running the graphic installation of GoldenGate is not any different from what you would do with an Oracle database installation.

After setting up X11 display (out of the scope of this blog), you should first define the OGG_HOME variable to the location of the GoldenGate installation and then run the installer:

[oracle@vmogg ~]$ export OGG_HOME=/u01/app/oracle/product/ogg23ai

[oracle@vmogg ~]$ /u01/stage/fbo_ggs_Linux_x64_Oracle_services_shiphome/Disk1/runInstaller

Bug: depending on the display options you have, you might have a color mismatch on the GoldenGate installation window, most of it appearing black (see this image). If this happens, run the following command before launching the installation: export _JAVA_OPTIONS="-Dsun.java2d.xrender=false"

Just click Next on the first step. Starting from GoldenGate 23ai, Classic Architecture was desupported, so you don’t have to worry anymore about which architecture to choose. The Microservices Architecture is the only choice now.

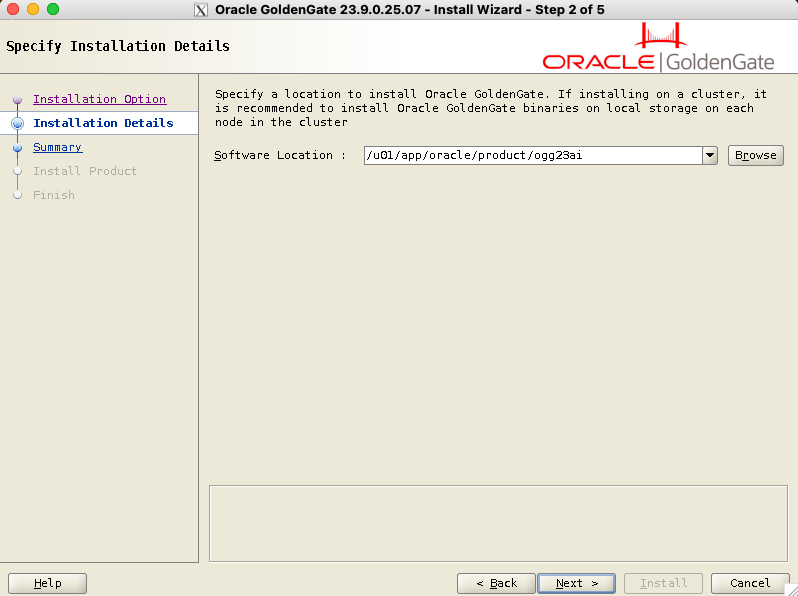

Fill in the software location for your installation of GoldenGate. This will match the OGG_HOME environment variable. If the variable is set prior to launching the runInstaller, the software location is filled automatically.

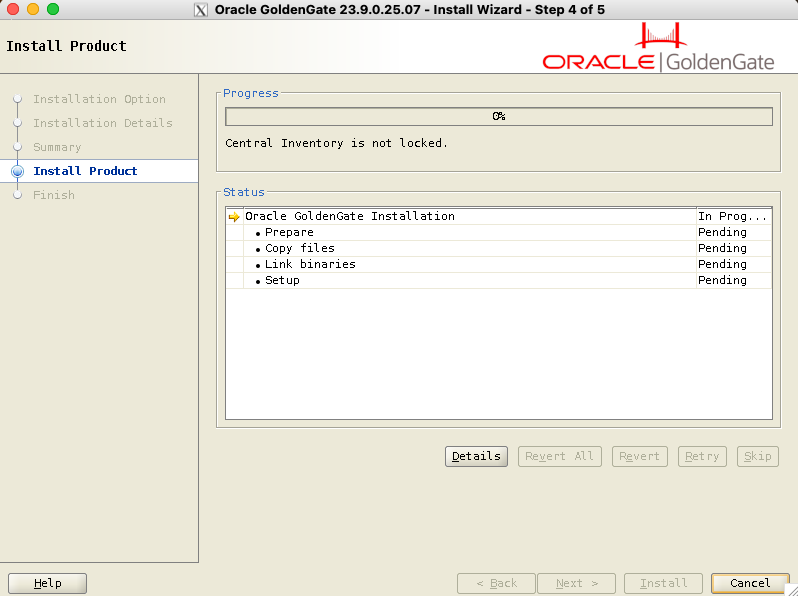

Step 3 is just a summary of the installation. You can save the response file at this stage and use it later to standardize your installations with the silent installation described below. Then, click on Install.

After a few seconds, the installation is complete, and you can exit the installer.

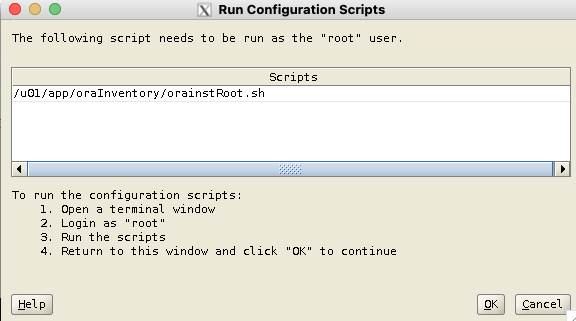

If it’s your first Oracle-related installation on this server, you might have to run the /u01/app/oraInventory/orainstRoot.sh script as root when prompted to do so.

[root@vmogg ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

The binary installation is complete.

Installing the Service Manager and the First Deployment


Once the binaries are installed, with the same oracle X11 terminal, run the oggca.sh script located in the $OGG_HOME/bin directory:

[oracle@vmogg ~]$ export OGG_HOME=/u01/app/oracle/product/ogg23ai

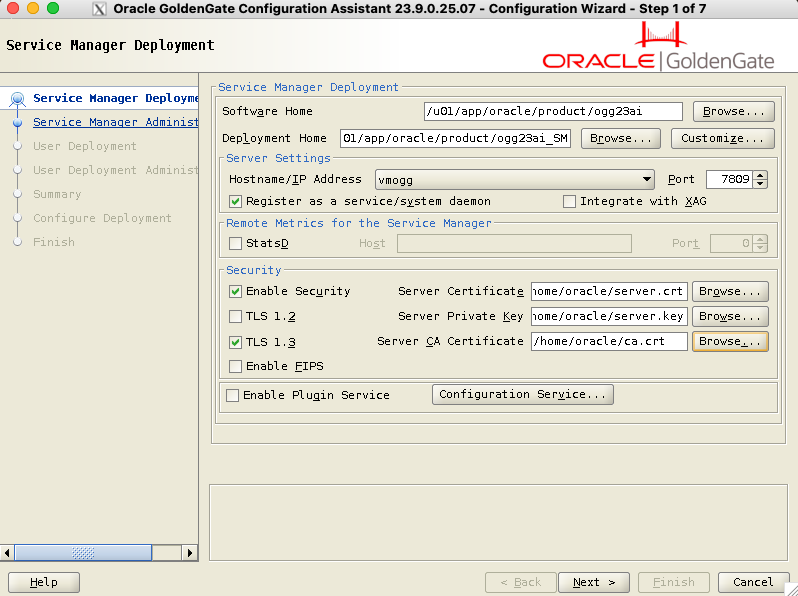

[oracle@vmogg ~]$ $OGG_HOME/bin/oggca.sh

On the first step (see below), you will have:

- Software Home, which contains the GoldenGate binaries, also called $OGG_HOME

- Deployment Home, filled with the location of the service manager directory (and not the GoldenGate deployment, thank you Oracle for this one…).

- Port (default is 7809) of the service manager. This will be the main point of entry for the web UI.

- Register as a service/system daemon, if you want GoldenGate to be a service on your server.

- Integrate with XAG, for a GoldenGate RAC installation (out of the scope of this blog).

- Enable Security, with the associated certificates and key. You can leave this unchecked if you just want to test the GoldenGate installation process.

- We leave the rest unchecked.

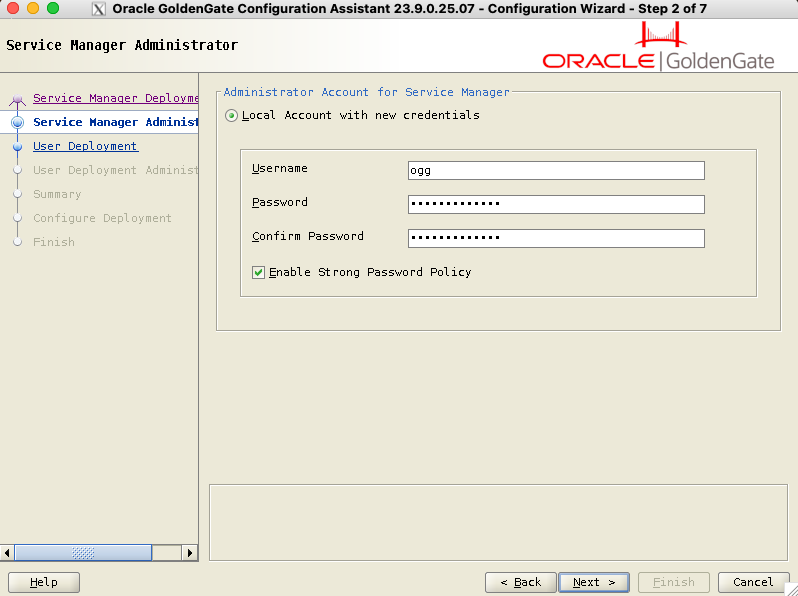

Next, fill in the credentials for the service manager. Enabling Strong Password Policy will force you to enter a secure password.

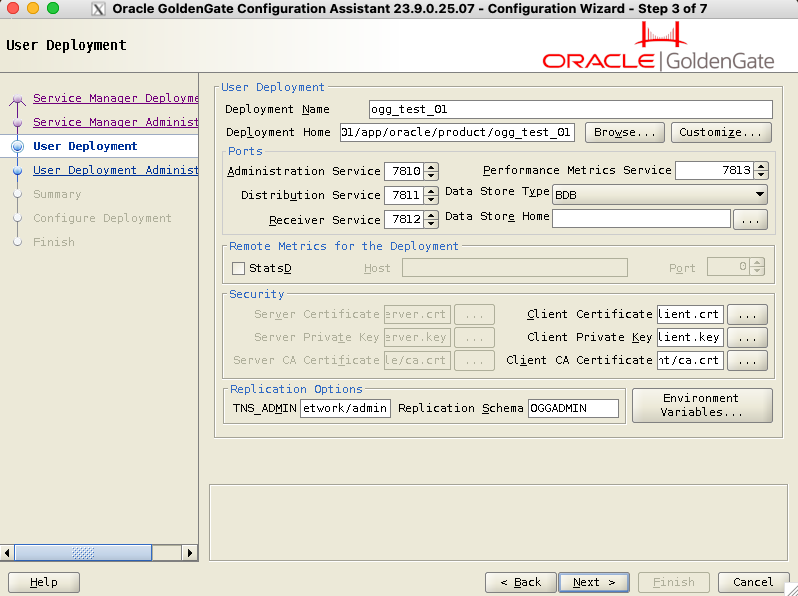

In previous versions of GoldenGate, you could first set up the service manager and wait before configuring your first deployment. It is now mandatory to set up the first deployment:

- Deployment Name: ogg_test_01 for this installation. It is not just cosmetic: you will refer to this name when connecting, both in the adminclient and on the Web UI.

- Deployment Home: Path to the deployment home. Logs, trail files and configuration will sit there.

- Ports: Four ports need to be filled here. I would recommend using the default ports for the first deployment (7810, 7811, 7812 and 7813), or ports following the service manager port (7809). For a second deployment, you can continue with the following ports (7814, 7815, 7816, 7817), or keep the same units digit (7820, 7821, 7822, 7823) for a better understanding of your GoldenGate infrastructure.

- Remote Metrics for the Deployment: out of the scope of this blog, not needed for a basic GoldenGate installation.

- Security: If you secured your service manager earlier in the previous step, you should secure your deployment here, providing keys and certificates.

- Replication Options: TNS_ADMIN could already be filled, otherwise just specify its path. GoldenGate will look for TNS entries here. You should also fill in the replication schema name.
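The two port numbering schemes mentioned in the Ports item are easy to compute if you automate several deployments. Here is a minimal Python sketch; the function name deployment_ports and its parameters are my own, and the keys simply reuse the PORT_* names of the oggca response file.

```python
def deployment_ports(index: int, sm_port: int = 7809, aligned: bool = False) -> dict:
    """Return the four service ports for the index-th deployment (1-based).

    aligned=False packs deployments right after the service manager port
    (7810-7813, 7814-7817, ...); aligned=True starts each deployment on a
    multiple of ten (7810-7813, 7820-7823, ...).
    """
    if index < 1:
        raise ValueError("index starts at 1")
    services = ("PORT_ADMINSRVR", "PORT_DISTSRVR", "PORT_RCVRSRVR", "PORT_PMSRVR")
    if aligned:
        first = (sm_port // 10 + index) * 10
    else:
        first = sm_port + 1 + 4 * (index - 1)
    return {name: first + offset for offset, name in enumerate(services)}


print(deployment_ports(1))
print(deployment_ports(2))
print(deployment_ports(2, aligned=True))
```

Both schemes give the same ports for the first deployment; they only diverge from the second one onwards (7814 to 7817 versus 7820 to 7823).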

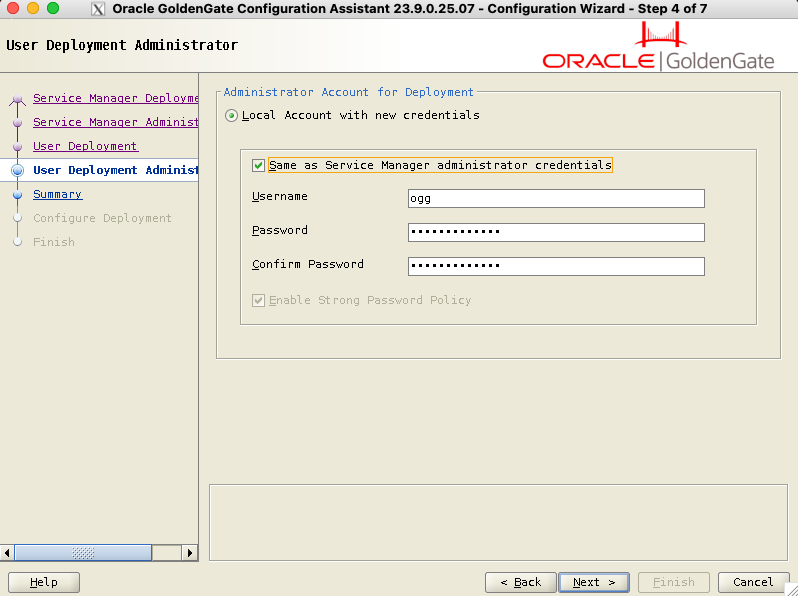

Next, fill in the credentials for the deployment. They can be different from the service manager credentials, or you can check the box to keep the same credentials.

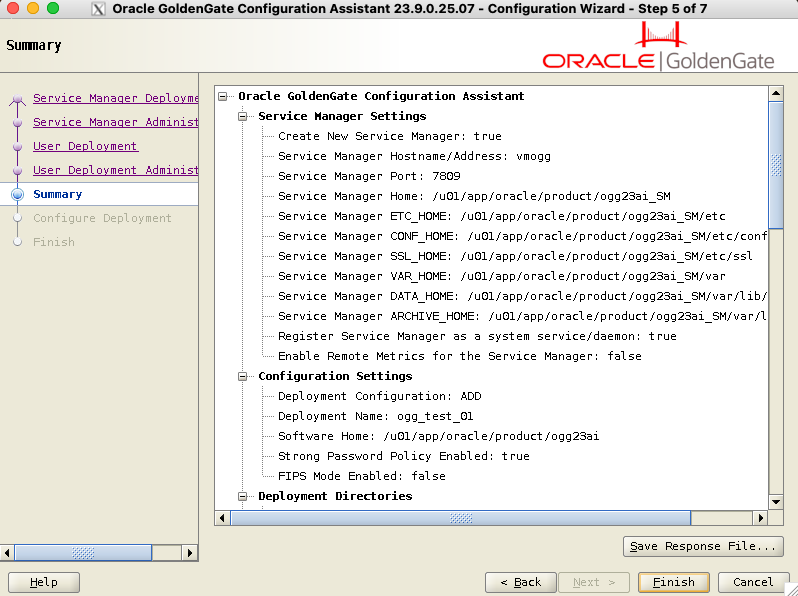

In the summary screen, review your configuration, and save the response file for later if required. Click on Finish to start the installation.

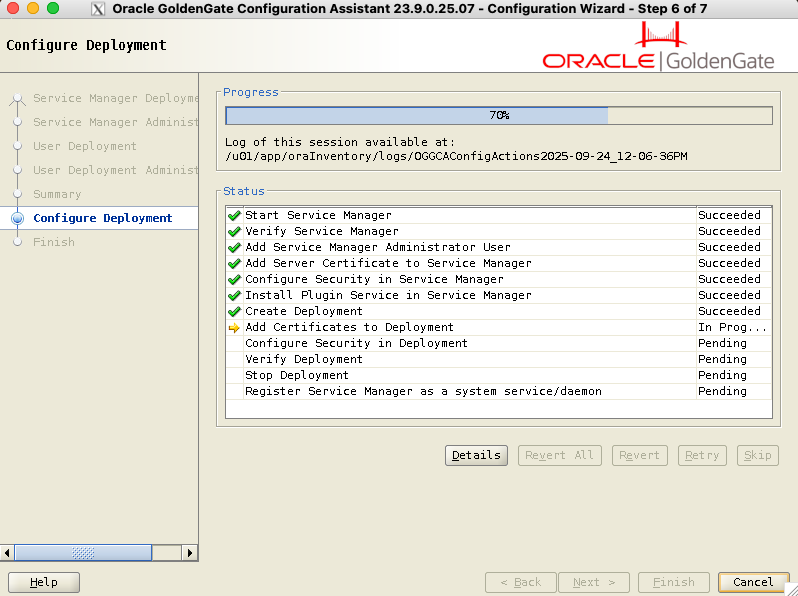

The installation should take a few seconds:

That’s it, you have successfully installed GoldenGate 23ai ! Go to the web UI section for how to connect to your GoldenGate environment.

Installing GoldenGate with the CLI (silent installation)

Installing GoldenGate binaries

To perform the GoldenGate installation process in silent mode, you can either use a response file containing the arguments needed for the installation or give these arguments on the command line.

For the GoldenGate binaries installation, create an oggcore_23ai.rsp file, changing SOFTWARE_LOCATION, INVENTORY_LOCATION and UNIX_GROUP_NAME as needed:

[oracle@vmogg ~]$ cat oggcore_23ai.rsp

oracle.install.responseFileVersion=/oracle/install/rspfmt_ogginstall_response_schema_v23_1_0

INSTALL_OPTION=ORA23ai

SOFTWARE_LOCATION=/u01/app/oracle/product/ogg23ai

INVENTORY_LOCATION=/u01/app/oraInventory

UNIX_GROUP_NAME=oinstall

Then, run the installer with the -silent and -responseFile options:

[oracle@vmogg ~]$ /u01/stage/fbo_ggs_Linux_x64_Oracle_services_shiphome/Disk1/runInstaller -silent -responseFile /home/oracle/oggcore_23ai.rsp

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 17094 MB Passed

Checking swap space: must be greater than 150 MB. Actual 4095 MB Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2025-09-24_09-53-23AM. Please wait ...

You can find the log of this install session at:

/u01/app/oraInventory/logs/installActions2025-09-24_09-53-23AM.log

As a root user, run the following script(s):

1. /u01/app/oraInventory/orainstRoot.sh

Successfully Setup Software.

The installation of Oracle GoldenGate Services was successful.

Please check '/u01/app/oraInventory/logs/silentInstall2025-09-24_09-53-23AM.log' for more details.

Same thing as with the graphic installation: if it’s the first time you run an Oracle-related installation on this server, run the orainstRoot.sh script as root:

[root@vmogg ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

Once the binaries are installed, run the oggca.sh script with the response file corresponding to the service manager and deployment that you want to create. The content of the response file oggca.rsp should be adapted to your needs, but I integrated a full example in the appendix below.

[oracle@vmogg ~]$ /u01/app/oracle/product/ogg23ai/bin/oggca.sh -silent -responseFile /home/oracle/oggca.rsp

As part of the process of registering Service Manager as a system daemon, the following steps will be performed:

1- The deployment will be stopped before registering the Service Manager as a daemon.

2- A new popup window will show the details of the script used to register the Service Manager as a daemon.

3- After the register script is executed, the Service Manager daemon will be started in the background and the deployment will be automatically restarted.

Click "OK" to continue.

In order to register Service Manager as a system service/daemon, as a "root" user, execute the following script:

(1). /u01/app/oracle/product/ogg23ai_SM/bin/registerServiceManager.sh

To execute the configuration scripts:

1.Open a terminal window

2.Login as "root"

3.Run the script

Successfully Setup Software.

If you asked for the creation of a service, then run the following command as root:

[root@vmogg ~]# /u01/app/oracle/product/ogg23ai_SM/bin/registerServiceManager.sh

Copyright (c) 2017, 2024, Oracle and/or its affiliates. All rights reserved.

----------------------------------------------------

Oracle GoldenGate Install As Service Script

----------------------------------------------------

OGG_HOME=/u01/app/oracle/product/ogg23ai

OGG_CONF_HOME=/u01/app/oracle/product/ogg23ai_SM/etc/conf

OGG_VAR_HOME=/u01/app/oracle/product/ogg23ai_SM/var

OGG_USER=oracle

Running OracleGoldenGateInstall.sh...

Created symlink /etc/systemd/system/multi-user.target.wants/OracleGoldenGate.service → /etc/systemd/system/OracleGoldenGate.service.

Successfully Setup Software.

Warning: If you plan on automating a GoldenGate installation and setup, make sure response files can only be read by the oracle user, and scrub or delete them after use if they have to contain passwords in plain text.
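As an illustration of this warning, here is a minimal Python sketch that writes a response file readable by its owner only, checks the permissions, and deletes the file afterwards. It assumes a POSIX system; the helper names write_private and is_private are hypothetical, and the file content is just a placeholder.

```python
import os
import stat
import tempfile


def write_private(path: str, content: str) -> None:
    """Create (or overwrite) a file readable and writable by its owner only."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(content)
    os.chmod(path, 0o600)  # also tightens a file that already existed


def is_private(path: str) -> bool:
    """True if neither group nor others have any permission on the file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


with tempfile.TemporaryDirectory() as workdir:
    rsp = os.path.join(workdir, "oggca.rsp")
    write_private(rsp, "ADMINISTRATOR_PASSWORD=ogg_password\n")
    print(is_private(rsp))
    os.remove(rsp)  # remove the file once the installer no longer needs it
```

In a real automation, the script would run as the oracle user, call oggca.sh between the write and the removal, and ideally get the password from a secret store rather than from the code.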

To add a deployment to an existing service manager, or remove one from it, you can use oggca.sh either graphically or in silent mode. For silent mode, below is a minimal response file example to add a new deployment (leaving out everything that is already configured, like service manager properties). Of course, the deployment name, paths, and ports should be different from those of an existing deployment. And if your deployment is secured, you should fill SECTION H - SECURITY in the same way you did for the first installation, and specify SECURITY_ENABLED=true in SECTION C - SERVICE MANAGER.

oracle.install.responseFileVersion=/oracle/install/rspfmt_oggca_response_schema_v23_1_0

# SECTION A - GENERAL

CONFIGURATION_OPTION=ADD

DEPLOYMENT_NAME=ogg_test_02

# SECTION B - ADMINISTRATOR ACCOUNT

ADMINISTRATOR_USER=ogg

ADMINISTRATOR_PASSWORD=ogg_password

DEPLOYMENT_ADMINISTRATOR_USER=ogg

DEPLOYMENT_ADMINISTRATOR_PASSWORD=ogg_password

# SECTION C - SERVICE MANAGER

HOST_SERVICEMANAGER=your_host

PORT_SERVICEMANAGER=7809

SECURITY_ENABLED=false

STRONG_PWD_POLICY_ENABLED=false

# SECTION E - SOFTWARE HOME

OGG_SOFTWARE_HOME=/u01/app/oracle/product/ogg23ai

# SECTION F - DEPLOYMENT DIRECTORIES

OGG_DEPLOYMENT_HOME=/u01/app/oracle/product/ogg_test_02

OGG_ETC_HOME=/u01/app/oracle/product/ogg_test_02/etc

OGG_CONF_HOME=/u01/app/oracle/product/ogg_test_02/etc/conf

OGG_SSL_HOME=/u01/app/oracle/product/ogg_test_02/etc/ssl

OGG_VAR_HOME=/u01/app/oracle/product/ogg_test_02/var

OGG_DATA_HOME=/u01/app/oracle/product/ogg_test_02/var/lib/data

OGG_ARCHIVE_HOME=/u01/app/oracle/product/ogg_test_02/var/lib/archive

# SECTION G - ENVIRONMENT VARIABLES

ENV_LD_LIBRARY_PATH=${OGG_HOME}/lib/instantclient:${OGG_HOME}/lib

ENV_TNS_ADMIN=/u01/app/oracle/network/admin

ENV_STREAMS_POOL_SIZE=

ENV_USER_VARS=

# SECTION H - SECURITY

TLS_1_2_ENABLED=false

TLS_1_3_ENABLED=false

FIPS_ENABLED=false

SERVER_CERTIFICATE=

SERVER_CERTIFICATE_KEY_FILE=

SERVER_CA_CERTIFICATES_FILE=

CLIENT_CERTIFICATE=

CLIENT_CERTIFICATE_KEY_FILE=

CLIENT_CA_CERTIFICATES_FILE=

# SECTION I - SERVICES

ADMINISTRATION_SERVER_ENABLED=true

PORT_ADMINSRVR=7820

DISTRIBUTION_SERVER_ENABLED=true

PORT_DISTSRVR=7821

NON_SECURE_DISTSRVR_CONNECTS_TO_SECURE_RCVRSRVR=false

RECEIVER_SERVER_ENABLED=true

PORT_RCVRSRVR=7822

METRICS_SERVER_ENABLED=true

METRICS_SERVER_IS_CRITICAL=false

PORT_PMSRVR=7823

PMSRVR_DATASTORE_TYPE=BDB

PMSRVR_DATASTORE_HOME=

ENABLE_DEPLOYMENT_REMOTE_METRICS=false

DEPLOYMENT_REMOTE_METRICS_LISTENING_HOST=

DEPLOYMENT_REMOTE_METRICS_LISTENING_PORT=0

# SECTION J - REPLICATION OPTIONS

OGG_SCHEMA=OGGADMIN

Same thing for the removal of an existing deployment, where the minimal response file is even simpler. You just need the deployment name and service manager information.

oracle.install.responseFileVersion=/oracle/install/rspfmt_oggca_response_schema_v23_1_0

# SECTION A - GENERAL

CONFIGURATION_OPTION=REMOVE

DEPLOYMENT_NAME=ogg_test_02

# SECTION B - ADMINISTRATOR ACCOUNT

ADMINISTRATOR_USER=ogg

ADMINISTRATOR_PASSWORD=ogg_password

DEPLOYMENT_ADMINISTRATOR_USER=ogg

DEPLOYMENT_ADMINISTRATOR_PASSWORD=ogg_password

# SECTION C - SERVICE MANAGER

HOST_SERVICEMANAGER=your_host

PORT_SERVICEMANAGER=7809

SECURITY_ENABLED=false

# SECTION H - SECURITY

TLS_1_2_ENABLED=false

TLS_1_3_ENABLED=false

FIPS_ENABLED=false

SERVER_CERTIFICATE=

SERVER_CERTIFICATE_KEY_FILE=

SERVER_CA_CERTIFICATES_FILE=

CLIENT_CERTIFICATE=

CLIENT_CERTIFICATE_KEY_FILE=

CLIENT_CA_CERTIFICATES_FILE=

# SECTION K - REMOVE DEPLOYMENT OPTIONS

REMOVE_DEPLOYMENT_FROM_DISK=true

Whether you installed GoldenGate graphically or silently, you will now be able to connect to the Web UI. Except for the design, it is pretty much the same thing as the Microservices Architecture of GoldenGate 19c and 21c. Connect to the hostname of your GoldenGate installation, on the service manager port: http://hostname:port, or https://hostname:port if the installation is secured.

With the example of this blog:

- 7809: Service manager

- 7810: Administration service of the first deployment you created, for managing extracts and replicats

- 7811: Distribution service, to send trail files to other GoldenGate deployments.

- 7812: Receiver service, to receive trail files.

- 7813: Performance metrics service, for extraction and replication analysis.
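If you want to hand these entry points to your colleagues, the URLs can be generated from the port list. The sketch below does this in Python for the ports of this blog; the host name vmogg is the one from my prompts, and the function name service_urls is hypothetical.

```python
# Ports of the installation described in this blog.
SERVICES = {
    7809: "Service manager",
    7810: "Administration service",
    7811: "Distribution service",
    7812: "Receiver service",
    7813: "Performance metrics service",
}


def service_urls(host: str, secure: bool = False) -> dict:
    """Map each service name to its URL (https if the installation is secured)."""
    scheme = "https" if secure else "http"
    return {name: f"{scheme}://{host}:{port}" for port, name in SERVICES.items()}


for name, url in service_urls("vmogg").items():
    print(f"{name}: {url}")
```

Adapt the SERVICES mapping if you chose other ports during the oggca.sh configuration.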

Log in either with the service manager credentials, or with the deployment credentials:

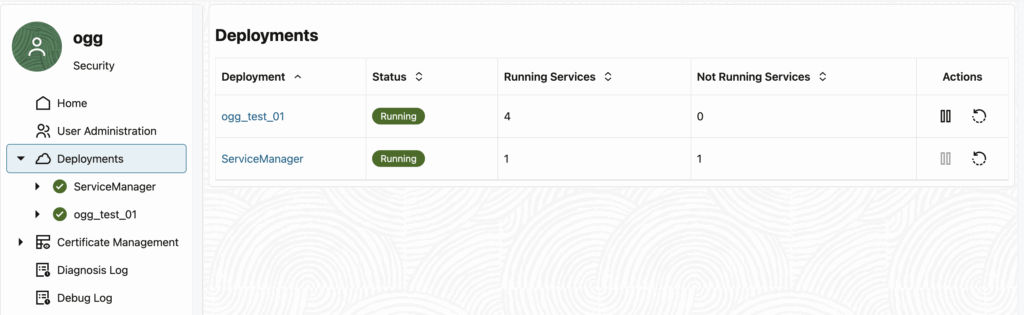

Service Manager Web UI


The service manager web UI allows you to stop and start deployment services, manage users and certificates. You will hardly ever use it, even less since deployment creation/removal will be done through oggca.sh anyway. If you have many deployments, it can still be useful to log in to these deployments quickly.

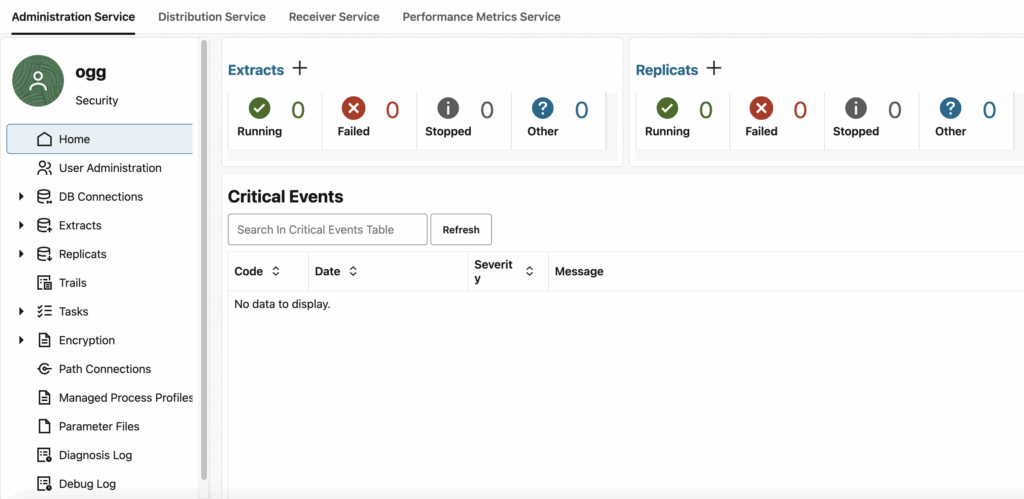

Deployment Web UI


The deployment web UI, however, is used throughout the whole lifecycle of your GoldenGate replications. You manage extracts, replicats, distribution paths, and more.

NB for the newcomers: you don’t have to bookmark all the services of a deployment. Once logged in to the administration service of a deployment, you can just jump between services through the UI, by clicking on the services on the top bar.

You now have a full GoldenGate 23ai installation and can start configuring your first replication!

Appendix: oggca.rsp example

Here is an example of a response file that creates both the service manager and the first deployment of a GoldenGate installation. The full file, with Oracle's annotations, is included at the end.

oracle.install.responseFileVersion=/oracle/install/rspfmt_oggca_response_schema_v23_1_0

# SECTION A - GENERAL

CONFIGURATION_OPTION=ADD

DEPLOYMENT_NAME=ogg_test_01

# SECTION B - ADMINISTRATOR ACCOUNT

ADMINISTRATOR_USER=ogg

ADMINISTRATOR_PASSWORD=ogg_password

DEPLOYMENT_ADMINISTRATOR_USER=ogg

DEPLOYMENT_ADMINISTRATOR_PASSWORD=ogg_password

# SECTION C - SERVICE MANAGER

SERVICEMANAGER_DEPLOYMENT_HOME=/u01/app/oracle/product/ogg23ai_SM

SERVICEMANAGER_ETC_HOME=/u01/app/oracle/product/ogg23ai_SM/etc

SERVICEMANAGER_CONF_HOME=/u01/app/oracle/product/ogg23ai_SM/etc/conf

SERVICEMANAGER_SSL_HOME=/u01/app/oracle/product/ogg23ai_SM/etc/ssl

SERVICEMANAGER_VAR_HOME=/u01/app/oracle/product/ogg23ai_SM/var

SERVICEMANAGER_DATA_HOME=/u01/app/oracle/product/ogg23ai_SM/var/lib/data

SERVICEMANAGER_ARCHIVE_HOME=/u01/app/oracle/product/ogg23ai_SM/var/lib/archive

HOST_SERVICEMANAGER=your_host

PORT_SERVICEMANAGER=7809

SECURITY_ENABLED=false

STRONG_PWD_POLICY_ENABLED=false

CREATE_NEW_SERVICEMANAGER=true

REGISTER_SERVICEMANAGER_AS_A_SERVICE=true

INTEGRATE_SERVICEMANAGER_WITH_XAG=false

EXISTING_SERVICEMANAGER_IS_XAG_ENABLED=false

ENABLE_SERVICE_MANAGER_REMOTE_METRICS=false

SERVICE_MANAGER_REMOTE_METRICS_LISTENING_HOST=

SERVICE_MANAGER_REMOTE_METRICS_LISTENING_PORT=0

PLUGIN_SERVICE_ENABLED=false

# SECTION D - CONFIGURATION SERVICE

CONFIGURATION_SERVICE_ENABLED=false

CONFIGURATION_SERVICE_BACKEND_TYPE=FILESYSTEM

CONFIGURATION_SERVICE_BACKEND_CONNECTION_STRING=

CONFIGURATION_SERVICE_BACKEND_USERNAME=

CONFIGURATION_SERVICE_BACKEND_PASSWORD=

CONFIGURATION_SERVICE_BACKEND_TABLE_NAME=

# SECTION E - SOFTWARE HOME

OGG_SOFTWARE_HOME=/u01/app/oracle/product/ogg23ai

# SECTION F - DEPLOYMENT DIRECTORIES

OGG_DEPLOYMENT_HOME=/u01/app/oracle/product/ogg_test_01

OGG_ETC_HOME=/u01/app/oracle/product/ogg_test_01/etc

OGG_CONF_HOME=/u01/app/oracle/product/ogg_test_01/etc/conf

OGG_SSL_HOME=/u01/app/oracle/product/ogg_test_01/etc/ssl

OGG_VAR_HOME=/u01/app/oracle/product/ogg_test_01/var

OGG_DATA_HOME=/u01/app/oracle/product/ogg_test_01/var/lib/data

OGG_ARCHIVE_HOME=/u01/app/oracle/product/ogg_test_01/var/lib/archive

# SECTION G - ENVIRONMENT VARIABLES

ENV_LD_LIBRARY_PATH=${OGG_HOME}/lib/instantclient:${OGG_HOME}/lib

ENV_TNS_ADMIN=/u01/app/oracle/network/admin

ENV_STREAMS_POOL_SIZE=

ENV_USER_VARS=

# SECTION H - SECURITY

TLS_1_2_ENABLED=false

TLS_1_3_ENABLED=true

FIPS_ENABLED=false

SERVER_CERTIFICATE=

SERVER_CERTIFICATE_KEY_FILE=

SERVER_CA_CERTIFICATES_FILE=

CLIENT_CERTIFICATE=

CLIENT_CERTIFICATE_KEY_FILE=

CLIENT_CA_CERTIFICATES_FILE=

# SECTION I - SERVICES

ADMINISTRATION_SERVER_ENABLED=true

PORT_ADMINSRVR=7810

DISTRIBUTION_SERVER_ENABLED=true

PORT_DISTSRVR=7811

NON_SECURE_DISTSRVR_CONNECTS_TO_SECURE_RCVRSRVR=false

RECEIVER_SERVER_ENABLED=true

PORT_RCVRSRVR=7812

METRICS_SERVER_ENABLED=true

METRICS_SERVER_IS_CRITICAL=false

PORT_PMSRVR=7813

PMSRVR_DATASTORE_TYPE=BDB

PMSRVR_DATASTORE_HOME=

ENABLE_DEPLOYMENT_REMOTE_METRICS=false

DEPLOYMENT_REMOTE_METRICS_LISTENING_HOST=

DEPLOYMENT_REMOTE_METRICS_LISTENING_PORT=0

# SECTION J - REPLICATION OPTIONS

OGG_SCHEMA=OGGADMIN

# SECTION K - REMOVE DEPLOYMENT OPTIONS

REMOVE_DEPLOYMENT_FROM_DISK=

Full file:

################################################################################

## Copyright(c) Oracle Corporation 2016, 2024. All rights reserved. ##

## ##

## Specify values for the variables listed below to customize your ##

## installation. ##

## ##

## Each variable is associated with a comment. The comments can help to ##

## populate the variables with the appropriate values. ##

## ##

## IMPORTANT NOTE: This file should be secured to have read permission only ##

## by the Oracle user or an administrator who owns this configuration to ##

## protect any sensitive input values. ##

## ##

################################################################################

#-------------------------------------------------------------------------------

# Do not change the following system generated value.

#-------------------------------------------------------------------------------

oracle.install.responseFileVersion=/oracle/install/rspfmt_oggca_response_schema_v23_1_0

################################################################################

## ##

## Oracle GoldenGate deployment configuration options and details ##

## ##

################################################################################

################################################################################

## ##

## Instructions to fill out this response file ##

## ------------------------------------------- ##

## Fill out section A, B, and C for general deployment information ##

## Additionally: ##

## Fill out sections D, E, F, G, H, I, and J for adding a deployment ##

## Fill out section K for removing a deployment ##

## ##

################################################################################

################################################################################

# #

# SECTION A - GENERAL #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify the configuration option.

# Specify:

# - ADD : for adding a new GoldenGate deployment.

# - REMOVE : for removing an existing GoldenGate deployment.

#-------------------------------------------------------------------------------

CONFIGURATION_OPTION=ADD

#-------------------------------------------------------------------------------

# Specify the name for the new or existing deployment.

#-------------------------------------------------------------------------------

DEPLOYMENT_NAME=ogg_test_01

################################################################################

# #

# SECTION B - ADMINISTRATOR ACCOUNT #

# #

# * If creating a new Service Manager, set the Administrator Account username #

# and password. #

# #

# * If reusing an existing Service Manager: #

# * Enter the credentials for the Administrator Account in #

# the existing Service Manager. #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify the administrator account username for the Service Manager.

#-------------------------------------------------------------------------------

ADMINISTRATOR_USER=ogg

#-------------------------------------------------------------------------------

# Specify the administrator account password for the Service Manager.

#-------------------------------------------------------------------------------

ADMINISTRATOR_PASSWORD=ogg_password

#-------------------------------------------------------------------------------

# Optionally, specify a different administrator account username for the deployment,

# or leave blanks to use the same Service Manager administrator credentials.

#-------------------------------------------------------------------------------

DEPLOYMENT_ADMINISTRATOR_USER=ogg

#-------------------------------------------------------------------------------

# If creating a different administrator account username for the deployment,

# specify the password for it.

#-------------------------------------------------------------------------------

DEPLOYMENT_ADMINISTRATOR_PASSWORD=ogg_password

################################################################################

# #

# SECTION C - SERVICE MANAGER #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify the location for the Service Manager deployment.

# This is only needed if the Service Manager deployment doesn't exist already.

#-------------------------------------------------------------------------------

SERVICEMANAGER_DEPLOYMENT_HOME=/u01/app/oracle/product/ogg23ai_SM

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment ETC_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_ETC_HOME=/u01/app/oracle/product/ogg23ai_SM/etc

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment CONF_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_CONF_HOME=/u01/app/oracle/product/ogg23ai_SM/etc/conf

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment SSL_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_SSL_HOME=/u01/app/oracle/product/ogg23ai_SM/etc/ssl

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment VAR_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_VAR_HOME=/u01/app/oracle/product/ogg23ai_SM/var

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment DATA_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_DATA_HOME=/u01/app/oracle/product/ogg23ai_SM/var/lib/data

#-------------------------------------------------------------------------------

# Optionally, specify a custom location for the Service Manager deployment ARCHIVE_HOME.

#-------------------------------------------------------------------------------

SERVICEMANAGER_ARCHIVE_HOME=/u01/app/oracle/product/ogg23ai_SM/var/lib/archive

#-------------------------------------------------------------------------------

# Specify the host for the Service Manager.

#-------------------------------------------------------------------------------

HOST_SERVICEMANAGER=your_host

#-------------------------------------------------------------------------------

# Specify the port for the Service Manager.

#-------------------------------------------------------------------------------

PORT_SERVICEMANAGER=7809

#-------------------------------------------------------------------------------

# Specify if SSL / TLS is or will be enabled for the deployment.

# Specify true if SSL / TLS is or will be enabled, false otherwise.

#-------------------------------------------------------------------------------

SECURITY_ENABLED=false

#-------------------------------------------------------------------------------

# Specify if the deployment should enforce a strong password policy.

# Specify true to enable strong password policy management.