Feed aggregator

Deploying Azure Terraform code with Azure DevOps and a storage account as remote backend

While working for a customer, I was tasked with creating an Azure infrastructure using Terraform and Azure DevOps (ADO). I thought about doing it the way I usually do with GitLab, but that wasn’t possible with ADO, as it doesn’t store the Terraform state file itself. Instead, I had to use an Azure Storage Account. I configured it, blocked public network access, and realized that my pipeline couldn’t push the state to the Storage Account.

In fact, ADO isn’t supported as a “Trusted Microsoft Service”, so it can’t bypass the Storage Account firewall rules through that option. To make this work, I had to create a self-hosted agent running on an Azure VM Scale Set, which is the topic of this blog post.

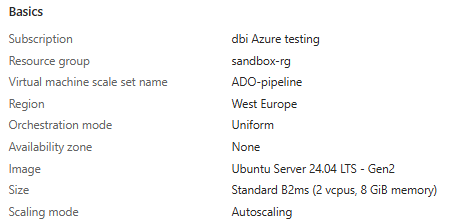

Azure resources creation

Agent Creation

First, we create an Azure VM Scale Set. I kept most parameters at their default values, but they can be customized. I chose Linux as the operating system, as that was what I needed. One important thing is to set the “Orchestration mode” to “Uniform”; otherwise, ADO pipelines won’t work.
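If you prefer the Azure CLI to the portal, a scale set with the same key setting could be created along these lines. This is a sketch, not the exact configuration used here: the resource names, image and VM size are placeholders, and the extra flags (manual upgrade policy, no overprovisioning, no load balancer) are assumptions based on Microsoft’s guidance for scale-set agent pools. With `DRY_RUN=1`, the command is only printed so it can be reviewed first.

```shell
# Print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Sketch: Uniform scale set for ADO self-hosted agents (hypothetical names/sizes).
create_agent_vmss() {
  local rg="$1" name="$2"
  run az vmss create \
    --resource-group "$rg" \
    --name "$name" \
    --image Ubuntu2204 \
    --vm-sku Standard_D2s_v3 \
    --instance-count 2 \
    --orchestration-mode Uniform \
    --upgrade-policy-mode manual \
    --disable-overprovision \
    --single-placement-group false \
    --load-balancer "" \
    --admin-username azureuser \
    --generate-ssh-keys
}

# Example (prints the command only):
# DRY_RUN=1 create_agent_vmss rg-ado-agents vmss-ado-agents
```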

Storage account


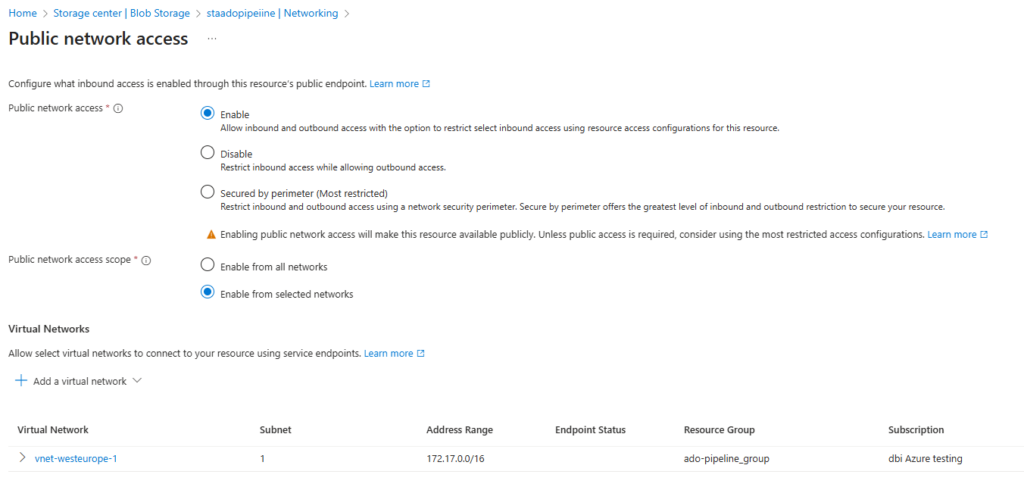

Any storage account should work to store our state. Just note that you also need to create a container inside it, to be referenced in your Terraform provider configuration. For the network settings, we will go with “Public access” and “Enable from selected networks”. This allows public access only from the selected networks. I do this to avoid creating a private endpoint to connect to a fully private storage account.
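A minimal Azure CLI sketch of this storage setup could look as follows. The names are hypothetical, and so is the network part: it assumes the agents’ virtual network and subnet live in the same resource group as the storage account, and that this subnet is what you allow through the firewall (a VNet rule needs the `Microsoft.Storage` service endpoint on the subnet). The container is created through the management plane (`container-rm`), which is not subject to the storage firewall.

```shell
# Print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Sketch: state storage reachable only from the agents' subnet (hypothetical names).
create_state_storage() {
  local rg="$1" account="$2" container="$3" vnet="$4" subnet="$5"

  # Service endpoint required for a VNet rule (replaces the subnet's endpoint list).
  run az network vnet subnet update \
    --resource-group "$rg" --vnet-name "$vnet" --name "$subnet" \
    --service-endpoints Microsoft.Storage

  # Public access enabled, but denied by default: only selected networks get in.
  run az storage account create \
    --resource-group "$rg" --name "$account" \
    --sku Standard_LRS --kind StorageV2 \
    --public-network-access Enabled --default-action Deny

  # Allow the subnet hosting the scale-set agents.
  run az storage account network-rule add \
    --resource-group "$rg" --account-name "$account" \
    --vnet-name "$vnet" --subnet "$subnet"

  # Container for the state file, created through ARM (management plane).
  run az storage container-rm create \
    --resource-group "$rg" --storage-account "$account" --name "$container"
}
```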

Entra ID identity for the pipeline


We also need to create an Entra ID Enterprise Application that we will provide to the pipeline. This identity must have the Contributor role (or an equivalent role) over the scope you target. It must also have at least the Storage Blob Data Contributor role on the Storage Account to be able to write to it.
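The two role assignments could be scripted like this. The application (client) ID, the subscription-level scope for Contributor and the storage account resource ID are assumptions to adapt; you can narrow the Contributor scope to a resource group if that is all the pipeline deploys to.

```shell
# Print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Sketch: roles for the pipeline identity (all arguments are placeholders).
grant_pipeline_roles() {
  local app_id="$1" subscription_id="$2" storage_account_id="$3"

  # Contributor on the deployment scope (here: the whole subscription).
  run az role assignment create --assignee "$app_id" \
    --role Contributor --scope "/subscriptions/${subscription_id}"

  # Data-plane access so Terraform can write the state blob.
  run az role assignment create --assignee "$app_id" \
    --role "Storage Blob Data Contributor" --scope "$storage_account_id"
}
```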

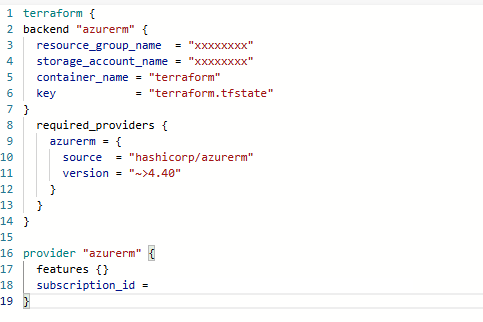

Azure DevOps setup

Terraform code

You can use any Terraform code you want. For my example, I only use code that creates a Resource Group and a Virtual Network. Just note that your provider should look like this:
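The provider configuration is shown as an image. As a hedged sketch, a comparable configuration with an `azurerm` backend can be generated as below. The resource group, storage account and container names are placeholders, and `use_azuread_auth = true` is an assumption that matches the Storage Blob Data Contributor role given to the pipeline identity (the backend then authenticates with Entra ID instead of the account key). Credentials are not hard-coded: the provider and the backend read them from the `ARM_CLIENT_ID`, `ARM_CLIENT_SECRET`, `ARM_TENANT_ID` and `ARM_SUBSCRIPTION_ID` environment variables.

```shell
# Sketch: print a provider.tf using the azurerm backend (names are placeholders).
provider_tf() {
  local rg="$1" account="$2" container="$3" key="${4:-terraform.tfstate}"
  cat <<EOF
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }

  # Remote state in the storage account; credentials come from ARM_* variables.
  backend "azurerm" {
    resource_group_name  = "${rg}"
    storage_account_name = "${account}"
    container_name       = "${container}"
    key                  = "${key}"
    use_azuread_auth     = true
  }
}

provider "azurerm" {
  features {}
}
EOF
}
```

For example: `provider_tf rg-tfstate sttfstate001 tfstate > provider.tf`.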

Pipeline code


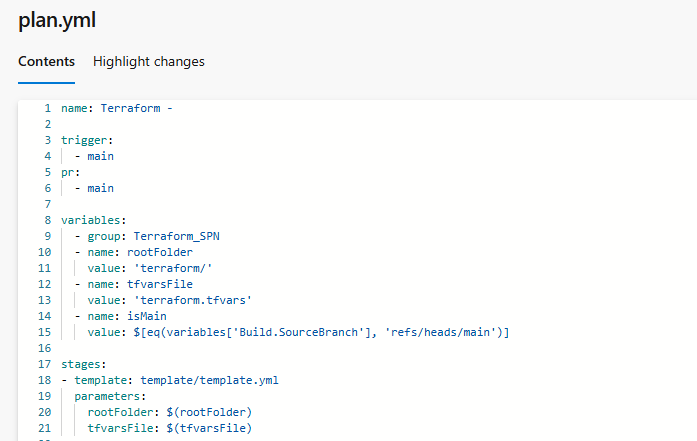

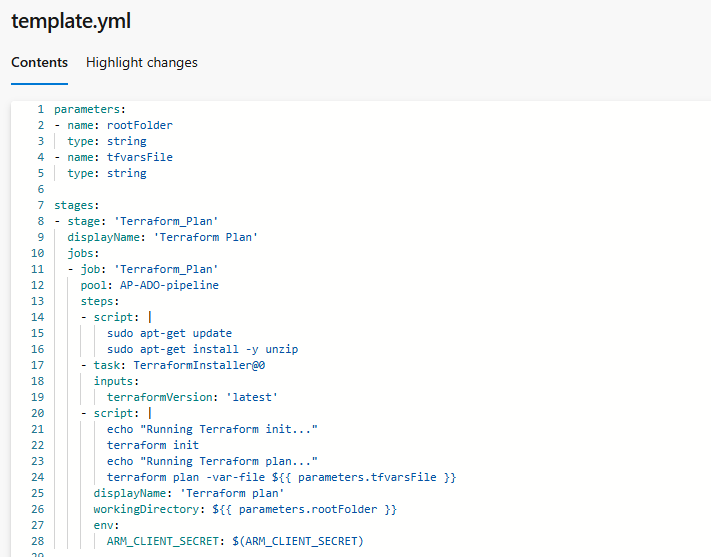

I usually split my pipeline into two files: plan.yml is given to the ADO pipeline, and it calls the template to run its code. What the pipeline does is pretty simple: it installs Terraform on the VM Scale Set instance, then runs the Terraform commands. The same block of code can be reused for the “apply”.

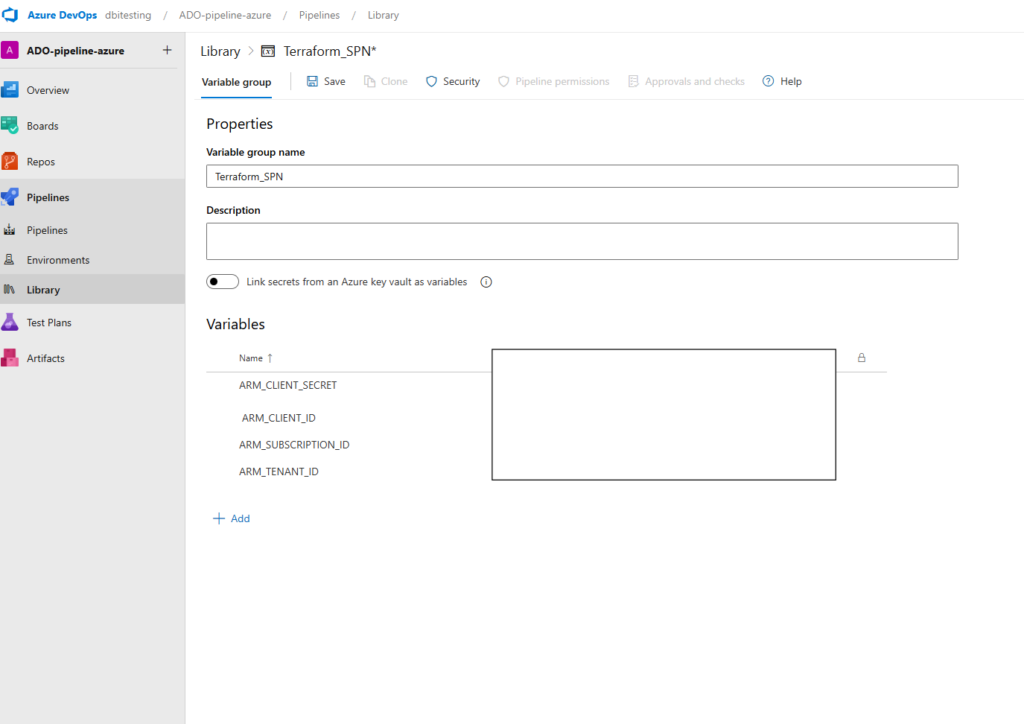

A few things to note: in my plan.yml, I set a Variable Group named “Terraform_SPN”, which I will show you just after. That’s where we store the information about our previously created Entra ID Enterprise Application.

In template.yml, the important part is the pool definition. Here I only specify a name, which corresponds to the ADO Agent Pool that I created. I’ll show this step a bit further down.
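The pipeline files themselves are shown as screenshots. The sketch below writes a comparable plan.yml/template.yml pair; the agent pool name (`vmss-agent-pool`), the Terraform version and the `ARM_*` variable names in the `Terraform_SPN` group are assumptions. The variables are mapped explicitly through `env:` because Azure Pipelines does not expose secret variables to scripts automatically, and the `ARM_*` names are the ones read by the `azurerm` provider and backend.

```shell
# Sketch: write plan.yml and template.yml into a directory (hypothetical content).
write_pipeline_files() {
  local dir="${1:-.}"

  # plan.yml: the file selected when creating the pipeline in ADO.
  cat > "$dir/plan.yml" <<'EOF'
trigger: none

variables:
# Must match the Variable Group name in ADO.
- group: Terraform_SPN

jobs:
- template: template.yml
  parameters:
    terraformCommand: plan -input=false
EOF

  # template.yml: reusable job running on the scale-set agent pool.
  cat > "$dir/template.yml" <<'EOF'
parameters:
- name: terraformCommand
  type: string
  default: plan -input=false

jobs:
- job: terraform
  pool:
    name: vmss-agent-pool
  steps:
  - script: |
      set -e
      TF_VERSION=1.9.8
      mkdir -p "$HOME/bin"
      curl -fsSLo terraform.zip "https://releases.hashicorp.com/terraform/${TF_VERSION}/terraform_${TF_VERSION}_linux_amd64.zip"
      unzip -o terraform.zip -d "$HOME/bin"
      echo "##vso[task.prependpath]$HOME/bin"
    displayName: Install Terraform
  - script: |
      set -e
      terraform init -input=false
      terraform ${{ parameters.terraformCommand }}
    displayName: 'Terraform ${{ parameters.terraformCommand }}'
    env:
      ARM_CLIENT_ID: $(ARM_CLIENT_ID)
      ARM_CLIENT_SECRET: $(ARM_CLIENT_SECRET)
      ARM_TENANT_ID: $(ARM_TENANT_ID)
      ARM_SUBSCRIPTION_ID: $(ARM_SUBSCRIPTION_ID)
EOF
}
```

For the apply, a second entry file could reuse the same template with `terraformCommand: apply -input=false -auto-approve`. The install step also assumes that `curl` and `unzip` are available on the scale-set image.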

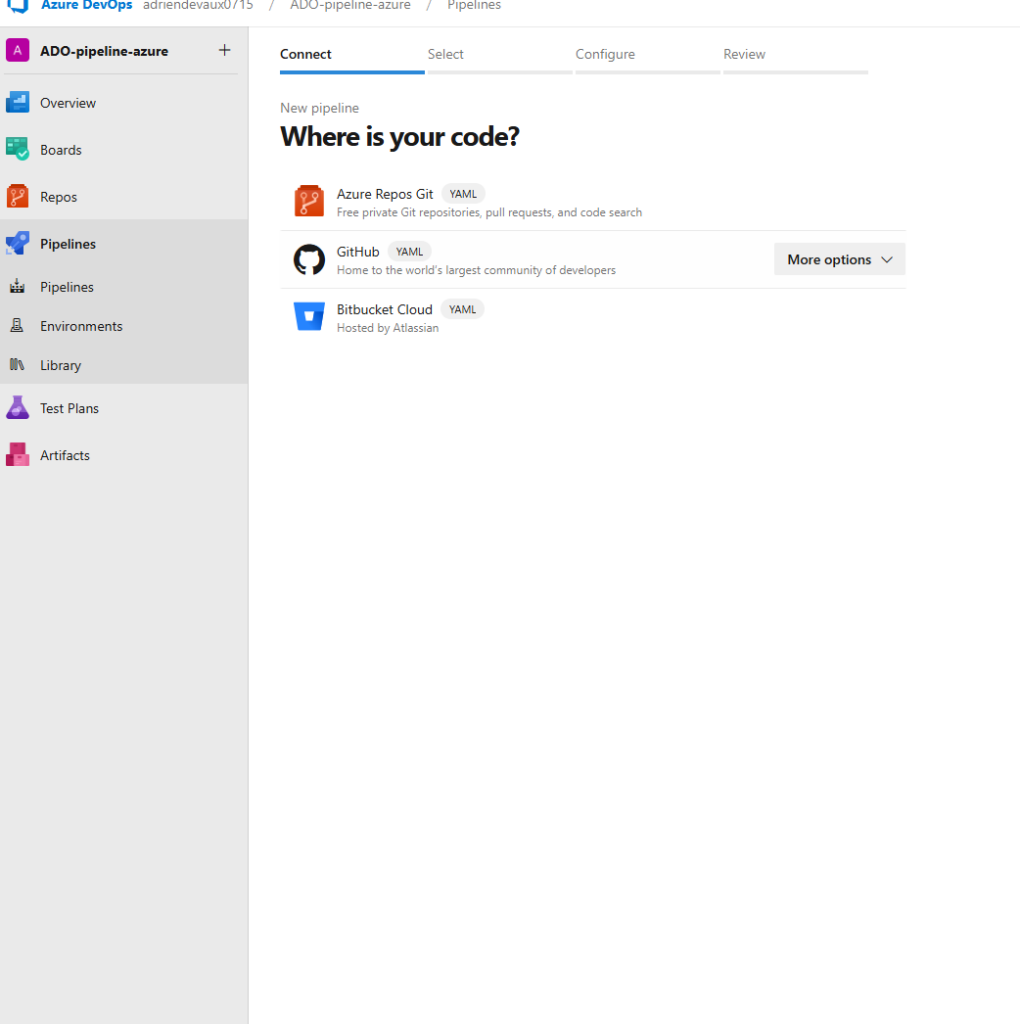

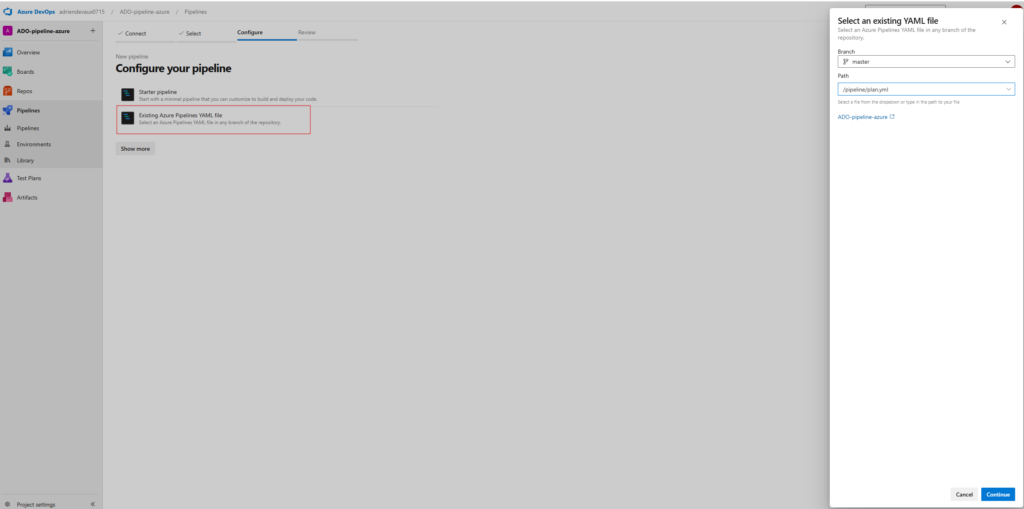

For the pipeline creation itself, we go to Pipelines -> Create a new pipeline -> Azure Repos Git.

Then select “Existing Azure Pipelines YAML file” and pick our file from the repository.

We will also create a Variable Group. Its name doesn’t matter; just remember to use the same one in your YAML code. In it, you create 4 variables holding information about your tenant and your enterprise application. They will be used during the pipeline run to deploy your resources.
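The same Variable Group can also be created with the `azure-devops` extension of the Azure CLI. In this hedged sketch, the organization URL, project and values are placeholders, and the `ARM_*` names are assumed variable names. Only the three non-secret values are created here; the client secret is better added in the UI as a secret variable (padlock icon), so that it never ends up in a shell history.

```shell
# Print the command instead of executing it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Sketch: Variable Group with the non-secret values (hypothetical names).
create_terraform_variable_group() {
  local org_url="$1" project="$2" client_id="$3" tenant_id="$4" subscription_id="$5"
  # The group name must match the one referenced in plan.yml.
  run az pipelines variable-group create \
    --organization "$org_url" --project "$project" \
    --name Terraform_SPN \
    --variables "ARM_CLIENT_ID=${client_id}" \
                "ARM_TENANT_ID=${tenant_id}" \
                "ARM_SUBSCRIPTION_ID=${subscription_id}"
}
```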

ADO Agent Pool


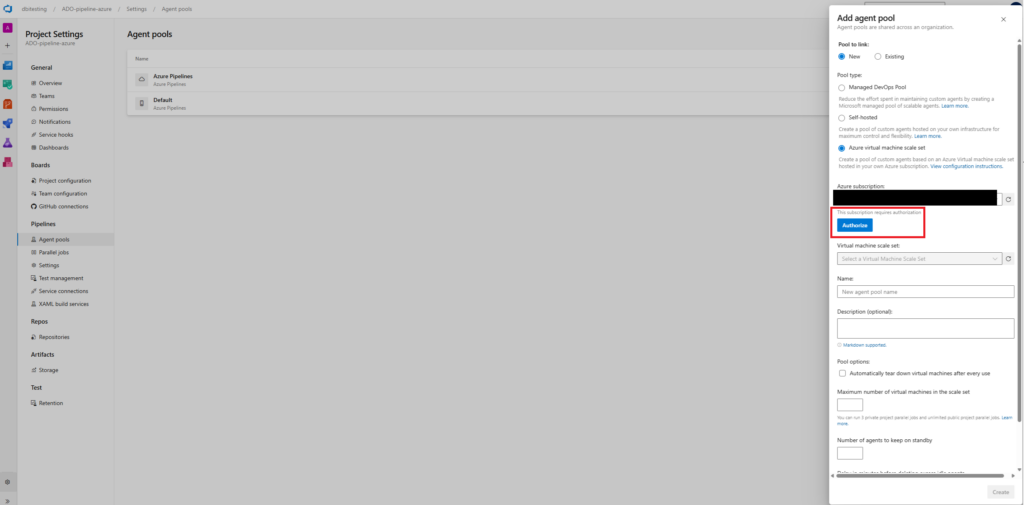

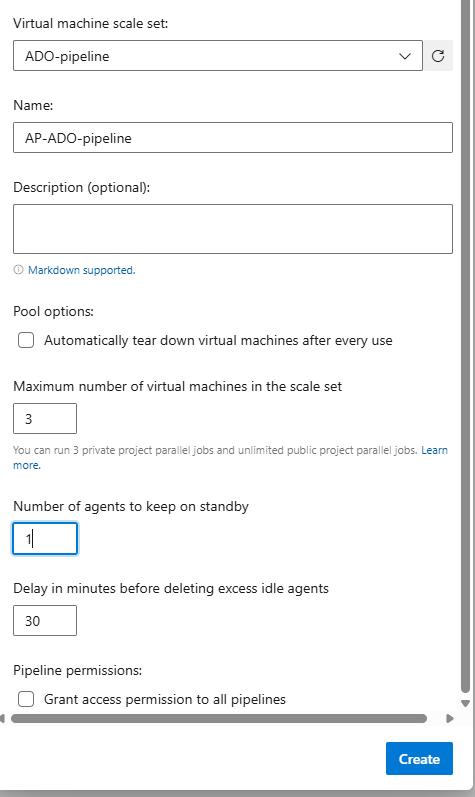

In the Project Settings, look for Agent Pools. Then create a new one and fill it in as follows:

The Authorize button appears after you select the subscription you want. To accept it, your user must have the Owner role, as this step grants permissions: it allows ADO to communicate with Azure by creating a Service Principal. Then you can fill in the rest as follows:

ADO pipeline run


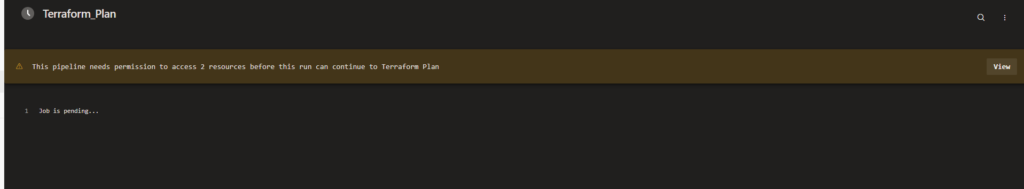

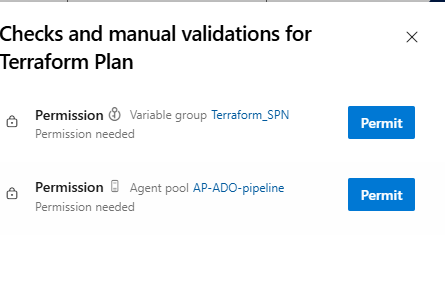

When you first run your pipeline, you must authorize it to use the Variable Group and the Agent Pool.

Once this is done, everything should go smoothly and end like this.

I hope this blog post was useful and helps you troubleshoot this kind of problem between Azure and Azure DevOps.

The article Deploying Azure Terraform code with Azure DevOps and a storage account as remote backend first appeared on dbi Blog.

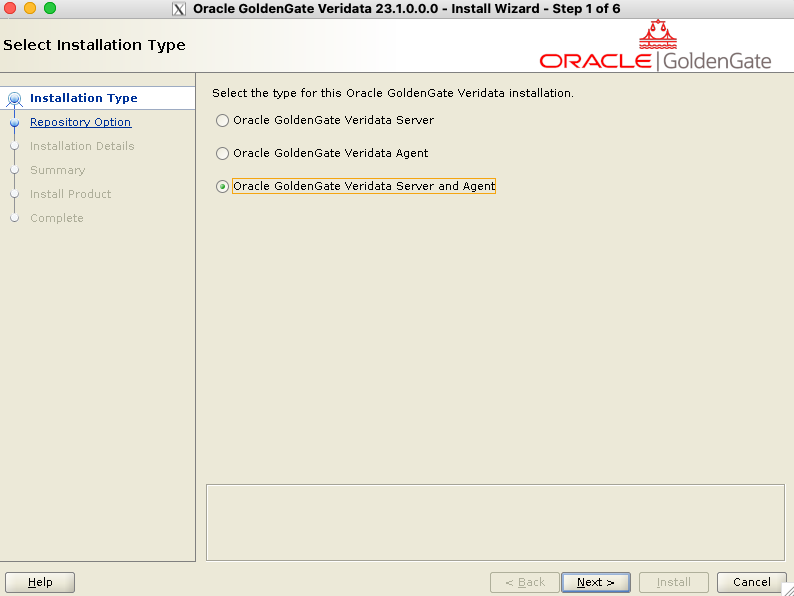

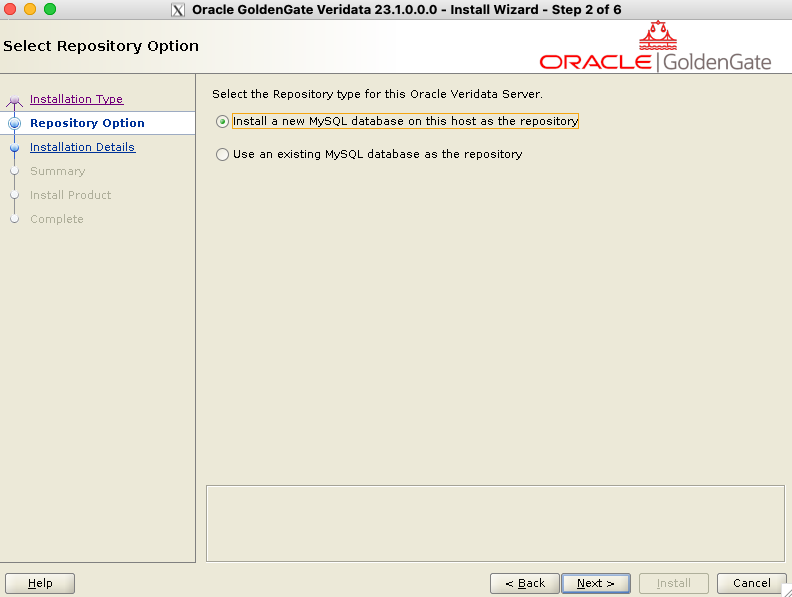

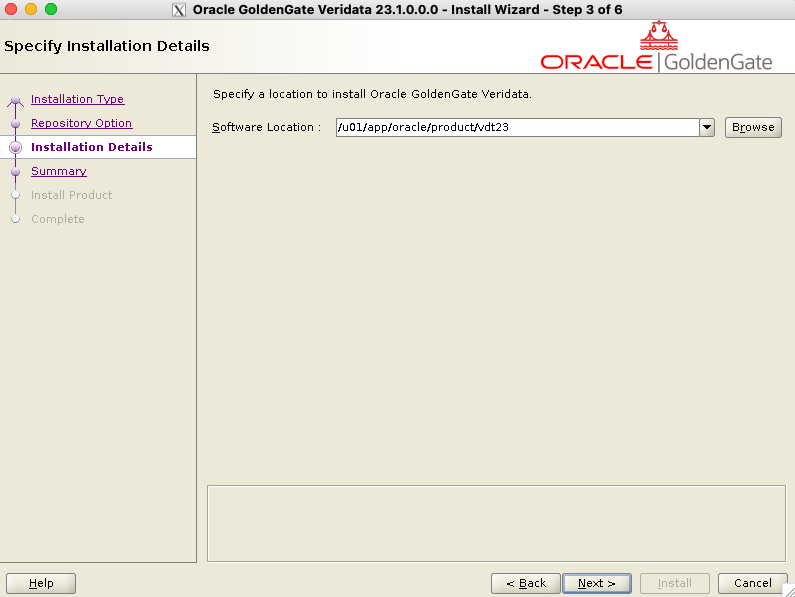

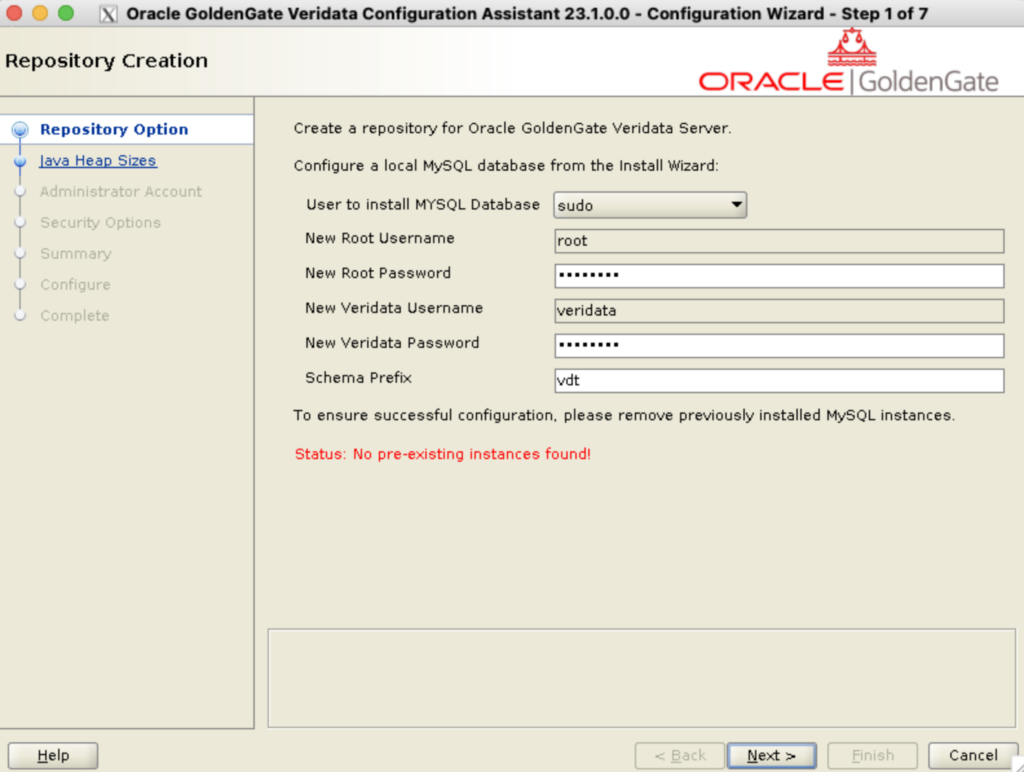

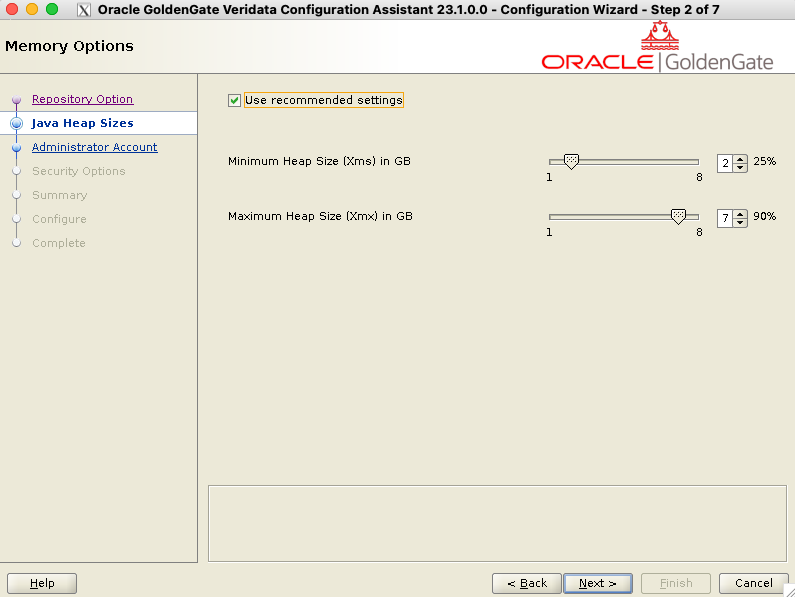

Debugging GoldenGate Veridata Agents

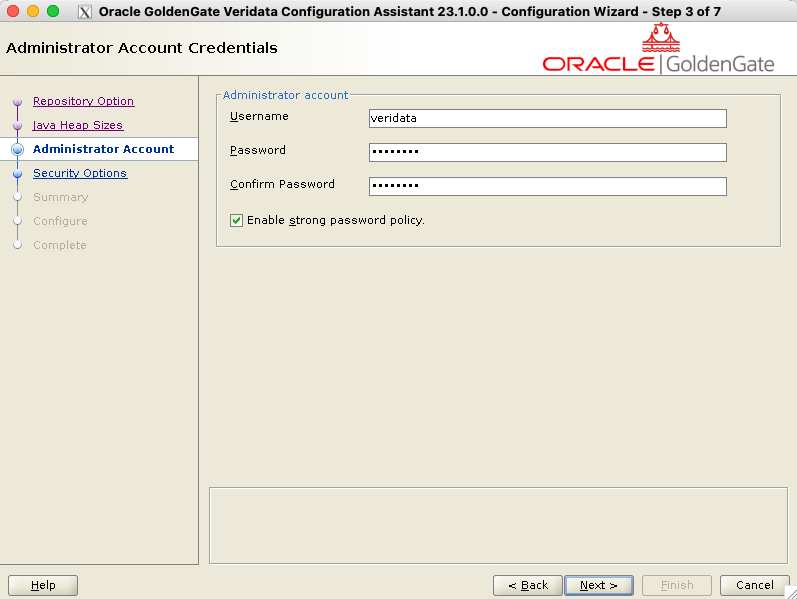

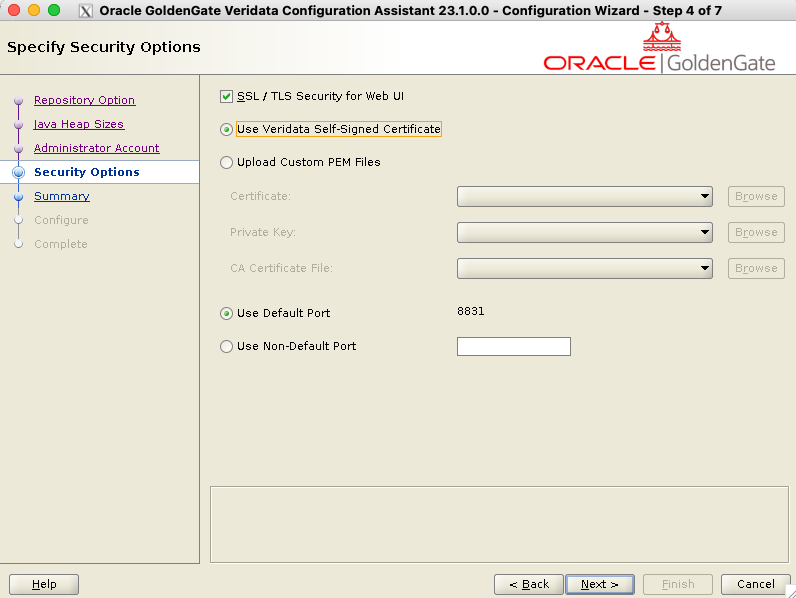

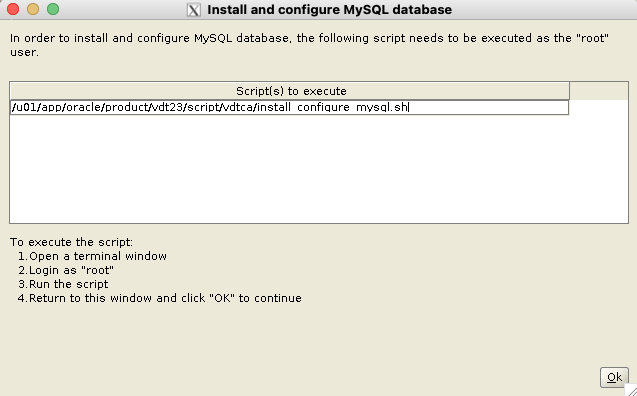

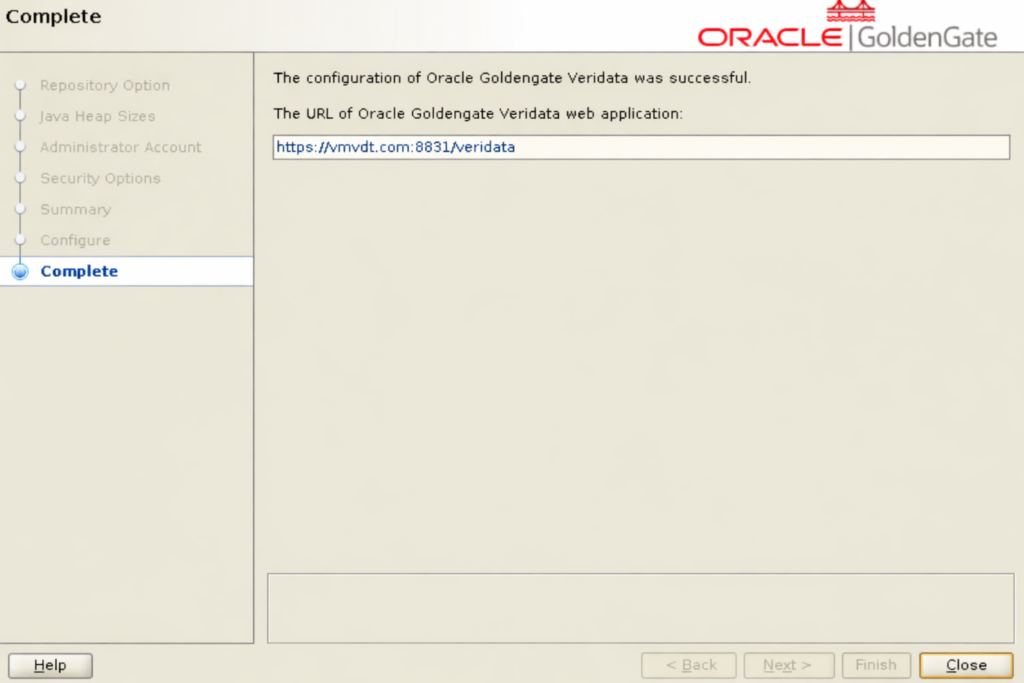

A crucial step after installing a Veridata server is to set up Veridata agents. And, as mentioned in a previous blog post about GoldenGate Veridata installation, Oracle didn’t bother to indicate when an agent is failing. For instance, the following agent named vdt_agent1 starts successfully:

[oracle@vmvdt vdt_agent1]$ ./agent.sh start agent.properties

Formatter type set to TEXT in odl.xml.

[oracle@vmvdt vdt_agent1]$ ps -ef|grep java | grep agent

oracle 56408 1 0 12:47 pts/0 00:00:00 /u01/app/oracle/product/jdk-17.0.17/bin/java -Djava.util.logging.config.class=oracle.core.ojdl.logging.LoggingConfiguration -Doracle.core.ojdl.logging.config.file=/u01/app/oracle/product/vdt_agent1/config/odl.xml -Dhome=/u01/app/oracle/product/vdt23/agent -DagentHome=/u01/app/oracle/product/vdt_agent1 -XX:+UseParallelGC -Xms1024M -Dagent-manifest.jar=/u01/app/oracle/product/vdt23/agent/agent-manifest.jar -jar /u01/app/oracle/product/vdt23/agent/JavaAgent.jar agent.properties

And this agent named vdt_agent2 fails to start:

[oracle@vmvdt vdt_agent2]$ ./agent.sh start agent.properties

Formatter type set to TEXT in odl.xml.

[oracle@vmvdt vdt_agent2]$ ps -ef|grep java | grep agent

There is zero difference in the agent.sh output, yet one agent is started while the other is not. It is quite sad when you compare Veridata to other Oracle solutions, where you usually get feedback on the status of what you’re starting.

The first thing to do would be to look at the logs, right? So let’s see how this looks in practice:

[oracle@vmvdt3 product]$ pwd

/u01/app/oracle/product

[oracle@vmvdt3 product]$ ls -lrt vdt_agent*

vdt_agent1:

total 32

-rw-r-----. 1 oracle oinstall 79 Dec 21 10:49 VAOH.sh

-rw-r-----. 1 oracle oinstall 5037 Dec 21 10:49 agent.properties.sample

-rw-r-----. 1 oracle oinstall 172 Dec 21 10:49 ReadMe.txt

-rwxr-----. 1 oracle oinstall 261 Dec 21 10:49 configure_agent_ssl.sh

-rwxr-----. 1 oracle oinstall 1057 Dec 21 10:49 agent.sh

-rw-r-----. 1 oracle oinstall 5094 Dec 21 12:45 agent.properties

drwxr-x---. 3 oracle oinstall 62 Dec 21 12:47 config

drwxr-----. 2 oracle oinstall 57 Dec 21 12:47 logs

vdt_agent2:

total 32

-rw-r-----. 1 oracle oinstall 79 Dec 21 14:11 VAOH.sh

-rw-r-----. 1 oracle oinstall 172 Dec 21 14:11 ReadMe.txt

-rw-r-----. 1 oracle oinstall 5037 Dec 21 14:11 agent.properties.sample

-rwxr-----. 1 oracle oinstall 261 Dec 21 14:11 configure_agent_ssl.sh

-rwxr-----. 1 oracle oinstall 1057 Dec 21 14:11 agent.sh

-rw-r-----. 1 oracle oinstall 5050 Dec 21 14:12 agent.properties

drwxr-x---. 3 oracle oinstall 62 Dec 21 14:12 config

vdt_agent2 didn’t start, so there is no logs folder yet. But even if it had been started before, the logs would not necessarily be updated. So, are we completely lost in this situation? First, we have to understand why there is no log at startup. Looking at the agent.sh script, we see the following:

...

SCRIPT_DIR="`dirname "$0"`"

AGENT_HOME="`cd "$SCRIPT_DIR" ; pwd`"

export AGENT_HOME

. $AGENT_HOME/VAOH.sh

...

sed -i "0,/class=['\"][^'\"]*['\"]/s|class=['\"][^'\"]*['\"]|class='oracle.core.ojdl.logging.ODLHandlerFactory'|" "$ODL_XML_PATH"

echo "Formatter type set to TEXT in odl.xml."

fi

$AGENT_ORACLE_HOME/agent_int.sh "$@"

AGENT_HOME refers to the agent deployed location, in our case /u01/app/oracle/product/vdt_agent2. As for AGENT_ORACLE_HOME, it is defined in the VAOH.sh script sourced earlier.

AGENT_ORACLE_HOME=/u01/app/oracle/product/vdt23/agent

export AGENT_ORACLE_HOME

It simply refers to the agent directory inside your Veridata home directory. So all options of agent.sh are passed to agent_int.sh, which looks like this:

...

case "$1" in

start)

nohup "$JAVA_EXECUTABLE" $JAVA_OPTS -jar "$JAR_FILE" $2 >/dev/null 2>&1 &

;;

run)

exec "$JAVA_EXECUTABLE" $JAVA_OPTS -jar "$JAR_FILE" $2

;;

version)

exec "$JAVA_EXECUTABLE" $JAVA_OPTS -jar "$JAR_FILE" version $2

;;

...

We have the culprit here! The start and run options launch the same Java command, with one difference: start discards all output, including error messages, and moves the process to the background, while run keeps it in the foreground and displays errors in the terminal. Let’s try to start the agent with this option instead:

[oracle@vmvdt vdt_agent2]$ ./agent.sh run agent.properties

Formatter type set to TEXT in odl.xml.

#server.jdbcDriver=ojdbc11-23.2.0.0.jar

[VERIAGT-BOOT] INFO Looking for home directory.

[VERIAGT-BOOT] INFO Found bootstrap class in file:/u01/app/oracle/product/vdt23/agent/JavaAgent.jar!/com/goldengate/veridata/agent/BootstrapNextGen.class.

[VERIAGT-BOOT] INFO Home directory: /u01/app/oracle/product/vdt23/agent

[VERIAGT-BOOT] INFO AGENT_DEPLOY_PATH not set, falling back to homeDir.

[VERIAGT-BOOT] INFO Preparing classpath.

[VERIAGT-BOOT] ERROR /u01/app/oracle/product/vdt_agent2/ojdbc11-23.9.0.25.07.jar does not exist. Check agent.properties configuration

In this case, the combination of the server.driversLocation and server.jdbcDriver parameters does not allow Veridata to locate the driver.
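To catch this kind of mistake before starting the agent, a small pre-flight check can resolve the driver path from agent.properties. This is a hypothetical helper, not part of Veridata: it assumes a single jar in `server.jdbcDriver`, and that an empty or relative `server.driversLocation` is resolved against the agent deployed location, which is consistent with the error above but not documented here.

```shell
# Hypothetical pre-flight check: does the JDBC driver from agent.properties exist?
check_jdbc_driver() {
  local agent_home="$1" props loc jar path
  props="$agent_home/agent.properties"
  if [ ! -r "$props" ]; then
    echo "Cannot read $props" >&2
    return 2
  fi
  # Last uncommented value wins, as with Java properties files; \r is removed.
  loc="$(tr -d '\r' < "$props" | sed -n 's/^[[:space:]]*server\.driversLocation[[:space:]]*=[[:space:]]*//p' | tail -n 1)"
  jar="$(tr -d '\r' < "$props" | sed -n 's/^[[:space:]]*server\.jdbcDriver[[:space:]]*=[[:space:]]*//p' | tail -n 1)"
  if [ -z "$jar" ]; then
    echo "server.jdbcDriver is not set in $props" >&2
    return 1
  fi
  loc="${loc%/}"
  # Assumption: empty or relative locations are resolved against the agent home.
  case "$loc" in
    /*) path="$loc/$jar" ;;
    "") path="$agent_home/$jar" ;;
    *)  path="$agent_home/$loc/$jar" ;;
  esac
  if [ -f "$path" ]; then
    echo "OK: $path"
  else
    echo "MISSING: $path" >&2
    return 1
  fi
}

# Example: check_jdbc_driver /u01/app/oracle/product/vdt_agent2
```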

Apart from the Java process that you can search for, a started agent also has a logs folder with two log files in its home directory:

[oracle@vmvdt logs]$ pwd

/u01/app/oracle/product/vdt_agent3/logs

[oracle@vmvdt logs]$ ll

total 4

-rw-r-----. 1 oracle oinstall 0 Dec 21 18:57 vdtperf-agent.log

-rw-r-----. 1 oracle oinstall 778 Dec 21 18:57 veridata-agent.log

If you don’t have this folder after starting the agent for the first time, you know for sure that something went wrong. However, if you want to automatically detect whether the agent was started, you should search for the following message in veridata-agent.log:

[2025-12-21T19:01:21.570+00:00] [veridata] [NOTIFICATION] [OGGV-60002] [oracle.veridata.agent] [tid: 1] [ecid: 0000Ph2IQNqFk305zzDCiW1dI4G1000001,0] Veridata Agent running on vmvdt port 8833

To change the log level of the agent, edit the config/odl.xml file in your agent deployed location. See the documentation for a list of all the options available. For instance, you can change the oracle.veridata logger from NOTIFICATION:1 to TRACE:1 to generate detailed debugging information:

<logger name='oracle.veridata' level='NOTIFICATION:1'

useParentHandlers='false'>

<handler name='odl-handler' />

<handler name='my-console-handler' />

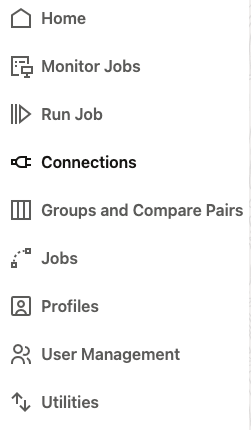

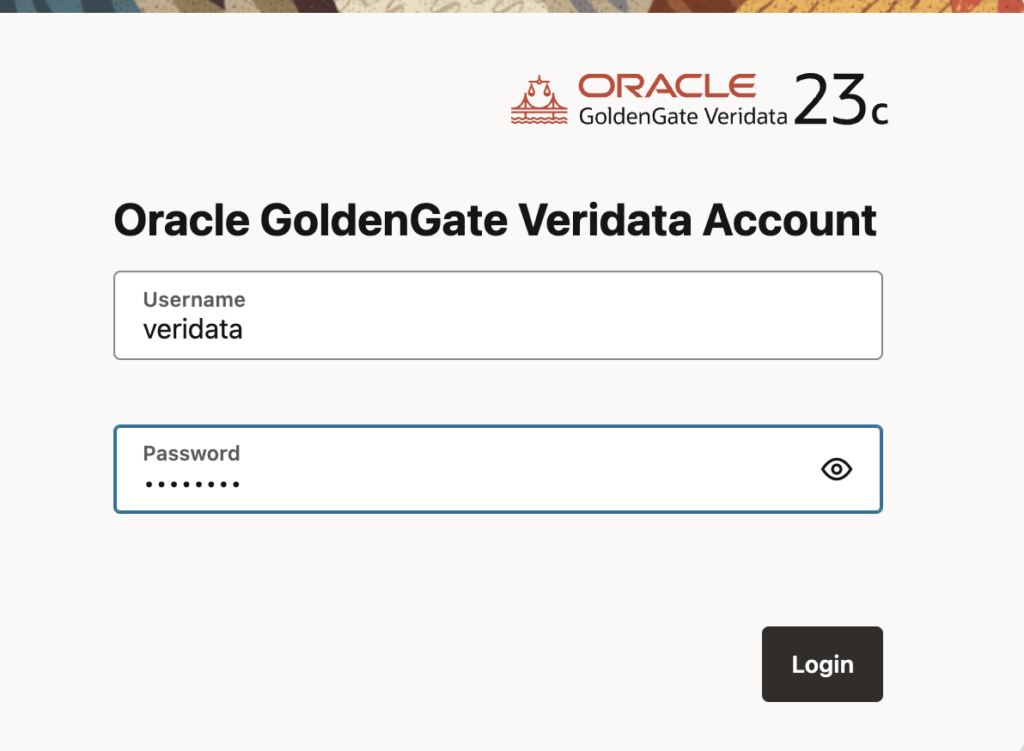

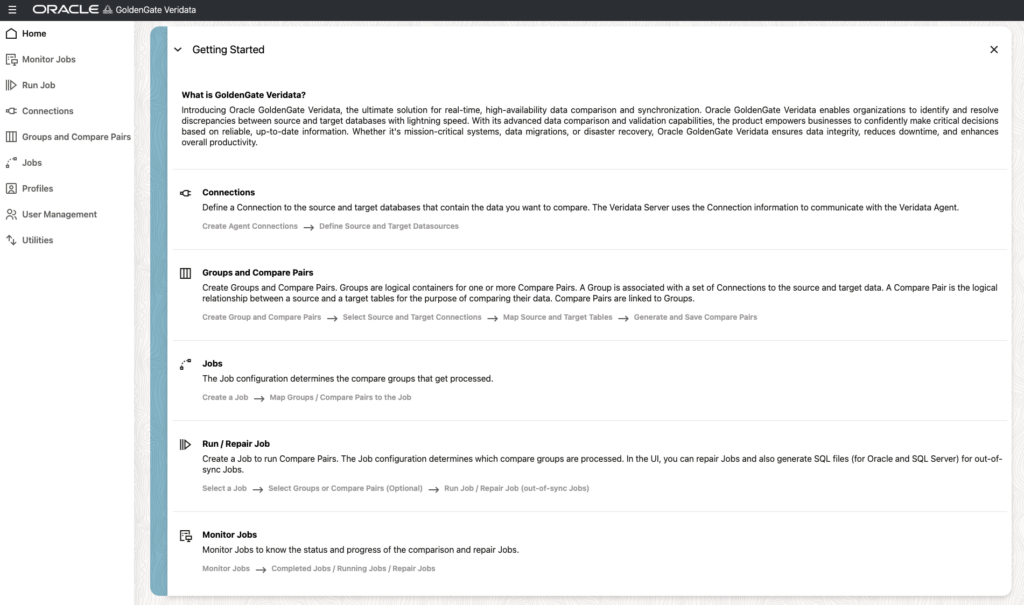

</logger>Once the agent is started, you can test the connection from the Veridata Web UI. Once logged in, go to the Connections panel, and click on Create.

In the agent connection creation panel, indicate the agent port, the host name and the database type. After testing the connection, you will know if your agent is correctly configured with the red ribbon showing “Agent validation is successful”.

A good practice when using the agent.sh script is to first run the agent, and then start it. This way, you will quickly know if something went wrong.
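Building on that advice, here is a sketch of a wrapper (a hypothetical helper, not shipped with Veridata) that combines both approaches: it launches `agent.sh run` in the background with `nohup`, so that errors go to a file instead of `/dev/null`, then waits for the OGGV-60002 message in veridata-agent.log. The console output file name and the default 60-second timeout are arbitrary choices, and the early-exit check relies on agent.sh staying alive while the agent runs, as the script excerpts above suggest.

```shell
# Hypothetical wrapper: background "run" with captured output, then wait for OGGV-60002.
start_and_wait_agent() {
  local agent_home timeout="${2:-60}" log out skip=0 pid elapsed=0
  agent_home="$(cd "$1" && pwd)" || return 1
  log="$agent_home/logs/veridata-agent.log"
  out="$agent_home/agent_console.out"   # hypothetical file name

  # Ignore OGGV-60002 messages left over from a previous start.
  if [ -f "$log" ]; then
    skip="$(wc -l < "$log")"
  fi

  # "run" keeps error messages; nohup + redirection sends them to a file
  # instead of /dev/null and keeps the agent alive after the session ends.
  pid="$(cd "$agent_home" && { nohup ./agent.sh run agent.properties > "$out" 2>&1 & echo "$!"; })"

  while [ "$elapsed" -lt "$timeout" ]; do
    # Look for the startup message among the lines written after the launch.
    if [ -f "$log" ] && awk -v s="$skip" \
        'NR > s && /OGGV-60002/ { found = 1; exit } END { exit !found }' "$log"; then
      echo "Agent started (agent.sh pid $pid)"
      return 0
    fi
    # agent.sh does not exec agent_int.sh, so it lives as long as the agent.
    if ! kill -0 "$pid" 2>/dev/null; then
      break
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done

  echo "Agent not started, console output from $out:" >&2
  cat "$out" >&2
  return 1
}

# Example: start_and_wait_agent /u01/app/oracle/product/vdt_agent2 120
```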

The article Debugging GoldenGate Veridata Agents first appeared on dbi Blog.

Dctm – Managing licenses through OTDS

As you might know, since Documentum 24.4, there is a new requirement to have a valid license assigned to a user in order to log in to Documentum. This is a pretty important change, most likely designed with the X-Plan license model in mind, and one of the reasons why OpenText changed the license approach in the first place. This was likely done to provide better visibility and control over who is allowed to access and use Documentum, and to ensure that all usage is correctly licensed.

In this blog, I will use Documentum as well as OTDS in their freshly released version 25.4. Depending on the version you are working with, the steps might differ slightly. I will assume that both the Documentum and OTDS environments are fully available and ready for inter-connectivity and license configuration. I will use a demo environment I have internally that is hosted on RKE2 (Kubernetes from SUSE). It doesn’t use the out-of-the-box images from OpenText but our own custom images built from scratch (same process used since Documentum 7.3, even before images/containers were officially provided):

- OTDS URL: https://otds.dns.com/otdsws

- Dctm Repo Name: dbi01

- Dctm Repo inline account: adm_morgan

- Dctm Repo OTDS (AD) account: morgan

- OTDS Repo Resource ID: 12345678-1234-5678-9abc-123456789abc

- OTDS Repo Secret Key: AbCd1234efGH5678IJkl9==

- OTDS License Key Name: dctmlicense

- OTDS Partition for inline accounts: dctminline

- OTDS Partition for AD accounts: dbi

- OTDS Business Admin: businessadmin

All the values above can be changed and you should update them based on your own environment/setup.

Note: The OTDS Partition for my AD, the Repository Resource & Access Roles as well as the OAuth Clients (+ possibly the Auth Handlers) are already created and configured. Since that is outside of the scope of the “license” part and it’s something you would already require even for versions prior to 24.4, I won’t cover that part in this blog. If you need help on that, don’t hesitate to contact us and we can help with the OTDS design and implementation.

1. Dctm Server – OTDS Authentication (turned auth+lic)

In previous versions of Documentum (like 16.4, 20.2, …, 25.2), the OTDS Authentication was handled by the JMS (Tomcat, or WildFly if you go further back in versions). There was an application “OTDSAuthentication” deployed on the JMS that would link the Documentum Server and OTDS (REST calls). Starting in 25.4, this is now a standalone process that you can find under “$DM_HOME/OTDSAuthLicenseHttpServerBin” (a simple JAR file). This is started by the Repository automatically, but our custom images make sure it’s always running properly, even if something goes wrong and the Repository isn’t able to start it itself.

In that OTDS Auth application, there is a single configuration file that you need to update to allow the communication:

- E.g. 16.4 (JMS on WildFly): $JMS_HOME/server/DctmServer_MethodServer/deployments/ServerApps.ear/OTDSAuthentication.war/WEB-INF/classes/otdsauth.properties

- E.g. 23.4 (JMS on Tomcat): $JMS_HOME/webapps/OTDSAuthentication/WEB-INF/classes/otdsauth.properties

- E.g. 25.4 (Standalone): $DM_HOME/OTDSAuthLicenseHttpServerBin/config/otdsauth.properties

The content is roughly the same for all versions of Documentum (it changed slightly but not too much). The main change that I wanted to mention here, linked to the licensing checks, is the addition of a new parameter called “admin_username“.

With the above parameters, this is a “simple” way to configure the “otdsauth.properties” file:

[dmadmin@cs-0 ~]$ # Definition of parameters, to fill the file

[dmadmin@cs-0 ~]$ otdsauth_properties="$DM_HOME/OTDSAuthLicenseHttpServerBin/config/otdsauth.properties"

[dmadmin@cs-0 ~]$ otds_base_url="https://otds.dns.com/otdsws"

[dmadmin@cs-0 ~]$ install_owner="dmadmin"

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ otds_resource_id="12345678-1234-5678-9abc-123456789abc"

[dmadmin@cs-0 ~]$ otds_resource_key="AbCd1234efGH5678IJkl9=="

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # First, to make sure the file is in "unix" file format. It's often provided by OT as DOS, even on linux, which is a problem...

[dmadmin@cs-0 ~]$ awk '{ sub("\r$", ""); print }' ${otdsauth_properties} > temp.properties

[dmadmin@cs-0 ~]$ mv temp.properties ${otdsauth_properties}

[dmadmin@cs-0 ~]$ chmod 660 ${otdsauth_properties}

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Configuration of the properties file

[dmadmin@cs-0 ~]$ sed -i "s,otds_rest_credential_url=.*,otds_rest_credential_url=${otds_base_url}/rest/authentication/credentials," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,otds_rest_ticket_url=.*,otds_rest_ticket_url=${otds_base_url}/rest/authentication/resource/validation," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,otds_rest_oauth2_url=.*,otds_rest_oauth2_url=${otds_base_url}/oauth2/token," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,synced_user_login_name=.*,synced_user_login_name=sAMAccountName," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,auto_cert_refresh=.*,auto_cert_refresh=true," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,cert_jwks_url=.*,cert_jwks_url=${otds_base_url}/oauth2/jwks," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,admin_username=.*,admin_username=${install_owner}," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,.*_resource_id=.*,${repo}_resource_id=${otds_resource_id}," ${otdsauth_properties}

[dmadmin@cs-0 ~]$ sed -i "s,.*_secretKey=.*,${repo}_secretKey=${otds_resource_key}," ${otdsauth_properties}

[dmadmin@cs-0 ~]$
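One caveat with the `sed` commands above: if a key is missing from the file (for instance `admin_username` in a file carried over from an older version), `sed` silently changes nothing. A hedged alternative is a small helper (hypothetical, not provided by OpenText) that replaces the key when present and appends it otherwise, while also removing DOS line endings. It assumes `key=value` lines without spaces around `=`, and it does not handle the generic `*_resource_id` / `*_secretKey` template lines, which the original `sed` commands rename.

```shell
# Hypothetical helper: set key=value in a .properties file (replace or append).
set_prop() {
  local file="$1" key="$2" value="$3" tmp rc
  tmp="$(mktemp)" || return 1
  # Key and value go through the environment so awk does not interpret
  # backslashes; the key is matched literally at the start of the line.
  K="$key" V="$value" awk '
    BEGIN { k = ENVIRON["K"]; v = ENVIRON["V"]; found = 0 }
    { sub(/\r$/, "") }
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's permissions
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Examples, reusing the variables defined above:
# set_prop "${otdsauth_properties}" admin_username "${install_owner}"
# set_prop "${otdsauth_properties}" otds_rest_oauth2_url "${otds_base_url}/oauth2/token"
```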

With the new license requirement, and since it is not handled by the JMS anymore, the URL to be configured in the Repository has changed too. If you are upgrading your environment from a previous version, you will normally already have an “app_server_name” for “OTDSAuthentication”, with a URL of “http(s)://hostname:port/OTDSAuthentication/servlet/authenticate” (where hostname is either localhost or your local hostname, and port is 9080 or 9082 for example). With the new version of Documentum, this needs to be changed to “http://localhost:port/otdsauthlicense” (where port is 8400 by default).

# Example of commands to add a new entry for "OTDSAuthentication" (if it already exists, replace "append" with "set" on the correct index)

API> retrieve,c,dm_server_config

API> append,c,l,app_server_name

SET> OTDSAuthentication

API> append,c,l,app_server_uri

SET> http://localhost:8400/otdsauthlicense

API> save,c,l
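Following the comment above about “append” versus “set”, the iAPI commands can be generated for both cases. This is a sketch that assumes the usual `attribute[index]` syntax for repeating attributes; the index of an existing “OTDSAuthentication” entry is something you would look up first (for example with a DQL query on `dm_server_config`), and the password in the example is a placeholder.

```shell
# Sketch: print iAPI commands registering the standalone OTDS auth/license URL.
# Without an index, a new entry is appended; with one, that entry is replaced.
otds_app_server_api() {
  local url="$1" index="${2:-}"
  echo "retrieve,c,dm_server_config"
  if [ -z "$index" ]; then
    printf '%s\n' "append,c,l,app_server_name" "OTDSAuthentication" \
                  "append,c,l,app_server_uri" "$url"
  else
    printf '%s\n' "set,c,l,app_server_name[${index}]" "OTDSAuthentication" \
                  "set,c,l,app_server_uri[${index}]" "$url"
  fi
  echo "save,c,l"
}

# Example (index 2 is hypothetical):
# otds_app_server_api "http://localhost:8400/otdsauthlicense" 2 | iapi dbi01 -Udmadmin -Pxxx
```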

Note: When I first saw that new standalone Java process, I was a bit skeptical… The OTDS Authentication used to run through the JMS, which meant that you could configure your Tomcat with all security best practices and they would apply to the OTDS Authentication as well. The new standalone process is only available through HTTP, and it also only listens on localhost/127.0.0.1… I guess that means you can forget about failover across Documentum Servers if you have a High-Availability environment! The source code isn’t available, so I don’t know if we could force it to use HTTPS on another address with the help of Java options, and the documentation doesn’t mention anything in that regard either (and I don’t want to decompile the classes)… So not very good, I would say. The only workaround would be to set up a front-end, but that over-complicates things.

3. Status (#1)

At that point in time, assuming that you have your OTDS Partition / Resource / Access Role and that you have a Documentum version < 24.4 (with the “old” URL configured in the “dm_server_config”), you should be able to log in with any OTDS account (and inline accounts as well, obviously).

However, for higher versions, such as our 25.4 environment, you should still not be able to log in to the Repository. Trying to do so with either an inline account or an OTDS-enabled account would both result in failure with this message:

[dmadmin@cs-0 ~]$ # Definition of parameters, to test the log in

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ inline_test_account="adm_morgan"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Trying to log in to the Repository

[dmadmin@cs-0 ~]$ iapi ${repo} -U${inline_test_account}

Please enter password for adm_morgan:

OpenText Documentum iapi - Interactive API interface

Copyright (c) 2025. OpenText Corporation

All rights reserved.

Client Library Release 25.4.0000.0134

Connecting to Server using docbase dbi01

[DM_SESSION_E_AUTH_FAIL]error: "Authentication failed for user adm_morgan with docbase dbi01."

[DM_LICENSE_E_NO_LICENSE_CONFIG]error: "Could not find dm_otds_license_config object."

Could not connect

[dmadmin@cs-0 ~]$

To be more precise, without any configuration on a 25.4 environment, you can fortunately still log in in a few ways; otherwise, it would break Documentum. OpenText put some “exceptions” in place and, from what I could see, the current behavior appears to be as follows (GR = Global Registry):

- Log in through iAPI / iDQL will only work with “dmadmin” and other default GR-linked accounts (dm_bof_registry / dmc_wdk_presets_owner / dmc_wdk_preferences_owner) in a GR Repository

- Log in through iAPI / iDQL will only work with “dmadmin” in a non-GR Repository

- Log in through DA will work with “dmadmin”

- Log in to any other DFC Client will not work with any account

So far, I talked about things that would mostly be set up/available if you have a Documentum environment already using OTDS for its authentication. Now, let’s proceed with the OTDS configuration related to license management, with screenshots and examples.

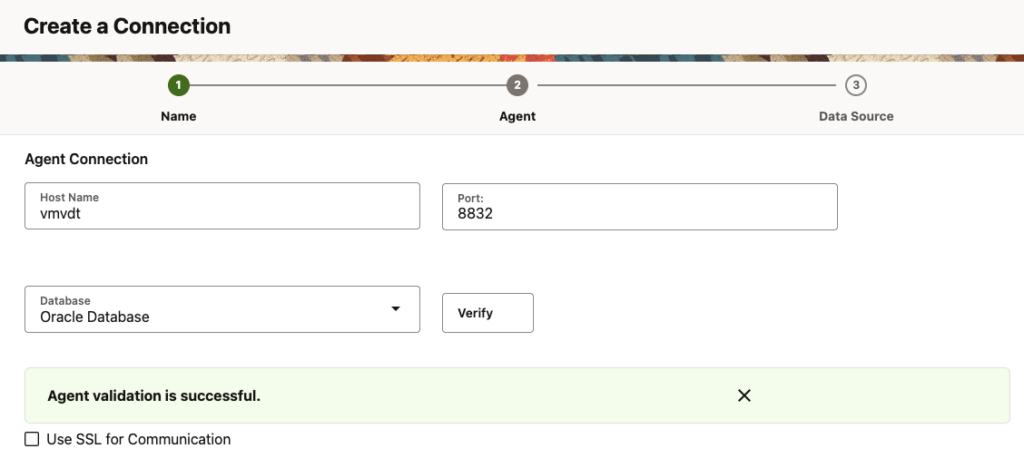

4.1. Uploading a license key

Let’s start with uploading the license key for Documentum into OTDS:

- Log in to “https://otds.dns.com/otds-admin” with the “admin” account (=otadmin@otds.admin)

- Go to “License Keys“

- Click on “Add“

- Set the “License Key Name” to “dctmlicense“

- Set the “Resource ID” to the Repository Resource ID previously created

- Click on “Next“

- Click on “Get License File” and browse your local filesystem to find the needed license file (.lic)

- Click on “Save“

You should end up with something like this:

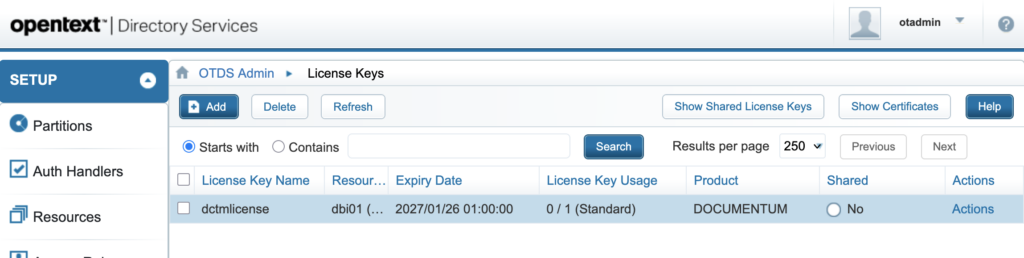

4.2. Creating a Partition for “inline” accounts


Then, let’s create a new non-synchronized partition that will be used to store the Documentum inline accounts:

- Go to “Partitions“

- Click on “Add” and then “New Non-synchronized User Partition“

- Set the “Name” to “dctminline“

- Click on “Save“

You should end up with something like this (Note: “dbi” is a synchronized partition coming from a development LDAP that I created for this demo environment):

4.3. Allocating a license to a Partition


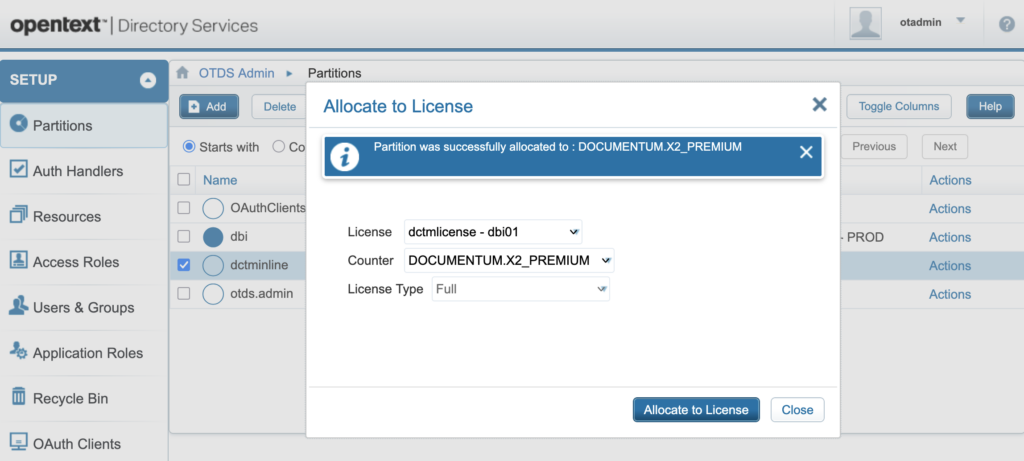

Now, let’s allocate the license to that inline Partition (“dctminline“), so that later, when the users get added, they will be able to take a license from the pool to log in to the Repository. Please note that I specifically didn’t allocate the license to the LDAP Partition (“dbi“), to show the difference later on:

- For the Partition “dctminline“, click on “Actions” and then “Allocate to License“

- Select the correct license, if you have multiple. For my parameters, it should be “dctmlicense – dbi01“

- Select the correct counter, if you have multiple (like System Accounts / X2_Premium / …)

- Click on “Allocate to License“

You should see a message saying that it was successfully allocated:

4.4. Creating a “Business Admin” account


The next step in OTDS is to create what OpenText calls a “Business Admin” account. When someone tries to log in to Documentum or a Client Application, Documentum will use that account to contact OTDS to check whether there are enough licenses available and if the person trying to log in is allowed to do so (i.e. has a license allocated). We will configure that connection on Documentum side later, but for now, let’s create that Business Admin account:

- For the Partition “otds.admin“, click on “Actions” and then “View Members“

- Click on “Add” and then “New User“

- Set the “User Name” to “businessadmin“

- Click on “Next“

- Set the “Password Options” to “Do not require password change on reset” (dropdown)

- Set the “Password Options” to “Password never expires” (checkbox)

- Set the “Password” to XXX (remember that password, it will be used later)

- Set the “Confirm Password” to XXX (re-enter here the account password)

- Click on “Save“

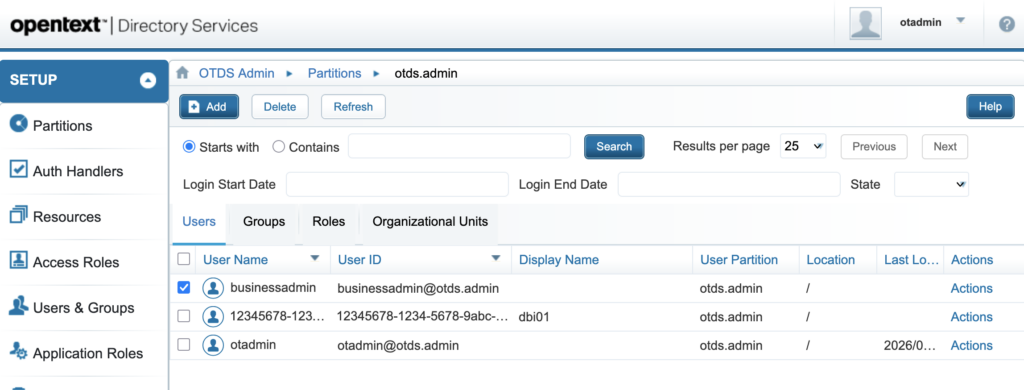

You should end up with a new account in the “otds.admin” Partition (3rd for me: the default “admin” account + another one for the OTDS Resource for “dbi01” Repository):

4.5. Granting permissions to the “Business Admin” account


The last step is then to grant the necessary permission to the newly created Business Admin account, so it can check the license details:

- Go to “Users & Groups“

- Click on “Groups“

- Search for the group named “otdsbusinessadmins” (there should be 1 result only)

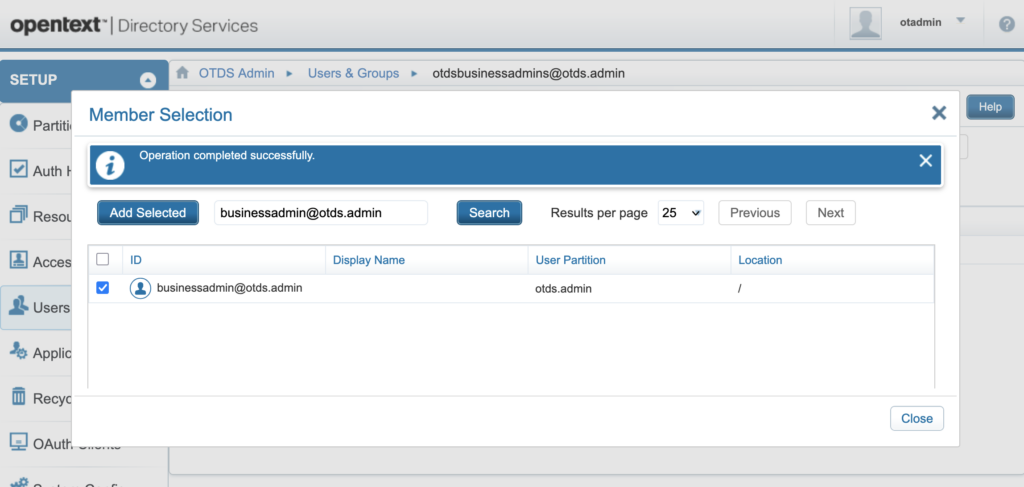

- For the Group “otdsbusinessadmins“, click on “Actions” and then “Edit Membership“

- Click on “Add Member“

- Search for the user named “businessadmin@otds.admin” (= user “businessadmin” created in “otds.admin” Partition)

- Select the checkbox for that account

- Click on “Add Selected“

You should see a message saying that it was successfully done:

That completes the configuration in the OTDS Admin UI. There are quite a few manual steps, but fortunately, you should (normally) only do them once. The documentation mentions other steps about creating a Resource for the inline Partition, but that’s only required if you are going to create accounts inside OTDS and expect them to be pushed to Documentum. In our case, since the inline accounts already exist in Documentum and we want to do the opposite, we don’t need a Resource for that. There are also other steps about creating roles and whatnot, but for the testing / initial setup that we are doing here, they are not needed either.

5. Repo – “dm_otds_license_config” object

Now that OTDS is fully configured, the last step, as previously mentioned, is to tell Documentum which account it can use for license checks. This is done through the “dm_otds_license_config” object that you will need to create:

[dmadmin@cs-0 ~]$ # Definition of parameters, to create the license config object

[dmadmin@cs-0 ~]$ otds_base_url="https://otds.dns.com/otdsws"

[dmadmin@cs-0 ~]$ install_owner="dmadmin"

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ otds_license_name="dctmlicense"

[dmadmin@cs-0 ~]$ otds_business_admin_username="businessadmin"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Set the password in a secure way

[dmadmin@cs-0 ~]$ read -s -p " --> Please enter the password here: " otds_business_admin_password

--> Please enter the password here: XXX

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Creation of the license config object

[dmadmin@cs-0 ~]$ iapi ${repo} -U${install_owner} -Pxxx << EOC

create,c,dm_otds_license_config

set,c,l,otds_url

${otds_base_url}/rest

set,c,l,license_keyname

${otds_license_name}

set,c,l,business_admin_name

${otds_business_admin_username}

set,c,l,business_admin_password

${otds_business_admin_password}

save,c,l

reinit,c

apply,c,NULL,FLUSH_OTDS_CONFIG

EOC

OpenText Documentum iapi - Interactive API interface

Copyright (c) 2025. OpenText Corporation

All rights reserved.

Client Library Release 25.4.0000.0134

Connecting to Server using docbase dbi01

[DM_SESSION_I_SESSION_START]info: "Session 012345678000aa1b started for user dmadmin."

Connected to OpenText Documentum Server running Release 25.4.0000.0143 Linux64.Oracle

Session id is s0

API> ...

000f424180001d00

API> SET> ...

OK

API> SET> ...

OK

API> SET> ...

OK

API> SET> ...

OK

API> ...

OK

API> ...

OK

API> ...

SUCCESS

API> Bye

[dmadmin@cs-0 ~]$

The “FLUSH_OTDS_CONFIG” apply command is only required if you modify an existing “dm_otds_license_config” object. However, I still added it, as this is a nice and simple way to make sure that the Repository is able to communicate with the standalone Java process (“OTDSAuthLicenseHttpServerBin“).

If it succeeds, you should see the “SUCCESS” message at the end. If it fails to communicate (e.g. you have an issue in your “dm_server_config.app_server_uri“), you should get an error about not being able to open a socket or something similar.
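Since the flush doubles as a connectivity check, it can also be scripted, for example for post-deployment tests. In this hedged sketch, the function name is hypothetical, `IAPI` is an assumed override hook (defaulting to `iapi`) that makes it testable without a repository, and the check simply looks for the “SUCCESS” result shown above. It assumes the “dm_otds_license_config” object already exists.

```shell
# Hypothetical check: run FLUSH_OTDS_CONFIG and fail unless SUCCESS is returned.
check_otds_flush() {
  local repo="$1" user="$2" password="$3" output
  # IAPI can point to another binary (or to a shell function in tests).
  output="$("${IAPI:-iapi}" "$repo" -U"$user" -P"$password" <<'EOC'
apply,c,NULL,FLUSH_OTDS_CONFIG
EOC
)"
  if printf '%s\n' "$output" | grep -qw 'SUCCESS'; then
    echo "${repo}: the Repository can reach the OTDS auth/license process"
  else
    echo "${repo}: FLUSH_OTDS_CONFIG did not return SUCCESS" >&2
    printf '%s\n' "$output" >&2
    return 1
  fi
}

# Example: check_otds_flush dbi01 dmadmin xxx
```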

6. Status (#2)

At that point in time, since both the OTDS and Documentum Server configurations are complete, you might think that you would be able to log in to Documentum with an inline account. But that’s not the case. Trying to do so will result in this error:

[dmadmin@cs-0 ~]$ # Definition of parameters, to test the log in

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ inline_test_account="adm_morgan"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Trying to log in to the Repository with an inline account

[dmadmin@cs-0 ~]$ iapi ${repo} -U${inline_test_account}

Please enter password for adm_morgan:

OpenText Documentum iapi - Interactive API interface

Copyright (c) 2025. OpenText Corporation

All rights reserved.

Client Library Release 25.4.0000.0134

Connecting to Server using docbase dbi01

[DM_SESSION_E_AUTH_FAIL]error: "Authentication failed for user adm_morgan with docbase dbi01."

[DM_LICENSE_E_USER_NOT_FOUND_OR_DUPLICATE]error: "User adm_morgan not found in OTDS or duplicate user exists."

Could not connect

[dmadmin@cs-0 ~]$

This is because the inline account isn’t yet present inside OTDS. We did create an inline Partition, but it’s currently empty.

If you try to log in with an LDAP Partition account (e.g. “morgan“), then you will get a slightly different error message, since the account does exist in OTDS, but it’s in a Partition that we specifically didn’t allocate to the license yet:

[dmadmin@cs-0 ~]$ # Definition of parameters, to test the log in

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ ldap_test_account="morgan"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Trying to log in to the Repository with an LDAP account

[dmadmin@cs-0 ~]$ iapi ${repo} -U${ldap_test_account}

Please enter password for morgan:

OpenText Documentum iapi - Interactive API interface

Copyright (c) 2025. OpenText Corporation

All rights reserved.

Client Library Release 25.4.0000.0134

Connecting to Server using docbase dbi01

[DM_SESSION_E_AUTH_FAIL]error: "Authentication failed for user morgan with docbase dbi01."

[DM_LICENSE_E_USER_NO_LICENSE_ALLOCATED]error: "No License allocated for current user."

Could not connect

[dmadmin@cs-0 ~]$

At least, the error messages are accurate!

7. Dctm Server – migrating inline accounts to OTDS

As mentioned, the very last step is therefore to get all Documentum inline accounts created in OTDS. You can, obviously, create them all manually inside the inline Partition (“dctminline”), but if you have hundreds or even thousands of such accounts, it’s going to take hours (and probably lead to a lot of human errors on such a repetitive task). For that purpose, OpenText provides a migration utility, which you can use in this way:

[dmadmin@cs-0 ~]$ # Definition of parameters

[dmadmin@cs-0 ~]$ install_owner="dmadmin"

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ otds_inline_partition="dctminline"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Execution of the migration utility

[dmadmin@cs-0 ~]$ cd $DOCUMENTUM/dfc

[dmadmin@cs-0 dfc]$ java -Ddfc.properties.file=../config/dfc.properties -cp .:dfc.jar com.documentum.fc.tools.MigrateInlineUsersToOtds ${repo} ${install_owner} xxx ${otds_inline_partition}

...

imported user to otds: dmc_wdk_presets_owner

imported user to otds: dmc_wdk_preferences_owner

imported user to otds: dm_bof_registry

imported user to otds: d2ssouser

imported user to otds: dm_fulltext_index_user

imported user to otds: adm_morgan

...

[dmadmin@cs-0 dfc]$
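To keep a record of what was migrated, the utility output can be captured and the imported accounts extracted, for example to compare their number with the users listed in the “dctminline” partition. The helpers below are hypothetical and only rely on the “imported user to otds: <name>” lines shown above; the log file name is a placeholder.

```shell
# Capture the output first, e.g. (hypothetical log name):
#   java -Ddfc.properties.file=../config/dfc.properties -cp .:dfc.jar \
#     com.documentum.fc.tools.MigrateInlineUsersToOtds ${repo} ${install_owner} xxx \
#     ${otds_inline_partition} 2>&1 | tee migrate_inline_users.log

# List the accounts reported as imported, sorted and de-duplicated.
imported_users() {
  sed -n 's/^imported user to otds: //p' "$1" | sort -u
}

# Count them (prints 0 when nothing was imported).
imported_user_count() {
  imported_users "$1" | awk 'END { print NR }'
}
```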

If you go back to OTDS Admin UI, you should now be able to see all users being present inside the inline Partition (“dctminline“). Trying to log in with your inline account should now be working properly:

[dmadmin@cs-0 ~]$ # Definition of parameters, to test the log in

[dmadmin@cs-0 ~]$ repo="dbi01"

[dmadmin@cs-0 ~]$ inline_test_account="adm_morgan"

[dmadmin@cs-0 ~]$

[dmadmin@cs-0 ~]$ # Trying to log in to the Repository with an inline account

[dmadmin@cs-0 ~]$ iapi ${repo} -U${inline_test_account}

Please enter password for adm_morgan:

OpenText Documentum iapi - Interactive API interface

Copyright (c) 2025. OpenText Corporation

All rights reserved.

Client Library Release 25.4.0000.0134

Connecting to Server using docbase dbi01

[DM_SESSION_I_SESSION_START]info: "Session 012345678000aa2d started for user adm_morgan."

Connected to OpenText Documentum Server running Release 25.4.0000.0143 Linux64.Oracle

Session id is s0

API> exit

Bye

[dmadmin@cs-0 ~]$

In the OTDS Admin UI, you can see the current license usage. To do so, go to “License Keys” and, for “dctmlicense”, click on “Actions” and then “View Counters”. This should now display “1” under “Unit Usage”, as the account has taken a license from the pool (“Reserved Seat”). If needed, you can proceed with the allocation of other Partitions (or users/groups). Please note that logging in with one of the “exceptions” (e.g. “dmadmin”) shouldn’t consume a license.

The article Dctm – Managing licenses through OTDS first appeared on dbi Blog.

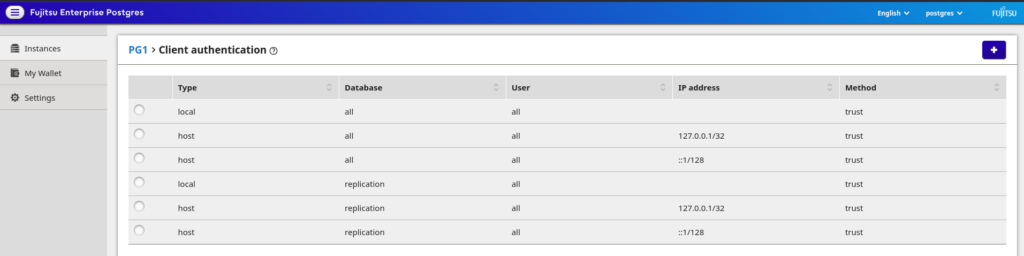

Commercial PostgreSQL distributions with TDE (1) Fujitsu Enterprise Postgres (2) TDE

In the last post we set up Fujitsu Enterprise Postgres, so we’re now ready to look at how TDE is implemented in this distribution of PostgreSQL. Here, the unit of encryption is a tablespace, but before we can encrypt anything we need to create a master encryption key.

The location where the keystore gets created is specified by the “keystore_location” parameter, a parameter not known in community PostgreSQL:

postgres@rhel9-latest:/home/postgres/ [fe18] mkdir /u02/pgdata/keystore

postgres@rhel9-latest:/home/postgres/ [fe18] psql -c "alter system set keystore_location = '/u02/pgdata/keystore'"

ALTER SYSTEM

postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl restart fe-postgres-1.service

postgres@rhel9-latest:/home/postgres/ [fe18] psql -c "show keystore_location"

keystore_location

----------------------

/u02/pgdata/keystore

(1 row)

Once that is ready we need to create the master encryption key using a function called “pgx_set_master_key”:

postgres@rhel9-latest:/home/postgres/ [fe18] psql

psql (18.0)

Type "help" for help.

postgres=# \df pgx_set_master_key

List of functions

Schema | Name | Result data type | Argument data types | Type

------------+--------------------+------------------+---------------------+------

pg_catalog | pgx_set_master_key | void | text | func

(1 row)

postgres=# SELECT pgx_set_master_key('secret');

ERROR: passphrase is too short or too long

DETAIL: The length of the passphrase must be between 8 and 200 bytes.

postgres=# SELECT pgx_set_master_key('secret123');

pgx_set_master_key

--------------------

(1 row)
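The error above gives the accepted range: 8 to 200 bytes. When the passphrase comes from a script, a pre-check can fail early with a clearer message. This is a minimal sketch with a hypothetical function name; it assumes the server counts the bytes of the UTF-8 encoded passphrase, which can differ from the number of characters:

```python
def check_passphrase(passphrase, encoding="utf-8"):
    """Raise ValueError unless the encoded passphrase is between 8 and 200 bytes."""
    size = len(passphrase.encode(encoding))
    if not 8 <= size <= 200:
        raise ValueError(f"passphrase is {size} bytes; it must be between 8 and 200 bytes")

check_passphrase("secret123")  # 9 bytes: accepted

try:
    check_passphrase("secret")  # 6 bytes: rejected, like the first attempt above
except ValueError as exc:
    print(exc)
```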

This created a new keystore file in the location specified above, which is, of course, not human-readable:

postgres=# \! ls -la /u02/pgdata/keystore

total 4

drwxr-xr-x. 2 postgres postgres 25 Jan 28 10:43 .

drwxr-xr-x. 4 postgres postgres 34 Jan 28 10:39 ..

-rw-------. 1 postgres postgres 928 Jan 28 10:43 keystore.ks

postgres=# \! strings /u02/pgdata/keystore/keystore.ks

KSTR

@NA\

Io BS

a!]I

>yu;

2r<:4

G)n%j

6wE"

@{OT

ym&M]

@1l'z

}5>,

The advantage of implementing TDE at the tablespace level rather than for the whole instance is that we can still restart the instance without providing the master encryption key:

postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl restart fe-postgres-1.service

postgres@rhel9-latest:/home/postgres/ [fe18] psql

psql (18.0)

Type "help" for help.

postgres=#

Before we can encrypt anything we need to open the keystore using another function called “pgx_open_keystore”:

postgres=# \df pgx_open_keystore

List of functions

Schema | Name | Result data type | Argument data types | Type

------------+-------------------+------------------+---------------------+------

pg_catalog | pgx_open_keystore | void | text | func

(1 row)

postgres=# select pgx_open_keystore('secret123');

pgx_open_keystore

-------------------

(1 row)

Once the keystore is open we can create an encrypted tablespace and put some data inside:

postgres=# \! mkdir /var/tmp/tbsencr

postgres=# create tablespace tbsencr location '/var/tmp/tbsencr' with (tablespace_encryption_algorithm = 'AES256' );

CREATE TABLESPACE

postgres=# \dbs+

List of tablespaces

Name | Owner | Location | Access privileges | Options | Size | Description

------------+----------+------------------+-------------------+------------------------------------------+-----------+-------------

pg_default | postgres | | | | 23 MB |

pg_global | postgres | | | | 790 kB |

tbsencr | postgres | /var/tmp/tbsencr | | {tablespace_encryption_algorithm=AES256} | 928 bytes |

(3 rows)

postgres=# create table t1 ( a int, b text ) tablespace tbsencr;

CREATE TABLE

postgres=# insert into t1 select i, i::text from generate_series(1,100) i;

INSERT 0 100

Trying to read from that table without opening the keystore will of course fail:

postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl restart fe-postgres-1.service

postgres@rhel9-latest:/home/postgres/ [fe18] psql -c "select * from t1"

ERROR: could not encrypt or decrypt data because the keystore is not open

HINT: Open the existing keystore, or set the master encryption key to create and open a new keystore

Besides the pgx_* functions, there are also pgx_* catalog views that provide information not available in community PostgreSQL, e.g. for listing the tablespaces and their encryption algorithm:

postgres=# select spcname, spcencalgo from pg_tablespace ts, pgx_tablespaces tsx where ts.oid = tsx.spctablespace;

spcname | spcencalgo

------------+------------

pg_default | none

pg_global | none

tbsencr | AES256

(3 rows)

As with the Oracle wallet, there is an option to open the keystore automatically:

postgres@rhel9-latest:/home/postgres/ [fe18] pgx_keystore --enable-auto-open /u02/pgdata/keystore/keystore.ks

Enter passphrase:

auto-open of the keystore has been enabled

Keystore management, including the auto-open options, is well explained in the official documentation.

By the way: if you dump the data with pg_dump while the keystore is open, the result is an unencrypted dump:

postgres@rhel9-latest:/home/postgres/ [fe18] pg_dump | grep -A 3 COPY

COPY public.t (a) FROM stdin;

1

\.

--

COPY public.t1 (a, b) FROM stdin;

1 1

2 2

3 3
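To check which tables ended up in clear text in such a dump, a minimal Python sketch can count the data rows of each COPY block. It assumes a plain-format dump like the one above (a “COPY … FROM stdin;” line, the rows, then a “\.” terminator) and unquoted table names; the function name is hypothetical:

```python
import re

# Start of a data section, e.g. "COPY public.t1 (a, b) FROM stdin;"
COPY_START = re.compile(r"^COPY (\S+)\s.*FROM stdin;$")

def plaintext_tables(dump_text):
    """Map each table with a COPY block to the number of data rows dumped for it."""
    counts = {}
    current = None
    for line in dump_text.splitlines():
        if current is None:
            match = COPY_START.match(line)
            if match:
                current = match.group(1)
                counts[current] = 0
        elif line == "\\.":
            current = None  # end-of-data marker of the COPY block
        else:
            counts[current] += 1
    return counts

sample = (
    "COPY public.t (a) FROM stdin;\n1\n\\.\n\n"
    "COPY public.t1 (a, b) FROM stdin;\n1\t1\n2\t2\n3\t3\n\\.\n"
)
print(plaintext_tables(sample))  # {'public.t': 1, 'public.t1': 3}
```

On a real dump, read the file produced by something like pg_dump > dump.sql and pass its content to plaintext_tables.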

There is also the pgx_dmpall command, which can be used to back up the whole instance, but this is out of scope for this post.

The article Commercial PostgreSQL distributions with TDE (1) Fujitsu Enterprise Postgres (2) TDE first appeared on dbi Blog.

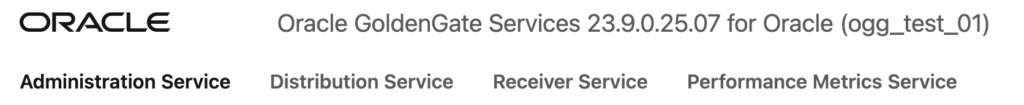

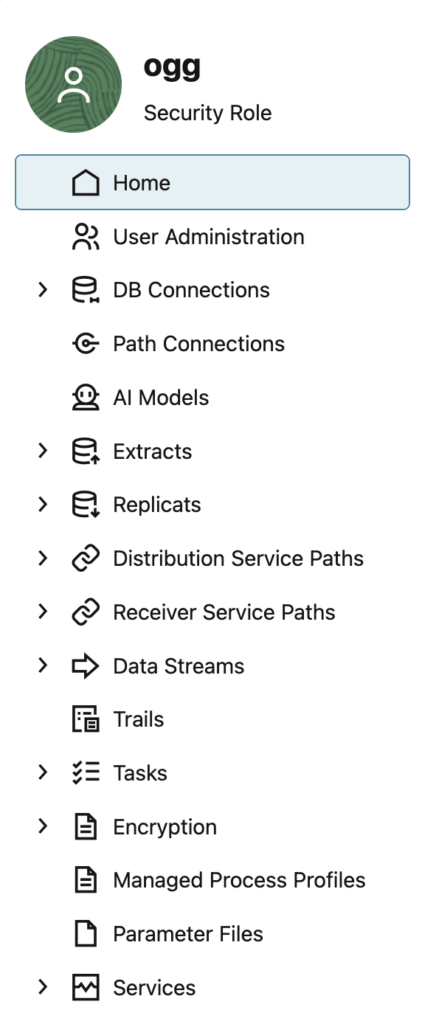

GoldenGate REST API basics with Python

The Oracle GoldenGate REST API has been around for quite some time now, but I have yet to see it used in practice at customers. Every time I tried to introduce it, it came across as a novelty, mainly because DBAs tend to dislike automation, but also for technical reasons. Even though the REST API was introduced in GoldenGate 12c with the Microservices Architecture, some customers are still stuck with the Classic Architecture. As a reminder, the Classic Architecture is completely absent from the 23ai version (plan your migration now!).

That being said, where to start when using the GoldenGate REST API? Oracle has some basic documentation using curl, but I want to take things a bit further by leveraging the power of Python, starting with basic requests.

If you really have no idea what a REST API is, there are tons of excellent articles online for you to get into it. Getting back to the basics, in Python, the requests module will handle the API calls for us.

The most basic REST API call would look like what I show below. Adapt the credentials, and the service manager host and port.

import requests

url = "http://vmogg:7809/services"

auth = ("ogg_user", "ogg_password")

result = requests.get(url, auth=auth)
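The auth tuple above tells requests to use HTTP Basic authentication. To see what actually goes over the wire, here is a standard-library sketch (no requests) that builds an equivalent request without sending it. It is only an illustration, assuming ASCII credentials, and the function name is hypothetical:

```python
import base64
import urllib.request

def basic_auth_request(url, username, password):
    """Build a GET request carrying an HTTP Basic Authorization header."""
    credentials = f"{username}:{password}".encode("utf-8")  # identical to ASCII for ASCII credentials
    token = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"}, method="GET")

req = basic_auth_request("http://vmogg:7809/services", "ogg_user", "ogg_password")
print(req.get_header("Authorization"))
# Sending it requires the service manager to be reachable:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```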

So far, nothing fascinating, but the 200 return code below tells us that the call succeeded. The ok flag gives us the status of the call (it is True for any status code below 400):

>>> result

<Response [200]>

>>> result.ok

True

To get the actual data returned by the API, use the json method:

>>> result.json()

{'$schema': 'api:versions', 'links': [{'rel': 'current', 'href': 'http://vmogg:7809/services/v2', 'mediaType': 'application/json'}, {'rel': 'canonical', 'href': 'http://vmogg:7809/services', 'mediaType': 'application/json'}, {'rel': 'self', 'href': 'http://vmogg:7809/services', 'mediaType': 'application/json'}], 'items': [{'$schema': 'api:version', 'version': 'v2', 'isLatest': True, 'lifecycle': 'active', 'catalog': {'links': [{'rel': 'canonical', 'href': 'http://vmogg:7809/services/v2/metadata-catalog', 'mediaType': 'application/json'}]}}]}

Up to this point, you are successfully making API calls to your GoldenGate service manager, but nothing more. What you need to change is the URL, and more specifically the endpoint.

/services is called an endpoint, and the full list of endpoints can be found in the GoldenGate documentation. Not all of them are useful, but when looking for a specific GoldenGate action, this endpoint library is a good starting point.
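Since only the endpoint changes from one call to the next, it can help to keep the base URL fixed and join endpoints to it. A minimal sketch with a hypothetical helper, assuming endpoints are given as absolute paths like the ones in this post:

```python
BASE_URL = "http://vmogg:7809"  # service manager host and port used in this post

def endpoint_url(base_url, endpoint):
    """Join a base URL and an endpoint path such as /services/v2/deployments."""
    if not endpoint.startswith("/"):
        raise ValueError(f"endpoint must start with '/': {endpoint!r}")
    return base_url.rstrip("/") + endpoint

for endpoint in ("/services", "/services/v2/deployments"):
    print(endpoint_url(BASE_URL, endpoint))
```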

For instance, to get the list of all the deployments associated with your service manager, use the /services/v2/deployments endpoint. If you get an OGG-12064 error, it means that the credentials are not correct (they were technically not needed for the first example).

>>> url = "http://vmogg:7809/services/v2/deployments"

>>> requests.get(url, auth=auth).json()

{

'$schema': 'api:standardResponse',

'links': [

{'rel': 'canonical', 'href': 'http://vmogg:7809/services/v2/deployments', 'mediaType': 'application/json'},

{'rel': 'self', 'href': 'http://vmogg:7809/services/v2/deployments', 'mediaType': 'application/json'},

{'rel': 'describedby', 'href': 'http://vmogg:7809/services/v2/metadata-catalog/versionDeployments', 'mediaType': 'application/schema+json'}

],

'messages': [],

'response': {

'$schema': 'ogg:collection',

'items': [

{'links': [{'rel': 'parent', 'href': 'http://vmogg:7809/services/v2/deployments', 'mediaType': 'application/json'}, {'rel': 'canonical', 'href': 'http://vmogg:7809/services/v2/deployments/ServiceManager', 'mediaType': 'application/json'}], '$schema': 'ogg:collectionItem', 'name': 'ServiceManager', 'status': 'running'},

{'links': [{'rel': 'parent', 'href': 'http://vmogg:7809/services/v2/deployments', 'mediaType': 'application/json'}, {'rel': 'canonical', 'href': 'http://vmogg:7809/services/v2/deployments/ogg_test_01', 'mediaType': 'application/json'}], '$schema': 'ogg:collectionItem', 'name': 'ogg_test_01', 'status': 'running'},

{'links': [{'rel': 'parent', 'href': 'http://vmogg:7809/services/v2/deployments', 'mediaType': 'application/json'}, {'rel': 'canonical', 'href': 'http://vmogg:7809/services/v2/deployments/ogg_test_02', 'mediaType': 'application/json'}], '$schema': 'ogg:collectionItem', 'name': 'ogg_test_02', 'status': 'running'}

]

}

}

Already, we’re starting to get a bit lost in the output (even though I cleaned it up for you). Without going too much into the details, when the call succeeds, we are interested in the response.items object, discarding the $schema and links objects. When the call fails, let’s just display the output for now.

def parse(response):

    # Return the JSON body, or the raw text when the body isn't JSON

    try:

        return response.json()

    except ValueError:

        return response.text

def extract_main(result):

    # Keep only response.items, without the links and $schema noise

    if not isinstance(result, dict):

        return result

    resp = result.get("response", result)

    if "items" not in resp:

        return resp

    exclude = {"links", "$schema"}

    return [{k: v for k, v in i.items() if k not in exclude} for i in resp["items"]]

result = requests.get(url, auth=auth)

if result.ok:

    response = parse(result)

    main_response = extract_main(response)

else:

    # On failure, just display what the API returned

    print(result.status_code, parse(result))

We now have a more human-friendly output for our API calls! For this specific example, we only retrieve the deployment names and their status.

>>> main_response

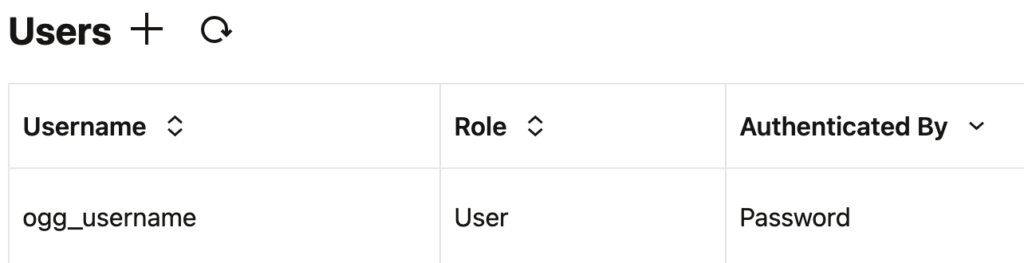

[{'name': 'ServiceManager', 'status': 'running'}, {'name': 'ogg_test_01', 'status': 'running'}, {'name': 'ogg_test_02', 'status': 'running'}]Some API calls require you to send data instead of just receiving it. A common example is the creation of a GoldenGate user. Both the role and the username are part of the endpoint. Using the post method instead of get, we will give the user settings (the password, essentially) in the params argument:

import requests

role = "User"

user = "ogg_username"

url = f"http://vmogg:7809/services/v2/authorizations/{role}/{user}"

auth = ("ogg_user", "ogg_password")

data = {

"credential": "your_password"

}

result = requests.post(url, auth=auth, json=data)
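Because the role and the username are embedded in the URL path, a value containing spaces or slashes would produce a different (or invalid) endpoint. As a defensive measure when names come from external input, a hypothetical helper can percent-encode each path segment with urllib.parse.quote. Whether GoldenGate accepts such usernames at all is not covered here:

```python
from urllib.parse import quote

def authorization_url(base_url, role, user):
    """Build /services/v2/authorizations/{role}/{user}, percent-encoding both path segments."""
    # safe="" makes quote() encode "/" too, so a value cannot add extra path segments
    role_segment = quote(role, safe="")
    user_segment = quote(user, safe="")
    return f"{base_url}/services/v2/authorizations/{role_segment}/{user_segment}"

print(authorization_url("http://vmogg:7809", "User", "ogg_username"))
```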

To check if the user was created, you can go to the Web UI or check the ok flag again.

>>> result.ok

True

Here, the API doesn’t provide us with much information when the call succeeds:

>>> result.text

'{"$schema":"api:standardResponse","links":[{"rel":"canonical","href":"http://vmogg:7809/services/v2/authorizations/User/ogg_username","mediaType":"application/json"},{"rel":"self","href":"http://vmogg:7809/services/v2/authorizations/User/ogg_username","mediaType":"application/json"}],"messages":[]}'

OGGRestAPI Python class

When dealing with the REST API, you will quickly feel the need for a standard client object that handles everything for you. A very basic class, saved in an ogg_rest_api.py script, could look like this:

import requests

import urllib3

class OGGRestAPI:

    def __init__(self, url, username=None, password=None, ca_cert=None, verify_ssl=True):

        """

        Initialize Oracle GoldenGate REST API client.

        :param url: Base URL of the OGG REST API. It can be:

            'http(s)://hostname:port' without NGINX reverse proxy,

            'https://nginx_host:nginx_port' with NGINX reverse proxy.

        :param username: service username

        :param password: service password

        :param ca_cert: path to a trusted CA cert (for self-signed certs)

        :param verify_ssl: bool, whether to verify SSL certs

        """

        self.base_url = url

        self.username = username

        self.auth = (self.username, password)

        self.headers = {'Accept': 'application/json', 'Content-Type': 'application/json'}

        # requests' "verify" accepts either a bool or the path to a CA bundle

        self.verify_ssl = ca_cert if ca_cert else verify_ssl

        if self.verify_ssl is False and self.base_url.startswith('https'):

            # Disable InsecureRequestWarning if verification is off

            urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

    def _request(self, method, path, *, params=None, data=None, extract=True):

        # Only the endpoint is passed in; the base URL is prepended here

        url = f'{self.base_url}{path}'

        response = requests.request(

            method,

            url,

            auth=self.auth,

            headers=self.headers,

            params=params,

            json=data,

            verify=self.verify_ssl

        )

        self._check_response(response)

        result = self._parse(response)

        return self._extract_main(result) if extract else result

    def _get(self, path, params=None, extract=True):

        return self._request('GET', path, params=params, extract=extract)

    def _post(self, path, data=None, extract=True):

        return self._request('POST', path, data=data, extract=extract)

    def _put(self, path, data=None, extract=True):

        return self._request('PUT', path, data=data, extract=extract)

    def _patch(self, path, data=None, extract=True):

        return self._request('PATCH', path, data=data, extract=extract)

    def _delete(self, path, extract=True):

        return self._request('DELETE', path, extract=extract)

    def _check_response(self, response):

        # Print the API's error message, then raise requests.HTTPError

        if not response.ok:

            print(f'HTTP {response.status_code}: {response.text}')

            response.raise_for_status()

    def _parse(self, response):

        try:

            return response.json()

        except ValueError:

            return response.text

    def _extract_main(self, result):

        if not isinstance(result, dict):

            return result

        resp = result.get('response', result)

        if 'items' not in resp:

            return resp

        exclude = {'links', '$schema'}

        return [{k: v for k, v in i.items() if k not in exclude} for i in resp['items']]

With this, we can connect to the API and send the same GET request as before to retrieve all deployments. This time, we only provide the endpoint, not the whole URL.

>>> from ogg_rest_api import OGGRestAPI

>>> client_blog = OGGRestAPI(url="http://vmogg:7809", username="ogg", password="ogg")

>>> client_blog._get("/services/v2/deployments")

[{'name': 'ServiceManager', 'status': 'running'}, {'name': 'ogg_test_01', 'status': 'running'}, {'name': 'ogg_test_02', 'status': 'running'}]

As you can imagine, all GoldenGate API functionalities can be integrated into this class, enhancing GoldenGate management and monitoring. Next time you want to automate your GoldenGate processes, please consider using this REST API!
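As an example of the monitoring use case, a hypothetical helper can flag any deployment whose status is not “running” in the list returned by client_blog._get("/services/v2/deployments"). It relies only on the name and status keys shown in the output above:

```python
def not_running(deployments):
    """Return the names of deployments whose status is anything other than 'running'."""
    return [d["name"] for d in deployments if d.get("status") != "running"]

# Same structure as the output above; with a live client, pass
# client_blog._get("/services/v2/deployments") instead.
deployments = [
    {"name": "ServiceManager", "status": "running"},
    {"name": "ogg_test_01", "status": "running"},
    {"name": "ogg_test_02", "status": "running"},
]

stopped = not_running(deployments)
if stopped:
    print("Deployments needing attention:", ", ".join(stopped))
else:
    print("All deployments are running")
```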

REST API calls to a secured GoldenGate deployment

If your GoldenGate deployment is secured, you can still use this Python class; the requests module will handle it for you. I give three examples below: a certificate issued by a trusted CA, a self-signed certificate whose root CA is provided, and disabled verification:

# Checking CA automatically (Trusted CA)

>>> client_blog = OGGRestAPI(url="https://vmogg:7809", username="ogg", password="ogg")

# Providing RootCA (self-signed certificate)

>>> client_blog = OGGRestAPI(url="https://vmogg:7809", username="ogg", password="ogg", ca_cert="/path/to/RootCA_cert.pem", verify_ssl=True)

# Disabling verification

>>> client_blog = OGGRestAPI(url="https://vmogg:7809", username="ogg", password="ogg", verify_ssl=False)

The article GoldenGate REST API basics with Python first appeared on dbi Blog.

Thoughts on the M-Files GKO 2026 in Lisbon

My colleague, David Hueber, and I had the pleasure of attending the M-Files Global Kick-Off 2026 (GKO 2026) in Lisbon, Portugal, this week. The weather was unfortunate, but that’s not what matters.

From January 26 to January 29, this event brought together M-Files employees and partners from around the world for the first time. They aligned their strategy, exchanged views, and motivated each other to help our customers achieve even more with the solution.

Of course, a lot of the information is still confidential, so I won’t reveal any big secrets in this article. However, there are always things to share.

Now more than ever, partners are the cornerstone of the M-Files strategy. We are not just “executors”; we are an extension of their vision and real players on the team, and for me that makes a big difference.

From strategic sessions to deep dives into product vision, GKO showcased once again why M‑Files continues to be a leader in intelligent information management.

Key Themes: AI, Metadata,…

It’s no surprise that this year is a logical continuation of the previous ones. Artificial intelligence is at the heart of the solution. Metadata increases the accuracy of AI results, and the recently introduced workspaces provide more context for your data. “Context” is the key word for this year!

A major focus this year is the evolving role of AI in document management, and how M-Files continues to push the boundaries with metadata‑driven automation. The momentum is building around:

- Enhancing information discovery

- Improving knowledge work productivity

- Scaling AI-powered document processes

Throughout the sessions, we were given a sneak preview of M-Files’ strategic direction for 2026. The presentations and discussions particularly resonated with our daily experience in the field, where we see organizations seeking clarity, automation, and structure in an information-overloaded world.

Collaboration with Colleagues

Attending with David made the experience even more memorable. Yep, he’s my boss, so I have to suck up a little.

Together, we engaged with peers from different regions, compared our consulting practice experiences, and aligned ourselves more closely with M-Files’ strategic priorities. Events like GKO demonstrate the importance of community and collaboration in developing effective ECM and information management solutions.

Ready for 2026

GKO 2026 is almost over, and it left us inspired, aligned, and energized to bring even more value to our clients this year. With M-Files continuing to innovate at a rapid pace, we’re excited to translate these insights into impactful ECM solutions across our projects.

Stay tuned, more reflections and deep dives into M-Files and intelligent content management are on the way!

Feel free to ask us any digitalization questions.

The article Thoughts on the M-Files GKO 2026 in Lisbon first appeared on dbi Blog.

SQL Server 2022 CU23: Database Mail is broken, but your alerts shouldn’t be

“One single alerting email is missing, and all your monitoring feels deserted.”

This is the harsh lesson many SQL Server DBAs learned the hard way this week. If your inbox has been suspiciously quiet since your last maintenance window, don’t celebrate just yet: your instance might have simply lost its voice.

CU23: The poisoned gift

Cumulative Update 23 for SQL Server 2022 (KB5074819), released on January 15, 2026, quickly became the “hot” topic on technical forums. The reason? A major regression that purely and simply breaks Database Mail:

Could not load file or assembly 'Microsoft.SqlServer.DatabaseMail.XEvents, Version=17.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91' or one of its dependencies. The system cannot find the file specified.

This error message is only the tip of the iceberg. Beyond the technical crash, it is the silence of your monitoring that should worry you. The real danger here is that while the mailing engine fails, your SQL Agent jobs don’t necessarily report an error. Since the mail items are never even processed, they don’t appear in the sysmail_faileditems or sysmail_unsentitems views with typical error statuses. For most monitoring configurations, this means you stay completely unaware that your instance has lost its voice: you aren’t getting alerts, but everything looks fine on the surface.

We often tend to downplay the importance of Database Mail, treating it as a minor utility. Yet, for many of us, it is the backbone of our monitoring. From SQL Agent job failure notifications to corruption alerts or disk space thresholds, Database Mail is a critical component. When it fails, your infrastructure visibility evaporates, leaving you flying blind in a rather uncomfortable technical fog.

Rollback or Status Quo?

While waiting for an official fix, the best way to protect your production remains a rollback (guidelines available here). Uninstalling a CU is never an easy task: it implies additional downtime and a fair amount of stress. However, staying in total darkness regarding your servers’ health is a much higher risk. Microsoft has promised a hotfix “soon”, but in the meantime, a server that reboots is better than a server that suffers in silence.

If a rollback is impossible due to SLAs or internal policies, remember that not all is lost. Even if the emails aren’t being sent, the information itself isn’t volatile. SQL Server continues to conscientiously log everything it wants to tell you inside the msdb system tables.

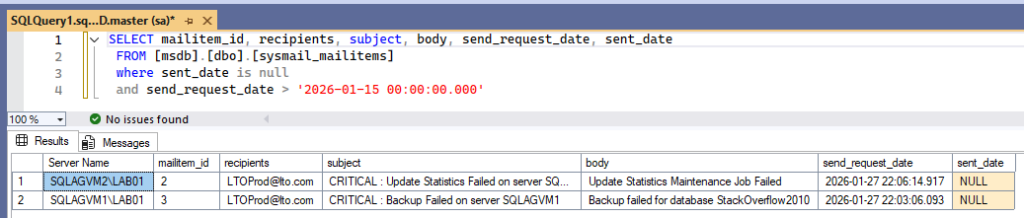

You can query the following table to keep an eye on the alerts piling up:

SELECT mailitem_id, recipients, subject, body, send_request_date, sent_date

FROM [msdb].[dbo].[sysmail_mailitems]

where sent_date is null

and send_request_date > '2026-01-15 00:00:00.000'

It’s less stylish than a push notification, but it’s your final safety net to ensure your Log Shipping hasn’t flatlined or your backups haven’t devoured the last available Gigabyte.

How to Build a Solid “Home-Made” Quick Fix

If you have an SMTP gateway reachable via PowerShell, you can bridge the gap using the native Send-MailMessage cmdlet. This approach effectively bypasses the broken DatabaseMail.exe by using the PowerShell network stack to ship your alerts.

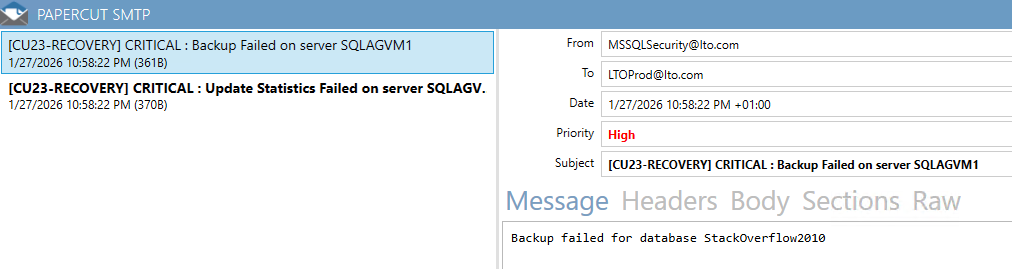

In a Lab environment, you likely don’t have a full-blown Exchange server. To test this script, I recommend using Papercut. It acts as a local SMTP gateway that catches any outgoing mail and displays it in a UI without actually sending it to the internet. Simply run the Papercut executable, and it will listen on localhost:25.
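Before scheduling anything, it can help to confirm that the gateway actually answers. A minimal Python sketch using the standard smtplib module, assuming Papercut (or any SMTP server) listens on localhost:25; it only opens a session and sends NOOP, so no mail is delivered:

```python
import smtplib

def smtp_ready(host="localhost", port=25, timeout=2.0):
    """Return True if an SMTP server on host:port accepts a session and answers NOOP with 250."""
    try:
        with smtplib.SMTP(host, port, timeout=timeout) as smtp:
            code, _ = smtp.noop()
            return code == 250
    except OSError:  # refused connections and timeouts; smtplib.SMTPException is also an OSError
        return False

print("SMTP gateway ready:", smtp_ready())
```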

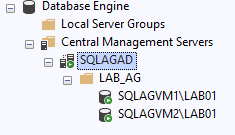

For the purpose of this article’s demonstration, the following Central Management Server (CMS) setup is used:

Run the following script as a scheduled task every 15 minutes to replay any messages stuck in the msdb queue:

Import-Module -Name dbatools;

Set-DbatoolsConfig -FullName 'sql.connection.trustcert' -Value $true;

$cmsServer = "SQLAGAD\LAB01"

$cmsGroupName = "LAB_AG"

$smtpServer = "localhost"

try {

$instances = Get-DbaRegServer -SqlInstance $cmsServer -ErrorAction Stop |

Where-Object group -match $cmsGroupName;

if (-not $instances) {

Write-Warning "No instances found in the group '$cmsGroupName' on $cmsServer."

return

}

foreach ($server in $instances) {

Write-Host "Processing: $($server.Name)" -ForegroundColor Cyan

$query = "SELECT SERVERPROPERTY('ServerName') SQLInstance, * FROM [msdb].[dbo].[sysmail_mailitems] where sent_date is null and send_request_date > DATEADD(minute, -15, GETDATE())"

$blockedMails = Invoke-DbaQuery -SqlInstance $server.Name -Database msdb -Query $query -ErrorAction SilentlyContinue

if ($blockedMails) {

foreach ($mail in $blockedMails) {

Send-MailMessage -SmtpServer $smtpServer `

-From "MSSQLSecurity@lto.com" `

-To ($mail.recipients -split ';' | ForEach-Object { $_.Trim() } | Where-Object { $_ }) `

-Subject "[CU23-RECOVERY] $($mail.subject)" `

-Body $mail.body `

-Priority High

Write-Host "Successfully re-sent: $($mail.subject)" -ForegroundColor Green

}

}

}

}

catch {

Write-Error "Error accessing the CMS: $($_.Exception.Message)"

}

Resulting alerts in the Papercut mailbox:

By leveraging the dbatools module and the native Send-MailMessage cmdlet, we can create a “recovery bridge.” The script is designed to be run as a scheduled task (e.g., every 15 minutes). It scans your entire infrastructure via your CMS, identifies any messages that failed to send in the last 15-minute window, and replays them through PowerShell’s network stack instead of SQL Server’s.
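Where PowerShell is not an option, the replay step can be sketched with Python’s standard library instead. This is an alternative, not the method used above; the function names are hypothetical, and the sender address is reused from the script. It also splits the recipients column, which holds a semicolon-separated list of addresses:

```python
import smtplib
from email.message import EmailMessage

def build_replay_message(mail, sender="MSSQLSecurity@lto.com"):
    """Rebuild an unsent sysmail_mailitems row as an email with the [CU23-RECOVERY] prefix."""
    msg = EmailMessage()
    msg["From"] = sender
    # The recipients column holds a semicolon-separated list of addresses
    recipients = [r.strip() for r in mail["recipients"].split(";") if r.strip()]
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = f"[CU23-RECOVERY] {mail['subject']}"
    msg.set_content(mail["body"] or "")
    return msg

def replay(mail, smtp_server="localhost"):
    """Send the rebuilt message through the SMTP gateway (Papercut in the lab)."""
    with smtplib.SMTP(smtp_server) as smtp:
        smtp.send_message(build_replay_message(mail))

row = {"recipients": "dba@example.com; oncall@example.com;", "subject": "Job failed", "body": "Backup job failed."}
print(build_replay_message(row)["To"])
```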

Note on Data Integrity: You will notice that this script intentionally performs no UPDATE or DELETE operations on the msdb tables. We chose to treat the system database as a read-only ‘source of truth’ to avoid any further corruption or inconsistency while the instance is already in an unstable state.
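One consequence of the fixed DATEADD(minute, -15, GETDATE()) window is that it relies on the task running exactly every 15 minutes: if a run starts late, items requested just before its window are skipped, and if two runs are closer together, items can be sent twice. A hypothetical alternative, still without writing to msdb, is to store the timestamp of the last run outside SQL Server (e.g. in a file) and select only the items requested since then. The selection logic, sketched in Python on sample rows:

```python
from datetime import datetime, timedelta

def mails_to_replay(rows, last_run, now):
    """Select unsent items requested in the window (last_run, now].

    Passing the previous run's `now` as the next `last_run` gives contiguous,
    non-overlapping windows, regardless of schedule drift.
    """
    return [
        row for row in rows
        if row["sent_date"] is None and last_run < row["send_request_date"] <= now
    ]

now = datetime(2026, 1, 20, 12, 0)
rows = [
    {"mailitem_id": 1, "sent_date": None, "send_request_date": now - timedelta(minutes=5)},
    {"mailitem_id": 2, "sent_date": now, "send_request_date": now - timedelta(minutes=5)},
    {"mailitem_id": 3, "sent_date": None, "send_request_date": now - timedelta(minutes=20)},
]
last_run = now - timedelta(minutes=15)  # would be read back from the state file
print([row["mailitem_id"] for row in mails_to_replay(rows, last_run, now)])  # [1]
```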

Until Microsoft releases an official fix for this capricious CU23, this script acts as a life support system for your monitoring alerts. It is simple, effective, and most importantly, it prevents the Monday morning nightmare of discovering failed weekend backups that went unnoticed because the notification engine was silent.

So, if your SQL alerts prefer staying cozy in the msdb rather than doing their job, you now have the bridge to get them moving. Set up the scheduled task, run the script, and go grab a coffee: your emails are finally back on track.

Until the hotfix lands, keep your scripts ready!

The article SQL Server 2022 CU23: Database Mail is broken, but your alerts shouldn’t be first appeared on dbi Blog.

Commercial PostgreSQL distributions with TDE (1) Fujitsu Enterprise Postgres (1) Setup

While TDE (Transparent Data Encryption) is considered a checklist feature in some parts of the PostgreSQL community, this topic comes up again and again. The same is true for our customers: whether or not TDE technically makes sense, some simply must have it for reasons outside of their control, mostly due to legal requirements. As vanilla community PostgreSQL does not provide TDE, the only option is to use one of the commercial distributions of PostgreSQL. There are several out there and we’ll take a look at some of them, but today we’ll start with Fujitsu Enterprise Postgres. This will be a two-part blog series: the first post (this one) describes how to set up this version of PostgreSQL, and in the follow-up post we’ll look at what TDE looks like in this distribution and how it can be set up.

Fujitsu provides a 90-day trial version, for which you need to register here. Once you’ve done that, you should receive an email with a link to download the software (around 1.3 GB). The supported operating systems are RHEL 8 and 9 or SLES 15. If you are on Debian or anything based on Debian, such as Ubuntu, you can stop here, as it is not supported.

As usual, the operating system needs to be prepared; on RHEL 9, which I am using here, this means enabling the CodeReady Builder repository and installing the required packages:

[root@rhel9-latest ~]$ subscription-manager repos --enable codeready-builder-for-rhel-9-x86_64-rpms

[root@rhel9-latest ~]$ dnf install alsa-lib audit-libs bzip2-libs cyrus-sasl-lib gdb pcp-system-tools glibc glibc.i686 iputils libnsl2 libicu libgcc libmemcached-awesome libselinux libstdc++ libtool-ltdl libzstd llvm lz4-libs ncurses-libs net-tools nss-softokn-freebl pam perl-libs protobuf-c python3 rsync sudo sysstat tcl unzip xz-libs zlib perl

This requires the system to be subscribed to Red Hat, but you might also try Rocky Linux or AlmaLinux: both are RHEL clones, this should work as well, and they do not need a subscription.

In the Quick Start Guide an operating system user called “fepuser” is used, but we prefer to go with the standard “postgres” user, so:

[root@rhel9-latest ~]$ groupadd postgres

[root@rhel9-latest ~]$ useradd -g postgres -m postgres

[root@rhel9-latest ~]$ grep postgres /etc/sudoers

postgres ALL=(ALL) NOPASSWD: ALL

We also won’t use “/database/inst1” as PGDATA, but rather our standard location:

[root@rhel9-latest ~]$ su - postgres

[postgres@rhel9-latest ~]$ sudo mkdir -p /u02/pgdata

[postgres@rhel9-latest ~]$ sudo chown postgres:postgres /u02/pgdata/

[postgres@rhel9-latest ~]$ exit

As the installation files are provided as an ISO image, loop-mount it:

[root@rhel9-latest ~]$ mkdir /mnt/media

[root@rhel9-latest ~]$ mount -t iso9660 -r -o loop /root/ep-postgresae-linux-x64-1800-1.iso /mnt/media/

[root@rhel9-latest ~]$ ls -l /mnt/media/

total 161

dr-xr-xr-x. 4 root root 2048 Dec 4 03:24 CIR

dr-xr-xr-x. 3 root root 2048 May 18 2023 CLIENT64

dr-xr-xr-x. 4 root root 2048 Dec 4 03:24 COMMON

-r-xr-xr-x. 1 root root 4847 Aug 14 10:49 install.sh

dr-xr-xr-x. 5 root root 2048 Nov 5 08:47 manual

dr-xr-xr-x. 2 root root 24576 Oct 29 17:04 OSS_Licence

dr-xr-xr-x. 3 root root 2048 Jun 13 2016 parser

dr-xr-xr-x. 3 root root 2048 Mar 27 2024 PGBACKREST

dr-xr-xr-x. 3 root root 2048 May 18 2023 PGPOOL2

-r--r--r--. 1 root root 46882 Nov 28 02:39 readme.txt

-r--r--r--. 1 root root 53702 Nov 28 01:21 readme_utf8.txt

dr-xr-xr-x. 2 root root 2048 May 18 2023 sample

dr-xr-xr-x. 3 root root 2048 May 18 2023 SERVER

-r-xr-xr-x. 1 root root 12848 Aug 14 10:50 silent.sh

dr-xr-xr-x. 3 root root 2048 May 18 2023 WEBADMIN

That should be all we need to start the installation (there is a silent mode as well, but we’re not going to look into it in the scope of this post):

[root@rhel9-latest ~]$ cd /mnt/media/

[root@rhel9-latest media]$ ./install.sh

ERROR: The installation of Uninstall (middleware) ended abnormally.

Installation was ended abnormally.

… and that fails right away. Looking at the log file, it becomes clear that “tar” is missing (this is a minimal installation of RHEL 9, which apparently does not come with tar by default):

[root@rhel9-latest media]$ grep tar /var/log/fsep_SERVER64_media_1800_install.log

sub_envcheck.sh start

sub_cir_install.sh start

./CIR/Linux/cirinst.sh: line 562: tar: command not found

sub_envcheck.sh start

sub_cir_install.sh start

./CIR/Linux/cirinst.sh: line 562: tar: command not found
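The installer itself only reported that it ended abnormally; the actual cause was in the log. On a minimal system, a quick pre-flight check can catch such gaps before running install.sh. A minimal Python sketch, assuming Python 3 is available on the host (python3 is part of the package list above); the function name is hypothetical and the tool list is an assumption to extend for your environment:

```python
import shutil

def missing_tools(tools):
    """Return the commands from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]

# tar is the command the installer log complained about; extend the list as needed
missing = missing_tools(["tar"])
if missing:
    print("Install these before running ./install.sh:", " ".join(missing))
else:
    print("All required commands found")
```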

So, once more:

[root@rhel9-latest media]$ dnf install -y tar

[root@rhel9-latest media]$ ./install.sh

The following products can be installed:

1: Fujitsu Enterprise Postgres Advanced Edition (64bit) 18

2: Fujitsu Enterprise Postgres Client (64bit) 18

3: Fujitsu Enterprise Postgres WebAdmin 18

4: Fujitsu Enterprise Postgres Pgpool-II 18

5: Fujitsu Enterprise Postgres pgBackRest 18

Select the product to be installed.

Note: If installing the Server, it is strongly recommended to install WebAdmin.

To select multiple products, separate using commas (,). (Example: 1,2)

[number,all,q](The default value is 1,2,3): all

From the output above we can see that Fujitsu bundles Pgpool-II and pgBackRest with its distribution of PostgreSQL. We simply install “all”, and the installation runs smoothly to the end:

Selected product

Fujitsu Enterprise Postgres Advanced Edition (64bit) 18

Fujitsu Enterprise Postgres Client (64bit) 18

Fujitsu Enterprise Postgres WebAdmin 18

Fujitsu Enterprise Postgres Pgpool-II 18

Fujitsu Enterprise Postgres pgBackRest 18

Do you want to install the above product?

y: Proceed to the next step

n: Select the product again

q: Quit without installing

[y,n,q](The default value is y): y

==============================================================================

Product to be installed

Fujitsu Enterprise Postgres Advanced Edition (64bit) 18

New installation

Fujitsu Enterprise Postgres Client (64bit) 18

New installation

Fujitsu Enterprise Postgres WebAdmin 18

New installation

Fujitsu Enterprise Postgres Pgpool-II 18

New installation

Fujitsu Enterprise Postgres pgBackRest 18

New installation

Installation directory information

Fujitsu Enterprise Postgres Advanced Edition (64bit) 18

/opt/fsepv18server64

Fujitsu Enterprise Postgres Client (64bit) 18

/opt/fsepv18client64

Fujitsu Enterprise Postgres WebAdmin 18

/opt/fsepv18webadmin

Fujitsu Enterprise Postgres Pgpool-II 18

/opt/fsepv18pgpool-II

Fujitsu Enterprise Postgres pgBackRest 18

/opt/fsepv18pgbackrest

Setup information

WebAdmin setup: Execute

Web server port number: 27515

WebAdmin internal port number: 27516

Start installation using the above information?

y: Start the installation

c: Change the information

q: Quit without installing

[y,c,q](The default value is y): y

==============================================================================

Starting installation.

Fujitsu Enterprise Postgres Advanced Edition (64bit) 18 Installation

Installation is complete.

Fujitsu Enterprise Postgres Client (64bit) 18 Installation

Installation is complete.

Fujitsu Enterprise Postgres WebAdmin 18 Installation

Installation is complete.

Fujitsu Enterprise Postgres Pgpool-II 18 Installation

Installation is complete.

Fujitsu Enterprise Postgres pgBackRest 18 Installation

Installation is complete.

Starting setup.

Sets up WebAdmin.

Setup is complete.

Installed successfully.
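As the installer sets up WebAdmin with a web server port of 27515, a quick way to confirm that something is actually listening there is to look at the output of `ss -ltn`. This is a sketch: `port_is_listening` is a hypothetical helper that reads `ss -ltn` output from stdin and assumes the local address is in the fourth column, which is the layout when only TCP listening sockets are requested.

```shell
#!/bin/sh
# Succeed if "ss -ltn" output on stdin shows a listening socket on the given port.
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            # Local address is the 4th column, e.g. 0.0.0.0:27515 or [::]:27515;
            # the port is whatever follows the last colon.
            n = split($4, parts, ":")
            if (parts[n] == port) found = 1
        }
        END { exit !found }
    '
}

# Usage: ss -ltn | port_is_listening 27515 && echo "WebAdmin web server is listening"
```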

All the files have been installed under “/opt”, grouped by component:

[root@rhel9-latest ~]$ ls -l /opt/

total 4

dr-xr-x---. 5 root root 59 Jan 26 09:41 FJSVcir

drwxr-xr-x. 3 root root 4096 Jan 26 09:42 FJSVqstl

drwxr-xr-x. 14 root root 155 Nov 21 08:57 fsepv18client64

drwxr-xr-x. 7 root root 63 Jan 26 09:42 fsepv18pgbackrest

drwxr-xr-x. 9 root root 91 Jan 26 09:42 fsepv18pgpool-II

drwxr-xr-x. 15 root root 162 Oct 27 05:57 fsepv18server64

drwxr-xr-x. 13 root root 137 Jan 26 09:42 fsepv18webadmin
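The directory names follow a visible pattern (`fsepv<version><component>`). Assuming that pattern holds, a hypothetical helper like the one below can list the installed components together with their major version, for example for an inventory script:

```shell
#!/bin/sh
# List installed Fujitsu Enterprise Postgres components as "<version> <component>",
# assuming directory names like fsepv18server64 or fsepv18pgpool-II.
list_fsep_components() {
    local prefix="${1:-/opt}" dir name version
    for dir in "$prefix"/fsepv*; do
        [ -d "$dir" ] || continue      # also skips the literal pattern if nothing matched
        name=${dir##*/}                # fsepv18server64
        name=${name#fsepv}             # 18server64
        version=${name%%[!0-9]*}       # 18
        printf '%s %s\n' "$version" "${name#"$version"}"
    done
}

# Usage: list_fsep_components /opt
```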

What follows is the installation of our DMK, which makes handling the environment a bit easier. Just ignore it (or ask in the comments if you’re interested):

[postgres@rhel9-latest ~]$ sudo mkdir -p /opt/local

[postgres@rhel9-latest ~]$ sudo chown postgres:postgres /opt/local/

[postgres@rhel9-latest ~]$ cd /opt/local/

[postgres@rhel9-latest local]$ unzip /home/postgres/dmk_postgres-2.4.1.zip

[postgres@rhel9-latest local]$ cat /etc/pgtab

fe18:/opt/fsepv18server64/bin/:dummy:9999:D

[postgres@rhel9-latest local]$ sudo chown postgres:postgres /etc/pgtab

[postgres@rhel9-latest local]$ cp dmk/etc/dmk.conf.unix dmk/etc/dmk.conf

[postgres@rhel9-latest local]$ dmk/bin/dmk.bash

[postgres@rhel9-latest local]$ cat dmk/templates/profile/dmk.postgres.profile >> ~/.bash_profile

[postgres@rhel9-latest local]$ exit

logout

[root@rhel9-latest ~]$ su - postgres

Last login: Mon Jan 26 09:51:49 CET 2026 from 192.168.122.1 on pts/1

PostgreSQL Clusters up and running on this host:

----------------------------------------------------------------------------------------------

PostgreSQL Clusters NOT running on this host:

----------------------------------------------------------------------------------------------

With that in place we do not need to worry about PATH and other environment settings, as they are set up correctly, so we can directly check which version of PostgreSQL we are dealing with:

postgres@rhel9-latest:/home/postgres/ [fe18] which initdb

/opt/fsepv18server64/bin/initdb

postgres@rhel9-latest:/home/postgres/ [fe18] initdb --version

initdb (PostgreSQL) 18.0
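In scripts it can be handy to derive the major version from this output, for example to build the matching `/opt/fsepv<major>server64` path. A minimal sketch, assuming the version is the last word of the `initdb --version` output as shown above; `pg_major_version` is a hypothetical name:

```shell
#!/bin/sh
# Print the major version contained in "initdb --version" output,
# e.g. "initdb (PostgreSQL) 18.0" -> "18".
pg_major_version() {
    local v
    v=${1##* }                 # last word: "18.0"
    printf '%s\n' "${v%%.*}"   # drop everything from the first dot: "18"
}

# Usage: major=$(pg_major_version "$(initdb --version)")
#        ls -d "/opt/fsepv${major}server64"
```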

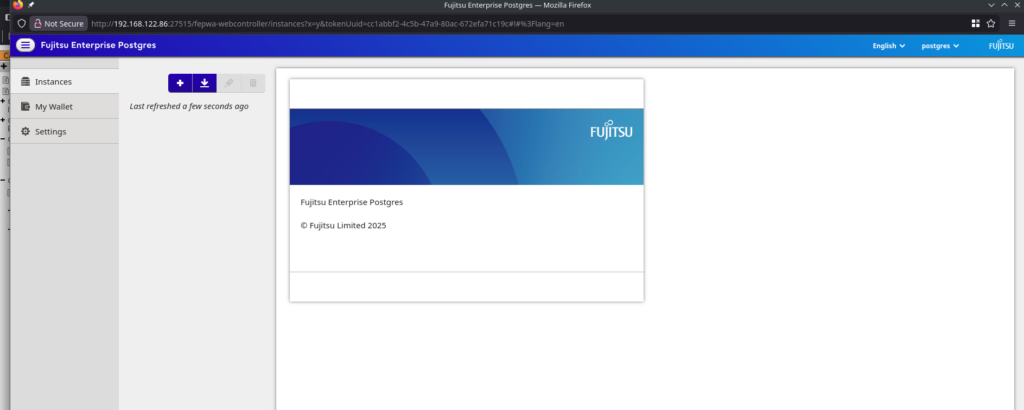

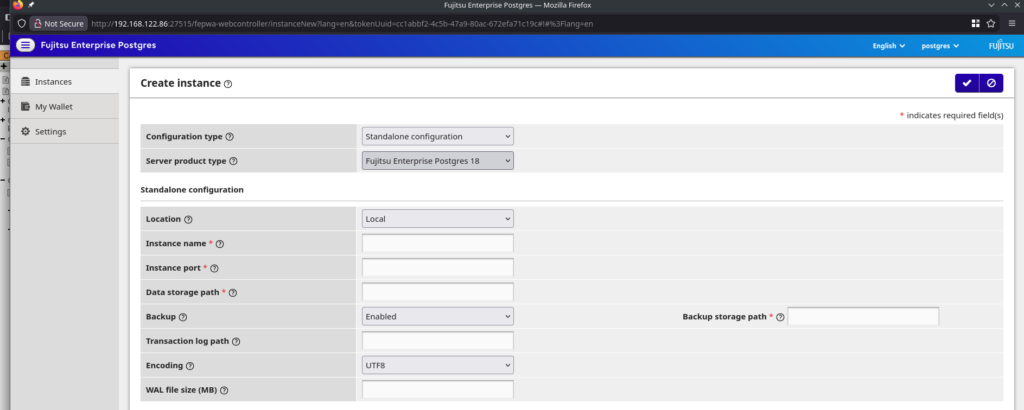

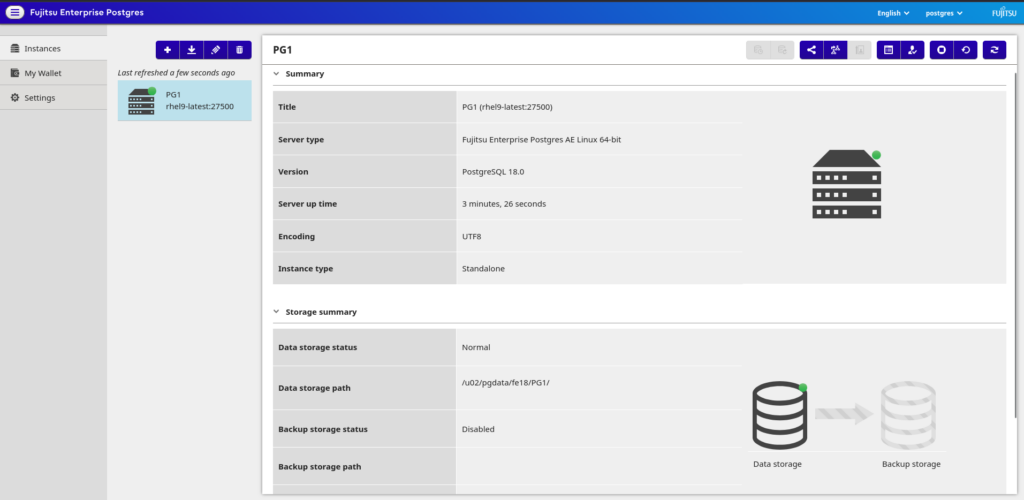

Except for the installation directory there does not seem to be a special Fujitsu branding and we’re on PostgreSQL 18.0. The preferred method of creating a new PostgreSQL instance seems to be to use WebAdmin which was installed and started during the installation:

We could go this way, but as we want to see what happens in the background, we are going to use plain initdb. The switches of this version of initdb are mostly the same as in vanilla PostgreSQL, except for “--coordinator”, “--coord_conninfo”, and “--datanode”:

postgres@rhel9-latest:/home/postgres/ [fe18] initdb --help

initdb initializes a PostgreSQL database cluster.

Usage:

initdb [OPTION]... [DATADIR]

Options:

-A, --auth=METHOD default authentication method for local connections

--auth-host=METHOD default authentication method for local TCP/IP connections

--auth-local=METHOD default authentication method for local-socket connections

--coordinator create an instance of the coordinator <- XXXXXXXXXXXXXXXXXXXXXXXXX

--coord_conninfo connection parameters to the coordinator <- XXXXXXXXXXXXXXXXXXXXXXXXX

--datanode create an instance of the datanode <- XXXXXXXXXXXXXXXXXXXXXXXXX

[-D, --pgdata=]DATADIR location for this database cluster

-E, --encoding=ENCODING set default encoding for new databases

-g, --allow-group-access allow group read/execute on data directory

--icu-locale=LOCALE set ICU locale ID for new databases

--icu-rules=RULES set additional ICU collation rules for new databases

-k, --data-checksums use data page checksums

--locale=LOCALE set default locale for new databases

--lc-collate=, --lc-ctype=, --lc-messages=LOCALE

--lc-monetary=, --lc-numeric=, --lc-time=LOCALE

set default locale in the respective category for

new databases (default taken from environment)

--no-locale equivalent to --locale=C

--builtin-locale=LOCALE

set builtin locale name for new databases

--locale-provider={builtin|libc|icu}

set default locale provider for new databases

--no-data-checksums do not use data page checksums

--pwfile=FILE read password for the new superuser from file

-T, --text-search-config=CFG

default text search configuration

-U, --username=NAME database superuser name

-W, --pwprompt prompt for a password for the new superuser

-X, --waldir=WALDIR location for the write-ahead log directory

--wal-segsize=SIZE size of WAL segments, in megabytes

Less commonly used options:

-c, --set NAME=VALUE override default setting for server parameter

-d, --debug generate lots of debugging output

--discard-caches set debug_discard_caches=1

-L DIRECTORY where to find the input files

-n, --no-clean do not clean up after errors

-N, --no-sync do not wait for changes to be written safely to disk

--no-sync-data-files do not sync files within database directories

--no-instructions do not print instructions for next steps

-s, --show show internal settings, then exit

--sync-method=METHOD set method for syncing files to disk

-S, --sync-only only sync database files to disk, then exit

Other options:

-V, --version output version information, then exit

-?, --help show this help, then exit

If the data directory is not specified, the environment variable PGDATA

is used.

Report bugs to <pgsql-bugs@lists.postgresql.org>.

PostgreSQL home page: <https://www.postgresql.org/>

(It is not clear whether bugs in this version of initdb should really be reported to the official PostgreSQL mailing list, but that is another topic.)
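If a provisioning script needs to tell a Fujitsu build of initdb apart from a vanilla one, checking the help output for these extra switches is one option. A sketch only: `fsep_initdb_extras` is a hypothetical helper, and the switch names are taken from the help text above.

```shell
#!/bin/sh
# Print which Fujitsu-specific initdb switches appear in help text read from stdin.
fsep_initdb_extras() {
    local help opt
    help=$(cat)
    for opt in --coordinator --coord_conninfo --datanode; do
        # The switch must start an option line and be followed by whitespace
        # or the end of the line, so a longer switch sharing the prefix is not counted.
        if printf '%s\n' "$help" | grep -Eq -- "^[[:space:]]*${opt}([[:space:]]|\$)"; then
            printf '%s\n' "$opt"
        fi
    done
}

# Usage: initdb --help | fsep_initdb_extras
```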

Initializing a new instance is no different from vanilla PostgreSQL:

postgres@rhel9-latest:/home/postgres/ [fe18] initdb --pgdata=/u02/pgdata/fe18/PG1 --lc-collate="C" --lc-ctype="C" --encoding=UTF8

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with this locale configuration:

locale provider: libc

LC_COLLATE: C

LC_CTYPE: C

LC_MESSAGES: en_US.UTF-8

LC_MONETARY: en_US.UTF-8

LC_NUMERIC: en_US.UTF-8

LC_TIME: en_US.UTF-8

The default text search configuration will be set to "english".

Data page checksums are enabled.

creating directory /u02/pgdata/fe18/PG1 ... ok

creating subdirectories ... ok

selecting dynamic shared memory implementation ... posix

selecting default "max_connections" ... 100

selecting default "shared_buffers" ... 128MB

selecting default time zone ... Europe/Berlin

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

initdb: warning: enabling "trust" authentication for local connections

initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

pg_ctl -D /u02/pgdata/fe18/PG1 -l logfile start
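Note the warning about “trust” authentication above. Before the instance is used by anyone else, it makes sense to review which pg_hba.conf entries use that method. The sketch below (hypothetical helper `find_trust_entries`, assuming the standard pg_hba.conf layout where the method is the last field that is not a `name=value` option) prints those entries with their line numbers:

```shell
#!/bin/sh
# Print the pg_hba.conf entries (with line numbers) whose auth method is "trust".
find_trust_entries() {
    awk '
        {
            line = $0
            sub(/#.*/, "")        # strip whole-line and trailing comments; fields are re-split
        }
        NF == 0 { next }          # nothing left on this line
        {
            # The method is the last field that is not a name=value option.
            m = NF
            while (m > 0 && index($m, "=") > 0) m--
            if (m > 0 && $m == "trust") print FNR ": " line
        }
    ' "$1"
}

# Usage: find_trust_entries /u02/pgdata/fe18/PG1/pg_hba.conf
```

Alternatively, as the hint suggests, initdb can be run with `--auth-local` and `--auth-host` (for instance `peer` and `scram-sha-256`) so that no trust entries are created in the first place.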

As we want the instance to start automatically when the operating system boots, let’s use the systemd service template provided by Fujitsu to integrate it with systemd:

## Template

postgres@rhel9-latest:/home/postgres/ [fe18] cat /opt/fsepv18server64/share/fsepsvoi.service.sample

# Copyright FUJITSU LIMITED 2025

[Unit]

Description=FUJITSU Enterprise Postgres <inst1>

Requires=network-online.target

After=network.target network-online.target

[Service]

ExecStart=/bin/bash -c '/opt/fsepv<x>server64/bin/pgx_symstd start /opt/fsepv<x>server64 /database/inst1'

ExecStop=/bin/bash -c '/opt/fsepv<x>server64/bin/pgx_symstd stop /opt/fsepv<x>server64 /database/inst1'

ExecReload=/bin/bash -c '/opt/fsepv<x>server64/bin/pgx_symstd reload /opt/fsepv<x>server64 /database/inst1'

Type=forking

User=fsepuser

Group=fsepuser

[Install]

WantedBy=multi-user.target

## Adapted

postgres@rhel9-latest:/home/postgres/ [fe18] sudo cat /etc/systemd/system/fe-postgres-1.service

# Copyright FUJITSU LIMITED 2025

[Unit]

Description=FUJITSU Enterprise Postgres PG1

Requires=network-online.target

After=network.target network-online.target

[Service]

Environment="PATH=/opt/fsepv18server64/bin/:$PATH"

ExecStart=/bin/bash -c '/opt/fsepv18server64/bin/pgx_symstd start /opt/fsepv18server64 /u02/pgdata/fe18/PG1/'

ExecStop=/bin/bash -c '/opt/fsepv18server64/bin/pgx_symstd stop /opt/fsepv18server64 /u02/pgdata/fe18/PG1/'

ExecReload=/bin/bash -c '/opt/fsepv18server64/bin/pgx_symstd reload /opt/fsepv18server64 /u02/pgdata/fe18/PG1/'

Type=forking

User=postgres

Group=postgres

[Install]

WantedBy=multi-user.target
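Instead of editing the sample by hand, the placeholders can be filled in with sed. This is a minimal sketch with a hypothetical function name, assuming GNU sed (for the one-line `a` command) and a data directory that contains no “|”. One difference from the adapted file above: systemd does not expand variables such as `$PATH` inside `Environment=`, so the sketch writes out a complete PATH instead of appending to the existing one.

```shell
#!/bin/sh
# Render a systemd unit from fsepsvoi.service.sample by replacing the
# placeholders seen in the template (<x>, <inst1>, /database/inst1, fsepuser).
render_fsep_unit() {
    local template="$1" version="$2" instance="$3" datadir="$4" user="$5"
    sed \
        -e "s|/database/inst1|$datadir|g" \
        -e "s|<x>|$version|g" \
        -e "s|<inst1>|$instance|g" \
        -e "s|^User=fsepuser\$|User=$user|" \
        -e "s|^Group=fsepuser\$|Group=$user|" \
        -e "/^\[Service\]\$/a Environment=\"PATH=/opt/fsepv${version}server64/bin:/usr/local/bin:/usr/bin:/bin\"" \
        "$template"
}

# Usage:
#   render_fsep_unit /opt/fsepv18server64/share/fsepsvoi.service.sample \
#       18 PG1 /u02/pgdata/fe18/PG1 postgres | sudo tee /etc/systemd/system/fe-postgres-1.service
#   sudo systemctl daemon-reload
```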

“pgx_symstd” is a simple wrapper around pg_ctl; the reason for this extra layer is not obvious. Enable and start the service:

postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl enable fe-postgres-1

Created symlink /etc/systemd/system/multi-user.target.wants/fe-postgres-1.service → /etc/systemd/system/fe-postgres-1.service.

11:22:11 postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl daemon-reload

11:22:13 postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl start fe-postgres-1

11:22:20 postgres@rhel9-latest:/home/postgres/ [fe18] sudo systemctl status fe-postgres-1