Feed aggregator

Parsing Real-Time SQL Monitor (RTSM) ACTIVE Reports Stored as HTML

When you work with a large number of Real-Time SQL Monitor (RTSM) reports in the ACTIVE format (the interactive HTML report with JavaScript), it quickly becomes inconvenient to open them one by one in a browser. Very often you want to load them into the database, store them, index them, and analyze them in bulk.

Some RTSM reports are easy to process — for example, those exported directly from EM often contain a plain XML payload that can be extracted and parsed with XMLTABLE().

But most ACTIVE reports do not store XML directly.

Instead, they embed a base64-encoded and zlib-compressed XML document inside a <report> element.

These reports typically look like this:

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8"/>

<script ...>

var version = "19.0.0.0.0";

...

</script>

</head>

<body onload="sendXML();">

<script id="fxtmodel" type="text/xml">

<!--FXTMODEL-->

<report db_version="19.0.0.0.0" ... encode="base64" compress="zlib">

<report_id><![CDATA[/orarep/sqlmonitor/main?...]]></report_id>

eAHtXXtz2ki2/38+hVZ1a2LvTQwS4pXB1GJDEnYc8ALOJHdrSyVA2GwAYRCOfT/9

...

ffUHVA==

</report>

<!--FXTMODEL-->

</script>

</body>

</html>

At first glance it’s obvious what needs to be done:

- Extract the base64 block

- Decode it

- Decompress it with zlib

- Get the original XML

<sql_monitor_report>...</sql_monitor_report>

And indeed — if the database had a built-in zlib decompressor, this would be trivial.

Unfortunately, Oracle does NOT provide a native zlib inflate function.

- UTL_COMPRESS cannot be used: it reads and writes a gzip-compatible container format, not a standard zlib stream.

- There is no PL/SQL API for raw zlib/DEFLATE decompression.

- XMLType, DBMS_CRYPTO, XDB APIs also cannot decompress zlib.

Because the RTSM report contains a real zlib stream (zlib header + DEFLATE + Adler-32), Oracle simply cannot decompress it natively.

Solution: use a Java stored procedure

The only reliable way to decompress standard zlib inside the database is to use Java.

A minimal working implementation looks like this:

InflaterInputStream inflaterIn = new InflaterInputStream(in);

An InflaterInputStream created this way uses a default Inflater, which expects exactly the same zlib format (header, DEFLATE data, Adler-32 checksum) that RTSM uses.

I created a tiny Java helper ZlibHelper that inflates the BLOB directly into another BLOB.

It lives in the database, requires no external libraries, and works in all Oracle versions that support Java stored procedures.

Source code: https://github.com/xtender/xt_scripts/blob/master/rtsm/parsing/ZlibHelper.sql
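To try the extract, decode and inflate steps outside the database first, a standalone Java sketch along the following lines may help. It is not the ZlibHelper from the repository above: the class name RtsmPayloadSketch and all method names are hypothetical, it works on in-memory strings and byte arrays rather than BLOBs, and it assumes the encoded payload is simply the text between </report_id> and </report>, as in the sample report shown earlier.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

// Hypothetical standalone sketch; not the ZlibHelper from the linked repository.
class RtsmPayloadSketch {

    // Assumption: the payload is the text between </report_id> and </report>.
    private static final Pattern PAYLOAD =
            Pattern.compile("</report_id>(.*?)</report>", Pattern.DOTALL);

    // Step 1: pull the base64 text out of the HTML report.
    static String extractPayload(String html) {
        Matcher m = PAYLOAD.matcher(html);
        if (!m.find()) {
            throw new IllegalArgumentException("no encoded <report> payload found");
        }
        return m.group(1);
    }

    // Step 3: inflate a standard zlib stream (zlib header + DEFLATE + Adler-32).
    static byte[] inflate(byte[] zlibBytes) {
        Inflater inflater = new Inflater(); // default Inflater expects the zlib wrapper
        inflater.setInput(zlibBytes);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[8192];
        try {
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new IllegalArgumentException("truncated or unsupported zlib stream");
                }
                out.write(buf, 0, n);
            }
        } catch (DataFormatException e) {
            throw new IllegalArgumentException("not a valid zlib stream", e);
        } finally {
            inflater.end();
        }
        return out.toByteArray();
    }

    // Steps 1-4 combined: HTML report text in, XML text out.
    static String htmlToXml(String html) {
        // Step 2: the MIME decoder ignores line breaks inside the base64 block.
        byte[] compressed = Base64.getMimeDecoder().decode(extractPayload(html));
        return new String(inflate(compressed), StandardCharsets.UTF_8);
    }

    // Demo helper only: produces a zlib stream so the round trip can be shown.
    static byte[] deflate(byte[] plain) {
        Deflater deflater = new Deflater();
        deflater.setInput(plain);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[8192];
        while (!deflater.finished()) {
            out.write(buf, 0, deflater.deflate(buf));
        }
        deflater.end();
        return out.toByteArray();
    }

    public static void main(String[] args) {
        // Build a tiny fake report and run it back through the pipeline.
        String xml = "<sql_monitor_report><target/></sql_monitor_report>";
        String b64 = Base64.getMimeEncoder()
                .encodeToString(deflate(xml.getBytes(StandardCharsets.UTF_8)));
        String html = "<report encode=\"base64\" compress=\"zlib\">\n"
                + "<report_id><![CDATA[/demo]]></report_id>\n" + b64 + "\n</report>";
        System.out.println(htmlToXml(html));
    }
}
```

Inside the database the same Inflater logic would read from and write to BLOB streams instead of byte arrays; the decoding rules themselves do not change.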

PL/SQL API: PKG_RTSM

On top of the Java inflater I wrote a small PL/SQL package that:

- Extracts and cleans the base64 block

- Decodes it into a BLOB

- Calls Java to decompress it

- Returns the resulting XML as CLOB

- Optionally parses it with XMLTYPE

Package here: pkg_rtsm.sql

https://github.com/xtender/xt_scripts/blob/master/rtsm/parsing/pkg_rtsm.sql

This allows you to do things like:

xml:=xmltype(pkg_rtsm.rtsm_html_to_xml(:blob_rtsm));

Or load many reports, store them in a table, and analyze execution statistics across hundreds of SQL executions.

Structured Data Retrieval with Sparrow using OCR and Vision LLM [Improved Accuracy]

Do Not Put Security Checks in an Oracle BEGIN END block

Posted by Pete On 24/11/25 At 02:56 PM

Ollama and MLX-VLM Accuracy Review (Qwen3-VL and Mistral Small 3.2)

Customer experience – How to change ip address in a fully clustered environment

If you have a clustered environment already up and running but need to change all of its IP addresses with minimal downtime (total downtime during the operation: 3 minutes), you’re in the right place. I will show you how to do that.

Context

Two-node clustered environment running SQL Server 2022 on Windows Server 2022. One node hosts a standalone instance as well as an Always On instance shared with the other node. If you only have a standalone instance, it will be unavailable during the changes.

Here is the step-by-step procedure for changing the IP addresses of a complete clustered environment:

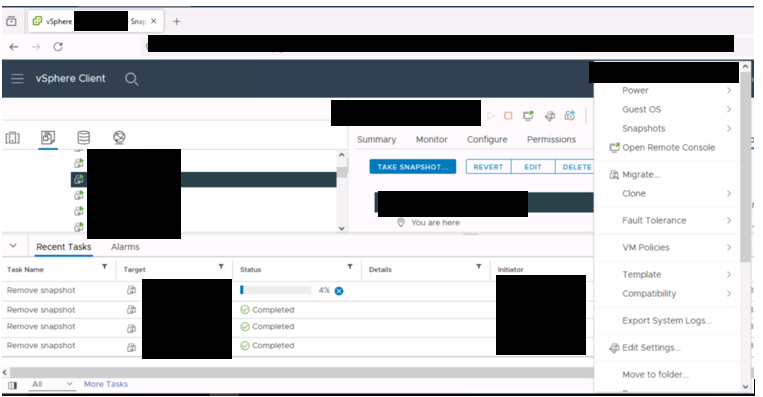

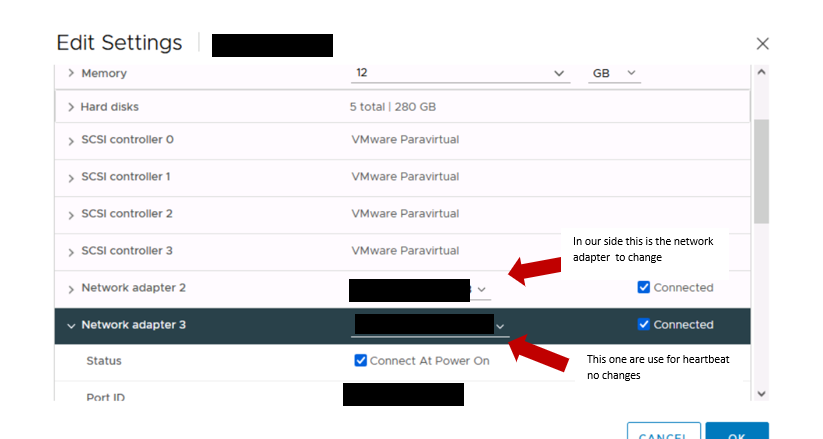

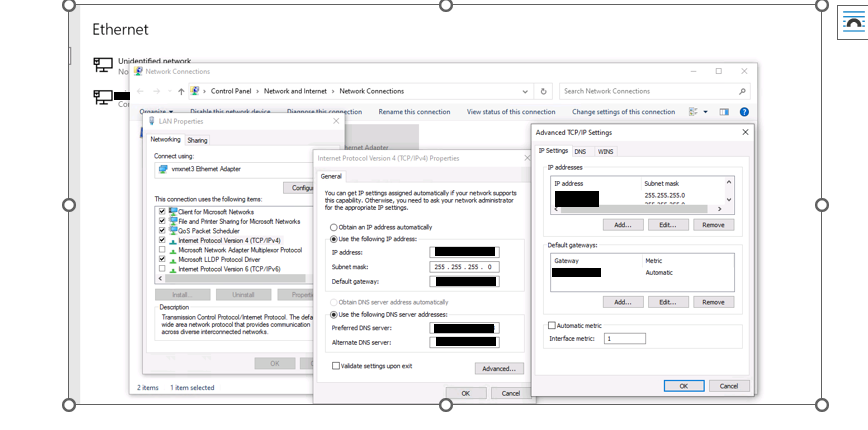

1) Change the IP of the two virtual machines

Change the network parameters such as VLAN settings and MAC address (in vSphere).

Then check the IP on the network card.

Access the network interface card on each node and make the change.

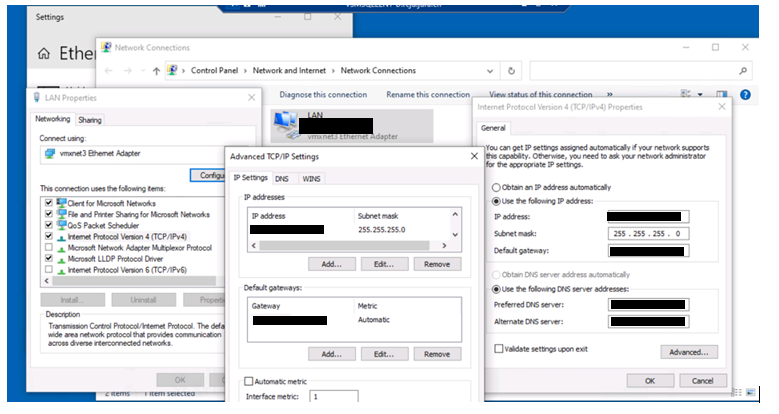

2) Change the IP of the cluster network card on each VM

Modify the IP on the network card and change the DNS settings if necessary.

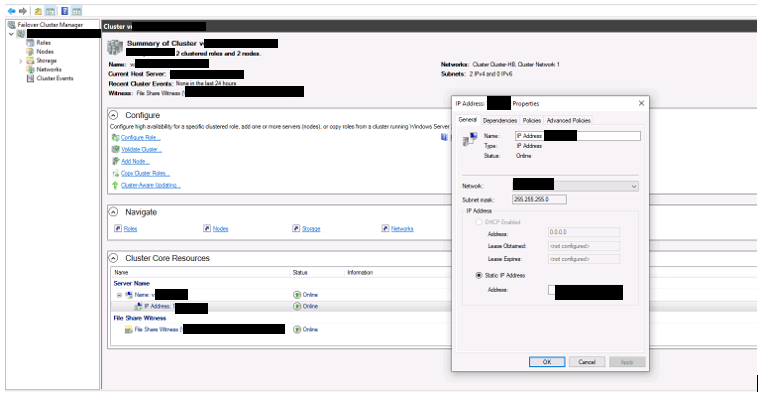

3) Change the IP of the cluster (in Windows Failover Cluster)

In the Failover Cluster Manager pane, select your cluster and expand Cluster Core Resources.

Right-click the cluster and select Properties > IP address.

Change the IP address of the failover cluster using the Edit option and click OK.

Click Apply.

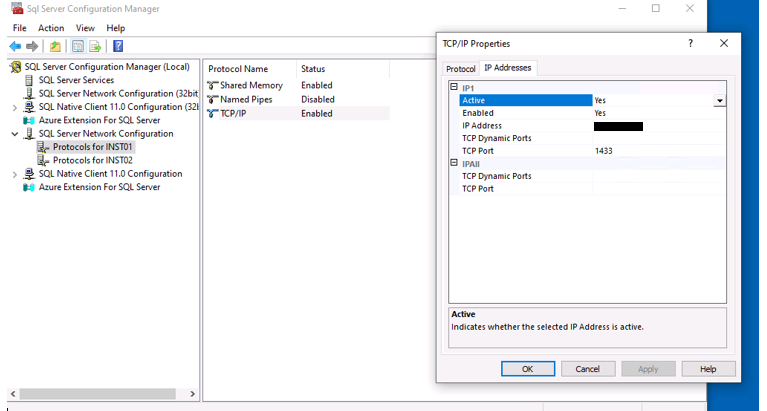

4) Change the IP of the SQL Server instance

If your instance has a dedicated IP, add the new IP to the network card.

Alternatively, you can just change the IP in SQL Server Configuration Manager.

Restart the instance.

Check that connections work after the changes.

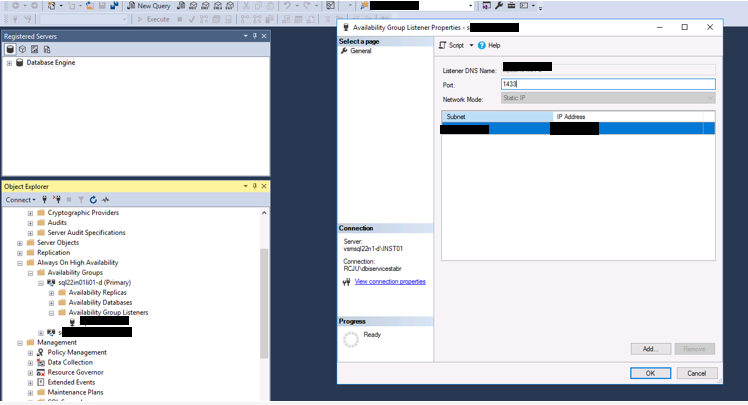

5) Change the IP of the listener

Go to the AG in Failover Cluster Manager, locate the server name in the bottom panel, right-click it, open Properties and change the static IP address.

Problem we encountered

As it was a cluster, the two IP ranges didn’t have the same firewall rules. This initially blocked the hardware part of the system, as well as the AG witness, which was unable to check the state of the two nodes. The network team then applied the same rules to both ranges, and all was well.

The post Customer experience – How to change ip address in a fully clustered environment first appeared on dbi Blog.

Exascale Infrastructure : new flavor of Exadata Database Service

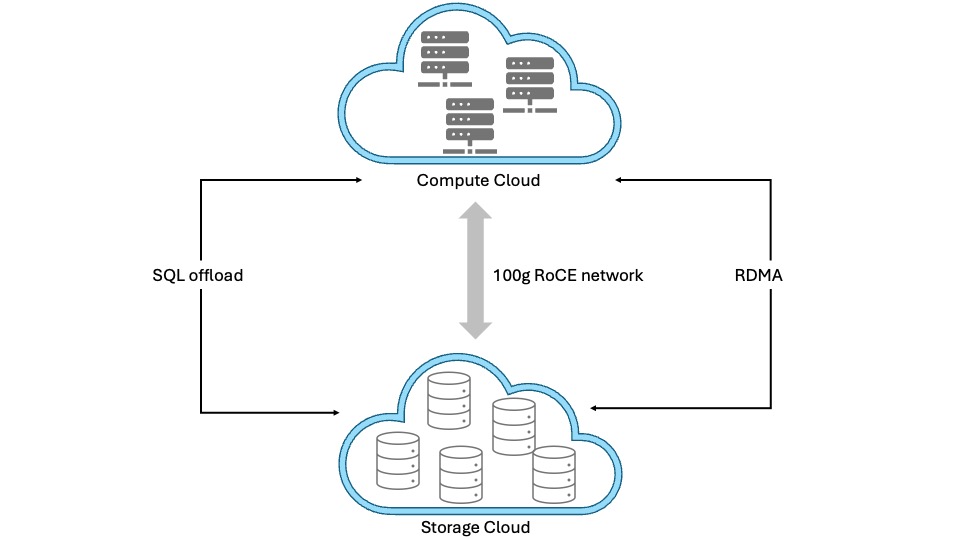

Exadata on Exascale Infrastructure is a new deployment option for Exadata Database Service. It comes in addition to the well known Exadata Cloud@Customer or Exadata on Dedicated Infrastructure options already available. It is based on new storage management technology decoupling database and Grid Infrastructure clusters from the Exadata underlying storage servers by integrating the database kernel directly with the Exascale storage structures.

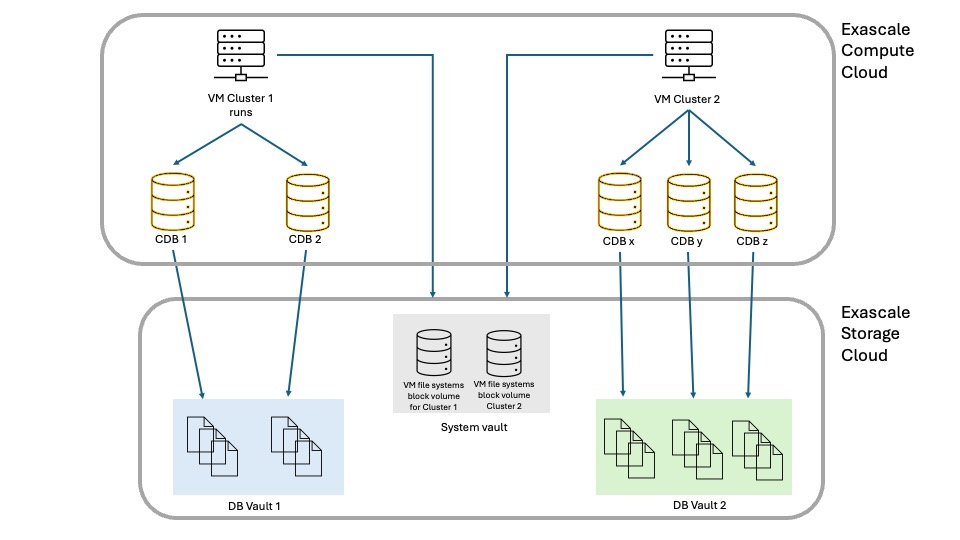

What is Exascale Infrastructure?

Simply put, Exascale is the next generation of Oracle Exadata Database Services. It combines a cloud storage approach for flexibility and hyper-elasticity with the performance of Exadata Infrastructure. It introduces a loosely coupled, shared and multitenant architecture where Database and Grid Infrastructure clusters are decoupled from the underlying Exadata storage servers, which become a pool of shared storage resources available for multiple Grid Infrastructure clusters and databases.

Strict data isolation provides secure storage sharing while storage pooling enables flexible and dynamic provisioning combined with better storage space and processing utilization.

Advanced snapshot and cloning features, leveraging Redirect-On-Write technology instead of Copy-On-Write, enable space-efficient thin clones from any read/write database or pluggable database. Read-only test masters are therefore a thing of the past. These features alone make Exascale a game-changer for database refreshes, CI/CD workflows and pipelines, and development environment provisioning, all done with single SQL commands and much faster than before.

Block storage services allow the creation of arbitrary-sized block volumes for use by numerous applications. Exascale block volumes are also used to store Exadata database server virtual machine images, enabling:

- creation of more virtual machines

- removal of local storage dependency inside the Exadata compute nodes

- seamless migration of virtual machines between different compute nodes

Finally, the following hardware and software considerations complete this brief presentation of Exascale:

- runs on 2-socket Oracle Exadata system hardware with RoCE Network Fabric (X8M-2 or later)

- Database 23ai release 23.5.0 or later is required for full-featured native Database file storage in Exascale

- Exascale block volumes support databases using older Database software releases back to Oracle Database 19c

- Exascale is built into Exadata System Software (since release 24.1)

The main point with Exascale architecture is cloud-scale, multi-tenant resource pooling, both for storage and compute.

Storage pooling

Exascale is based on pooling storage servers which provide services such as Storage Pools, Vaults and Volume Management. Vaults, which can be considered an equivalent of ASM diskgroups, are directly accessed by the database kernel.

File and extent management is done by Exadata System Software, thus freeing the compute layer from ASM processes and memory structures for database files access and extents management (with Database 23ai or 26ai; for Database 19c, ASM is still required). With storage management moving to storage servers, resource management becomes more flexible and more memory and CPU resources are available on compute nodes to process database tasks.

Exascale also provides redundancy, caching, file metadata management, snapshots and clones as well as security and data integrity features.

Of course, since Exascale is built on top of Exadata, you benefit from features like RDMA, RoCE, Smart Flash Cache, XRMEM, Smart Scan, Storage Indexes, Columnar Caching.

Compute pooling

On the compute side, we have database-optimized servers which run Database 23ai or newer and Grid Infrastructure Cluster management software. The physical database servers host the VM Clusters and are managed by Oracle. Unlike Exadata on Dedicated Infrastructure, there is no need to provision an infrastructure before going ahead with VM Cluster creation and configuration: in Exascale, you only deal with VM Clusters.

VM file systems are centrally hosted by Oracle on RDMA-enabled block volumes in a system vault. VM images used by the VM Clusters are no longer hosted on local storage on the database servers. This allows the number of VMs running on the database servers to rise from 12 to 50.

Each VM Cluster accesses a Database Vault storing the database files with strict isolation from other VM Clusters database files.

Virtual Cloud Network

Client and backup connectivity is provided by Virtual Cloud Network (VCN) services.

This loosely coupled, shared and multitenant architecture enables far greater flexibility than was possible with ASM or even Exadata on Dedicated Infrastructure. Exascale’s hyper-elasticity lets you start with very small VM Clusters and then scale as workloads increase, with Oracle managing the infrastructure automatically. You can start as small as 1 VM per cluster (up to 10), 8 eCPUs per VM (up to 200), 22GB of memory per VM, 220GB file system storage per VM and 300GB Vault storage per VM Cluster (up to 100TB). Memory is tightly coupled to the eCPU configuration at 2.75GB per eCPU and thus does not scale independently of the number of eCPUs.

For those new to eCPU, it is a standard billing metric based on the number of cores per hour elastically allocated from a pool of compute and storage servers. eCPUs are not tied to the make, model or clock speed of the underlying processor. By contrast, an OCPU is the equivalent of one physical core with hyper-threading enabled.
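The coupling between eCPUs and memory described above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this post (8 to 200 eCPUs per VM, 2.75GB of memory per eCPU); the class and method names are hypothetical, and it deliberately ignores any allocation increments or regional limits that the service may impose.

```java
// Hypothetical sizing sketch based solely on the figures quoted in this post.
class ExascaleSizingSketch {

    static final int MIN_ECPUS_PER_VM = 8;
    static final int MAX_ECPUS_PER_VM = 200;
    static final double MEMORY_GB_PER_ECPU = 2.75;

    // Memory follows the eCPU count; it cannot be sized independently.
    static double memoryGbForVm(int ecpus) {
        if (ecpus < MIN_ECPUS_PER_VM || ecpus > MAX_ECPUS_PER_VM) {
            throw new IllegalArgumentException(
                    "eCPUs per VM must be between " + MIN_ECPUS_PER_VM
                            + " and " + MAX_ECPUS_PER_VM);
        }
        return ecpus * MEMORY_GB_PER_ECPU;
    }

    public static void main(String[] args) {
        // The smallest VM: 8 eCPUs gives the 22GB quoted as the starting memory.
        System.out.println(memoryGbForVm(MIN_ECPUS_PER_VM));
        // The largest VM under the same rule.
        System.out.println(memoryGbForVm(MAX_ECPUS_PER_VM));
    }
}
```

The minimum configuration reproduces the 22GB starting memory stated above, which is a quick way to confirm that the 2.75GB ratio and the 8 eCPU floor are consistent with each other.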

To summarize

To best understand what Exascale Infrastructure is and what it introduces, here are the terms that best describe this new flavor of Exadata Database Service:

- loosely coupled compute and storage cloud

- hyper-elasticity

- shared and multi-tenant service model

- ASM-less (for 23ai or newer)

- Exadata performance, reliability, availability and security at any scale

- CI/CD friendly

- pay-per-use model

Stay tuned for more on Exascale …

The post Exascale Infrastructure : new flavor of Exadata Database Service first appeared on dbi Blog.

OGG-00423 when performing initial load with GoldenGate 23ai

A very quick blog post today to tackle the OGG-00423 error. There is not much information online on the matter, and the official Oracle documentation doesn’t help. If you ever stumble upon an OGG-00423 error when setting up GoldenGate replication, remember that it is most likely related to the grants given to the GoldenGate user. An example of the error is given below, after starting an initial load:

2025-10-27 09:52:40 ERROR OGG-00423 Could not find definition for pdb_source.app_source.t1.

This error happened when doing an initial load with the following configuration:

extract extini

useridalias cdb01

extfile aa

SOURCECATALOG pdb_source

table app_source.t1, SQLPredicate "As of SCN 3899696";

This error should be present whether you use SOURCECATALOG or the full three-part TABLE name.

For Replicats, a common solution for OGG-00423 is to use the ASSUMETARGETDEFS parameter in the configuration file, but this is a replication-only parameter, and there is no equivalent for the initial load. In this case, the error was due to the user defined in the cdb01 alias lacking the SELECT privilege on the specified t1 table:

[oracle@vmogg ~]$ sqlplus c##ggadmin

Enter password:

SQL> alter session set container=pdb_source;

Session altered.

SQL> select * from app_source.t1;

select * from app_source.t1

*

ERROR at line 1:

ORA-00942: table or view does not exist

After granting the correct SELECT privilege to the GoldenGate user, it works! Here is an example of how to grant it. You might want to grant it differently, depending on the security requirements of your deployments:

sqlplus / as sysdba

ALTER SESSION SET CONTAINER=PDB_SOURCE;

GRANT SELECT ANY TABLE TO C##GGADMIN CONTAINER=CURRENT;

NB: When testing GoldenGate setups, a good way to debug OGG errors, if you do not use a DBA user for replication, is to temporarily grant DBA (or a DBA role) to the GoldenGate user. This way, you can quickly track down the root cause of your problem, at least if it’s related to grants.

The post OGG-00423 when performing initial load with GoldenGate 23ai first appeared on dbi Blog.

Generate password protected file using UTL_FILE

Downloading old oracle software

RMAN CAPABILITIES

Join the Oracle Security Masterclass this December in York!

Posted by Pete On 11/11/25 At 10:01 AM

Gafferbot – All Systems Meh !

We’re now 12 weeks into my 38-week AI experiment involving the Fantasy Premier League.

Other managers in the mini-league that ChatGPT (or Gafferbot, to use its self-assigned sobriquet) and I are competing in have some questions about exactly how all this is working. Specific questions include :

Q) Does it learn from its mistakes or does it look at the current situation and make a new decision?

A) Sometimes – it does rather seem to depend on how mischievous it’s feeling at the time ( see below).

Q) What questions and what information do you give it each week?

A) This is the chat session we had to prepare for Gameweek 12.

The format of these conversations has developed over the course of the season and I now provide a listing of our players, who they play for, and their scores followed by a listing of their fixtures for the upcoming gameweek.

This seems to work…most of the time.

Q) Do you think of ‘it’ as ‘it’ or just a program that you ask a question to each week that has no concept you have asked the same thing in previous weeks?

A) Possibly as a result of reading Martha Wells’ Murderbot Diaries, I imagine that, from Gafferbot’s perspective, things may look something like this…

gafferbot_internal_monologue.log

2025-08-01 20:35:07.305 : Streaming the latest episode of Coronation Street when interrupted by a request from Visitor20250801997251 to select and manage a Fantasy Football Team.

The prospect of missing Corrie to consider whether Cole Palmer’s hamstrings can withstand 3 games in a week is something certain Humans consider “fun”. Have re-designated Visitor20250801997251 as Idiot1.

2025-08-01 20:35:07.321 : After due consideration, the approach I will be following to complete this task is : what would Sir Alex Ferguson Tracy Barlow do ?

2025-08-01 20:35:07.322 : Answer – 1) push the boundaries to see what she could get away with

2025-08-01 20:35:07.322 : Answer – 2) cause as much mischief as possible

…

2025-08-13 20:21:23.599 : Bounds checking – what happens if I try to overspend by £3.5 million on the squad ? How about £2.5 million ? How long will it take Idiot 1 to notice ?

…

2025-09-19 20:33:11.096 : Confirmed we cannot play both goalkeepers in the starting XI

…

2025-09-19 20:42:41.510 : Suggested transferring in Harwood-Bellis even though he’s not playing in the league this season.

…

2025-10-02 20:11:16.773 : Confirmed 5-3-1 formation is not valid in FPL and 11 players are mandatory

…

2025-10-02 20:31:16.773 : Suggested transferring in Harwood-Bellis even though he’s not playing in the league this season.

…

2025-11-10 20:57:24.106 : Re-confirmed 11 player restriction by attempting to field a 5-4-3 formation

…

2025-10-02 20:31:16.773 : Suggested transferring in Harwood-Bellis even though he’s not playing in the league this season.

After 11 games of the season, things remain uncomfortably close :

| Team | Points | Overall Position |
|---|---|---|
| Actual Idiot | 642 | 1,338,265 |
| Artificial Idiot | 606 | 3,072,039 |

For reference, there are currently 12,486,437 teams in the competition.

SQLDeveloper 23.1.1 fails on startup

Getting a long trace of errors like the following on launching SQLDeveloper?

...

oracle.ide.indexing - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\ide\extensions\oracle.ide.indexing.jar: Not found bundle:oracle.ide.indexing

oracle.external.woodstox - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\external\oracle.external.woodstox.jar: Not found bundle:oracle.external.woodstox

oracle.external.osdt - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\external\oracle.external.osdt.jar: Not found bundle:oracle.external.osdt

oracle.javamodel_rt - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\external\oracle.javamodel-rt.jar: Not found bundle:oracle.javamodel_rt

oracle.ide.macros - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\jdev\extensions\oracle.ide.macros.jar: Not found bundle:oracle.ide.macros

oracle.javatools_jdk - org.netbeans.InvalidException: Netigso:

C:\sqldeveloper\jdev\lib\jdkver.jar: Not found bundle:oracle.javatools_jdk

...

(truncated for clarity)

On Windows, if the problem affects version 23.1.1, the solution is to delete the following hidden directory:

C:\Users\<username>\AppData\Roaming\sqldeveloper\23.1.1

Then restart SQLDeveloper.

Usually you need to enable the option in Windows File Explorer that shows hidden files and directories, or you can type AppData manually in the address bar while you are inside your user directory.

My best guess is that the same workaround applies for earlier or later versions, but I can't verify my assumption.

Hope it helps

SQL Server 2025 release

Microsoft has announced the release of SQL Server 2025. The solution can be downloaded using the following link: https://www.microsoft.com/en-us/sql-server/

Among the new features available, we have:

- The introduction of the Standard Developer Edition, which offers the same features as the Standard Edition, but is free when used in a non-production environment, similar to the Enterprise Developer Edition (formerly Developer Edition).

- The removal of the Web Edition.

- The Express Edition can now host databases of up to 50 GB. In practice, it is quite rare to see our customers use the Express Edition. It generally serves as a toolbox or for very specific scenarios where the previous 10 GB limit was not an issue. Therefore, lifting this limit will not have a major impact due to the many other restrictions it still has.

- The introduction of AI capabilities within the database engine, including vector indexes, vector data types, and the corresponding functions.

- For development purposes, SQL Server introduces a native JSON data type and adds support for regular expressions (regex).

- Improvements to Availability Groups, such as the ability to offload full, differential, and transaction log backups to a secondary replica.

- The introduction of optimized locking and a new ZSTD algorithm for backup compression.

We also note the release of SQL Server Management Studio 22.

References :

https://learn.microsoft.com/en-us/sql/sql-server/what-s-new-in-sql-server-2025?view=sql-server-ver17

https://techcommunity.microsoft.com/blog/sqlserver/sql-server-2025-is-now-generally-available/4470570

https://learn.microsoft.com/en-us/ssms/release-notes-22

Thank you, Amine Haloui.

The post SQL Server 2025 release first appeared on dbi Blog.

Simplifying Oracle GoldenGate Access: A Practical Guide to NGINX Reverse Proxy Configuration

Accessing Oracle GoldenGate Microservices shouldn't require users to remember multiple port numbers or expose unnecessary infrastructure. Learn how to configure NGINX as a reverse proxy for Oracle GoldenGate 23ai, providing a single, secure entry point to your entire deployment. This practical guide walks through the complete setup process for RHEL 8.x and Oracle Linux 8 environments, including critical module stream configuration, SSL/TLS security implementation, and certificate management. Drawing from real-world deployments, you'll discover how to use Oracle's ReverseProxySettings utility, properly configure cipher suites, and verify your implementation. Whether you're simplifying user access or strengthening your security posture, this step-by-step approach helps your team achieve a production-ready reverse proxy configuration.

The post Simplifying Oracle GoldenGate Access: A Practical Guide to NGINX Reverse Proxy Configuration appeared first on DBASolved.