Yann Neuhaus

What’s New in M-Files 25.3

I’m not a big fan of doing a post for each new release, but I think the last one is a big step towards what M-Files will tend to be in the coming months.

M-Files 25.3, was released to the cloud on March 30th, and is available for download and auto-update since April 2nd. It brings a suite of powerful updates designed to improve document management efficiency and user experience.

Here’s a breakdown of the most notable features, improvements, and fixes.

Admin Workflow State Changes in M-Files Web

System administrators can now override any workflow state directly from the context menu in M-Files Web using the new “Change state (Admin)” option. This allows for greater control and quicker resolution of workflow issues.

Zero-Click Metadata Filling

When users drag and drop new objects into specific views, required metadata fields can now be automatically prefilled without displaying the metadata card. This creates a seamless and efficient upload process.

Object-Based Hierarchies Support

Object-based hierarchies are now available on the metadata card in both M-Files Web and the new Desktop interface, providing more structured data representation.

Enhanced Keyboard Navigation

Improved keyboard shortcuts now allow users to jump quickly to key interface elements like the search bar and tabs, streamlining navigation for power users.

Document Renaming in Web and Desktop

Users can now rename files in M-Files Web and the new Desktop interface via the context menu or the F2 key, making file management more intuitive.

Default gRPC Port Update

The default gRPC port for new vault connections is now set to 443, improving compatibility with standard cloud environments and simplifying firewall configurations.

AutoCAD 2025 Support

The M-Files AutoCAD add-in is now compatible with AutoCAD 2025, ensuring continued integration with the latest CAD workflows.

- Drag-and-Drop Upload Error Resolved: Fixed a bug that caused “Upload session not found” errors during file uploads.

- Automatic Property Filling: Ensured property values now update correctly when source properties are modified.

- Version-Specific Links: Resolved an issue where links pointed to the latest version rather than the correct historical version.

- Anonymous User Permissions: Closed a loophole that allowed anonymous users to create and delete views.

- Theme Display Consistency: Custom themes now persist correctly across multiple vault sessions.

- Office Add-In Fixes: Resolved compatibility issues with merged cells in Excel documents.

- Date & Time Accuracy: Fixed timezone issues that affected Date & Time metadata.

- Metadata Card Configuration: Ensured proper application of workflow settings.

- Annotation Display in Web: Annotations are now correctly tied to their document versions.

- Improved Link Functionality: Object ID-based links now work as expected in the new Desktop client.

M-Files 25.3 introduces thoughtful improvements that empower both administrators and end-users. From seamless metadata handling to improved keyboard accessibility and robust error fixes, this release makes it easier than ever to manage documents effectively.

Stay tuned for more insights and tips on making the most of your M-Files solution with us!

L’article What’s New in M-Files 25.3 est apparu en premier sur dbi Blog.

PostgreSQL 18: Support for asynchronous I/O

This is maybe one the biggest steps forward for PostgreSQL: PostgreSQL 18 will come with support for asynchronous I/O. Traditionally PostgreSQL relies on the operating system to hide the latency of writing to disk, which is done synchronously and can lead to double buffering (PostgreSQL shared buffers and the OS file cache). This is most important for WAL writes, as PostgreSQL must make sure that changes are flushed to disk and needs to wait until it is confirmed.

Before we do some tests let’s see what’s new from a parameter perspective. One of the new parameters is io_method:

postgres=# show io_method;

io_method

-----------

worker

(1 row)

The default is “worker” and the maximum number of worker processes to perform asynchronous is controller by io_workers:

postgres=# show io_workers;

io_workers

------------

3

(1 row)

This can also be seen on the operating system:

postgres=# \! ps aux | grep "io worker" | grep -v grep

postgres 29732 0.0 0.1 224792 7052 ? Ss Apr08 0:00 postgres: pgdev: io worker 1

postgres 29733 0.0 0.2 224792 9884 ? Ss Apr08 0:00 postgres: pgdev: io worker 0

postgres 29734 0.0 0.1 224792 7384 ? Ss Apr08 0:00 postgres: pgdev: io worker 2

The other possible settings for io_method are:

io_uring: Asynchronous I/O using io_uringsync: The behavior before PostgreSQL 18, do synchronous I/O

io_workers only has an effect if io_method is set to “worker”, which is the default configuration.

As usual: What follows are just some basic tests. Test for your own, in your environment with your specific workload to get some meaningful numbers. Especially if you test in a public cloud, be aware that the numbers might not show you the full truth.

We’ll do the tests on an AWS EC2 t3.large instance running Debian 12. The storage volume is gp3 with ext4 (default settings):

postgres@ip-10-0-1-209:~$ grep proc /proc/cpuinfo

processor : 0

processor : 1

postgres@ip-10-0-1-209:~$ free -g

total used free shared buff/cache available

Mem: 7 0 4 0 3 7

Swap: 0 0 0

postgres@ip-10-0-1-209:~$ mount | grep 18

/dev/nvme1n1 on /u02/pgdata/18 type ext4 (rw,relatime)

PostgreSQL was initialized with the default settings:

postgres@ip-10-0-1-209:~$ /u01/app/postgres/product/18/db_0/bin/initdb --pgdata=/u02/pgdata/18/data/

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "C.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are enabled.

fixing permissions on existing directory /u02/pgdata/18/data ... ok

creating subdirectories ... ok

selecting dynamic shared memory implementation ... posix

selecting default "max_connections" ... 100

selecting default "autovacuum_worker_slots" ... 16

selecting default "shared_buffers" ... 128MB

selecting default time zone ... Etc/UTC

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

initdb: warning: enabling "trust" authentication for local connections

initdb: hint: You can change this by editing pg_hba.conf or using the option -A, or --auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

/u01/app/postgres/product/18/db_0/bin/pg_ctl -D /u02/pgdata/18/data/ -l logfile start

The following settings have been changed:

postgres@ip-10-0-1-209:~$ echo "shared_buffers='2GB'" >> /u02/pgdata/18/data/postgresql.auto.conf

postgres@ip-10-0-1-209:~$ echo "checkpoint_timeout='20min'" >> /u02/pgdata/18/data/postgresql.auto.conf

postgres@ip-10-0-1-209:~$ echo "random_page_cost=1.1" >> /u02/pgdata/18/data/postgresql.auto.conf

postgres@ip-10-0-1-209:~$ echo "max_wal_size='8GB'" >> /u02/pgdata/18/data/postgresql.auto.conf

postgres@ip-10-0-1-209:~$ /u01/app/postgres/product/18/db_0/bin/pg_ctl --pgdata=/u02/pgdata/18/data/ -l /dev/null start

postgres@ip-10-0-1-209:~$ /u01/app/postgres/product/18/db_0/bin/psql -c "select version()"

version

---------------------------------------------------------------------------------------

PostgreSQL 18devel dbi services build on x86_64-linux, compiled by gcc-12.2.0, 64-bit

(1 row)

postgres@ip-10-0-1-209:~$ export PATH=/u01/app/postgres/product/18/db_0/bin/:$PATH

The first test is data loading. How long does that take when io_method is set to worker (3 times in a row), this gives a data set of around 1536MB:

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

NOTICE: table "pgbench_accounts" does not exist, skipping

NOTICE: table "pgbench_branches" does not exist, skipping

NOTICE: table "pgbench_history" does not exist, skipping

NOTICE: table "pgbench_tellers" does not exist, skipping

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 31.85 s (drop tables 0.00 s, create tables 0.01 s, client-side generate 24.82 s, vacuum 0.35 s, primary keys 6.68 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 31.97 s (drop tables 0.24 s, create tables 0.00 s, client-side generate 25.44 s, vacuum 0.34 s, primary keys 5.93 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 30.72 s (drop tables 0.26 s, create tables 0.00 s, client-side generate 23.93 s, vacuum 0.55 s, primary keys 5.98 s).

The same test with “sync”:

postgres@ip-10-0-1-209:~$ psql -c "alter system set io_method='sync'"

ALTER SYSTEM

postgres@ip-10-0-1-209:~$ pg_ctl --pgdata=/u02/pgdata/18/data/ restart -l /dev/null

postgres@ip-10-0-1-209:~$ psql -c "show io_method"

io_method

-----------

sync

(1 row)

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 20.89 s (drop tables 0.29 s, create tables 0.01 s, client-side generate 14.70 s, vacuum 0.45 s, primary keys 5.44 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 21.57 s (drop tables 0.20 s, create tables 0.00 s, client-side generate 16.13 s, vacuum 0.46 s, primary keys 4.77 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 21.44 s (drop tables 0.20 s, create tables 0.00 s, client-side generate 16.04 s, vacuum 0.52 s, primary keys 4.67 s).

… and finally “io_uring”:

postgres@ip-10-0-1-209:~$ psql -c "alter system set io_method='io_uring'"

ALTER SYSTEM

postgres@ip-10-0-1-209:~$ pg_ctl --pgdata=/u02/pgdata/18/data/ restart -l /dev/null

waiting for server to shut down.... done

server stopped

waiting for server to start.... done

server started

postgres@ip-10-0-1-209:~$ psql -c "show io_method"

io_method

-----------

io_uring

(1 row)

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 20.63 s (drop tables 0.35 s, create tables 0.01 s, client-side generate 14.92 s, vacuum 0.47 s, primary keys 4.88 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 20.81 s (drop tables 0.29 s, create tables 0.00 s, client-side generate 14.43 s, vacuum 0.46 s, primary keys 5.63 s).

postgres@ip-10-0-1-209:~$ pgbench -i -s 100

dropping old tables...

creating tables...

generating data (client-side)...

vacuuming...

creating primary keys...

done in 21.11 s (drop tables 0.24 s, create tables 0.00 s, client-side generate 15.63 s, vacuum 0.53 s, primary keys 4.70 s).

There not much difference for “sync” and “io_uring”, but “worker” clearly is slower for that type of workload.

Moving on, let’s see how that looks like for a standard pgbench benchmark. We’ll start with “io_uring” as this is the current setting:

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 567989

number of failed transactions: 0 (0.000%)

latency average = 2.113 ms

initial connection time = 8.996 ms

tps = 946.659673 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 557640

number of failed transactions: 0 (0.000%)

latency average = 2.152 ms

initial connection time = 6.994 ms

tps = 929.408406 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 563613

number of failed transactions: 0 (0.000%)

latency average = 2.129 ms

initial connection time = 16.351 ms

tps = 939.378627 (without initial connection time)

Same test with “worker”:

postgres@ip-10-0-1-209:~$ psql -c "alter system set io_method='worker'"

ALTER SYSTEM

postgres@ip-10-0-1-209:~$ pg_ctl --pgdata=/u02/pgdata/18/data/ restart -l /dev/null

waiting for server to shut down............. done

server stopped

waiting for server to start.... done

server started

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 549176

number of failed transactions: 0 (0.000%)

latency average = 2.185 ms

initial connection time = 7.189 ms

tps = 915.301403 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 564898

number of failed transactions: 0 (0.000%)

latency average = 2.124 ms

initial connection time = 11.332 ms

tps = 941.511304 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 563041

number of failed transactions: 0 (0.000%)

latency average = 2.131 ms

initial connection time = 9.120 ms

tps = 938.412979 (without initial connection time)

… and finally “sync”:

postgres@ip-10-0-1-209:~$ psql -c "alter system set io_method='sync'"

ALTER SYSTEM

postgres@ip-10-0-1-209:~$ pg_ctl --pgdata=/u02/pgdata/18/data/ restart -l /dev/null

waiting for server to shut down............ done

server stopped

waiting for server to start.... done

server started

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 560420

number of failed transactions: 0 (0.000%)

latency average = 2.141 ms

initial connection time = 12.000 ms

tps = 934.050237 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 560077

number of failed transactions: 0 (0.000%)

latency average = 2.143 ms

initial connection time = 7.204 ms

tps = 933.469665 (without initial connection time)

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=2 --jobs=2

pgbench (18devel dbi services build)

starting vacuum...end.

transaction type: <builtin: TPC-B (sort of)>

scaling factor: 100

query mode: simple

number of clients: 2

number of threads: 2

maximum number of tries: 1

duration: 600 s

number of transactions actually processed: 566150

number of failed transactions: 0 (0.000%)

latency average = 2.120 ms

initial connection time = 7.579 ms

tps = 943.591451 (without initial connection time)

As you see there is not much difference, no matter the io_method. Let’s stress the system a bit more (only putting the summaries here):

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=10 --jobs=10

## sync

tps = 2552.785398 (without initial connection time)

tps = 2505.476064 (without initial connection time)

tps = 2542.419230 (without initial connection time)

## io_uring

tps = 2511.138931 (without initial connection time)

tps = 2529.705311 (without initial connection time)

tps = 2573.195751 (without initial connection time)

## worker

tps = 2531.657962 (without initial connection time)

tps = 2523.854335 (without initial connection time)

tps = 2515.490351 (without initial connection time)

Some picture, there is not much difference. One last test, hammering the system even more:

postgres@ip-10-0-1-209:~$ pgbench --time=600 --client=20 --jobs=20

## worker

tps = 2930.268033 (without initial connection time)

tps = 2799.499964 (without initial connection time)

tps = 3033.491153 (without initial connection time)

## io_uring

tps = 2942.542882 (without initial connection time)

tps = 3061.487286 (without initial connection time)

tps = 2995.175169 (without initial connection time)

## sync

tps = 2997.654084 (without initial connection time)

tps = 2924.269626 (without initial connection time)

tps = 2753.853272 (without initial connection time)

At least for these tests, there is not much difference between the three settings for io_method (sync seems to be a bit slower), but I think this is still great. For such a massive change getting to the same performance as before is great. Things in PostgreSQL improve all the time, and I am sure there will be a lot of improvements in this area as well.

Usually I link to the commit here, but in this case that would be a whole bunch of commits. To everyone involved in this, a big thank you.

L’article PostgreSQL 18: Support for asynchronous I/O est apparu en premier sur dbi Blog.

Use a expired SLES with OpenSUSE repositories

Last week, I hit a wall when my SUSE Linux Enterprise Server license expired, stopping all repository access. Needing PostgreSQL urgently, I couldn’t wait for SUSE to renew my license and had to act fast.

I chose to disable every SLES repository and switched to the openSUSE Leap repository. This worked flawless and made my system usable in very short time. This is why I wanted to make a short blog about it:

# First, check what repos are active with:

$ sudo zypper repos

# In case you only have SLES-Repositories on your system you can disable all of them at once. Otherwise you will be spammed with error messages when running zypper. To disable all repos in one shot, use:

$ sudo zypper modifyrepo --all --disable

# Now we come to the fun part. Depending on what minor version of release 15 you use, it is needed to change it inside the repository link. In my case I'm using 15.6:

$ slesver=15.6 && sudo zypper addrepo http://download.opensuse.org/distribution/leap/$slesver/repo/oss/ opensuse-leap-oss

# Now we only need to refresh the repositories and accept the gpg-keys:

$ sudo zypper refresh

# From now on we can install any packages we need without ever having to activate the system.This guide shows you how to swap out the paid SUSE Linux Enterprise Server (SLES) repositories, which are professionally managed and maintained by SUSE, for the open-source openSUSE Leap repositories. A community of volunteers, with some help from SUSE, drives and supports the openSUSE Leap repositories. But they lack the enterprise grade support, testing, and update guarantees provided by SLES. By following these steps, you will lose access to SUSE’s official updates, security patches, and support services tied to your expired SLES license. This process converts your system into a community supported setup, which may not align with production or enterprise needs. Proceed at your own risk, and ensure you understand the implications. Especially regarding security, stability, and compliance. To stay up to date with security announcements on OpenSUSE you can subscribe here.

L’article Use a expired SLES with OpenSUSE repositories est apparu en premier sur dbi Blog.

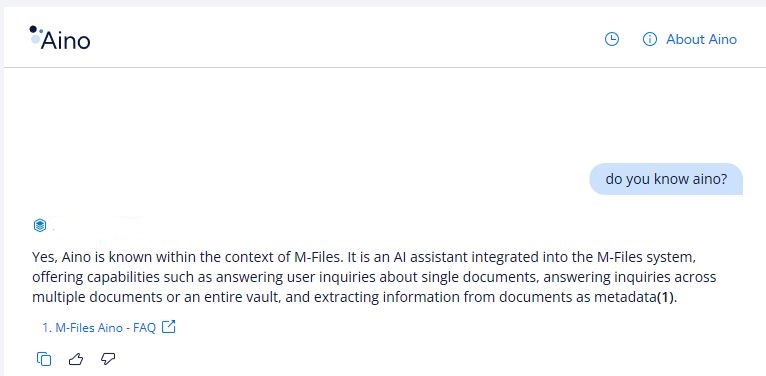

PostgreSQL 18: Add function to report backend memory contexts

Another great feature was committed for PostgreSQL 18 if you are interested how memory is used by a backend process. While you can take a look at the memory contexts for your current session since PostgreSQL 14, there was no way to retrieve that information for another backend.

Since PostgreSQL 14 there is the pg_backend_memory_contexts catalog view. This view displays the memory contexts of the server process attached to the current session, e.g.:

postgres=# select * from pg_backend_memory_contexts;

name | ident | type | level | path | total_bytes | total_nblocks | free_bytes | free_chunks | used_bytes

------------------------------------------------+------------------------------------------------+------------+-------+-----------------------+-------------+---------------+------------+-------------+------------

TopMemoryContext | | AllocSet | 1 | {1} | 174544 | 7 | 36152 | 20 | 138392

Record information cache | | AllocSet | 2 | {1,2} | 8192 | 1 | 1640 | 0 | 6552

RegexpCacheMemoryContext | | AllocSet | 2 | {1,3} | 1024 | 1 | 784 | 0 | 240

collation cache | | AllocSet | 2 | {1,4} | 8192 | 1 | 6808 | 0 | 1384

TableSpace cache | | AllocSet | 2 | {1,5} | 8192 | 1 | 2152 | 0 | 6040

Map from relid to OID of cached composite type | | AllocSet | 2 | {1,6} | 8192 | 1 | 2544 | 0 | 5648

Type information cache | | AllocSet | 2 | {1,7} | 24624 | 2 | 2672 | 0 | 21952

Operator lookup cache | | AllocSet | 2 | {1,8} | 24576 | 2 | 10816 | 4 | 13760

search_path processing cache | | AllocSet | 2 | {1,9} | 8192 | 1 | 5656 | 8 | 2536

RowDescriptionContext | | AllocSet | 2 | {1,10} | 8192 | 1 | 6920 | 0 | 1272

MessageContext | | AllocSet | 2 | {1,11} | 32768 | 3 | 1632 | 0 | 31136

Operator class cache | | AllocSet | 2 | {1,12} | 8192 | 1 | 616 | 0 | 7576

smgr relation table | | AllocSet | 2 | {1,13} | 32768 | 3 | 16904 | 9 | 15864

PgStat Shared Ref Hash | | AllocSet | 2 | {1,14} | 9264 | 2 | 712 | 0 | 8552

PgStat Shared Ref | | AllocSet | 2 | {1,15} | 8192 | 4 | 3440 | 5 | 4752

PgStat Pending | | AllocSet | 2 | {1,16} | 16384 | 5 | 15984 | 58 | 400

TopTransactionContext | | AllocSet | 2 | {1,17} | 8192 | 1 | 7776 | 0 | 416

TransactionAbortContext | | AllocSet | 2 | {1,18} | 32768 | 1 | 32528 | 0 | 240

Portal hash | | AllocSet | 2 | {1,19} | 8192 | 1 | 616 | 0 | 7576

TopPortalContext | | AllocSet | 2 | {1,20} | 8192 | 1 | 7688 | 0 | 504

Relcache by OID | | AllocSet | 2 | {1,21} | 16384 | 2 | 3608 | 3 | 12776

CacheMemoryContext | | AllocSet | 2 | {1,22} | 8487056 | 14 | 3376568 | 3 | 5110488

LOCALLOCK hash | | AllocSet | 2 | {1,23} | 8192 | 1 | 616 | 0 | 7576

WAL record construction | | AllocSet | 2 | {1,24} | 50200 | 2 | 6400 | 0 | 43800

PrivateRefCount | | AllocSet | 2 | {1,25} | 8192 | 1 | 608 | 0 | 7584

MdSmgr | | AllocSet | 2 | {1,26} | 8192 | 1 | 7296 | 0 | 896

GUCMemoryContext | | AllocSet | 2 | {1,27} | 24576 | 2 | 8264 | 1 | 16312

Timezones | | AllocSet | 2 | {1,28} | 104112 | 2 | 2672 | 0 | 101440

ErrorContext | | AllocSet | 2 | {1,29} | 8192 | 1 | 7952 | 0 | 240

RegexpMemoryContext | ^(.*memory.*)$ | AllocSet | 3 | {1,3,30} | 13360 | 5 | 6800 | 8 | 6560

PortalContext | <unnamed> | AllocSet | 3 | {1,20,31} | 1024 | 1 | 608 | 0 | 416

relation rules | pg_backend_memory_contexts | AllocSet | 3 | {1,22,32} | 8192 | 4 | 3840 | 1 | 4352

index info | pg_toast_1255_index | AllocSet | 3 | {1,22,33} | 3072 | 2 | 1152 | 2 | 1920

index info | pg_toast_2619_index | AllocSet | 3 | {1,22,34} | 3072 | 2 | 1152 | 2 | 1920

index info | pg_constraint_conrelid_contypid_conname_index | AllocSet | 3 | {1,22,35} | 3072 | 2 | 1016 | 1 | 2056

index info | pg_statistic_ext_relid_index | AllocSet | 3 | {1,22,36} | 2048 | 2 | 752 | 2 | 1296

index info | pg_index_indrelid_index | AllocSet | 3 | {1,22,37} | 2048 | 2 | 680 | 2 | 1368

index info | pg_db_role_setting_databaseid_rol_index | AllocSet | 3 | {1,22,38} | 3072 | 2 | 1120 | 1 | 1952

index info | pg_opclass_am_name_nsp_index | AllocSet | 3 | {1,22,39} | 3072 | 2 | 1048 | 1 | 2024

index info | pg_foreign_data_wrapper_name_index | AllocSet | 3 | {1,22,40} | 2048 | 2 | 792 | 3 | 1256

index info | pg_enum_oid_index | AllocSet | 3 | {1,22,41} | 2048 | 2 | 824 | 3 | 1224

index info | pg_class_relname_nsp_index | AllocSet | 3 | {1,22,42} | 3072 | 2 | 1080 | 3 | 1992

index info | pg_foreign_server_oid_index | AllocSet | 3 | {1,22,43} | 2048 | 2 | 824 | 3 | 1224

index info | pg_publication_pubname_index | AllocSet | 3 | {1,22,44} | 2048 | 2 | 824 | 3 | 1224

index info | pg_statistic_relid_att_inh_index | AllocSet | 3 | {1,22,45} | 3072 | 2 | 872 | 2 | 2200

index info | pg_cast_source_target_index | AllocSet | 3 | {1,22,46} | 3072 | 2 | 1080 | 3 | 1992

index info | pg_language_name_index | AllocSet | 3 | {1,22,47} | 2048 | 2 | 824 | 3 | 1224

index info | pg_transform_oid_index | AllocSet | 3 | {1,22,48} | 2048 | 2 | 824 | 3 | 1224

index info | pg_collation_oid_index | AllocSet | 3 | {1,22,49} | 2048 | 2 | 680 | 2 | 1368

index info | pg_amop_fam_strat_index | AllocSet | 3 | {1,22,50} | 3248 | 3 | 840 | 0 | 2408

index info | pg_index_indexrelid_index | AllocSet | 3 | {1,22,51} | 2048 | 2 | 680 | 2 | 1368

index info | pg_ts_template_tmplname_index | AllocSet | 3 | {1,22,52} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_ts_config_map_index | AllocSet | 3 | {1,22,53} | 3072 | 2 | 1192 | 2 | 1880

index info | pg_opclass_oid_index | AllocSet | 3 | {1,22,54} | 2048 | 2 | 680 | 2 | 1368

index info | pg_foreign_data_wrapper_oid_index | AllocSet | 3 | {1,22,55} | 2048 | 2 | 792 | 3 | 1256

index info | pg_publication_namespace_oid_index | AllocSet | 3 | {1,22,56} | 2048 | 2 | 792 | 3 | 1256

index info | pg_event_trigger_evtname_index | AllocSet | 3 | {1,22,57} | 2048 | 2 | 824 | 3 | 1224

index info | pg_statistic_ext_name_index | AllocSet | 3 | {1,22,58} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_publication_oid_index | AllocSet | 3 | {1,22,59} | 2048 | 2 | 824 | 3 | 1224

index info | pg_ts_dict_oid_index | AllocSet | 3 | {1,22,60} | 2048 | 2 | 824 | 3 | 1224

index info | pg_event_trigger_oid_index | AllocSet | 3 | {1,22,61} | 2048 | 2 | 824 | 3 | 1224

index info | pg_conversion_default_index | AllocSet | 3 | {1,22,62} | 2224 | 2 | 216 | 0 | 2008

index info | pg_operator_oprname_l_r_n_index | AllocSet | 3 | {1,22,63} | 3248 | 3 | 840 | 0 | 2408

index info | pg_trigger_tgrelid_tgname_index | AllocSet | 3 | {1,22,64} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_extension_oid_index | AllocSet | 3 | {1,22,65} | 2048 | 2 | 824 | 3 | 1224

index info | pg_enum_typid_label_index | AllocSet | 3 | {1,22,66} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_ts_config_oid_index | AllocSet | 3 | {1,22,67} | 2048 | 2 | 824 | 3 | 1224

index info | pg_user_mapping_oid_index | AllocSet | 3 | {1,22,68} | 2048 | 2 | 824 | 3 | 1224

index info | pg_opfamily_am_name_nsp_index | AllocSet | 3 | {1,22,69} | 3072 | 2 | 1192 | 2 | 1880

index info | pg_foreign_table_relid_index | AllocSet | 3 | {1,22,70} | 2048 | 2 | 824 | 3 | 1224

index info | pg_type_oid_index | AllocSet | 3 | {1,22,71} | 2048 | 2 | 680 | 2 | 1368

index info | pg_aggregate_fnoid_index | AllocSet | 3 | {1,22,72} | 2048 | 2 | 680 | 2 | 1368

index info | pg_constraint_oid_index | AllocSet | 3 | {1,22,73} | 2048 | 2 | 824 | 3 | 1224

index info | pg_rewrite_rel_rulename_index | AllocSet | 3 | {1,22,74} | 3072 | 2 | 1152 | 3 | 1920

index info | pg_ts_parser_prsname_index | AllocSet | 3 | {1,22,75} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_ts_config_cfgname_index | AllocSet | 3 | {1,22,76} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_ts_parser_oid_index | AllocSet | 3 | {1,22,77} | 2048 | 2 | 824 | 3 | 1224

index info | pg_publication_rel_prrelid_prpubid_index | AllocSet | 3 | {1,22,78} | 3072 | 2 | 1264 | 2 | 1808

index info | pg_operator_oid_index | AllocSet | 3 | {1,22,79} | 2048 | 2 | 680 | 2 | 1368

index info | pg_namespace_nspname_index | AllocSet | 3 | {1,22,80} | 2048 | 2 | 680 | 2 | 1368

index info | pg_ts_template_oid_index | AllocSet | 3 | {1,22,81} | 2048 | 2 | 824 | 3 | 1224

index info | pg_amop_opr_fam_index | AllocSet | 3 | {1,22,82} | 3072 | 2 | 904 | 1 | 2168

index info | pg_default_acl_role_nsp_obj_index | AllocSet | 3 | {1,22,83} | 3072 | 2 | 1160 | 2 | 1912

index info | pg_collation_name_enc_nsp_index | AllocSet | 3 | {1,22,84} | 3072 | 2 | 904 | 1 | 2168

index info | pg_publication_rel_oid_index | AllocSet | 3 | {1,22,85} | 2048 | 2 | 824 | 3 | 1224

index info | pg_range_rngtypid_index | AllocSet | 3 | {1,22,86} | 2048 | 2 | 824 | 3 | 1224

index info | pg_ts_dict_dictname_index | AllocSet | 3 | {1,22,87} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_type_typname_nsp_index | AllocSet | 3 | {1,22,88} | 3072 | 2 | 1080 | 3 | 1992

index info | pg_opfamily_oid_index | AllocSet | 3 | {1,22,89} | 2048 | 2 | 680 | 2 | 1368

index info | pg_statistic_ext_oid_index | AllocSet | 3 | {1,22,90} | 2048 | 2 | 824 | 3 | 1224

index info | pg_statistic_ext_data_stxoid_inh_index | AllocSet | 3 | {1,22,91} | 3072 | 2 | 1264 | 2 | 1808

index info | pg_class_oid_index | AllocSet | 3 | {1,22,92} | 2048 | 2 | 680 | 2 | 1368

index info | pg_proc_proname_args_nsp_index | AllocSet | 3 | {1,22,93} | 3072 | 2 | 1048 | 1 | 2024

index info | pg_partitioned_table_partrelid_index | AllocSet | 3 | {1,22,94} | 2048 | 2 | 792 | 3 | 1256

index info | pg_range_rngmultitypid_index | AllocSet | 3 | {1,22,95} | 2048 | 2 | 824 | 3 | 1224

index info | pg_transform_type_lang_index | AllocSet | 3 | {1,22,96} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_attribute_relid_attnum_index | AllocSet | 3 | {1,22,97} | 3072 | 2 | 1080 | 3 | 1992

index info | pg_proc_oid_index | AllocSet | 3 | {1,22,98} | 2048 | 2 | 680 | 2 | 1368

index info | pg_language_oid_index | AllocSet | 3 | {1,22,99} | 2048 | 2 | 824 | 3 | 1224

index info | pg_namespace_oid_index | AllocSet | 3 | {1,22,100} | 2048 | 2 | 680 | 2 | 1368

index info | pg_amproc_fam_proc_index | AllocSet | 3 | {1,22,101} | 3248 | 3 | 840 | 0 | 2408

index info | pg_foreign_server_name_index | AllocSet | 3 | {1,22,102} | 2048 | 2 | 824 | 3 | 1224

index info | pg_attribute_relid_attnam_index | AllocSet | 3 | {1,22,103} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_publication_namespace_pnnspid_pnpubid_index | AllocSet | 3 | {1,22,104} | 3072 | 2 | 1264 | 2 | 1808

index info | pg_conversion_oid_index | AllocSet | 3 | {1,22,105} | 2048 | 2 | 824 | 3 | 1224

index info | pg_user_mapping_user_server_index | AllocSet | 3 | {1,22,106} | 3072 | 2 | 1264 | 2 | 1808

index info | pg_subscription_rel_srrelid_srsubid_index | AllocSet | 3 | {1,22,107} | 3072 | 2 | 1264 | 2 | 1808

index info | pg_sequence_seqrelid_index | AllocSet | 3 | {1,22,108} | 2048 | 2 | 824 | 3 | 1224

index info | pg_extension_name_index | AllocSet | 3 | {1,22,109} | 2048 | 2 | 824 | 3 | 1224

index info | pg_conversion_name_nsp_index | AllocSet | 3 | {1,22,110} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_authid_oid_index | AllocSet | 3 | {1,22,111} | 2048 | 2 | 680 | 2 | 1368

index info | pg_auth_members_member_role_index | AllocSet | 3 | {1,22,112} | 3072 | 2 | 1160 | 2 | 1912

index info | pg_subscription_oid_index | AllocSet | 3 | {1,22,113} | 2048 | 2 | 824 | 3 | 1224

index info | pg_parameter_acl_oid_index | AllocSet | 3 | {1,22,114} | 2048 | 2 | 824 | 3 | 1224

index info | pg_tablespace_oid_index | AllocSet | 3 | {1,22,115} | 2048 | 2 | 680 | 2 | 1368

index info | pg_parameter_acl_parname_index | AllocSet | 3 | {1,22,116} | 2048 | 2 | 824 | 3 | 1224

index info | pg_shseclabel_object_index | AllocSet | 3 | {1,22,117} | 3072 | 2 | 1192 | 2 | 1880

index info | pg_replication_origin_roname_index | AllocSet | 3 | {1,22,118} | 2048 | 2 | 792 | 3 | 1256

index info | pg_database_datname_index | AllocSet | 3 | {1,22,119} | 2048 | 2 | 680 | 2 | 1368

index info | pg_subscription_subname_index | AllocSet | 3 | {1,22,120} | 3072 | 2 | 1296 | 3 | 1776

index info | pg_replication_origin_roiident_index | AllocSet | 3 | {1,22,121} | 2048 | 2 | 792 | 3 | 1256

index info | pg_auth_members_role_member_index | AllocSet | 3 | {1,22,122} | 3072 | 2 | 1160 | 2 | 1912

index info | pg_database_oid_index | AllocSet | 3 | {1,22,123} | 2048 | 2 | 680 | 2 | 1368

index info | pg_authid_rolname_index | AllocSet | 3 | {1,22,124} | 2048 | 2 | 680 | 2 | 1368

GUC hash table | | AllocSet | 3 | {1,27,125} | 32768 | 3 | 11696 | 6 | 21072

ExecutorState | | AllocSet | 4 | {1,20,31,126} | 49200 | 4 | 13632 | 3 | 35568

tuplestore tuples | | Generation | 5 | {1,20,31,126,127} | 32768 | 3 | 13360 | 0 | 19408

printtup | | AllocSet | 5 | {1,20,31,126,128} | 8192 | 1 | 7952 | 0 | 240

Table function arguments | | AllocSet | 5 | {1,20,31,126,129} | 8192 | 1 | 7912 | 0 | 280

ExprContext | | AllocSet | 5 | {1,20,31,126,130} | 32768 | 3 | 5656 | 4 | 27112

pg_get_backend_memory_contexts | | AllocSet | 6 | {1,20,31,126,130,131} | 16384 | 2 | 5664 | 3 | 10720

(131 rows)

This is quite some information, but as said above, this information is only available for the backend process which is attached to the current session.

Starting with PostgreSQL 18, you can most probably get those statistics for other backends as well. For this a new function was added:

postgres=# \dfS pg_get_process_memory_contexts

List of functions

Schema | Name | Result data type | Argument da>

------------+--------------------------------+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------->

pg_catalog | pg_get_process_memory_contexts | SETOF record | pid integer, summary boolean, retries double precision, OUT name text, OUT ident text, OUT type text, OUT path integer[], OUT level integer, OUT total_bytes bigint, OUT >

(1 row)

Let’s play a bit with this. Suppose we have a session which reports this backend process ID:

postgres=# select version();

version

--------------------------------------------------------------------

PostgreSQL 18devel on x86_64-linux, compiled by gcc-14.2.1, 64-bit

(1 row)

postgres=# select pg_backend_pid();

pg_backend_pid

----------------

31291

(1 row)

In another session we can now ask for the summary of the memory contexts for the PID we got in the first session like this (the second parameter turns on the summary, the third is the waiting time in seconds for updated statistics):

postgres=# select * from pg_get_process_memory_contexts(31291,true,2);

name | ident | type | path | level | total_bytes | total_nblocks | free_bytes | free_chunks | used_bytes | num_agg_contexts | stats_timestamp

------------------------------+-------+----------+--------+-------+-------------+---------------+------------+-------------+------------+------------------+------------------------------

TopMemoryContext | | AllocSet | {1} | 1 | 141776 | 6 | 5624 | 11 | 136152 | 1 | 2025-04-08 13:37:38.63979+02

| | ??? | | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2025-04-08 13:37:38.63979+02

search_path processing cache | | AllocSet | {1,2} | 2 | 8192 | 1 | 5656 | 8 | 2536 | 1 | 2025-04-08 13:37:38.63979+02

RowDescriptionContext | | AllocSet | {1,3} | 2 | 8192 | 1 | 6920 | 0 | 1272 | 1 | 2025-04-08 13:37:38.63979+02

MessageContext | | AllocSet | {1,4} | 2 | 16384 | 2 | 7880 | 2 | 8504 | 2 | 2025-04-08 13:37:38.63979+02

Operator class cache | | AllocSet | {1,5} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:37:38.63979+02

smgr relation table | | AllocSet | {1,6} | 2 | 16384 | 2 | 4664 | 3 | 11720 | 1 | 2025-04-08 13:37:38.63979+02

PgStat Shared Ref Hash | | AllocSet | {1,7} | 2 | 9264 | 2 | 712 | 0 | 8552 | 1 | 2025-04-08 13:37:38.63979+02

PgStat Shared Ref | | AllocSet | {1,8} | 2 | 4096 | 3 | 1760 | 3 | 2336 | 1 | 2025-04-08 13:37:38.63979+02

PgStat Pending | | AllocSet | {1,9} | 2 | 8192 | 4 | 7832 | 28 | 360 | 1 | 2025-04-08 13:37:38.63979+02

TopTransactionContext | | AllocSet | {1,10} | 2 | 8192 | 1 | 7952 | 0 | 240 | 1 | 2025-04-08 13:37:38.63979+02

TransactionAbortContext | | AllocSet | {1,11} | 2 | 32768 | 1 | 32528 | 0 | 240 | 1 | 2025-04-08 13:37:38.63979+02

Portal hash | | AllocSet | {1,12} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:37:38.63979+02

TopPortalContext | | AllocSet | {1,13} | 2 | 8192 | 1 | 7952 | 1 | 240 | 1 | 2025-04-08 13:37:38.63979+02

Relcache by OID | | AllocSet | {1,14} | 2 | 16384 | 2 | 3608 | 3 | 12776 | 1 | 2025-04-08 13:37:38.63979+02

CacheMemoryContext | | AllocSet | {1,15} | 2 | 737984 | 182 | 183208 | 221 | 554776 | 88 | 2025-04-08 13:37:38.63979+02

LOCALLOCK hash | | AllocSet | {1,16} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:37:38.63979+02

WAL record construction | | AllocSet | {1,17} | 2 | 50200 | 2 | 6400 | 0 | 43800 | 1 | 2025-04-08 13:37:38.63979+02

PrivateRefCount | | AllocSet | {1,18} | 2 | 8192 | 1 | 2672 | 0 | 5520 | 1 | 2025-04-08 13:37:38.63979+02

MdSmgr | | AllocSet | {1,19} | 2 | 8192 | 1 | 7936 | 0 | 256 | 1 | 2025-04-08 13:37:38.63979+02

GUCMemoryContext | | AllocSet | {1,20} | 2 | 57344 | 5 | 19960 | 7 | 37384 | 2 | 2025-04-08 13:37:38.63979+02

Timezones | | AllocSet | {1,21} | 2 | 104112 | 2 | 2672 | 0 | 101440 | 1 | 2025-04-08 13:37:38.63979+02

Turning off the summary, gives you the full picture:

postgres=# select * from pg_get_process_memory_contexts(31291,false,2) order by level, name;

name | ident | type | path | level | total_bytes | total_nblocks | free_bytes | free_chunks | used_bytes | num_agg_contexts | stats_timestamp

---------------------------------------+------------------------------------------------+----------+------------+-------+-------------+---------------+------------+-------------+------------+------------------+-------------------------------

TopMemoryContext | | AllocSet | {1} | 1 | 141776 | 6 | 5624 | 11 | 136152 | 1 | 2025-04-08 13:38:02.508423+02

CacheMemoryContext | | AllocSet | {1,15} | 2 | 524288 | 7 | 101280 | 1 | 423008 | 1 | 2025-04-08 13:38:02.508423+02

ErrorContext | | AllocSet | {1,22} | 2 | 8192 | 1 | 7952 | 4 | 240 | 1 | 2025-04-08 13:38:02.508423+02

GUCMemoryContext | | AllocSet | {1,20} | 2 | 24576 | 2 | 8264 | 1 | 16312 | 1 | 2025-04-08 13:38:02.508423+02

LOCALLOCK hash | | AllocSet | {1,16} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:38:02.508423+02

MdSmgr | | AllocSet | {1,19} | 2 | 8192 | 1 | 7936 | 0 | 256 | 1 | 2025-04-08 13:38:02.508423+02

MessageContext | | AllocSet | {1,4} | 2 | 16384 | 2 | 2664 | 4 | 13720 | 1 | 2025-04-08 13:38:02.508423+02

Operator class cache | | AllocSet | {1,5} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:38:02.508423+02

PgStat Pending | | AllocSet | {1,9} | 2 | 8192 | 4 | 7832 | 28 | 360 | 1 | 2025-04-08 13:38:02.508423+02

PgStat Shared Ref | | AllocSet | {1,8} | 2 | 4096 | 3 | 1760 | 3 | 2336 | 1 | 2025-04-08 13:38:02.508423+02

PgStat Shared Ref Hash | | AllocSet | {1,7} | 2 | 9264 | 2 | 712 | 0 | 8552 | 1 | 2025-04-08 13:38:02.508423+02

Portal hash | | AllocSet | {1,12} | 2 | 8192 | 1 | 616 | 0 | 7576 | 1 | 2025-04-08 13:38:02.508423+02

PrivateRefCount | | AllocSet | {1,18} | 2 | 8192 | 1 | 2672 | 0 | 5520 | 1 | 2025-04-08 13:38:02.508423+02

Relcache by OID | | AllocSet | {1,14} | 2 | 16384 | 2 | 3608 | 3 | 12776 | 1 | 2025-04-08 13:38:02.508423+02

RowDescriptionContext | | AllocSet | {1,3} | 2 | 8192 | 1 | 6920 | 0 | 1272 | 1 | 2025-04-08 13:38:02.508423+02

search_path processing cache | | AllocSet | {1,2} | 2 | 8192 | 1 | 5656 | 8 | 2536 | 1 | 2025-04-08 13:38:02.508423+02

smgr relation table | | AllocSet | {1,6} | 2 | 16384 | 2 | 4664 | 3 | 11720 | 1 | 2025-04-08 13:38:02.508423+02

Timezones | | AllocSet | {1,21} | 2 | 104112 | 2 | 2672 | 0 | 101440 | 1 | 2025-04-08 13:38:02.508423+02

TopPortalContext | | AllocSet | {1,13} | 2 | 8192 | 1 | 7952 | 1 | 240 | 1 | 2025-04-08 13:38:02.508423+02

TopTransactionContext | | AllocSet | {1,10} | 2 | 8192 | 1 | 7952 | 0 | 240 | 1 | 2025-04-08 13:38:02.508423+02

TransactionAbortContext | | AllocSet | {1,11} | 2 | 32768 | 1 | 32528 | 0 | 240 | 1 | 2025-04-08 13:38:02.508423+02

WAL record construction | | AllocSet | {1,17} | 2 | 50200 | 2 | 6400 | 0 | 43800 | 1 | 2025-04-08 13:38:02.508423+02

GUC hash table | | AllocSet | {1,20,111} | 3 | 32768 | 3 | 11696 | 6 | 21072 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_dict_oid_index | AllocSet | {1,15,46} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_event_trigger_oid_index | AllocSet | {1,15,47} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_conversion_default_index | AllocSet | {1,15,48} | 3 | 2224 | 2 | 216 | 0 | 2008 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_operator_oprname_l_r_n_index | AllocSet | {1,15,49} | 3 | 2224 | 2 | 216 | 0 | 2008 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_trigger_tgrelid_tgname_index | AllocSet | {1,15,50} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_extension_oid_index | AllocSet | {1,15,51} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_enum_typid_label_index | AllocSet | {1,15,52} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_config_oid_index | AllocSet | {1,15,53} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_user_mapping_oid_index | AllocSet | {1,15,54} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_opfamily_am_name_nsp_index | AllocSet | {1,15,55} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_foreign_table_relid_index | AllocSet | {1,15,56} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_type_oid_index | AllocSet | {1,15,57} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_aggregate_fnoid_index | AllocSet | {1,15,58} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_constraint_oid_index | AllocSet | {1,15,59} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_rewrite_rel_rulename_index | AllocSet | {1,15,60} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_parser_prsname_index | AllocSet | {1,15,61} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_config_cfgname_index | AllocSet | {1,15,62} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_parser_oid_index | AllocSet | {1,15,63} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_rel_prrelid_prpubid_index | AllocSet | {1,15,64} | 3 | 3072 | 2 | 1264 | 2 | 1808 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_operator_oid_index | AllocSet | {1,15,65} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_namespace_nspname_index | AllocSet | {1,15,66} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_template_oid_index | AllocSet | {1,15,67} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_amop_opr_fam_index | AllocSet | {1,15,68} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_default_acl_role_nsp_obj_index | AllocSet | {1,15,69} | 3 | 3072 | 2 | 1160 | 2 | 1912 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_collation_name_enc_nsp_index | AllocSet | {1,15,70} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_rel_oid_index | AllocSet | {1,15,71} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_range_rngtypid_index | AllocSet | {1,15,72} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_dict_dictname_index | AllocSet | {1,15,73} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_type_typname_nsp_index | AllocSet | {1,15,74} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_opfamily_oid_index | AllocSet | {1,15,75} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_statistic_ext_oid_index | AllocSet | {1,15,76} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_statistic_ext_data_stxoid_inh_index | AllocSet | {1,15,77} | 3 | 3072 | 2 | 1264 | 2 | 1808 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_class_oid_index | AllocSet | {1,15,78} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_proc_proname_args_nsp_index | AllocSet | {1,15,79} | 3 | 3072 | 2 | 1048 | 1 | 2024 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_partitioned_table_partrelid_index | AllocSet | {1,15,80} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_range_rngmultitypid_index | AllocSet | {1,15,81} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_transform_type_lang_index | AllocSet | {1,15,82} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_attribute_relid_attnum_index | AllocSet | {1,15,83} | 3 | 3072 | 2 | 1080 | 3 | 1992 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_proc_oid_index | AllocSet | {1,15,84} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_language_oid_index | AllocSet | {1,15,85} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_namespace_oid_index | AllocSet | {1,15,86} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_amproc_fam_proc_index | AllocSet | {1,15,87} | 3 | 3248 | 3 | 912 | 0 | 2336 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_foreign_server_name_index | AllocSet | {1,15,88} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_attribute_relid_attnam_index | AllocSet | {1,15,89} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_namespace_pnnspid_pnpubid_index | AllocSet | {1,15,90} | 3 | 3072 | 2 | 1264 | 2 | 1808 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_conversion_oid_index | AllocSet | {1,15,91} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_user_mapping_user_server_index | AllocSet | {1,15,92} | 3 | 3072 | 2 | 1264 | 2 | 1808 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_subscription_rel_srrelid_srsubid_index | AllocSet | {1,15,93} | 3 | 3072 | 2 | 1264 | 2 | 1808 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_sequence_seqrelid_index | AllocSet | {1,15,94} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_extension_name_index | AllocSet | {1,15,95} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_conversion_name_nsp_index | AllocSet | {1,15,96} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_authid_oid_index | AllocSet | {1,15,97} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_subscription_oid_index | AllocSet | {1,15,99} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_parameter_acl_oid_index | AllocSet | {1,15,100} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_tablespace_oid_index | AllocSet | {1,15,101} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_parameter_acl_parname_index | AllocSet | {1,15,102} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_shseclabel_object_index | AllocSet | {1,15,103} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_replication_origin_roname_index | AllocSet | {1,15,104} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_database_datname_index | AllocSet | {1,15,105} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_subscription_subname_index | AllocSet | {1,15,106} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_replication_origin_roiident_index | AllocSet | {1,15,107} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_auth_members_role_member_index | AllocSet | {1,15,108} | 3 | 3072 | 2 | 1160 | 2 | 1912 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_database_oid_index | AllocSet | {1,15,109} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_authid_rolname_index | AllocSet | {1,15,110} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_auth_members_member_role_index | AllocSet | {1,15,98} | 3 | 3072 | 2 | 1160 | 2 | 1912 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_db_role_setting_databaseid_rol_index | AllocSet | {1,15,24} | 3 | 3072 | 2 | 1120 | 1 | 1952 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_opclass_am_name_nsp_index | AllocSet | {1,15,25} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_foreign_data_wrapper_name_index | AllocSet | {1,15,26} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_enum_oid_index | AllocSet | {1,15,27} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_class_relname_nsp_index | AllocSet | {1,15,28} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_foreign_server_oid_index | AllocSet | {1,15,29} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_pubname_index | AllocSet | {1,15,30} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_statistic_relid_att_inh_index | AllocSet | {1,15,31} | 3 | 3072 | 2 | 1160 | 2 | 1912 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_cast_source_target_index | AllocSet | {1,15,32} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_language_name_index | AllocSet | {1,15,33} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_transform_oid_index | AllocSet | {1,15,34} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_collation_oid_index | AllocSet | {1,15,35} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_amop_fam_strat_index | AllocSet | {1,15,36} | 3 | 2224 | 2 | 216 | 0 | 2008 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_index_indexrelid_index | AllocSet | {1,15,37} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_template_tmplname_index | AllocSet | {1,15,38} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_ts_config_map_index | AllocSet | {1,15,39} | 3 | 3072 | 2 | 1192 | 2 | 1880 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_opclass_oid_index | AllocSet | {1,15,40} | 3 | 2048 | 2 | 680 | 2 | 1368 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_foreign_data_wrapper_oid_index | AllocSet | {1,15,41} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_namespace_oid_index | AllocSet | {1,15,42} | 3 | 2048 | 2 | 792 | 3 | 1256 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_event_trigger_evtname_index | AllocSet | {1,15,43} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_statistic_ext_name_index | AllocSet | {1,15,44} | 3 | 3072 | 2 | 1296 | 3 | 1776 | 1 | 2025-04-08 13:38:02.508423+02

index info | pg_publication_oid_index | AllocSet | {1,15,45} | 3 | 2048 | 2 | 824 | 3 | 1224 | 1 | 2025-04-08 13:38:02.508423+02

pg_get_remote_backend_memory_contexts | | AllocSet | {1,4,23} | 3 | 16384 | 2 | 6568 | 3 | 9816 | 1 | 2025-04-08 13:38:02.508423+02

(111 rows)

As you can see above there are many entries with “index info” which are not directly visible in the summary view. The reason is the aggregation when you go for the summary. All “index info” entries are aggregated into under “CacheMemoryContext” and we can easily verify this:

postgres=# select count(*) from pg_get_process_memory_contexts(31291,false,2) where name = 'index info';

count

-------

87

(1 row)

… which is very close to the 88 aggregations reported in the summary. Excluding all the system/catalog indexes we get the following picture:

postgres=# select * from pg_get_process_memory_contexts(31291,false,2) where name = 'index info' and ident !~ 'pg_';

name | ident | type | path | level | total_bytes | total_nblocks | free_bytes | free_chunks | used_bytes | num_agg_contexts | stats_timestamp

------+-------+------+------+-------+-------------+---------------+------------+-------------+------------+------------------+-----------------

(0 rows)

-- system/catalog indexes

postgres=# select count(*) from pg_get_process_memory_contexts(31291,false,2) where name = 'index info' and ident ~ 'pg_';

count

-------

87

(1 row)

Creating a new table and an index on that table in the first session will change the picture to this:

-- first session

postgres=# create table t ( a int );

CREATE TABLE

postgres=# create index i on t(a);

CREATE INDEX

postgres=#

-- second session

postgres=# select * from pg_get_process_memory_contexts(31291,false,2) where name = 'index info' and ident !~ 'pg_';

name | ident | type | path | level | total_bytes | total_nblocks | free_bytes | free_chunks | used_bytes | num_agg_contexts | stats_timestamp

------------+-------+----------+-----------+-------+-------------+---------------+------------+-------------+------------+------------------+-------------------------------

index info | i | AllocSet | {1,16,26} | 3 | 2048 | 2 | 776 | 3 | 1272 | 1 | 2025-04-08 13:44:55.496668+02

(1 row)

… and this will also increase the aggregation count we did above:

postgres=# select count(*) from pg_get_process_memory_contexts(31291,false,2) where name = 'index info';

count

-------

98

(1 row)

… but why to 98 and not to 89? Because additional system indexes have also been loaded (I let it as an exercise to you find out which ones those are):

postgres=# select count(*) from pg_get_process_memory_contexts(31291,false,2) where name = 'index info' and ident ~ 'pg_';

count

-------

97

(1 row)

You can go on and do additional tests for the other memory contexts to get an idea how that works. Personally, I think this is a great new feature because you can now have a look at the memory contexts of problematic processes. Thanks to all involved, details here.

L’article PostgreSQL 18: Add function to report backend memory contexts est apparu en premier sur dbi Blog.

PostgreSQL 18: Allow NOT NULL constraints to be added as NOT VALID

Before we take a look at what this new feature is about, let’s have a look at how PostgreSQL 17 (and before) handles “NOT NULL” constraints when they get created. As usual we start with a simple table:

postgres=# select version();

version

-----------------------------------------------------------------------------------------------------------------------------

PostgreSQL 17.2 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 14.2.1 20250110 (Red Hat 14.2.1-7), 64-bit

(1 row)

postgres=# create table t ( a int not null, b text );

CREATE TABLE

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | not null |

b | text | | |

Trying to insert data into that table which violates the constraint of course will fail:

postgres=# insert into t select null,1 from generate_series(1,2);

ERROR: null value in column "a" of relation "t" violates not-null constraint

DETAIL: Failing row contains (null, 1).

Even if you can set the column to “NOT NULL” syntax wise, this will not disable the constraint:

postgres=# alter table t alter column a set not null;

ALTER TABLE

postgres=# insert into t select null,1 from generate_series(1,2);

ERROR: null value in column "a" of relation "t" violates not-null constraint

DETAIL: Failing row contains (null, 1).

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | not null |

b | text | | |

The only option you have when you want to do this, is to drop the constraint:

postgres=# alter table t alter column a drop not null;

ALTER TABLE

postgres=# insert into t select null,1 from generate_series(1,2);

INSERT 0 2

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | |

b | text | | |

The use case for this is data loading. Maybe you want to load data where you know that the constraint would be violated but you’re ok with fixing that manually afterwards and then re-enable the constraint like this:

postgres=# update t set a = 1;

UPDATE 2

postgres=# alter table t alter column a set not null;

ALTER TABLE

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | not null |

b | text | | |

postgres=# insert into t select null,1 from generate_series(1,2);

ERROR: null value in column "a" of relation "t" violates not-null constraint

DETAIL: Failing row contains (null, 1).

This will change with PostgreSQL 18. From now you have more options. The following still behaves as before:

postgres=# select version();

version

--------------------------------------------------------------------

PostgreSQL 18devel on x86_64-linux, compiled by gcc-14.2.1, 64-bit

(1 row)

postgres=# create table t ( a int, b text );

CREATE TABLE

postgres=# alter table t add constraint c1 not null a;

ALTER TABLE

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | not null |

b | text | | |

postgres=# insert into t select null,1 from generate_series(1,2);

ERROR: null value in column "a" of relation "t" violates not-null constraint

DETAIL: Failing row contains (null, 1).

This, of course, leads to the same behavior as with PostgreSQL 17 above. But now you can do this:

postgres=# create table t ( a int, b text );

CREATE TABLE

postgres=# insert into t select null,1 from generate_series(1,2);

INSERT 0 2

postgres=# alter table t add constraint c1 not null a not valid;

ALTER TABLE

This gives us a “NOT NULL” constraint which will not be enforced when it is created. Doing the same in PostgreSQL 17 (and before) will scan the table and enforce the constraint:

postgres=# select version();

version

-----------------------------------------------------------------------------------------------------------------------------

PostgreSQL 17.2 dbi services build on x86_64-pc-linux-gnu, compiled by gcc (GCC) 14.2.1 20250110 (Red Hat 14.2.1-7), 64-bit

(1 row)

postgres=# create table t ( a int, b text );

CREATE TABLE

postgres=# insert into t select null,1 from generate_series(1,2);

INSERT 0 2

postgres=# alter table t add constraint c1 not null a not valid;

ERROR: syntax error at or near "not"

LINE 1: alter table t add constraint c1 not null a not valid;

^

postgres=# alter table t alter column a set not null;

ERROR: column "a" of relation "t" contains null values

As you can see the syntax is not supported and adding a “NOT NULL” constraint will scan the table and enforce the constraint.

Back to the PostgreSQL 18 cluster. As we now have data which would violate the constraint:

postgres=# select * from t;

a | b

---+---

| 1

| 1

(2 rows)

postgres=# \d t

Table "public.t"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | not null |

b | text | | |

… we can fix that manually and then validate the constraint afterwards:

postgres=# update t set a = 1;

UPDATE 2

postgres=# alter table t validate constraint c1;

ALTER TABLE

postgres=# insert into t values (null, 'a');

ERROR: null value in column "a" of relation "t" violates not-null constraint

DETAIL: Failing row contains (null, a).

Nice, thanks to all involved, details here.

L’article PostgreSQL 18: Allow NOT NULL constraints to be added as NOT VALID est apparu en premier sur dbi Blog.

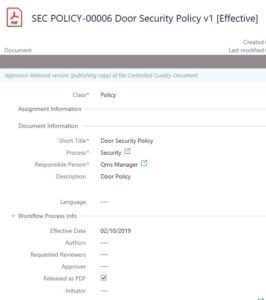

Best Practices for Structuring Metadata in M-Files

In one of my previous post, I talked about the importance of Metadata, it is the skeleton of efficient document management system and particularly in M-Files!

As I like to say, M-Files is like an empty shell that can be very easily customized to obtain the ideal solution for our business/customers.

Unlike traditional folder-based storage, M-Files leverages metadata to classify, search, and retrieve documents with ease.

Properly structuring metadata ensures better organization, improved searchability, and enhanced workflow automation.

In this blog post, we will explore best practices for structuring metadata in M-Files to maximize its potential.

Metadata in M-Files is used to describe documents, making them easier to find and categorize.

Instead of placing files in rigid folder structures, metadata allows documents to be dynamically organized based on properties such as document type, project, client, or status…

Metadata are then used to create views, which are simply predefined searches based on them.

Additionally M-files is able to make automatic relations between different objects.

For example in a project “XXX” related to a customer “YYY”, all associated documents to this project are automatically related (and findable) to the customer.

Or Choosing a “Document Type” can dictate required approval workflows.

Another advantage can be, only the members working on the project can access the customers information and contacts…

Define Clear and Consistent Metadata FieldsWhen setting up metadata in M-Files, define clear fields that align with your business processes. Some essential metadata fields include:

- Document Type (e.g., Invoice, Contract, Report)

- Department (e.g., HR, Finance, Legal)

- Project or Client Name

- Status (e.g., Draft, Approved, Archived)

Ensure consistency by using standardized field names and avoiding duplicate or unnecessary fields.

When values are known like for Departments or Status,… better to use the Value lists to ensure the accuracy of the data (no typo-mistake, no end user creativity).

To make sure to respect the company naming convention, use the automatic values on properties, it can be:

- Automatic numbering

- Customized Numbering

- Concatenation of properties

- Calculated value (script)

M-Files provides customizable metadata cards, allowing users to input relevant data efficiently.

Often there are properties not relevant for the end user, we can use the Metadata card configuration to hide them.

To improve readability, we can also create “section” to logically group the properties.

And Finally, Metadata card Configuration can be used to set default value and provide tips (Property description and/or Tooltip).

Reduce manual entry and improve accuracy by setting up automatic metadata population.

M-Files, with the help of its intelligence service (previous post here), can suggest metadata from file properties, templates, or integrated systems, minimizing human error and saving time.

M-Files is a living system and needs and must evolve with the business needs.

It is important to periodically review metadata structures to ensure they remain relevant.

Refine metadata rules, and continuously train employees on best practices to keep your M-Files environment optimized.

A well-structured metadata system in M-Files enhances efficiency, improves document retrieval, and supports seamless automation. By implementing these best practices, organizations can create a smarter document management strategy that adapts to their needs.

Are you making the most of metadata in M-Files? Good news it’s never to late with M-Files, so start optimizing your structure today!

If you feel a bit lost, we can help you!

L’article Best Practices for Structuring Metadata in M-Files est apparu en premier sur dbi Blog.

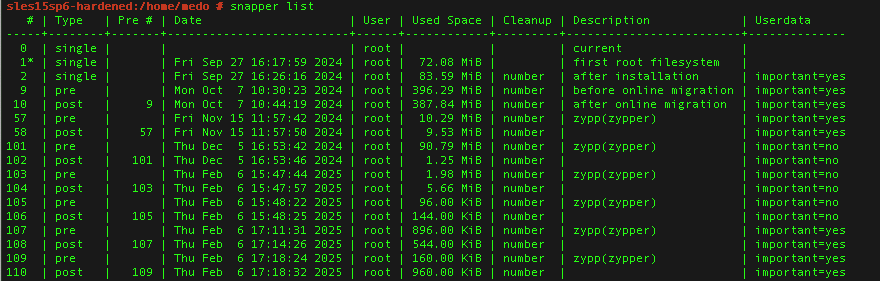

Working with Btrfs & Snapper

In this Blog post I will try to give a pure technical cheat sheet using Btrfs on any distribution. Additionally I will explain how to use one of the most sophisticated backup/ restore tool called “snapper”.

What is Btrfs and how to set it up if not already installed?Btrfs (aka Butter FS or aka B-Tree FS) is like xfs and ext4 a filesystem which offers any linux user many features to maintain or manage their filesystem. Usually Btrfs is used stand-alone but it works with LVM2 too, without any additional configuration.

Key Features of Btrfs:

- Copy-on-Write (CoW): Btrfs uses CoW, meaning it creates new copies of data instead of overwriting existing files.

- Snapshots: You can create instant, space-efficient snapshots of your filesystem or specific directories, like with Snapper on SUSE. These are perfect for backups, rollbacks, or tracking changes (e.g., before/after updates).

- Self-Healing: Btrfs supports data integrity with checksums and can detect and repair errors, especially with RAID configurations.

- Flexible Storage: It handles multiple devices, RAID (0, 1, 5, 6, 10), and dynamic resizing, making it adaptable for growing storage needs.

- Compression: Btrfs can compress files on the fly (e.g., using Zstandard or LZO), saving space without sacrificing performance.

- Subvolumes: Btrfs lets you create logical partitions (subvolumes) within the same filesystem, enabling fine-grained control. It is like having separate root, home, or snapshot subvolumes.

Usually Btrfs is used by default in any SuSE Linux Server (and OpenSuSE) and can be used in RHEL & OL and other distribution. To use Btrfs on any RPM based distribution just install the package “btrfs-progs”. With Debian and Ubuntu this is a bit more tricky, which is why we will keep this blog about RPM-based distributions only.

Create new filesystem and increase it with Btrfs# Wipe any old filesystem on /dev/sdb (careful—data’s toast!)

wipefs -a /dev/vdb

# Create a Btrfs filesystem on /dev/sdb

mkfs.btrfs /dev/vdb

# Make a mount point

mkdir /mnt/btrfs

# Mount it—basic setup, no fancy options yet

mount /dev/vdb /mnt/btrfs

# Check it’s there and Btrfs

df -h /mnt/btrfs

# Add to /etc/fstab for permanence (use your device UUID from blkid)

/dev/vdb /mnt/btrfs btrfs defaults 0 2

# Test fstab

mount -a

# List all Btrfs Filesystems

btrfs filesystem show

# Add additional storage to existing Btrfs filesystem (in our case /)

btrfs add device /dev/vdd /

# In some cases it is smart to balance the storage between all the devices

btrfs balance start /

# If the space allows it you can remove devices from a Btrfs filesystem

btrfs device delete /dev/vdd /# List all snapshots for the root config

snapper -c root list

# Pick a snapshot and check its diff (example with 5 & 6)

snapper -c root diff 5..6

# Roll back to snapshot 5 (dry run first)

snapper -c root rollback 5 --print-number

# Do it for real—reboots to snapshot 5

snapper -c root rollback 5

reboot

# Verify after reboot—root’s now at snapshot 5

snapper -c root list# Mount the Btrfs root filesystem (not a subvolume yet)

mount /dev/vdb /mnt/butter

# Create a subvolume called ‘data’ (only possible inside a existing Btrfs volume)

btrfs subvolume create /mnt/butter/data

# List subvolumes

btrfs subvolume list /mnt/butter

# Make a mount point for the subvolume

mkdir /mnt/data

# Mount the subvolume explicitly

mount -o subvol=data /dev/vdb /mnt/data

# Check it’s mounted as a subvolume

df -h /mnt/data

# Create a new subvolume by creating a snapshot inside the btrfs volume

btrfs subvolume snapshot /mnt/data /mnt/butter/data-snap1

# Delete the snapshot which is a subvolume

btrfs subvolume delete /mnt/data-snap1# Install Snapper if it’s not there

zypper install snapper

# Create a snapper config of filesystem

snapper -c <ConfigName> create-config <btrfs-mountpoint>

# Enable timeline snapshots (if not enabled by default)

echo "TIMELINE_CREATE=\"yes\"" >> /etc/snapper/configs/root

# Set snapshot limits (e.g., keep 10 hourly)

sed -i 's/TIMELINE_LIMIT_HOURLY=.*/TIMELINE_LIMIT_HOURLY="10"/' /etc/snapper/configs/root

# Start the Snapper timer (if not enabled by default)

systemctl enable snapper-timeline.timer

systemctl start snapper-timeline.timer

# Trigger a manual snapshot to test

snapper -c <ConfigName> create -d "Manual test snapshot"

# List snapshots to confirm

snapper -c <ConfigName> listHere is a small overview of the most important settings to use within a snapper config file:

- SPACE_LIMIT=”0.5″

- Sets the maximum fraction of the filesystem’s space that snapshots can occupy. 0.5 = 50%

- FREE_LIMIT=”0.2″

- Ensures a minimum fraction of the filesystem stays free. 0.2= 20%

- ALLOW_USERS=”admin dbi”

- Lists users allowed to manage this Snapper config.

- ALLOW_GROUPS=”admins”

- A list of Groups that are allowed to manage this config.

- SYNC_ACL=”no”

- Syncs permissions from ALLOW_USERS and ALLOW_GROUPS to the .snapshots directory. If yes, Snapper updates the access control lists on /.snapshots to match ALLOW_USERS/ALLOW_GROUPS. With no, it skips this, and you manage permissions manually.

- NUMBER_CLEANUP=”yes”

- When yes, Snapper deletes old numbered snapshots (manual and/ or automated ones) when they exceed NUMBER_LIMIT or age past NUMBER_MIN_AGE.

- NUMBER_MIN_AGE=”1800″

- Minimum age (in seconds) before a numbered snapshot can be deleted.

- NUMBER_LIMIT=”50″

- Maximum number of numbered snapshots to keep.

- NUMBER_LIMIT_IMPORTANT=”10″

- Maximum number of numbered snapshots marked as “important” to keep.

- TIMELINE_CREATE=”yes”

- Enables automatic timeline snapshots.

- TIMELINE_CLEANUP=”yes”

- Enables cleanup of timeline snapshots based on limits.

- TIMELINE_LIMIT_*=”10″

- TIMELINE_LIMIT_HOURLY=”10″

- TIMELINE_LIMIT_DAILY=”10″

- TIMELINE_LIMIT_WEEKLY=”0″ (disabled)

- TIMELINE_LIMIT_MONTHLY=”10″

- TIMELINE_LIMIT_YEARLY=”10″

- Controls how many snapshots Snapper retains over time. Keeps 10 hourly, 10 daily, 10 monthly, and 10 yearly, but skips weekly 0.

For further information about the settings, check out the SUSE documentation.

Btrfs RAID and Multi-Device management# Format two disks (/dev/vdb, /dev/vdc) as Btrfs RAID1

mkfs.btrfs -d raid1 -m raid1 /dev/vdb /dev/vdc

# Mount it

mount /dev/vdb /mnt/btrfs-raid

# Check RAID status

btrfs filesystem show /mnt/btrfs-raid

# Add a third disk (/dev/sdd) to the array

btrfs device add /dev/sdd /mnt/btrfs-raid

# Rebalance to RAID1 across all three (dconvert is data raid and mconvert is metadata raid definition)

btrfs balance start -dconvert=raid1 -mconvert=raid1 /mnt/btrfs-raid

# Check device stats for errors

btrfs device stats /mnt/btrfs-raid

# Remove a disk if needed

btrfs device delete /dev/vdc /mnt/btrfs-raid# Check disk usage?

btrfs filesystem df /mnt/btrfs

# Full filesystem and need more storage? Add an additional empty storage device to the Btrfs volume:

btrfs add device /dev/vde /mnt/btrfs

# If the storage devices has grown (via LVM or virtually) one can resize the size to max:

btrfs filesystem resize max /mnt/btrfs

# Balance to free space if it’s tight (dusage defines from what % the rebalance should start. In our case only 50% or less data per block will trigger the re-balance)

btrfs balance start -dusage=50 /mnt/btrfs

# Too many snapshots? List them

snapper -c root list | wc -l

# Delete old snapshots (e.g., #10)

snapper -c root delete 10

# Check filesystem for corruption

btrfs check /dev/vdb

# Repair if it’s borked (careful—backup first!)

btrfs check --repair /dev/vdb

# Rollback stuck? Force it

snapper -c root rollback 5 --force

Something that needs to be pointed out is that the snapper list is sorted from the oldest (starting at point 1) to the newest. BUT: At the top there is always the current state of the filesystem with the number 0.

L’article Working with Btrfs & Snapper est apparu en premier sur dbi Blog.

Installing and configuring Veeam RMAN plug-in on an ODA

I recently had to install and configure the Veeam RMAN plug-in on an ODA, and would like to provide the steps in this article, as it might be helpful for many other people.

Read more: Installing and configuring Veeam RMAN plug-in on an ODA Create Veeam linux OS user

We will create an OS linux user on the ODA that will be used to authenticate on the Veeam Backup server. This user on the sever will need to have the Veeam Backup Administrator role or Veeam Backup Operator and Veeam Restore Operator roles.

Check if role for user and group is not already used:

[root@ODA02 ~]# grep 497 /etc/group [root@ODA02 ~]# grep 54323 /etc/passwd

Create the group:

[root@ODA02 ~]# groupadd -g 497 veeam

Create the user:

[root@ODA02 ~]# useradd -g 497 -u 54323 -d /home/veeam -s /bin/bash oda_veeam [root@ODA02 ~]# passwd oda_veeam Changing password for user oda_veeam. New password: Retype new password: passwd: all authentication tokens updated successfully.Installing Veeam RMAN plug-in