Claudia Zeiler

Composite Interval Partitioning isn't as advertised.

Oracle® Database VLDB and Partitioning Guide 11g Release 1 (11.1) Part Number B32024-01 says:

Interval Partitioning

Interval partitioning is an extension of range partitioning which instructs the database to automatically create partitions of a specified interval when data inserted into the table exceeds all of the existing range partitions. You must specify at least one range partition.

You can create single-level interval partitioned tables as well as the following composite partitioned tables:

* Interval-range

* Interval-hash

* Interval-list

Sure, I can create these composite partitions, but the results aren't particularly useful. When I tried, Oracle spread my rows nicely between the two hash subpartitions for the manually defined partition, but put everything in the same subpartition for the interval-generated partition. Notice that these are identical sets of rows. The only difference is the key that forces them into the manually specified partition or the generated partition. I assume that there is a Metalink note on this somewhere.

I got equivalent results for interval-list composite partitioning. I won't bore the reader with the step-by-step for that test, since there too all rows in the generated partitions were forced into one subpartition.

Here are my results for the interval hash test:

SQL> create table interval_hash (

N number,

N2 number

)

partition by range(N) interval (2)

SUBPARTITION BY HASH (N2)

(partition p1 values less than (2)

(SUBPARTITION p_1 ,

SUBPARTITION p_2

));

Table created.

SQL> BEGIN

FOR i IN 1 .. 15 LOOP

INSERT INTO interval_hash VALUES (5, i);

INSERT INTO interval_hash VALUES (0, i);

END LOOP;

COMMIT;

END;

/

PL/SQL procedure successfully completed.

SQL> EXEC DBMS_STATS.gather_table_stats(USER, 'INTERVAL_HASH', granularity=>'ALL');

PL/SQL procedure successfully completed.

SQL> SELECT table_name, partition_name, subpartition_name, num_rows

FROM user_tab_subpartitions

ORDER by table_name, partition_name, subpartition_name;

TABLE_NAME     PARTITION_NAME  SUBPARTITION_NAME  NUM_ROWS
-------------  --------------  -----------------  --------
INTERVAL_HASH  P1              P_1                       6
INTERVAL_HASH  P1              P_2                       9
INTERVAL_HASH  SYS_P138        SYS_SUBP137              15

SQL> select * from interval_hash subpartition(p_2) order by n2;

N N2

---------- ----------

0 1

0 3

0 4

0 7

0 9

0 10

0 12

0 14

0 15

9 rows selected.

SQL> select * from interval_hash subpartition(p_1) order by n2;

N N2

---------- ----------

0 2

0 5

0 6

0 8

0 11

0 13

6 rows selected.

SQL> select * from interval_hash subpartition(SYS_SUBP137) ORDER BY N2;

N N2

---------- ----------

5 1

5 2

5 3

5 4

5 5

5 6

5 7

5 8

5 9

5 10

5 11

5 12

5 13

5 14

5 15

15 rows selected.

That's a whole lot of partitions!

I create the simplest table possible and insert 3 rows - generating 3 partitions.

SQL> create table d1 (dt date)

partition by range (dt) interval (numtoyminterval(1,'MONTH'))

(PARTITION P1 VALUES LESS THAN (TO_DATE('08/01/1776', 'MM/DD/YYYY')));

Table created.

SQL> insert into d1 values (to_date('07/04/1776', 'MM/DD/YYYY'));

1 row created.

SQL> insert into d1 values (to_date('09/22/1862', 'MM/DD/YYYY'));

1 row created.

SQL> insert into d1 values (to_date('08/18/1920', 'MM/DD/YYYY'));

1 row created.

SQL> select * from d1;

DT

---------

04-JUL-76

22-SEP-62

18-AUG-20

SQL> select table_name, partition_name from user_tab_partitions where table_name = 'D1';

TABLE_NAME PARTITION_NAME

------------------------------ ------------------------------

D1 P1

D1 SYS_P62

D1 SYS_P63

But when I look at the partition_count in user_part_tables...

SQL> select table_name, partition_count from user_PART_TABLES where table_name = 'D1';

TABLE_NAME PARTITION_COUNT

------------------------------ ---------------

D1 1048575

That's a whole lot of partitions! Clearly that is the maximum possible number of partitions. It's odd that the developers at Oracle chose to store that value there rather than the actual count of partitions created. They obviously have it available. Ah, the mysteries of the Oracle.
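The number reported is 2^20 - 1, which matches Oracle's documented ceiling of 1024K - 1 partitions per table. As a rough check of the arithmetic (Python is used here only as a calculator; `interval_number` is a helper name of my own, not an Oracle function), the sketch below counts how many monthly intervals past the transition point each of my three rows falls. The rows are hundreds of intervals apart, yet only three partitions exist.

```python
from datetime import date

# Oracle's documented limit: 1024K - 1 partitions per table.
MAX_PARTITIONS = 1024 * 1024 - 1

# Upper bound of the single range partition (P1) in the d1 example.
TRANSITION_POINT = date(1776, 8, 1)


def interval_number(d, start=TRANSITION_POINT):
    """Whole months from the transition point to d's month (hypothetical helper).

    Returns None for dates below the transition point, which belong to P1.
    """
    if d < start:
        return None
    return (d.year - start.year) * 12 + (d.month - start.month)


rows = [date(1776, 7, 4), date(1862, 9, 22), date(1920, 8, 18)]
for d in rows:
    print(d.isoformat(), interval_number(d))
print("maximum partitions:", MAX_PARTITIONS)
```

So PARTITION_COUNT looks like the ceiling for an interval-partitioned table, not a count of the segments actually created.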

It's wonderful, but will I survive?

It is all Wonderful. Just one little question. How am I going to survive 3 more days? I'm going to bed!

A trivial index demo

"It is an Ancient DBA and he stoppeth one of three."

I demonstrated the matter a bit to him.

Logged on as scott, I create a play table:

SQL> create table tmp(f1 number(4), f2 number(4), f3 number(4));

Table created.

SQL> insert into tmp values (1, 2, 3);

1 row created.

SQL> create index tmp_f2 on tmp(f2);

Index created.

SQL> drop index tmp_f2;

Index dropped.

All straightforward. The index was created 'explicitly' and there is no constraint that it affects if it is dropped, so I can drop it without a problem.

Now to add a constraint, and an 'implicit' index.

SQL> alter table tmp modify f2 unique;

Table altered.

SQL> SELECT CONSTRAINT_NAME FROM USER_CONSTRAINTS WHERE TABLE_NAME = 'TMP';

CONSTRAINT_NAME

------------------------------

SYS_C0011482

SQL> select index_name from user_indexes where table_name = 'TMP';

INDEX_NAME

------------------------------

SYS_C0011482

The constraint has been created, as has an index.

SQL> drop index SYS_C0011482;

drop index SYS_C0011482

*

ERROR at line 1:

ORA-02429: cannot drop index used for enforcement of unique/primary key

and as expected, the index cannot be dropped since it is used by the constraint.

It is all very trivial.

What did get a little interesting was that I was able to enter multiple rows of nulls in a field with a 'unique' constraint. I need to review the properties of null.
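The short version of that review: NULL is never equal to anything, including another NULL, so a single-column unique constraint does not see two NULLs as duplicates. The sketch below reproduces the behaviour in Python using the standard library's SQLite as a stand-in. That is an assumption for illustration only; it is not Oracle, although both treat NULLs in a single-column unique key this way.

```python
import sqlite3

# SQLite stands in for Oracle here (assumption: illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tmp (f1 INTEGER, f2 INTEGER UNIQUE, f3 INTEGER)")

# Two rows with NULL in the unique column are both accepted.
conn.execute("INSERT INTO tmp VALUES (1, NULL, 3)")
conn.execute("INSERT INTO tmp VALUES (2, NULL, 3)")
null_rows = conn.execute(
    "SELECT COUNT(*) FROM tmp WHERE f2 IS NULL"
).fetchone()[0]

# A repeated non-NULL value is rejected by the constraint.
conn.execute("INSERT INTO tmp VALUES (3, 7, 3)")
try:
    conn.execute("INSERT INTO tmp VALUES (4, 7, 3)")
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

print("rows with NULL f2:", null_rows)
print("duplicate 7 rejected:", duplicate_rejected)
```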

And the real question that was never answered

"Now wherefore stopp'st thou me?"

OOW any free Discover tickets around?

I see that an Oracle OpenWorld Discover ticket costs $50. I remember that it cost something in prior years, but that there was some way to get it for free if you signed up by the early bird date. Is there such a thing again this year?

I have seen the 'chance for a full registration', and have entered, but I haven't seen the free 'discover' pass. Is it around?

TYIA

Making problems for myself

Playing around with my toy database I asked myself, "What happens if DUAL has more than 1 row?" I found out.

SQL> insert into dual values ('Y');

1 row created.

SQL> select * from dual;

D

-

X

SQL> select count(*) from dual;

COUNT(*)

----------

1

I tried it again. Same result. "Oh, I guess I can't insert into DUAL", says I, and I went about my business.

Later I logged on as SCOTT and tried to drop a table. From all my playing around, I have more EMP tables than employees.

SQL> DROP TABLE EMP4;

ERROR at line 1:

ORA-00604: error occurred at recursive SQL level 1

ORA-01422: exact fetch returns more than requested number of rows

WHAT??!!?

Yes it is there and there is only 1 table called EMP4.

SQL> SELECT OWNER, OBJECT_NAME FROM ALL_OBJECTS WHERE OBJECT_NAME = 'EMP4';

OWNER OBJECT_NAME

------------------------------ ------------------------------

SCOTT EMP4

I looked the matter up at orafaq and followed the instructions.

SQL> select * from dual;

D

-

X

SQL> create table temp_dual as select * from dual;

Table created.

SQL> select * from temp_dual;

D

-

X

Y

Y

Yes, I plead guilty. I DID succeed in inserting those rows into DUAL.

SQL> delete from dual where dummy = 'Y';

1 row deleted.

Strange. It deleted 1 row even though I had put 2 in.

SQL> drop table temp_dual;

drop table temp_dual

*

ERROR at line 1:

ORA-00604: error occurred at recursive SQL level 1

ORA-01422: exact fetch returns more than requested number of rows

I deleted the second excess row:

SQL> delete from dual where dummy = 'Y';

1 row deleted.

and I had a functioning database back.

SQL> DROP TABLE TEMP_DUAL;

Table dropped.

and then as SCOTT

SQL> DROP TABLE EMP4;

Table dropped.

OK, I get it: Oracle consults DUAL in the drop process. And don't go messing up a database of any importance. But it is odd how the fact that I was succeeding in messing things up was hidden from me. Yes, it told me that I had inserted the row, but then it didn't display it with a select. It was an interesting bit of play.

Lazy Log Writer - Vindication & thank you Fitaloon

I crawled out of bed, bleary eyed, signed in, and tried to start it. I got:

ALTER DATABASE MOUNT

Sat Oct 11 00:35:05 2008

ORA-00202: control file: '/u01/control02.ctl'

ORA-27037: unable to obtain file status

That woke me up. OK, Who/what messed with the control file?

I tried to look at the file and got a message that told me that not only was the control file inaccessible, the entire drive /u01 was inaccessible.

I called across the great divide to the company who controls the hardware and eventually got, "The backup battery for the write cache needs replacing in the SAN".

Monday morning I re-sent my email to management from a week and a half before. I highlighted my paraphrasing of Fitaloon's comment on my first posting on this subject, "Could our problem be caused by the write cache on the disk having some sort of problem? For example, could it be something as simple as failed backup batteries for the write cache?" Thank you, Fitaloon. You hit the nail on the head.

Since the replacement of the battery, our log writer has been zipping right along. Good performance, at last!

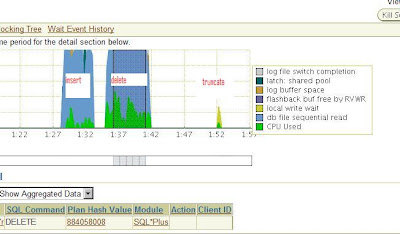

Delete vs. Truncate - graphically

I had not been expecting such a graphical reinforcement of the rule. I had found the full table delete in some executing code and was curious how much redo it was generating. I ran René Nyffenegger's script 'how_much_redo'.

Assuming that it is giving accurate results, here is what I got for the 3 operations.

SQL> INSERT INTO T1_DEL (SELECT * FROM T1);

1161874 rows created.

SQL> exec how_much_redo;

New Redo Bytes Written: 408985600 (390 MB of redo TO INSERT)

SQL> delete from T1_DEL;

1161874 rows deleted.

Elapsed: 00:06:30.90 (6 MINUTES TO DELETE)

New Redo Bytes Written: 661496320 (630 MB of redo TO DELETE)

SQL> truncate table T1;

Table truncated.

Elapsed: 00:00:06.80 (6 SECONDS TO TRUNCATE)

New Redo Bytes Written: 815616 (less than 1Mb of redo to TRUNCATE)
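To put the three figures side by side, here is a small Python sketch that converts the byte counts reported above into megabytes and works out two ratios. The numbers are the ones from my session; the variable and function names are my own, and the per-row figure is simply total redo divided by row count, not something the script reports.

```python
MB = 1024 * 1024
ROWS = 1161874  # rows inserted and then deleted in the test

# "New Redo Bytes Written" as reported for each operation above.
redo_bytes = {
    "insert": 408985600,
    "delete": 661496320,
    "truncate": 815616,
}


def to_mb(n):
    """Convert a byte count to megabytes (1 MB = 1024 * 1024 bytes)."""
    return n / MB


for op, n in redo_bytes.items():
    print(f"{op:8s} {to_mb(n):8.2f} MB")

# Average redo generated per deleted row, and delete redo relative to truncate.
delete_per_row = redo_bytes["delete"] / ROWS
delete_vs_truncate = redo_bytes["delete"] / redo_bytes["truncate"]
print(f"redo per deleted row: {delete_per_row:.0f} bytes")
print(f"delete generated {delete_vs_truncate:.0f}x the redo of truncate")
```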

Lazy Log Writer - The non Resolution

1. Management asked that the entire database be moved to the NAS disks because they initially seemed faster. I had to let them know that, no, the writes there are not faster.

2. The efforts to cross the great divide and get the storage manager in the other company to actually look at his configuration resulted in, "Since the writes are slow on two different pieces of our machinery, it can't be our fault - it must be Oracle. I'm debugging nothing."

3. The other company informed us, "By the way, we will be installing new hardware in a couple of weeks at the same time that you are making a major application upgrade." How does that sound for a prospect of a smooth transition? This was followed by an email, "Claudia, are you working with the other company on this?" Not only am I not working with them, I never heard of it!

4. Management informed me, "Since there will be a hardware change soon, don't bother to follow up on this problem."

So they will install the hardware, we will deploy the new application version, and there will be storm and drama about the excessive waits for redo log writes. I think that I should change my name to Cassandra.

A lazy log writer

What was going on at work? Not much - as in not what should have been. I like looking at the performance monitor on Enterprise Manager for a quick glance at what is going on. It wasn't a pretty picture.

Clicking on the 'Blocking Sessions' tab I saw that the log writer session was blocking various other sessions.

I went into the alert log and was pointed to a log writer trace file. Inside the trace file I found

*** 2008-09-25 15:28:24.239

Warning: log write time 15460ms, size 6999KB

*** 2008-09-25 15:28:24.836

Warning: log write time 590ms, size 6898KB

*** 2008-09-25 15:28:29.852

Warning: log write time 5020ms, size 6999KB

I looked at metalink and got

" The above warning messages has been introduced in 10.2.0.4 patchset. This warning message will be generated only if the log write time is more than 500 ms and it will be written to the lgwr trace file .

"These messages are very much expected in 10.2.0.4 database in case the log write is more than 500 ms. This is a warning which means that the write process is not as fast as it intented to be . So probably you need to check if the disk is slow or not or for any potential OS causes. "

We just upgraded to 10.2.0.4. Our storage is across the great divide at another company. We are often short of answers other than, "Everything is configured correctly". With quite a bit of work we have gotten a pair of LUNs allocated for redo logs.

As a test, I moved the redo logs from the SAN to an NFS drive - NOT one that should be allocated to redo. Here was the immediate result:

The log writer waits stopped. Compliments from management. A request from management to storage management to move the entire database to this kind of storage. Everyone is happy

almost.

Tonight I looked at the trace file:

*** 2008-09-25 22:53:34.154

Warning: log write time 750ms, size 0KB

*** 2008-09-25 22:53:35.943

Warning: log write time 1770ms, size 28KB

*** 2008-09-25 22:53:39.889

Warning: log write time 940ms, size 0KB

Log writer is taking forever, and it isn't even doing anything!
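Reading these warnings by eye gets old quickly. Below is a minimal Python sketch that pulls the write time and size out of the warning lines and prints an effective rate for each write. The regular expression assumes the exact wording shown in my trace file, and `parse_log_writes` is a name of my own.

```python
import re

# Matches the warning lines exactly as they appear in the trace excerpt above.
WARNING = re.compile(r"Warning: log write time (\d+)ms, size (\d+)KB")


def parse_log_writes(text):
    """Return a list of (milliseconds, kilobytes) for each warning found."""
    return [(int(ms), int(kb)) for ms, kb in WARNING.findall(text)]


trace = """\
*** 2008-09-25 22:53:34.154
Warning: log write time 750ms, size 0KB
*** 2008-09-25 22:53:35.943
Warning: log write time 1770ms, size 28KB
*** 2008-09-25 22:53:39.889
Warning: log write time 940ms, size 0KB
"""

writes = parse_log_writes(trace)
for ms, kb in writes:
    rate = kb / (ms / 1000.0)  # effective KB per second for this write
    print(f"{ms:6d} ms {kb:6d} KB {rate:8.1f} KB/s")
```

Seen this way, the evening writes are slow regardless of size, which points at latency rather than volume.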

To be continued..... and detective suggestions welcome!

OOW - Day 2 - Simple things

I then went to a hands-on session on Oracle Advanced Compression in 11g. The class really was just working through a couple of OBE examples with the comfort of people who actually know a LOT about Oracle Compression there to help with the silly little problems that people have working through examples. I wish I had had some sort of deep questions to ask about compression. All I learned was, it works. There are some time savings and some space savings. It will be useful when we move up to 11g.

When I say that the Advanced Compression was 'Hands On', I mean it literally. Braille would have been more accurate. For some reason, the whole demo was available in a box slightly larger than a YouTube video. I get annoyed with myself when I have difficulties with the parts that I think should be the simple parts. Here was a whole group of Oracle employee experts who couldn't make the VM window expand to the whole screen. There was more compression in the demo than anyone intended!

OOW - Day 1 - Blogger's perks.

I happened to be seated next to Fuad, who apparently does work with Oracle 11g and is ready for the exam. I wish him all the best on the exam.

Fuad's conference badge had a large 'Blogger' written in bright orange on the case. I know that I signed up for one. I asked at the registration desk, and they gave me a 'Blogger' badge too. I lined up for the Keynote Address. Immediately, the ushers pushed me out of the main line and into a smaller line for press & bloggers. I was led to a special area of the auditorium saved for bloggers - a long table set up so that we could record every golden word of Mary Matalin & James Carville. For Pete's sake! I think this 'Blogger' badge is going to have some strange side effects.

Then this evening I was able to get together with all of the other Oracle Bloggers who I have been following for a year. That was really special. What a nice bunch of people!

travelling trace file

We had an error logged in the alert log. It informed me that details were in db_ckpt_1234.log. Fine, but there was no such log in the bdump directory.

The DBA who was here before me wrote a nice script to run every Sunday to clean up old trace files. What he didn't think of is that the trace files seem to be written per session, and that the checkpoint 'session' continues from instance startup.

His script came along and happily moved the trace file to a backup_bdump directory. Oracle couldn't find it and didn't write the details.
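One way the fix might look, sketched in Python: leave background-process trace files alone no matter how old they are, and only move other files that have not been modified for a while. Everything here is an assumption of mine: the `<sid>_<process>_<pid>` naming convention is inferred from db_ckpt_1234.log, the list of process tags is illustrative and incomplete, and the function names are hypothetical.

```python
import os
import time

# Illustrative, incomplete list of background process tags (assumption).
BACKGROUND_TAGS = ("ckpt", "lgwr", "dbw", "pmon", "smon", "arc")


def is_background_trace(name):
    """True if the file name looks like <sid>_<background process>_<pid>.<ext>."""
    parts = name.lower().split("_")
    return len(parts) >= 3 and parts[-2].startswith(BACKGROUND_TAGS)


def movable_trace_files(directory, min_age_days=7, now=None):
    """List .trc/.log files that are old enough and not owned by a background process."""
    now = time.time() if now is None else now
    cutoff = now - min_age_days * 86400
    movable = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        if not os.path.isfile(path) or not name.endswith((".trc", ".log")):
            continue
        if is_background_trace(name):
            continue  # may still be open by a long-lived process
        if os.path.getmtime(path) < cutoff:
            movable.append(path)
    return movable


for example in ("db_ckpt_1234.log", "db_ora_5678.trc"):
    print(example, "background:", is_background_trace(example))
```

An age check alone would not have saved the checkpoint file, since a long-lived process can hold a file open for weeks without writing to it. That is why the name filter comes first.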

One more fix to make.

NoCOUG conference 8/21/08


Summer Conference 2008 Hosted by Chevron August 21, 2008 at Chevron, San Ramon, CA

See directions to the conference location near the bottom of this page.

8:00 - 9:00 Registration and Continental Breakfast - Refreshments Served

9:00 - 9:30 General Session and Welcome - Roger Schrag, NoCOUG President

9:30 - 10:30 Keynote: “Millsap's Grand Unified Theory of "Tuning"” - Cary Millsap, Method-R.com

10:30 - 11:00 Break

11:00 - 12:00 Session 1

- Room 1220: “Case Studies in Performance Problem Diagnosis and Repair” by Cary Millsap, Method-R.com
- Room 1240: “Aces in the Hole: Learning Advanced SQL Techniques from the OTN Forum Pros” by Greg Pike, Piocon
- Room 1130: “Architecting Data Systems for Compliance” by John Weathington, Excellent Management Systems

12:00 - 1:00 Lunch

1:00 - 2:00 Session 2

- Room 1220: “Getting Coherence: Introduction to Data Grids” by Raanan Dagan, Oracle
- Room 1240: “Data Warehousing with Oracle 11g” by George Lumpkin, Oracle Corporation
- Room 1130: “Building a Web-based Application Using Application Express” by Willie Albino, Lockheed Martin

2:00 - 2:30 Break and Refreshments

2:30 - 3:30 Session 3

- Room 1220: “Power at Your Fingertips - Overlooked Gems in Oracle Enterprise Manager” by John Sheaffer, Oracle Corporation
- Room 1240: “Introduction to Java-PL/SQL Developers Take Heart” by Peter Koletzke, Quovera
- Room 1130: “Oracle Fusion Middleware Roadmap & Strategy” by Margaret Lee, Oracle Corporation

3:30 - 4:00 Raffle in the vendor area.

4:00 - 5:00 Session 4

- Room 1220: “A Tour of the AWR Tables” by Dave Abercrombie, Convio
- Room 1240: “What the Oracle Really Meant: The Quest for PL/SQL Testing Using Code Tester” by Arnie Weinstein, Lawrence Livermore National Laboratory
- Room 1130: “Oracle Analytical Functions - the hidden treasure” by Ron Warshawsky, DBA Infopower

5:00 - ??? NoCOUG networking and happy hour at San Ramon Marriott, 2600 Bishop Dr., San Ramon, CA 94583 -- (925) 244-6171

Directions: Leaving the conference, go straight across Bollinger onto Camino Ramon. Turn left onto Bishop Drive. The Marriott is less than half a mile, on the left.

*** Walk through the bar to the outside patio. ***

Mark your calendar for NoCOUG's Fall Conference:

November 13, 2008 at CarrAmerica Conference Center in Pleasanton.

Speaker Abstracts for Summer Conference

Keynote “Millsap's Grand Unified Theory of "Tuning"” - Cary Millsap, Method-R.com

First there were the v$ and x$ views. And tkprof. Then came bstat/estat, Statspack, ASH, ADDM, OEM, and plenty of third-party tools that look kind of like those tools. There are loads of OS tools too that have been around a long time, like top and sar and strace and pstack and gprof. And then there are the methods: OPIM, and YAPP, and Method R to name three. In this presentation, Cary Millsap gives a brief tour of the moving parts and reveals his own perspective about how all these parts should fit together.

While we certainly learn from our own experiences, we can save ourselves plenty of time and frustration if we can learn from the experiences of others. This presentation reviews the real life experiences of professionals like you who faced some nasty performance problems and lived to tell the tale. Come experience their journeys as a spectator so that next time, when you're faced with a similar problem, you may have just the information you need to handle it with greater ease and efficiency.

Grid-based infrastructures are being developed, deployed and used to achieve unlimited application scalability and continuous availability across multiple datacenters. Understanding the additional capabilities of these infrastructures and how they can be improved with the use of Data Grid technology to solve increasingly difficult and complex problems ensures that your organization is getting the maximum utility from Grid Computing.

This session focuses on how Oracle Coherence Data Grid can easily help you achieve all of these goals and more!

Today's DBA is increasingly tasked with providing greater support across the enterprise - in some cases, being stretched so thin that leveraging automated tools is your only survival mechanism. In this session, you will learn about how often overlooked features of Oracle Enterprise Manager will help you improve efficiency, lower your blood pressure, and get you home on time!

Introduced in version 10g, Oracle's Automatic Workload Repository (AWR) provides diagnostic information for performance and scalability studies, automatically recording a rich variety of database performance statistics.

What's the best way to leverage this wealth of data? While you can run Oracle-supplied AWR reports, or use Oracle features such as the Automatic Database Diagnostic Monitor (ADDM), each Oracle database presents its own unique tuning challenges. In this session you'll learn how to work directly with AWR tables, using customized queries to improve insight into your own particular scalability issues.

Topics include:

- Important AWR tables, their contents, how to join them, and their quirks and limitations.

- Sample queries that can be easily adapted to focus on your own unique set of problems.

- Estimating the "Average Active Session" metric.

- Simple statistical techniques to find spikes and other types of anomalous behavior.

- A comparison of techniques used for historical scalability studies with those used for real-time performance crisis resolution.

- Use of DBMS_APPLICATION_INFO and JDBC end-to-end metrics.

- Useful tips on configuring AWR.

Although seasoned professionals understand the benefits of solving business problems with efficient queries or PL/SQL, the volunteer experts and Oracle Ace contributors on OTN's SQL and PL/SQL discussion forums raise the query writing bar to an entirely new level. Oracle professionals at any skill level will find this forum packed with a treasure chest of tips, tricks and techniques. With over 60,000 topics and 325,000 posts, it's the mother lode of SQL and PL/SQL education from recognized experts worldwide.

In this session, the powerful techniques of advanced query authoring are explored by reviewing real-world forum threads and the unique solutions posted by the gurus. The resident experts from the OTN forums solve problems using a combination of analytic functions, hierarchical queries (CONNECT BY), collections (COLLECT), XML functions/operators (SYS_XMLGEN and XMLSEQUENCE), Pipelined Functions, the MODEL clause and more.

Satisfying business intelligence requirements for all users throughout the enterprise requires a fast, reliable and scalable data warehouse to protect and maintain quality business information. Come learn why Oracle is the #1 database for data warehousing, why Oracle Warehouse Builder is the best tool for building data warehouses, how ground-breaking new features in Oracle Database 11g will dramatically speed query performance, and, how you can accelerate data warehousing deployments.

Oracle's current focus on implementing database and development features based on the Java language may have you thinking that you need to learn Java. However, if you are familiar with PL/SQL, your first view of Java may be a bit discouraging because its object-oriented core makes it look very different. Also, you may wonder about Java's strengths and weaknesses and where it fits in the industry.

This presentation explains to PL/SQL developers who have had little or no exposure to Java, the basic concepts of, and terms used in Java. The presentation provides an overview of the language and reviews the concepts of object orientation upon which Java is based. It also discusses the fundamental Java code structures -- classes and methods -- as well as control statements; exception handling; data types; and variables. This explanation will act as a springboard for further study. Level: Beginner

Software developers face constant pressure to produce highly complex PL/SQL code under tight deadlines. Without an efficient and reliable way to perform thorough code testing, software is released with defects that would otherwise be eliminated. Using an automated test tool carries risks that may be mitigated by certain practices. These practices greatly improved our ability to develop high quality and efficient testing software. In this paper, we will share some of these practices.

A gentle introduction to compliance for database system architects, and introductory concepts for the architecture that's optimal for supporting the growing need for your company's compliance.

Application Express is a free web application development environment that comes with the Oracle database (Oracle 9.0.3+). Using a simple web browser, you can create and deploy web-based, database-centric applications very quickly. In addition you can upload and create applications from MS Excel spreadsheets. In this session, an introduction to the Application Express environment will be provided. In addition, an application will be built from tables existing in the database, as well as data uploaded from some Excel spreadsheets.

With the recent acquisition of BEA, a number of best-in-class products have been added to Oracle's robust portfolio of middleware solutions. Customers are interested in knowing what Oracle's strategy and roadmap will be around protecting existing BEA customers' investments and integrating BEA products into existing Fusion Middleware solutions. This session will detail how Oracle will leverage best products from both companies to provide solutions in Java & Transaction Processing, SOA and Business Process Management, and User Interaction and Enterprise 2.0.

Oracle introduced them in 8i, but do we really use them to their full potential? Oracle analytic functions can save a great deal of coding and provide amazing results very fast.

How about doing this - and this is only a beginning:

- Ranking data within subsets of a data set.

- Aggregating data within subsets of a data set.

- Performing aggregations over moving windows.

- Displaying and comparing aggregates to individual entries within a single query.

- Comparing two or more rows within a given data set.

If you have suggestions for future meetings or would like to offer feedback on previous conferences, then please complete our online survey or send us an email.

Directions to Chevron Park in San Ramon:

Address: 6101 Bollinger Canyon Road, San Ramon, CA 94583

Please note that the conference building is just after the Chevron Campus.

Upon arrival, enter the building at the East entrance where you'll find NoCOUG representatives ready to sign you in.

From Highway 680 South:

Exit at Bollinger Canyon Road. Turn left onto Bollinger Canyon Road (heading east over the freeway). After passing Chevron Park Circle West, turn right into Bishop Ranch 1. Address 6101 is the first building after entering the parking lot. Park anywhere not restricted.

From Highway 680 North:

Exit at Bollinger Canyon Road. Turn right onto Bollinger Canyon Road (heading east). After passing Chevron Park Circle West, turn right into Bishop Ranch 1. Address 6101 is the first building after entering the parking lot. Park anywhere not restricted.


Copyright © 2008 NoCOUG. All rights reserved.

Make it go away! (rman delete)

I am doing perfectly lovely incremental compressed backupsets of my database. Then one day, playing with EM, I experimented with the 'Oracle recommended backup strategy'. I may have missed something in my setup, but I ended up with an unneeded image copy backup of my database. Help! This thing is devouring my flashback area. So what is the proper way to remove it?

First step, of course, is to kill the Oracle job, so it doesn't do that again!

Then in rman:

RMAN> list copy;

gives me a nice long list - one line of which was:

List of Datafile Copies

Key File S Completion Time Ckp SCN Ckp Time Name

30428 4 A 29-JUL-08 224478 29-JUL-08 /bkup/flashbk/datafile/o1_mf_users_44y8x123_.dbf

I double check on the file system:

$> ls o1_mf_users_44y8x123_.dbf

o1_mf_users_44y8x123_.dbf

Yes, the copy is there.

To delete it in RMAN I enter

RMAN> delete datafilecopy 30428;

and get:

List of Datafile Copies

Key File S Completion Time Ckp SCN Ckp Time Name

30428 4 A 29-JUL-08 224478 29-JUL-08 /bkup/flashbk/datafile/o1_mf_users_44y8x123_.dbf

Do you really want to delete the above objects (enter YES or NO)? y

deleted datafile copy

datafile copy filename=/bkup/flashbk//datafile/o1_mf_users_44y8x123_.dbf recid=30428 stamp=1334123

Deleted 1 objects

I check in the file system and this time my ls returns

ls: 0653-341 The file o1_mf_users_44y8x123_.dbf does not exist.

Goodbye, nuisance.

Something is VERY busy

This is a Solaris box. I read that there is a 2G file limit on Solaris. (Maybe that means a limit on log files. Obviously, my data files are much larger than that.)

I issued the lsnrctl command:

LSNRCTL> set log_file listener2.log

Quickly it resumed recording connections, now into listener2.log. It recorded 2 of them EVERY second!!! OK, my suspicion was right. Now to track down exactly WHAT is connecting and disconnecting so much.

I'm beginning to distrust Oracle

Now I am learning that ASMM has been allocating our shared pool too large, and has been adjusting sizes not once an hour, but once a minute.

I am not happy with the statistics that the 'wonderful' automatic GATHER_STATS_JOB has been collecting. Also the job has been causing contention with some of our production jobs.

The one that seems really strange to me is Log_buffer. I allocated 28M to log_buffer. According to Oracle, LGWR should be writing, at a maximum, when log_buffer is 1M full. Instead, I see that it is writing 2M at a time. I am going to have to figure that one out.

I expect that when Oracle offers to automate these processes, since this is their own product, the calculations will be well done. I expected, but I was wrong.

Q.E.D.

DBA, "You are the only user on the DB, but you have a dozen sessions open. Can you close some sessions?"

User, "I can't see my sessions through the application. Bounce the database"

DBA, " I can see the sessions just fine. I'll kill your excess sessions."

User, "No, don't kill my sessions. Bounce the database. I'm bouncing the database."

We don't need no stinking controls around here.

Everyone can do everything.

He bounced the database.

User, "I bounced the database. My [one] session is running just fine."

Q.E.D. - bouncing the database improves performance.

==========================================================

An update to the post above:

The same user has informed me that I should always shut down the database with "Shutdown abort". "It works much better."

I have always assumed that everyone else knows more than I do.

Maybe that isn't true. Maybe I do understand more than some people...

Lessons Learned after a Hell Weekend

- A necessary part of any database project plan is a fallback plan: what is planned if there is a failure at any particular step?

- Backup - this includes enough space allocated for additional backups as needed.

- Part of any upgrade script should be rollback scripts.

I should have known that I was in trouble on Friday afternoon, when I was given a timeline which was called 'optimistic' with no what-ifs foreseen.

Northern California Oracle Users' Group Conference


SPRING CONFERENCE 2008 May 15, 2008 at the Crowne Plaza Hotel in Foster City, CA

See directions to the conference location near the bottom of this page.

8:00 - 9:00 Registration and Continental Breakfast - Refreshments Served

9:00 - 9:30 General Session and Welcome - Roger Schrag, NoCOUG President

9:30 - 10:30 Keynote: “How Oracle Came To Rule the Database World” - Rich Niemiec, TUSC

10:30 - 11:00 Break

11:00 - 12:00 Session 1

- Columbus Room: “The Best Oracle Database 11g New Features” by Rich Niemiec, TUSC
- Drake I: “Building the Technology Stack for Modern Applications” by Caleb Small, Caleb.com, Camosun College
- Drake II: “Natural Data Clustering: Why Nested Loops Win So Often” by Dan Tow, SingingSql.com

12:00 - 1:00 Lunch in Marco Polo Room

1:00 - 2:00 Session 2

- Columbus Room: “Oracle Archiving Best Practices” by Dave Moore, NEON Enterprise Software
- Drake I: “Poor Man's Auditing with Oracle LogMiner” by Caleb Small, Caleb.com, Camosun College
- Drake II: “Getting SQL Right the First Try” by Dan Tow, SingingSql.com

2:00 - 2:30 Break and Refreshments

2:30 - 3:30 Session 3

- Columbus Room: “Oracle Database Security in a Nutshell” by Daniel Liu, Oracle Corporation
- Drake I: “Web 2.0 Ajax-Based User Interfaces Development Made Simple” by Shay Shmeltzer, Oracle Corporation
- Drake II: “RAC 11g, Virtualization and More...” by Vijay Ragunathan, Guidewire

3:30 - 4:00 Raffle

4:00 - 5:00 Session 4

- Columbus Room: “How Independent Software Companies are Leveraging Oracle Embedded Products in Their Software Applications” by Gabe Stanek, Oracle Corporation
- Drake I: “Hacking and Defending Databases” by Todd DeSantis, Sentrigo
- Drake II: “The Evolving Web UI” by Avrom Roy-Faderman, Quovera

5:00 - ??? NoCOUG Networking and No Host Happy Hour in the conference hotel at the Club House Bistro

Mark your calendar for NoCOUG's Summer Conference:

August 21, 2008 at Chevron in San Ramon.

Speaker Abstracts for Spring Conference

Keynote “How Oracle Came To Rule the Database World” - Rich Niemiec, TUSC

This is a break from the technical presentations, but not too much of a break. Sit back and listen to the history of the relational database go by. Find out the crucial moves that Oracle made at critical junctures of its history. See what drove the product from inception, over the rocky road, and eventually to the top of the mountain. Learn what made Oracle the product a success, but also find out the attributes that made Oracle the company a font of technological wizardry. This talk will reveal several seldom heard facts and some unknown secrets of Oracle's success.

I. The Paper that started it all - E. F. Codd

II. System-R & Ingres

III. Oracle is Founded as SDL

IV. Version 1 to Version 10g

V. Why did Oracle win?

VI. Future market direction

VII. Summary

“The Best Oracle Database 11g New Features” - Rich Niemiec, TUSC

This presentation will look at which 11g new features should be investigated for use. Most of the features covered will be related to the DBA, but there will also be a few outside that realm. There will be simple examples to show the basic functionality of the following new features:

Memory Target

Partition Advisor

Security Enhancements

DDL Lock Timeout

The Invisible Index

Automatic Diagnostics Repository

SQL Plan Management

Real Application Testing (Workload Capture and Replay)

SQL Repair Advisor

ADDM Enhancements

Interval Partitioning

Optimizer Enhancements

“Oracle Archiving Best Practices” - Dave Moore, NEON Enterprise Software

Data volumes are growing ... Space is at a premium ... Backups take forever ... Query response is dragging ... Unused data can be moved out of the active database ...

The subject of data archiving is never addressed until the data retention problem is serious. Requirements for archiving range from legal obligations for data retention (Sarbanes-Oxley, HIPAA, and so on), to operational performance, to internal business requirements. What data should you archive? How often? How should you do it? And what needs to be done inside of Oracle to regain operational efficiency? Whatever needs are driving your business archive initiatives, join Dave Moore to determine archiving best practices. This informative session will describe how you can meet your Oracle database archiving requirements while simultaneously optimizing database performance.

“Oracle Database Security in a Nutshell” - Daniel Liu, Oracle Corporation

In this seminar, the students will learn how they can use Oracle database features to meet the security and compliance requirements of their organization. The current regulatory environment of the Sarbanes-Oxley Act, HIPAA, the UK Data Protection Act, and others requires better security at the database level. The session provides suggested architectures for common problems. It covers the following security features of the database: auditing, column and file encryption, virtual private database, label security, enterprise user security, and more.

“How Independent Software Companies are Leveraging Oracle Embedded Products in Their Software Applications” - Gabe Stanek, Oracle Corporation

The market for embedded database software continues to experience significant growth, based on a highly diverse range of use cases, including consumer and mobile devices, desktop and enterprise software, large-scale networking and storage equipment and appliances. Oracle offers the industry's broadest portfolio of world-class embeddable database products, which includes:

- Oracle TimesTen - for real-time, in-memory relational data management and caching

- Oracle Berkeley DB - for high performance, non-relational data management

- Oracle Database Lite - for online/offline mobile data management

Requiring virtually no human administration, these products are ideal for developers in industries such as telecommunications and high technology, which have demanding requirements for intelligent edge devices and services.

"The increase in customer adoption for Oracle's embedded databases is a testament to our commitment to embedded developers building the next generation of applications that need to run in always-on, zero administration environments," said Rex Wang, vice president Embedded Systems Marketing, Oracle. "Our goal is to offer developers a broad range of choices, so they can pick the right solution for their specific application. We continue to innovate and integrate our products, so that our customers can avoid the time, cost and risk of building it themselves."

Organizations spanning multiple industries are lauding the one-stop support, lower TCO, and comprehensive, unattended operation as key benefits of Oracle's Embedded product lines. One of those is to be determined (would like to involve one of our customers from the local area, but I need to confirm).

“Building the Technology Stack for Modern Applications” - Caleb Small, Caleb.com, Camosun College

An overview of building clustered Oracle Application Servers, clustered RAC Database Servers, clustered NetApp Storage Arrays, and clustered Load Balancers to deploy Java or Forms & Reports applications in a high availability environment.

“Poor Man's Auditing with Oracle LogMiner” - Caleb Small, Caleb.com, Camosun College

The need for database auditing is a topic that most DBAs have heard loud and clear. Oracle offers many auditing solutions, and the specific requirements of each individual application must be assessed before choosing. One of the simplest, yet most powerful solutions is the time-tested LogMiner. Oracle LogMiner allows reconstruction of past SQL statements from the online and archived redo logs, provided that some simple configuration requirements are met. While there is some additional overhead imposed on the database, for the most part all the necessary mechanisms are already in place and no additional audit trails or log files need to be maintained. This presentation steps through the decision criteria for selecting this option, and the database implementation steps based on an actual production system.

“Web 2.0 Ajax-Based User Interfaces Development Made Simple” - Shay Shmeltzer, Oracle Corporation

The Web 2.0 generation of applications has brought a new user experience to Web-based applications. Ajax-based UIs are much more dynamic and offer better usability. This session explains how the new Oracle Application Development Framework (Oracle ADF) Faces rich-client components drastically simplify the development of such advanced UIs. Learn about the new components, how they work, and how they can influence the design of your user interface.

“Hacking and Defending Databases” - Todd DeSantis, Sentrigo

Presenting a new angle on a popular attack vector on databases: SQL Injection. We will describe types and techniques of SQL Injection attacks on both Oracle-based web applications and built-in database stored program units, and show how simple SQL Injection can be used to own the database server by means of privilege escalation.

We will also list ways of preventing SQL Injection attacks, ranging from secure coding practices to various external tools that will alert on and prevent SQL Injection attempts, and demonstrate how hacker techniques of evasion can be used to subvert them. Finally, we will introduce new deep inspection tools for Oracle 9i/10g that can prevent SQL injection, even in zero-day scenarios.

Take away points:

- How SQL Injection attacks work

- Secure coding practices

- Existing tools for SQL Injection prevention and techniques to evade them

- New resilient technologies that prevent SQL injection entirely, even attacks exploiting zero-day vulnerabilities

“Natural Data Clustering: Why Nested Loops Win So Often” - Dan Tow, SingingSql.com

I'll present a mix of empirical data and theoretical explanation to demonstrate why optimizers tend to favor hash joins, while experienced SQL tuners so often find they can do better by forcing nested-loops joins. The key is co-clustering - the tendency of well-clustered rows in one table to join to well-clustered rows in another table, a tendency that favors nested-loops joins.

“Getting SQL Right the First Try” - Dan Tow, SingingSql.com

Most SQL tuning material addresses the question of how to fix performance of slow SQL. However, there are little-known rules that enable developers to avoid most tuning problems proactively, while also avoiding common functional mistakes and writing SQL that is far easier to understand and to maintain when it does need later work. This presentation proposes rules and processes to write correct and fast SQL from the beginning.

“RAC 11g, Virtualization and More...” - Vijay Ragunathan, Guidewire

Virtualization software like VMware and Xen is changing the commodity hardware world. One of the benefits of this virtualization software is the ability to run Oracle RAC with more than one node at limited cost for development and testing environments. We will talk about how to set up RAC 11g with this software. In addition, we will talk about some 11g features related to RAC, ASM, AWR/ADDM, and cache fusion tracing.

“The Evolving Web UI” - Avrom Roy-Faderman, Quovera

Applications on the web have come full circle: from highly interactive applets, through request/response-cycle based JSP applications, through richer applications built on asynchronous server calls, back to highly interactive Web 2.0 applications. This talk covers the current state of the art in web applications, with a focus on developing these applications in Oracle JDeveloper 11g.

If you have suggestions for future meetings or would like to offer feedback on previous conferences, then please complete our online survey or send us an email.

Directions to the Crowne Plaza Hotel in Foster City:

Address: 1221 Chess Drive, Foster City, CA 94404, (888) 444-0401

From Highway 92 East:

- Take exit 14B toward Foster City Blvd (0.2 mi)

- Turn left at Metro Center Blvd (495 ft)

- Turn left at Foster City Blvd (0.2 mi)

- Turn left at Chess Dr (285 ft)

- To: 1221 Chess Drive, Foster City, CA 94404

From Highway 92 West:

- Take exit 14B toward E Hillsdale Blvd/Foster City Blvd (0.3 mi)

- To: 1221 Chess Drive, Foster City, CA 94404

Copyright © 2008 NoCOUG. All rights reserved.