Chris Muir

Solar Adventures & Saving Money - Win win win win!

Recently I tweeted the following pic which raised some interest, showing the kWh units of electricity used in my house compared to others in the local suburb Kensington in Perth Australia:

Our yearly electricity bill is ~AU$430, down from ~AU$754 in 2013.

I was asked what have we done to drop this low? Sold a child? Installed a massive solar system and battery?

To set context I live in a 1950s single story double brick tiled house in Perth Australia, what the British call a free standing bungalow I believe. My wife and my 2 kids under ten live in 3 bedrooms, 2 bathrooms, 1.5 living rooms and a separate office, approx 16m x 8m. The house is equipped with an evaporative airconditioner, natural gas for cooking, heating & hot water, with the exception of the oven which is electric. We have 2 TVs, 2 monitors, 3 laptops, lots of iphone/ipads, coffee machine, dish washer, clothes washer, fridge, microwave. Heating is a portable natural gas burner, we don't have a clothes dryer as Perth sun provides our drying needs.

So what have we done to reduce use compared to our neighbours?

I've taken a baby steps approach to slowly improving various parts of the house.

To help identify phantom power use we have the following live monitor attached to our house meter ... I find this particularly useful for just watching what's happening in the house to see if something has been left on accidentally, or something is misbehaving. It only shows net use after solar is taken out, but that's fine as that when we're paying the grid and this is what I want to avoid.

The main uses of electricity left when not offset by solar are things like the electric oven in the evening (my NE panels aren't good for offsetting this), the fridge which runs over the night, and the various electronics to a certain or lesser degree depending on what the kids are doing.

Overall I think the main trick in achieving what I've done has been not to attempt to go gung-ho as not only can you easily suffer burn out but the family is likely to rebel. Instead I suggest incrementally improving the house, leaving an active note in your calendar every couple of months to remind yourself to revisit what you achieved and what you can do next. Besides the occasional disagreement about leaving the lights or TV on, mostly my family hasn't much noticed any difference (or they're very patient with me ;-). They still get to do what they want to do as far as I can tell.

In terms of motivation, avoiding the ever increasing electricity charges is definitely part of it. The local government raised electricity by ~3% this year again, and are signalling 7% increases the next two. Our house bills has dropped from AU$754.80 in 2013 before solar, to AU$395 in 2014, $445 in 2015 and $430 for 2016.

My other main motivation is this graph:

...we do get a paltry $0.07 kWh feed in tariff so the size of the blue bar is not that exciting. But what is exciting is I just like knowing that we're a net exporter of energy. (of interest you can really see the winter drop mid year when solar isn't as effective here)

Ideally I'd really like to see the yellow bar drop some more, I think I can shave about another 1/4. As the Australian federal government this year and for the next 15 years is now reducing the upfront solar rebate subsidy, I'm actively looking to max out our solar to 5kw. A battery may be in the future, but currently they are still expensive here, and I suspect my solar system isn't designed well for a battery anyway.

Overall though, I'm particularly happy with the outcome to date. It hasn't been a strain on lifestyle, I feel like I'm sticking it to our government who can't get their renewable energy act together, I'm also helping tell the fossil fuel companies where to go, and finally I'm saving money too.

Win win win win.

Our yearly electricity bill is ~AU$430, down from ~AU$754 in 2013.

I was asked what have we done to drop this low? Sold a child? Installed a massive solar system and battery?

To set context I live in a 1950s single story double brick tiled house in Perth Australia, what the British call a free standing bungalow I believe. My wife and my 2 kids under ten live in 3 bedrooms, 2 bathrooms, 1.5 living rooms and a separate office, approx 16m x 8m. The house is equipped with an evaporative airconditioner, natural gas for cooking, heating & hot water, with the exception of the oven which is electric. We have 2 TVs, 2 monitors, 3 laptops, lots of iphone/ipads, coffee machine, dish washer, clothes washer, fridge, microwave. Heating is a portable natural gas burner, we don't have a clothes dryer as Perth sun provides our drying needs.

So what have we done to reduce use compared to our neighbours?

I've taken a baby steps approach to slowly improving various parts of the house.

- We installed a 2.0kw solar about 3 years ago, it has a 2.2kw inverter, 2.0kw of panels, and at best peaks at 1.8kw during summer, about 1.3kw during winter. The 8x250kw panels face North East.

- In line with the solar, for things like clothes washing and the dish washer, we put them on a timer to run around lunchtime each day while we're at work & lunchtime sun means solar covers all the use.

- I do clean the solar panels, about every 3 months with a big squidgy mop. Perth summer air has lots of dust, and bees seem to like dropping pollen in big wads on the panels too which is easy to clean off with instant power boost.

- All of our lights were moved to fluorescents about 8 years ago, and now I've about 1/2 that are LEDs. Personally I'm finding LED lights way better than fluorescents which tended to blow frequently. LEDs have come down in price, and I keep an eye out for when they're on special too.

- We turn off phantom/standby powers use at the wall ... the tv equipment + laptops were big culprits and an easy fix. To make this easier I've provided powerpacks with individual power switches for each point so they are easier to reach rather than the powerpoints hiding behind cabinetry.

- We moved to a central iphone/ipad/usb/etc charger in the main living room rather than numerous powerpacks spread across the house which silently draw electricity and mostly weren't used for most of the day. With the one charger I can easily add and remove devices once they're charged without the family caring as long as they're charged. In turn my personal iphone & ipad are plugged in during the day for recharging, which takes me no effort at all.

- During winter we try to use a slow cooker during the day which takes further advantage of the available sun.

- During summer to control the house temperature I try and make use of relatively cold outside air in the morning to fill the house up, opening windows and doors to get cross breezes, and close it all down when the house equalizes to the outside temperature or a hot easterly starts blowing. With the evap aircon turning the fan and not the water pump helps to get cold air in the house quickly in the morning if need be. As Perth gets hot in summer (we hit 45C/113F last year), we do need to switch the evap aircon on properly during the day and late afternoon, but the goal is to cool the house with free cool air when I can.

To help identify phantom power use we have the following live monitor attached to our house meter ... I find this particularly useful for just watching what's happening in the house to see if something has been left on accidentally, or something is misbehaving. It only shows net use after solar is taken out, but that's fine as that when we're paying the grid and this is what I want to avoid.

The main uses of electricity left when not offset by solar are things like the electric oven in the evening (my NE panels aren't good for offsetting this), the fridge which runs over the night, and the various electronics to a certain or lesser degree depending on what the kids are doing.

Overall I think the main trick in achieving what I've done has been not to attempt to go gung-ho as not only can you easily suffer burn out but the family is likely to rebel. Instead I suggest incrementally improving the house, leaving an active note in your calendar every couple of months to remind yourself to revisit what you achieved and what you can do next. Besides the occasional disagreement about leaving the lights or TV on, mostly my family hasn't much noticed any difference (or they're very patient with me ;-). They still get to do what they want to do as far as I can tell.

In terms of motivation, avoiding the ever increasing electricity charges is definitely part of it. The local government raised electricity by ~3% this year again, and are signalling 7% increases the next two. Our house bills has dropped from AU$754.80 in 2013 before solar, to AU$395 in 2014, $445 in 2015 and $430 for 2016.

My other main motivation is this graph:

...we do get a paltry $0.07 kWh feed in tariff so the size of the blue bar is not that exciting. But what is exciting is I just like knowing that we're a net exporter of energy. (of interest you can really see the winter drop mid year when solar isn't as effective here)

Ideally I'd really like to see the yellow bar drop some more, I think I can shave about another 1/4. As the Australian federal government this year and for the next 15 years is now reducing the upfront solar rebate subsidy, I'm actively looking to max out our solar to 5kw. A battery may be in the future, but currently they are still expensive here, and I suspect my solar system isn't designed well for a battery anyway.

Overall though, I'm particularly happy with the outcome to date. It hasn't been a strain on lifestyle, I feel like I'm sticking it to our government who can't get their renewable energy act together, I'm also helping tell the fossil fuel companies where to go, and finally I'm saving money too.

Win win win win.

This blog has moved!

No really, it has! You can find my new Oracle blog here.

For the record this older blog will stay to allow readers to continue to benefit from the existing posts.

I hope to see you on the new blog!

For the record this older blog will stay to allow readers to continue to benefit from the existing posts.

I hope to see you on the new blog!

If you can't beat 'em join 'em

A New Year has brought a desire for new challenges. As a result early in the year I'll be taking on a new role as a product manager for ADF at Oracle Corporation.

The decision to move was certainly a difficult one. I've had an excellent 10+ years at SAGE Computing Services under the leadership of Oracle ACE Penny Cookson and the SAGE team, all who've been inspiring to work with. In turn I was fortunate enough to have two offers on my table which were both excellent, but both providing different outcomes. Choices choices.

The end decision has me moving to Oracle Corporation in early February, still based in Perth Australia for now.

One ramification of the move to Oracle is I give up my Oracle ACE Director status. This is a sad moment in many ways because like SAGE I owe the ACE Director program a lot. I feel that the program has allowed me to grow and extend my skills and experiences significantly. The chance to mix with other ACEs and Oracle staff, living up to their experiences & expectations, the chance to attend and present at conferences and share my enthusiasm with delegates has been incredibly rewarding. As a result my thanks go out to both the OTN team for running the program and providing the opportunity, and also to all the ACE and ACE Directors, Oracle staff, user groups reps and Oracle enthusiasts out there I've had pleasure of meeting and befriending over the last 5 years. Seriously your friendships, advice and generosity has meant a lot to me.

With that little bit of news out of the way I'd like to wish everyone a happy New Year and I hope to see you at a conference somewhere soon.

(Post edit: as some people have kindly taken the time to point out, yes it is in fact true, the real reason for the move is I just couldn't bear to be apart from Richard Foote ;-)

The decision to move was certainly a difficult one. I've had an excellent 10+ years at SAGE Computing Services under the leadership of Oracle ACE Penny Cookson and the SAGE team, all who've been inspiring to work with. In turn I was fortunate enough to have two offers on my table which were both excellent, but both providing different outcomes. Choices choices.

The end decision has me moving to Oracle Corporation in early February, still based in Perth Australia for now.

One ramification of the move to Oracle is I give up my Oracle ACE Director status. This is a sad moment in many ways because like SAGE I owe the ACE Director program a lot. I feel that the program has allowed me to grow and extend my skills and experiences significantly. The chance to mix with other ACEs and Oracle staff, living up to their experiences & expectations, the chance to attend and present at conferences and share my enthusiasm with delegates has been incredibly rewarding. As a result my thanks go out to both the OTN team for running the program and providing the opportunity, and also to all the ACE and ACE Directors, Oracle staff, user groups reps and Oracle enthusiasts out there I've had pleasure of meeting and befriending over the last 5 years. Seriously your friendships, advice and generosity has meant a lot to me.

With that little bit of news out of the way I'd like to wish everyone a happy New Year and I hope to see you at a conference somewhere soon.

(Post edit: as some people have kindly taken the time to point out, yes it is in fact true, the real reason for the move is I just couldn't bear to be apart from Richard Foote ;-)

Apache Ivy and JDeveloper integration

As software applications grow, a common technique to reduce the complexity is to break the overall solution into separately built and deployed modules. This allows each component to be worked on independently without being overwhelmed with detail, though the cost of reassembling and building the application is the trade off for the added flexibility. When modules become reusable across applications the reassembly and build problem is exasperated and it becomes essential to track which version of each module is required against each application. Such problems can be reduced by the introduction of dependency management tools.

In the Java world there are a few well known tools for dependency management including Apache Ivy and Apache Maven. Strictly speaking Ivy is just a dependency management tool which integrates with Apache Ant, while Maven is a set of tools of where dependency management is but just one of its specialities.

In the ADF world thanks to the inclusion of ADF Libraries (aka. modules) that can be shared across applications, dependency management is also a relevant problem. Recently I went through the exercise of including Apache Ivy into our JDeveloper 11g and Hudson mix for an existing set of applications. This blog post attempts to describe the configuration of Apache Ivy in context of our JDeveloper setup in order to assist others setting up a similar installation. The blog post will introduce a simplistic application (downloadable from here) with 1 dependency to introduce the Ivy features, in very much an A-B-C style to assist the reader's learning.

Readers should be careful to note this post doesn't attempt to explain all the in's and out's of using Apache Ivy, just a successful configuration on our part. Readers are encouraged to seek out further resources to assist their learning of Apache Ivy.

Assumptions

This blog post assumes you understand the following concepts:

• ADF Libraries

• Resource palette

• Apache Ant

• ojdeploy

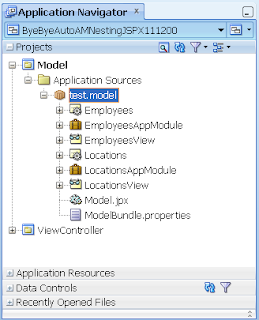

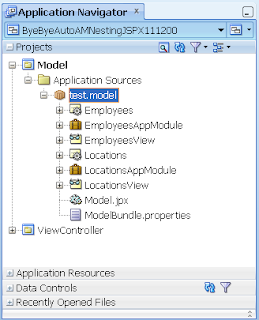

In the beginning there was... ah... ApplicationA

To start out with our overall application contains one JDeveloper application workspace known as ApplicationA, installed under C:/JDeveloper/mywork as follows:

ApplicationA initially has no dependencies and can be built and run standalone.

ApplicationA initially has no dependencies and can be built and run standalone.

Within the application we create a separate project entitled "Build" with an Ant build scripts entitled "pre-ivy.build.xml" to build our application using ojdeploy as follows:

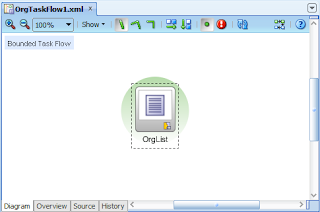

And then ApplicationA begat ADFLibrary1

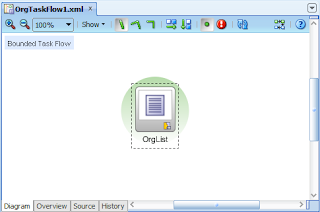

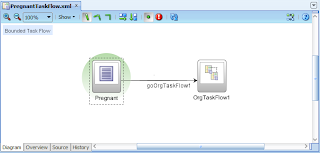

Now we'll create a new task flow in a separate application workspace known as ADFLibrary1 which ApplicationA is dependent on:

We add an ADF Library JAR deployment profile to ADFLibrary1's ViewController project to generate ADFLibrary1.jar to:

We add an ADF Library JAR deployment profile to ADFLibrary1's ViewController project to generate ADFLibrary1.jar to:

C:\JDeveloper\mywork\ADFLibrary1\ViewController\deploy\adflibADFLibrary1.jar

Similar to ApplicationA we add a Build project to our application workspace and a pre-ivy.build.xml Ant build script using ojdeploy:

Experienced readers will know to setup a Resource Palette "File Connection" to map to C:\JDeveloper\mywork\lib then simply add the JARs from the palette.

Experienced readers will know to setup a Resource Palette "File Connection" to map to C:\JDeveloper\mywork\lib then simply add the JARs from the palette.

Adding Apache Ivy

At this point we have a rudimentary form of dependency management setup, where a logical version 1 of ApplicationA has attached a logical version 1 of ADFLibrary1 through the use of the ADF Library JAR being attached to ApplicationA's ViewController project. Note the word "rudimentary". Currently there is no way to track versions. If we have separate versions of ApplicationA dependent on separate versions of ADFLibrary1, developers have to be very careful to check out and build the correct versions, and there's nothing inherently obvious in the generated JAR file names to gives us an idea of what versions are being used.

Let's introduce Apache Ivy into the mix with this simplistic dependency model as a basis for learning, to see how Ivy solves the versioning dependency issue.

Adding ivy.xml to each module

Ivy requires that each module have an ivy.xml. The ivy.xml file among other things describes for each module:

a) The module name

b) The version of the module

c) Determines what artefacts the module publishes

d) Track the module's dependencies including the version of the dependencies

For our existing ADFLibrary1 we'll add an ivy.xml file to our Build project containing the following details:

a) The module name in the <info> tag

b) The revision/version number in the <info> tag

c) The publication of an ADF Library jar in the <publications> tag

d) And that this module is not dependent on any other modules through the commented out <dependencies> tag

(You might also note the <configurations> tag. Configurations define the type of artefacts we can possible generate for the module. In this case we're creating an ADF Library "JAR", but alternatively for example we could produce a WAR or EAR file or some other sort of artefact. For purposes of this blog post we'll keep this relatively simple and just stick to JARs and EARs).

For our existing ApplicationA its ivy.xml file under the Build project will look as follows:

a) The module name ApplicationA in the <info> tag

b) The revision/version number 1 in the <info> tag

c) The publication of an EAR in the <publications> tag

d) And of most importance, a dependency of ADFLibrary1, specifically release/version 1.

It's this last point that is most important as not only does it track the dependencies between modules (which truthfully JDev was already doing for us) but the ivy.xml file also tracks the version dependency, namely ApplicationA release/version 1 is dependent on version/release 1 of ADFLibrary1.

Apache Ivy Repository

In the previously described application configuration we were assuming the build of ApplicationA and ADFLibrary1 was all on the same developer machine. It's relatively simply for 1 developer to copy the JARs to the correct location to satisfy the dependencies. Yet in a typical development environment there will be multiple developers working on different modules across different developer machines. Moving JARs between developer PCs becomes problematic. We really need some sort of developer repository to share the modules archives.

At this point we introduce an Apache Ivy repository into our solution. Simplistically the Ivy repository is a location where developers can publish JARs to, and other developers when building an application with a dependency, can download the dependencies from.

Ivy supports different types of repositories which are documented under Resolvers in the Ivy documentation. For purposes of this blog post we'll use the simplest repository type of "FileSystem".

In order to make use of the FileSystem Ivy repository all developers must have access to a file (typically called) ivysettings.xml. This file defines for Ivy where the repository resides among other settings. How you distribute this file to developers is up to you, maybe it's located on a shared network location, maybe a copy checked out to a common local location. For purposes of this blog post we'll assume it resides on every developer's machine under C:\JDeveloper\ivy:

The following reveals the contents of a possible ivysettings.xml file:

The following reveals the contents of a possible ivysettings.xml file:

1) Note the ivy.repo.dir property. Typically this would point to your own //SomeServer/YourRepositoryLocation which all developers can access on your local network. For the purposes of this blog post, in order to give readers a single zip file that they can download and use, I've changed this setting to instead locate the repository at C:\JDeveloper\ivy\repositories\development. This certainly *isn't* a good location for a shared repository, but one that is workable for demonstration purposes.

2) The <resolvers> <chain> defines the list of repositories for Ivy to publish to or download dependencies from. In this case we've only configured one repository, but there's nothing stopping you having a series of repositories.

3) The <ivy> subtag of the <filesystem> tag defines how Ivy will store and search for it's own metadata files in the repository, of which it stores information such as the module name, versions and more that is essentially copied from your ivy.xml files.

4) The <artifact> tag defines how Ivy will store and search for the actual artefacts you generate (such as the ADF Library JARs) in the repository.

With regards the last 2 points it's best to leave the patterns as the default, as in the end the repository can be treated as a black box. You don't really care how it works, just as long as Ivy allows you to publish and retrieve files from the repository.

Configuring Ant to *understand* Ivy

With the ivy.xml and ivysettings.xml files in place, we now need to configure our Ant build scripts to interpret the settings and work with our repository during builds.

First we download the Apache Ivy and install into a location each developer's machine can access. This blog posts assumes Ivy v2.2.0 and that the associated ivy-2.2.0.jar has been unzipped to C:\JDeveloper\ivy\apache-ivy-2.2.0:

Next we modify our existing build scripts for each module. In the build.xml file for *both* ADFLibrary1 and ApplicationA we insert the following code:

Next we modify our existing build scripts for each module. In the build.xml file for *both* ADFLibrary1 and ApplicationA we insert the following code:

(Note in the downloadable application this code resides in build.xml, not pre-ivy.build.xml which was documented earlier in this blog post).

1) Setting the property ivy.default.ivy.user.dir changes the default location under which Ivy stores local copies of the data downloaded from the repository.

2) Setting the property ivy.default.ivy.lib.dir defines the location where the JAR files should be ultimately delivered for dependent modules to make use of.

3) The <ivy:configure> tag tells Ivy where the ivysettings.xml file is located which includes the configuration information about the repositories.

4) The <ivy:info> tag tells Ivy where the current modules ivy.xml file is located.

Configuring Ant to *use* Ivy

With the previous Ivy setup we're now ready to start building using Ivy via Ant.

Let's consider our goals. What we want to first do is build and then publish ADFLibrary1 to the Ivy repository. Then subsequently for ApplicationA we want to download ADFLibrary1 from the Ivy repository, then build ApplicationA.

To achieve the first goal, we already have a Build Ant target in the ADFLibrary1 build.xml. So we just need to add another target "Publish" which will take the artefacts generated from the Build target as follows:

1) The <ivy:publish> tag that says which resolver (ie. which repository) to publish too, what to do if the exact file and revision already exists in the repository, and what revision/version to publish the file as. With regards the ${ivy.revision} this variable is derived from the ADFLibrary1's ivy.xml file.

2) The <artifacts> tag which tells the publish command where to find the artifact to publish.

3) Because of the <artifacts> tag there's a dependency that the module has already been built. This could be easily catered for in the overall build script by making an <antcall> to the Build target at the start of the <ivy:publish> tag, but for purposes of simplicity this change hasn't been made for this blog post.

At this point let's see what outputs we see if we run the Build and Publish scripts. First when we run the Build target the JDeveloper log window reports:

Next when we run the Publish task the following output is produced:

The different files are beyond the discussion here, but to say this is the structure Ivy has put into place.

The different files are beyond the discussion here, but to say this is the structure Ivy has put into place.

At this point we've achieved our first goal of build and publishing the ADFLibrary1 to the Ivy repository. Let's more over to our second goal for ApplicationA where we want to download ADFLibrary1 from the Ivy repository, then build ApplicationA.

In order to do this we'll add a new target to the ApplicationA build.xml "Download_dependencies" as follows:

1) The <ivy:cleancache> tag clears the ${ivy.default.ivy.user.dir}\Cache of previously downloaded dependencies. This is only really necessary if when you're uploading dependencies you're not creating new versions, but rather overwriting an existing release. In this later case Ivy will in preference use the cached copy of the JAR rather than retrieving the updated JAR in the repository. Flushing the cache solves this issue as the JARs need to be downloaded each time.

2) The <ivy:resolve> tag which loads the dependency metadata for the current module from the associated ivy.xml file, determines which artefacts to obtain from the repository and downloads them to the ${ivy.default.ivy.user.dir}\Cache directory on the local PC.

3) The <ivy:retrieve> tag then searches the Cache directory for the required JAR files and places them in the location where the application expects to find them, namely C:\JDeveloper\lib

If we run this task we see in the logs:

If you now Build ApplicationA you will see it compiles correctly. To check it doesn't build when the ADFLibrary1.jar is not sitting in the C:\JDeveloper\lib directory, delete the JAR and rebuild ApplicationA.

Making and building with new revisions

Overtime your modules will include new revisions. You will of course be checking these changes in and out of your version control system such as Subversion. How do you cater for the new versions with regards to Ivy?

Imagine the scenario where ApplicationA release 3 is now dependent on ADFLibrary1 revision 6. This requires two simple changes.

Firstly in the ADFLibrary1 ivy.xml, replace the revision number under the <info> tag to 6, build and publish.

Second in ApplicationA's ivy.xml, update it's revision number to 3, then in the <dependencies> tag update the ADFLibrary1 dependency's revision number to 6. Forthright when you download the dependencies for ApplicationA revision 3, it will download revision 6 from the repository.

Conclusion

At this point we have all the moving parts to make use of Ivy with JDeveloper and ADF to implement a dependency management solution. While the example is contrived, it shows the use of:

1) The ivy.xml file to define each module, what it publishes and what it depends on

2) The ivysettings.xml file to define the location of the shared repository

3) The Ivy Ant tasks for publishing modules to the repository, as well as downloading modules from the repository

If I have time I will write a different blog post to show how transitive dependencies work in Ivy. The nice thing about Ivy is it handles these automagically so there's not much to configure, just explain a working example.

Beyond this there really isn't that much else to explain, working out the nuances of Ivy takes around a week, retrofitting it into your environment takes longer, but beyond that Ivy is pretty simple in that it does one thing and it does one thing well.

Finally note I'm not advocating Apache Ivy over Apache Maven with this post, ultimately this post simply documents how to use Ivy with JDeveloper, and readers need to make their own choice which tool if any to use. Future versions of JDeveloper (See the Maven integration section in the following blog post) are scheduled to have improved Maven integration so readers should take care not to discount Maven as an option.

Errata

This was tested against JDev 11.1.2.1.0 and 11.1.1.4.0, but in essence should run against any JDev version with Ant support.

In the Java world there are a few well known tools for dependency management including Apache Ivy and Apache Maven. Strictly speaking Ivy is just a dependency management tool which integrates with Apache Ant, while Maven is a set of tools of where dependency management is but just one of its specialities.

In the ADF world thanks to the inclusion of ADF Libraries (aka. modules) that can be shared across applications, dependency management is also a relevant problem. Recently I went through the exercise of including Apache Ivy into our JDeveloper 11g and Hudson mix for an existing set of applications. This blog post attempts to describe the configuration of Apache Ivy in context of our JDeveloper setup in order to assist others setting up a similar installation. The blog post will introduce a simplistic application (downloadable from here) with 1 dependency to introduce the Ivy features, in very much an A-B-C style to assist the reader's learning.

Readers should be careful to note this post doesn't attempt to explain all the in's and out's of using Apache Ivy, just a successful configuration on our part. Readers are encouraged to seek out further resources to assist their learning of Apache Ivy.

Assumptions

This blog post assumes you understand the following concepts:

• ADF Libraries

• Resource palette

• Apache Ant

• ojdeploy

In the beginning there was... ah... ApplicationA

To start out with our overall application contains one JDeveloper application workspace known as ApplicationA, installed under C:/JDeveloper/mywork as follows:

ApplicationA initially has no dependencies and can be built and run standalone.

ApplicationA initially has no dependencies and can be built and run standalone.Within the application we create a separate project entitled "Build" with an Ant build scripts entitled "pre-ivy.build.xml" to build our application using ojdeploy as follows:

<?xml version="1.0" encoding="UTF-8" ?>(Note the jdev.ojdeploy.path & jdev.ant.library properties that map to your JDeveloper installation. You will need to change these to suit your environment. This will need to be done for both ApplicationA and the following ADFLibrary1)

<project xmlns="antlib:org.apache.tools.ant" name="Build" basedir=".">

<property name="jdev.ojdeploy.path" value="C:\java\jdeveloper\JDev11gBuild6081\jdeveloper\jdev\bin\ojdeploy.exe"/>

<property name="jdev.ant.library" value="C:\java\jdeveloper\JDev11gBuild6081\jdeveloper\jdev\lib\ant-jdeveloper.jar"/>

<target name="Build">

<taskdef name="ojdeploy" classname="oracle.jdeveloper.deploy.ant.OJDeployAntTask" uri="oraclelib:OJDeployAntTask"

classpath="${jdev.ant.library}"/>

<ora:ojdeploy xmlns:ora="oraclelib:OJDeployAntTask" executable="${jdev.ojdeploy.path}"

ora:buildscript="C:\Temp\build.log" ora:statuslog="C:\Temp\status.log">

<ora:deploy>

<ora:parameter name="workspace" value="C:\JDeveloper\mywork\ApplicationA\ApplicationA.jws"/>

<ora:parameter name="profile" value="ApplicationA"/>

<ora:parameter name="outputfile" value="C:\JDeveloper\mywork\ApplicationA\deploy\ApplicationA"/>

</ora:deploy>

</ora:ojdeploy>

</target>

</project>

And then ApplicationA begat ADFLibrary1

Now we'll create a new task flow in a separate application workspace known as ADFLibrary1 which ApplicationA is dependent on:

We add an ADF Library JAR deployment profile to ADFLibrary1's ViewController project to generate ADFLibrary1.jar to:

We add an ADF Library JAR deployment profile to ADFLibrary1's ViewController project to generate ADFLibrary1.jar to:C:\JDeveloper\mywork\ADFLibrary1\ViewController\deploy\adflibADFLibrary1.jar

Similar to ApplicationA we add a Build project to our application workspace and a pre-ivy.build.xml Ant build script using ojdeploy:

<?xml version="1.0" encoding="UTF-8" ?>From here we want to attach ADFLibrary1.jar to ApplicationA's ViewController project. Overtime we might have many JARs we want to attach, so rather than mapping to several different deploy directories under each ADF Library application workspace, we'll assume the libraries are instead available under a central "lib" directory as follows:

<project xmlns="antlib:org.apache.tools.ant" name="Build" basedir=".">

<property name="jdev.ojdeploy.path" value="C:\java\jdeveloper\JDev11gBuild6081\jdeveloper\jdev\bin\ojdeploy.exe"/>

<property name="jdev.ant.library" value="C:\java\jdeveloper\JDev11gBuild6081\jdeveloper\jdev\lib\ant-jdeveloper.jar"/>

<target name="Build">

<taskdef name="ojdeploy" classname="oracle.jdeveloper.deploy.ant.OJDeployAntTask" uri="oraclelib:OJDeployAntTask"

classpath="${jdev.ant.library}"/>

<ora:ojdeploy xmlns:ora="oraclelib:OJDeployAntTask" executable="${jdev.ojdeploy.path}"

ora:buildscript="C:\Temp\build.log" ora:statuslog="C:\Temp\status.log">

<ora:deploy>

<ora:parameter name="workspace" value="C:\JDeveloper\mywork\ADFLibrary1\ADFLibrary1.jws"/>

<ora:parameter name="project" value="ViewController"/>

<ora:parameter name="profile" value="ADFLibrary1"/>

<ora:parameter name="outputfile" value="C:\JDeveloper\mywork\ADFLibrary1\ViewController\deploy\ADFLibrary1"/>

</ora:deploy>

</ora:ojdeploy>

</target>

</project>

Experienced readers will know to setup a Resource Palette "File Connection" to map to C:\JDeveloper\mywork\lib then simply add the JARs from the palette.

Experienced readers will know to setup a Resource Palette "File Connection" to map to C:\JDeveloper\mywork\lib then simply add the JARs from the palette.Adding Apache Ivy

At this point we have a rudimentary form of dependency management setup, where a logical version 1 of ApplicationA has attached a logical version 1 of ADFLibrary1 through the use of the ADF Library JAR being attached to ApplicationA's ViewController project. Note the word "rudimentary". Currently there is no way to track versions. If we have separate versions of ApplicationA dependent on separate versions of ADFLibrary1, developers have to be very careful to check out and build the correct versions, and there's nothing inherently obvious in the generated JAR file names to gives us an idea of what versions are being used.

Let's introduce Apache Ivy into the mix with this simplistic dependency model as a basis for learning, to see how Ivy solves the versioning dependency issue.

Adding ivy.xml to each module

Ivy requires that each module have an ivy.xml. The ivy.xml file among other things describes for each module:

a) The module name

b) The version of the module

c) Determines what artefacts the module publishes

d) Track the module's dependencies including the version of the dependencies

For our existing ADFLibrary1 we'll add an ivy.xml file to our Build project containing the following details:

<?xml version="1.0" encoding="UTF-8"?>Of note:

<ivy-module version="2.0">

<info organisation="sage" module="ADFLibrary1" revision="1"/>

<configurations>

<conf name="jar" description="Java archive"/>

<conf name="ear" description="Enterprise archive"/>

</configurations>

<publications>

<artifact name="ADFLibrary1" conf="jar" ext="jar"/>

</publications>

<!-- <dependencies> There are no dependencies for this module <dependencies/> -->

</ivy-module>

a) The module name in the <info> tag

b) The revision/version number in the <info> tag

c) The publication of an ADF Library jar in the <publications> tag

d) And that this module is not dependent on any other modules through the commented out <dependencies> tag

(You might also note the <configurations> tag. Configurations define the type of artefacts we can possible generate for the module. In this case we're creating an ADF Library "JAR", but alternatively for example we could produce a WAR or EAR file or some other sort of artefact. For purposes of this blog post we'll keep this relatively simple and just stick to JARs and EARs).

For our existing ApplicationA its ivy.xml file under the Build project will look as follows:

<?xml version="1.0" encoding="UTF-8"?>Of note:

<ivy-module version="2.0">

<info organisation="sage" module="ApplicationA" revision="1"/>

<configurations>

<conf name="jar" description="Java archive"/>

<conf name="ear" description="Enterprise archive"/>

</configurations>

<publications>

<artifact name="ApplicationA" conf="ear" ext="ear"/>

</publications>

<dependencies>

<dependency org="sage" name="ADFLibrary1" rev="1">

<artifact name="ADFLibrary1" ext="jar"/>

</dependency>

</dependencies>

</ivy-module>

a) The module name ApplicationA in the <info> tag

b) The revision/version number 1 in the <info> tag

c) The publication of an EAR in the <publications> tag

d) And of most importance, a dependency of ADFLibrary1, specifically release/version 1.

It's this last point that is most important as not only does it track the dependencies between modules (which truthfully JDev was already doing for us) but the ivy.xml file also tracks the version dependency, namely ApplicationA release/version 1 is dependent on version/release 1 of ADFLibrary1.

Apache Ivy Repository

In the previously described application configuration we were assuming the build of ApplicationA and ADFLibrary1 was all on the same developer machine. It's relatively simply for 1 developer to copy the JARs to the correct location to satisfy the dependencies. Yet in a typical development environment there will be multiple developers working on different modules across different developer machines. Moving JARs between developer PCs becomes problematic. We really need some sort of developer repository to share the modules archives.

At this point we introduce an Apache Ivy repository into our solution. Simplistically the Ivy repository is a location where developers can publish JARs to, and other developers when building an application with a dependency, can download the dependencies from.

Ivy supports different types of repositories which are documented under Resolvers in the Ivy documentation. For purposes of this blog post we'll use the simplest repository type of "FileSystem".

In order to make use of the FileSystem Ivy repository all developers must have access to a file (typically called) ivysettings.xml. This file defines for Ivy where the repository resides among other settings. How you distribute this file to developers is up to you, maybe it's located on a shared network location, maybe a copy checked out to a common local location. For purposes of this blog post we'll assume it resides on every developer's machine under C:\JDeveloper\ivy:

The following reveals the contents of a possible ivysettings.xml file:

The following reveals the contents of a possible ivysettings.xml file:<ivysettings>Points to consider:

<property name="ivy.repo.dir" value="C:\JDeveloper\ivy\repositories\development"/>

<resolvers>

<chain>

<filesystem name="repository">

<ivy pattern="${ivy.repo.dir}/[module]/ivy[module]_[revision].xml" />

<artifact pattern="${ivy.repo.dir}/[module]/[type][artifact]_[revision].[ext]"/>

</filesystem>

</chain>

</resolvers>

</ivysettings>

1) Note the ivy.repo.dir property. Typically this would point to your own //SomeServer/YourRepositoryLocation which all developers can access on your local network. For the purposes of this blog post, in order to give readers a single zip file that they can download and use, I've changed this setting to instead locate the repository at C:\JDeveloper\ivy\repositories\development. This certainly *isn't* a good location for a shared repository, but one that is workable for demonstration purposes.

2) The <resolvers> <chain> defines the list of repositories for Ivy to publish to or download dependencies from. In this case we've only configured one repository, but there's nothing stopping you having a series of repositories.

3) The <ivy> subtag of the <filesystem> tag defines how Ivy will store and search for it's own metadata files in the repository, of which it stores information such as the module name, versions and more that is essentially copied from your ivy.xml files.

4) The <artifact> tag defines how Ivy will store and search for the actual artefacts you generate (such as the ADF Library JARs) in the repository.

With regards the last 2 points it's best to leave the patterns as the default, as in the end the repository can be treated as a black box. You don't really care how it works, just as long as Ivy allows you to publish and retrieve files from the repository.

Configuring Ant to *understand* Ivy

With the ivy.xml and ivysettings.xml files in place, we now need to configure our Ant build scripts to interpret the settings and work with our repository during builds.

First we download the Apache Ivy and install into a location each developer's machine can access. This blog posts assumes Ivy v2.2.0 and that the associated ivy-2.2.0.jar has been unzipped to C:\JDeveloper\ivy\apache-ivy-2.2.0:

Next we modify our existing build scripts for each module. In the build.xml file for *both* ADFLibrary1 and ApplicationA we insert the following code:

Next we modify our existing build scripts for each module. In the build.xml file for *both* ADFLibrary1 and ApplicationA we insert the following code:(Note in the downloadable application this code resides in build.xml, not pre-ivy.build.xml which was documented earlier in this blog post).

<property name="ivy.default.ivy.user.dir" value="C:\JDeveloper\ivy"/>Items to note:

<property name="ivy.default.ivy.lib.dir" value="C:\JDeveloper\lib"/>

<path id="ivy.lib.path">

<fileset dir="C:\JDeveloper\ivy\apache-ivy-2.2.0" includes="*.jar"/>

</path>

<taskdef resource="org/apache/ivy/ant/antlib.xml" uri="antlib:org.apache.ivy.ant" classpathref="ivy.lib.path"/>

<ivy:configure file="C:\JDeveloper\ivy\ivysettings.xml"/>

<ivy:info file="./ivy.xml"/>

1) Setting the property ivy.default.ivy.user.dir changes the default location under which Ivy stores local copies of the data downloaded from the repository.

2) Setting the property ivy.default.ivy.lib.dir defines the location where the JAR files should be ultimately delivered for dependent modules to make use of.

3) The <ivy:configure> tag tells Ivy where the ivysettings.xml file is located which includes the configuration information about the repositories.

4) The <ivy:info> tag tells Ivy where the current modules ivy.xml file is located.

Configuring Ant to *use* Ivy

With the previous Ivy setup we're now ready to start building using Ivy via Ant.

Let's consider our goals. What we want to first do is build and then publish ADFLibrary1 to the Ivy repository. Then subsequently for ApplicationA we want to download ADFLibrary1 from the Ivy repository, then build ApplicationA.

To achieve the first goal, we already have a Build Ant target in the ADFLibrary1 build.xml. So we just need to add another target "Publish" which will take the artefacts generated from the Build target as follows:

<target name="Publish">Items to note:

<ivy:publish resolver="repository" overwrite="true" pubrevision="${ivy.revision}" update="true">

<ivy:artifacts pattern="../ViewController/deploy/[artifact].[ext]"/>

</ivy:publish>

</target>

1) The <ivy:publish> tag that says which resolver (ie. which repository) to publish too, what to do if the exact file and revision already exists in the repository, and what revision/version to publish the file as. With regards the ${ivy.revision} this variable is derived from the ADFLibrary1's ivy.xml file.

2) The <artifacts> tag which tells the publish command where to find the artifact to publish.

3) Because of the <artifacts> tag there's a dependency that the module has already been built. This could be easily catered for in the overall build script by making an <antcall> to the Build target at the start of the <ivy:publish> tag, but for purposes of simplicity this change hasn't been made for this blog post.

At this point let's see what outputs we see if we run the Build and Publish scripts. First when we run the Build target the JDeveloper log window reports:

Buildfile: C:\JDeveloper\mywork\ADFLibrary1\Build\build.xmlAt the beginning of the output you can see Ivy being initialized but at the moment it's mostly not used. From the output you can see the JAR being built by ojdeploy and placed under C:/JDeveloper/mywork/ADFLibrary1/ViewController/deploy.

[ivy:configure] :: Ivy 2.2.0 - 20100923230623 :: http://ant.apache.org/ivy/ ::

[ivy:configure] :: loading settings :: file = C:\JDeveloper\ivy\ivysettings.xml

Build:

[ora:ojdeploy] ----build file----

[ora:ojdeploy] <?xml version = '1.0' standalone = 'yes'?>

[ora:ojdeploy] <ojdeploy-build>

[ora:ojdeploy] <deploy>

[ora:ojdeploy] <parameter name="workspace" value="C:\JDeveloper\mywork\ADFLibrary1\ADFLibrary1.jws"/>

[ora:ojdeploy] <parameter name="project" value="ViewController"/>

[ora:ojdeploy] <parameter name="profile" value="ADFLibrary1"/>

[ora:ojdeploy] <parameter name="outputfile" value="C:\JDeveloper\mywork\ADFLibrary1\ViewController\deploy\ADFLibrary1"/>

[ora:ojdeploy] </deploy>

[ora:ojdeploy] <defaults>

[ora:ojdeploy] <parameter name="statuslogfile" value="C:\Temp\status.log"/>

[ora:ojdeploy] </defaults>

[ora:ojdeploy] </ojdeploy-build>

[ora:ojdeploy] ------------------

[ora:ojdeploy] 07/12/2011 1:31:42 PM oracle.security.jps.util.JpsUtil disableAudit

[ora:ojdeploy] INFO: JpsUtil: isAuditDisabled set to true

[ora:ojdeploy] 07/12/2011 1:31:43 PM oracle.jdevimpl.deploy.fwk.TopLevelDeployer prepareImpl

[ora:ojdeploy] INFO: ---- Deployment started. ----

[ora:ojdeploy] 07/12/2011 1:31:43 PM oracle.jdevimpl.deploy.fwk.TopLevelDeployer printTargetPlatform

[ora:ojdeploy] INFO: Target platform is Standard Java EE.

[ora:ojdeploy] 07/12/2011 1:31:43 PM oracle.jdevimpl.deploy.common.ProfileDependencyAnalyzer deployImpl

[ora:ojdeploy] INFO: Running dependency analysis...

[ora:ojdeploy] 07/12/2011 1:31:43 PM oracle.jdeveloper.deploy.common.BuildDeployer build

[ora:ojdeploy] INFO: Building...

[ora:ojdeploy] Compiling...

[ora:ojdeploy] [1:31:45 PM] Successful compilation: 0 errors, 0 warnings.

[ora:ojdeploy] 07/12/2011 1:31:45 PM oracle.jdevimpl.deploy.common.ModulePackagerImpl deployProfiles

[ora:ojdeploy] INFO: Deploying profile...

[ora:ojdeploy] 07/12/2011 1:31:45 PM oracle.adfdt.controller.adfc.source.deploy.AdfcConfigDeployer deployerPrepared

[ora:ojdeploy] INFO: Moving WEB-INF/adfc-config.xml to META-INF/adfc-config.xml

[ora:ojdeploy]

[ora:ojdeploy] 07/12/2011 1:31:45 PM oracle.jdeveloper.deploy.jar.ArchiveDeployer logFileWritten

[ora:ojdeploy] INFO: Wrote Archive Module to file:/C:/JDeveloper/mywork/ADFLibrary1/ViewController/deploy/ADFLibrary1.jar

[ora:ojdeploy] 07/12/2011 1:31:45 PM oracle.jdevimpl.deploy.fwk.TopLevelDeployer finishImpl

[ora:ojdeploy] INFO: Elapsed time for deployment: 3 seconds

[ora:ojdeploy] 07/12/2011 1:31:45 PM oracle.jdevimpl.deploy.fwk.TopLevelDeployer finishImpl

[ora:ojdeploy] INFO: ---- Deployment finished. ----

[ora:ojdeploy] Status summary written to /C:/Temp/status.log

Next when we run the Publish task the following output is produced:

Buildfile: C:\JDeveloper\mywork\ADFLibrary1\Build\build.xmlBeyond the initial Ivy setup, of importance we can see the calls to <ivy:publish> pulling the JAR from the previous Build step to the repository. If we look at our C: drive where the repository is located we can indeed see files now sitting in the repository:

[ivy:configure] :: Ivy 2.2.0 - 20100923230623 :: http://ant.apache.org/ivy/ ::

[ivy:configure] :: loading settings :: file = C:\JDeveloper\ivy\ivysettings.xml

Publish:

[ivy:publish] :: publishing :: sage#ADFLibrary1

[ivy:publish] published ADFLibrary1 to C:\JDeveloper\ivy\repositories\development/ADFLibrary1/jarADFLibrary1_1.jar

[ivy:publish] published ivy to C:\JDeveloper\ivy\repositories\development/ADFLibrary1/ivyADFLibrary1_1.xml

The different files are beyond the discussion here, but to say this is the structure Ivy has put into place.

The different files are beyond the discussion here, but to say this is the structure Ivy has put into place.At this point we've achieved our first goal of build and publishing the ADFLibrary1 to the Ivy repository. Let's more over to our second goal for ApplicationA where we want to download ADFLibrary1 from the Ivy repository, then build ApplicationA.

In order to do this we'll add a new target to the ApplicationA build.xml "Download_dependencies" as follows:

<target name="Download_dependencies">Of note:

<ivy:cleancache/>

<ivy:resolve/>

<ivy:retrieve pattern="${ivy.default.ivy.lib.dir}/[artifact].[ext]" type="jar"/>

</target>

1) The <ivy:cleancache> tag clears the ${ivy.default.ivy.user.dir}\Cache of previously downloaded dependencies. This is only really necessary if when you're uploading dependencies you're not creating new versions, but rather overwriting an existing release. In this later case Ivy will in preference use the cached copy of the JAR rather than retrieving the updated JAR in the repository. Flushing the cache solves this issue as the JARs need to be downloaded each time.

2) The <ivy:resolve> tag which loads the dependency metadata for the current module from the associated ivy.xml file, determines which artefacts to obtain from the repository and downloads them to the ${ivy.default.ivy.user.dir}\Cache directory on the local PC.

3) The <ivy:retrieve> tag then searches the Cache directory for the required JAR files and places them in the location where the application expects to find them, namely C:\JDeveloper\lib

If we run this task we see in the logs:

Buildfile: C:\JDeveloper\mywork\ApplicationA\Build\build.xmlBeyond the initial configuration of Ivy, in the output you can see the &ivy;resolve> tag resolving the dependency of ApplicationA on ADFLibrary1 version 1, then downloading the file to the cache. Finally the <retrieve> tag retrieves the file from the cache and copies it to the local lib directory (though this isn't that obvious from the logs).

[ivy:configure] :: Ivy 2.2.0 - 20100923230623 :: http://ant.apache.org/ivy/ ::

[ivy:configure] :: loading settings :: file = C:\JDeveloper\ivy\ivysettings.xml

Download_dependencies:

[ivy:resolve] :: resolving dependencies :: sage#ApplicationA;1

[ivy:resolve] confs: [jar, ear]

[ivy:resolve] found sage#ADFLibrary1;1 in repository

[ivy:resolve] downloading C:\JDeveloper\ivy\repositories\development\ADFLibrary1\jarADFLibrary1_1.jar ...

[ivy:resolve] .. (6kB)

[ivy:resolve] .. (0kB)

[ivy:resolve] [SUCCESSFUL ] sage#ADFLibrary1;1!ADFLibrary1.jar (0ms)

[ivy:resolve] :: resolution report :: resolve 109ms :: artifacts dl 0ms

---------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------

| jar | 1 | 1 | 1 | 0 || 1 | 1 |

| ear | 1 | 1 | 1 | 0 || 1 | 1 |

---------------------------------------------------------

[ivy:retrieve] :: retrieving :: sage#ApplicationA

[ivy:retrieve] confs: [jar, ear]

[ivy:retrieve] 1 artifacts copied, 0 already retrieved (6kB/0ms)

If you now Build ApplicationA you will see it compiles correctly. To check it doesn't build when the ADFLibrary1.jar is not sitting in the C:\JDeveloper\lib directory, delete the JAR and rebuild ApplicationA.

Making and building with new revisions

Overtime your modules will include new revisions. You will of course be checking these changes in and out of your version control system such as Subversion. How do you cater for the new versions with regards to Ivy?

Imagine the scenario where ApplicationA release 3 is now dependent on ADFLibrary1 revision 6. This requires two simple changes.

Firstly in the ADFLibrary1 ivy.xml, replace the revision number under the <info> tag to 6, build and publish.

Second in ApplicationA's ivy.xml, update it's revision number to 3, then in the <dependencies> tag update the ADFLibrary1 dependency's revision number to 6. Forthright when you download the dependencies for ApplicationA revision 3, it will download revision 6 from the repository.

Conclusion

At this point we have all the moving parts to make use of Ivy with JDeveloper and ADF to implement a dependency management solution. While the example is contrived, it shows the use of:

1) The ivy.xml file to define each module, what it publishes and what it depends on

2) The ivysettings.xml file to define the location of the shared repository

3) The Ivy Ant tasks for publishing modules to the repository, as well as downloading modules from the repository

If I have time I will write a different blog post to show how transitive dependencies work in Ivy. The nice thing about Ivy is it handles these automagically so there's not much to configure, just explain a working example.

Beyond this there really isn't that much else to explain, working out the nuances of Ivy takes around a week, retrofitting it into your environment takes longer, but beyond that Ivy is pretty simple in that it does one thing and it does one thing well.

Finally note I'm not advocating Apache Ivy over Apache Maven with this post, ultimately this post simply documents how to use Ivy with JDeveloper, and readers need to make their own choice which tool if any to use. Future versions of JDeveloper (See the Maven integration section in the following blog post) are scheduled to have improved Maven integration so readers should take care not to discount Maven as an option.

Errata

This was tested against JDev 11.1.2.1.0 and 11.1.1.4.0, but in essence should run against any JDev version with Ant support.

ADF bug: missing af:column borders in af:table for IE7

There’s a rather obscure JDeveloper bug that only effects IE7, for af:columns in af:tables that show af:outputText fields based on dates that are null (phew, try and say that with a mouth full of wheaties). It occurs in 11.1.1.4.0 and 11.1.2.0.0 (and all versions in between it’s assumed).

In the previous picture from IE7 if you look closely, you’ll notice that the HireDate2 column has lost its border for the null entries. Note the other columns even when they are null, still have a border.

In the previous picture from IE7 if you look closely, you’ll notice that the HireDate2 column has lost its border for the null entries. Note the other columns even when they are null, still have a border.

If we look under IE8 (or any other browser for that matter) we see the problem doesn’t occur at all:

The problem is being caused by 2 separate issues:

The problem is being caused by 2 separate issues:

1) IE7 does not render borders for HTML table cells (ie. the tag) if the cell contains no data. This can be fixed if the cell contains a tag.

2) ADF Faces RC includes the tag for empty table cells, except for null date af:outputText fields who in addition have child tags that aren’t converter and validator tags.

To demonstrate the bug the MissingTableBorders application includes a simple test case. The application contains a View Object “EmployeesView” with a query based on the Oracle HR sample schema:

Next is the code for our JSPX page “Employees.jspx” containing an af:table based on the VO from above. I’ve deliberately cut out the surroundings tags to focus on the tags that matter:

Next is the code for our JSPX page “Employees.jspx” containing an af:table based on the VO from above. I’ve deliberately cut out the surroundings tags to focus on the tags that matter:

a) There are two columns to display data from the first_name column. The only difference between them is the first_name2 column includes an additional af:clientAttribute tag.

b) There are two columns to display data from the hire_date column. Similar to the first_name columns, they only differ in the fact hire_date2 includes an af:clientAttribute tag.

When this page renders in the browser the generate HTML content for the rows of the table are as follows (note the formatting and the comment were added by me to make it easier to read):

If we look at records 105 to 110 note:

a) The FirstName1 column when null includes a to forcefully place a blank entry into the cell.

b) The FirstName2 column does exactly the same, remembering the FirstName2 column includes an additional af:clientAttribute tag.

c) For the HireDate1 column it also includes a . Remember the HireDate1 column *does*not* include an af:clientAttribute tag.

d) For the HireDate2 column it *does*not* include an tag, even though the HireDate2 values are null. Remember the HireDate2 column *does*include* an af:clientAttribute tag.

At this point we only see differing behaviour with af:outputText values in af:columns where they show Dates *and* the af:outputText includes an af:clientAttribute tag.

From my testing, converter and validator tags added to the af:outputText don't exhibit the same behaviour. However any other tag, not just adding an af:clientAttribute tag but even an af:clientListener as example will result in the missing tag.

This in itself isn't an issue but when we consider IE7. If you render this page in IE8 the null date columns with an af:clientAttribute tag will still show the cell borders:

Yet in IE7 we get this:

Yet in IE7 we get this:

While the issue is particular to IE7, the issue could be fixed by ADF Faces RC consistently generating the entry as described in the HTML generated above.

While the issue is particular to IE7, the issue could be fixed by ADF Faces RC consistently generating the entry as described in the HTML generated above.

In discussing this bug (12942411) with Oracle staff it turns out there is a broader base bug 9682969 where this issue occurs for more than just date columns. Unfortunately the problem is not easily fixable by Oracle as it requires the af:table and af:column components to know if the child component (in this example an af:outputText) will be null before it and the data it refers to is accessed and rendered.

The simple workaround as proposed by Oracle is to not render the child component at all if the data value is null, simply by including code similar to the following:

My thanks to Oracle staff who assisted in looking and resolving this issue.

A sample application can be downloaded from here.

In the previous picture from IE7 if you look closely, you’ll notice that the HireDate2 column has lost its border for the null entries. Note the other columns even when they are null, still have a border.

In the previous picture from IE7 if you look closely, you’ll notice that the HireDate2 column has lost its border for the null entries. Note the other columns even when they are null, still have a border.If we look under IE8 (or any other browser for that matter) we see the problem doesn’t occur at all:

The problem is being caused by 2 separate issues:

The problem is being caused by 2 separate issues:1) IE7 does not render borders for HTML table cells (ie. the tag) if the cell contains no data. This can be fixed if the cell contains a tag.

2) ADF Faces RC includes the tag for empty table cells, except for null date af:outputText fields who in addition have child tags that aren’t converter and validator tags.

To demonstrate the bug the MissingTableBorders application includes a simple test case. The application contains a View Object “EmployeesView” with a query based on the Oracle HR sample schema:

SELECT emp.EMPLOYEE_ID,The query is designed to return two String columns that will have a mix of null and non null values, and two date columns that will also have a mix of null and non null values. If we run the Business Components Browser the data appears as follows:

(CASE WHEN employee_id < 105 THEN first_name ELSE null END) AS FIRST_NAME1,

(CASE WHEN employee_id < 105 THEN first_name ELSE null END) AS FIRST_NAME2,

(CASE WHEN employee_id < 105 THEN hire_date ELSE null END) AS HIRE_DATE1,

(CASE WHEN employee_id < 105 THEN hire_date ELSE null END) AS HIRE_DATE2

FROM EMPLOYEES emp

WHERE emp.EMPLOYEE_ID BETWEEN 100 AND 110

ORDER BY emp.EMPLOYEE_ID

Next is the code for our JSPX page “Employees.jspx” containing an af:table based on the VO from above. I’ve deliberately cut out the surroundings tags to focus on the tags that matter:

Next is the code for our JSPX page “Employees.jspx” containing an af:table based on the VO from above. I’ve deliberately cut out the surroundings tags to focus on the tags that matter:<af:tableThe code was created by JDeveloper by drag and dropping the VO from the data control palette, with the following changes:

value="#{bindings.EmployeesView1.collectionModel}"

var="row"

rows="#{bindings.EmployeesView1.rangeSize}"

emptyText="#{bindings.EmployeesView1.viewable ? 'No data.' : 'Access Denied.'}"

fetchSize="#{bindings.EmployeesView1.rangeSize}"

rowBandingInterval="0"

selectedRowKeys="#{bindings.EmployeesView1.collectionModel.selectedRow}"

selectionListener="#{bindings.EmployeesView1.collectionModel.makeCurrent}"

rowSelection="single"

id="t1">

<af:column

sortProperty="EmployeeId"

sortable="false"

headerText="#{bindings.EmployeesView1.hints.EmployeeId.label}"

id="c5">

<af:outputText value="#{row.EmployeeId}" id="ot4">

<af:convertNumber groupingUsed="false" pattern="#{bindings.EmployeesView1.hints.EmployeeId.format}"/>

</af:outputText>

</af:column>

<af:column

sortProperty="FirstName1"

sortable="false"

headerText="#{bindings.EmployeesView1.hints.FirstName1.label}"

id="c4">

<af:outputText value="#{row.FirstName1}" id="ot5">

</af:outputText>

</af:column>

<af:column

sortProperty="FirstName2"

sortable="false"

headerText="#{bindings.EmployeesView1.hints.FirstName2.label}"

id="c3">

<af:outputText value="#{row.FirstName2}" id="ot2">

<af:clientAttribute name="ItemValue" value="#{row.FirstName2}"/>

</af:outputText>

</af:column>

<af:column

sortProperty="HireDate1"

sortable="false"

headerText="#{bindings.EmployeesView1.hints.HireDate1.label}"

id="c1">

<af:outputText value="#{row.HireDate1}" id="ot3">

<af:convertDateTime pattern="#{bindings.EmployeesView1.hints.HireDate1.format}"/>

</af:outputText>

</af:column>

<af:column

sortProperty="HireDate2"

sortable="false"

headerText="#{bindings.EmployeesView1.hints.HireDate2.label}"

id="c2">

<af:outputText value="#{row.HireDate2}" id="ot1">

<af:convertDateTime pattern="#{bindings.EmployeesView1.hints.HireDate2.format}"/>

<af:clientAttribute name="ItemValue" value="#{row.HireDate2}"/>

</af:outputText>

</af:column>

</af:table>

a) There are two columns to display data from the first_name column. The only difference between them is the first_name2 column includes an additional af:clientAttribute tag.

b) There are two columns to display data from the hire_date column. Similar to the first_name columns, they only differ in the fact hire_date2 includes an af:clientAttribute tag.

When this page renders in the browser the generate HTML content for the rows of the table are as follows (note the formatting and the comment were added by me to make it easier to read):

<tbody>If you look at records 100 to 104 all columns include data.

<!-- ---------- Record 100 ---------- -->

<tr _afrrk="0" class="xxy ">

<td style="width:100px;" nowrap="" class="xxv"><nobr>100</nobr></td>

<td style="width:100px;" nowrap="" class="xxv"><nobr>Steven</nobr></td>

<td style="width:100px;" nowrap="" class="xxv"><nobr><span id="t1:0:ot2">Steven</span></nobr></td>

<td style="width:100px;" nowrap="" class="xxv"><nobr>17/06/1987</nobr></td>

<td style="width:100px;" nowrap="" class="xxv"><nobr><span id="t1:0:ot1">17/06/1987</span></nobr></td>

</tr>

<!-- ---------- Record 101 ---------- -->

<tr _afrrk="1" class="xxy">

<td nowrap="" class="xxv"><nobr>101</nobr></td>

<td nowrap="" class="xxv"><nobr>Neena</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:1:ot2">Neena</span></nobr></td>

<td nowrap="" class="xxv"><nobr>21/09/1989</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:1:ot1">21/09/1989</span></nobr></td>

</tr>

<!-- ---------- Record 102 ---------- -->

<tr _afrrk="2" class="xxy">

<td nowrap="" class="xxv"><nobr>102</nobr></td><td nowrap="" class="xxv"><nobr>Lex</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:2:ot2">Lex</span></nobr></td>

<td nowrap="" class="xxv"><nobr>13/01/1993</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:2:ot1">13/01/1993</span></nobr></td>

</tr>

<!-- ---------- Record 103 ---------- -->

<tr _afrrk="3" class="xxy">

<td nowrap="" class="xxv"><nobr>103</nobr></td>

<td nowrap="" class="xxv"><nobr>Alexander</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:3:ot2">Alexander</span></nobr></td>

<td nowrap="" class="xxv"><nobr>3/01/1990</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:3:ot1">3/01/1990</span></nobr></td>

</tr>

<!-- ---------- Record 104 ---------- -->

<tr _afrrk="4" class="xxy">

<td nowrap="" class="xxv"><nobr>104</nobr></td>

<td nowrap="" class="xxv"><nobr>Bruce</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:4:ot2" class="">Bruce</span></nobr></td>

<td nowrap="" class="xxv"><nobr>21/05/1991</nobr></td>

<td nowrap="" class="xxv"><nobr><span id="t1:4:ot1">21/05/1991</span></nobr></td>

</tr>

<!-- ---------- Record 105 ---------- -->

<tr _afrrk="5" class="xxy"><td nowrap="" class="xxv"><nobr>105</nobr></td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:5:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:5:ot1"></span></nobr></td>

</tr>

<!-- ---------- Record 106 ---------- -->

<tr _afrrk="6" class="p_AFSelected p_AFFocused xxy">

<td nowrap="" class="xxv"><nobr>106</nobr></td><td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:6:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:6:ot1"></span></nobr></td>

</tr>

<!-- ---------- Record 107 ---------- -->

<tr _afrrk="7" class="xxy"><td nowrap="" class="xxv"><nobr>107</nobr></td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:7:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:7:ot1"></span></nobr></td>

</tr>

<!-- ---------- Record 108 ---------- -->

<tr _afrrk="8" class="xxy">

<td nowrap="" class="xxv"><nobr>108</nobr></td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:8:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:8:ot1"></span></nobr></td>

</tr>

<!-- ---------- Record 109 ---------- -->

<tr _afrrk="9" class="xxy">

<td nowrap="" class="xxv"><nobr>109</nobr></td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:9:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:9:ot1"></span></nobr></td>

</tr>

<!-- ---------- Record 110 ---------- -->

<tr _afrrk="10" class="xxy">

<td nowrap="" class="xxv"><nobr>110</nobr></td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:10:ot2"></span></nobr> </td>

<td nowrap="" class="xxv"><nobr></nobr> </td>

<td nowrap="" class="xxv"><nobr><span id="t1:10:ot1"></span></nobr></td>

</tr>

</tbody>

If we look at records 105 to 110 note:

a) The FirstName1 column when null includes a to forcefully place a blank entry into the cell.

b) The FirstName2 column does exactly the same, remembering the FirstName2 column includes an additional af:clientAttribute tag.

c) For the HireDate1 column it also includes a . Remember the HireDate1 column *does*not* include an af:clientAttribute tag.

d) For the HireDate2 column it *does*not* include an tag, even though the HireDate2 values are null. Remember the HireDate2 column *does*include* an af:clientAttribute tag.

At this point we only see differing behaviour with af:outputText values in af:columns where they show Dates *and* the af:outputText includes an af:clientAttribute tag.

From my testing, converter and validator tags added to the af:outputText don't exhibit the same behaviour. However any other tag, not just adding an af:clientAttribute tag but even an af:clientListener as example will result in the missing tag.

This in itself isn't an issue but when we consider IE7. If you render this page in IE8 the null date columns with an af:clientAttribute tag will still show the cell borders:

Yet in IE7 we get this:

Yet in IE7 we get this: While the issue is particular to IE7, the issue could be fixed by ADF Faces RC consistently generating the entry as described in the HTML generated above.

While the issue is particular to IE7, the issue could be fixed by ADF Faces RC consistently generating the entry as described in the HTML generated above.In discussing this bug (12942411) with Oracle staff it turns out there is a broader base bug 9682969 where this issue occurs for more than just date columns. Unfortunately the problem is not easily fixable by Oracle as it requires the af:table and af:column components to know if the child component (in this example an af:outputText) will be null before it and the data it refers to is accessed and rendered.

The simple workaround as proposed by Oracle is to not render the child component at all if the data value is null, simply by including code similar to the following:

My thanks to Oracle staff who assisted in looking and resolving this issue.

A sample application can be downloaded from here.

ADF Faces - a logic bomb in the order of bean instantiations

One of my talented colleagues discovered an interesting ADF logic bomb which I thought I'd share here. The issue is with the instantiation order of ADF Faces scoped beans in JDev 11g when using Bounded Task Flows embedded as regions in another page.

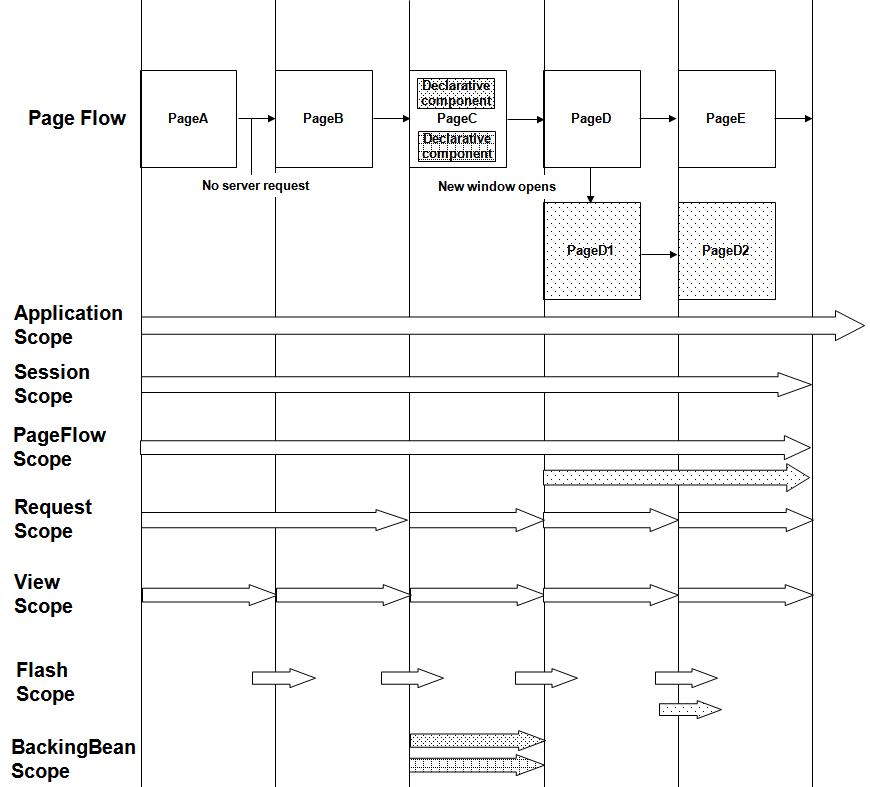

Regular readers would be familiar that Oracle's ADF solution is built on top of JavaServer Faces (JSF). ADF supports bean scopes such as ViewScope, PageFlowScope and BackingBeanScope on top of the regular JSF ApplicationScope, SessionScope and RequestScope beans. Readers should also be familiar that the beans have a defined life (ie. scope) as detailed in the JDev Web Guide:

(Source: Oracle JDeveloper Web Guide 11.1.2.1 section 5.6 figure 5-11)

(Source: Oracle JDeveloper Web Guide 11.1.2.1 section 5.6 figure 5-11)

In specifically focusing on the life cycle of ADF PageFlowScope and BackingBeanScope beans, the guide states (to paraphrase):

1) A PageFlowScope bean for a Task Flow (either bounded or unbounded) survives for the life of the task flow.

2) A BackingBeanScope bean for a page fragment will survive from when receiving a request from the client and sending a response back.

The implication of this is when we're using Bounded Task Flows (BTFs) based on page fragments, it's typical to have a PageFlowScope bean to accept and carry the parameters of the BTF, and one or more BackingBeanScope beans for each fragment within the BTF.

Sample Application

With this in mind let's explore a simple application that shows this sort of usage in play. You can download the sample application from here.

The application's Unbounded Task Flow (UTF) includes a single SessionScope bean MySessionBean carrying one attribute mySessionBeanString as follows:

The UTF also includes a single page MyPage.jspx containing the following code:

When this page is first rendered the inputText makes reference to the SessionScope variable. JSF doesn't pre-initialize managed beans, only on first access do they get instantiated. As such as soon as the inputText is rendered we should see the message from the MySessionBean constructor when it accesses the mySessionBeanString via the EL expression:

MySessionBean initialized

If we were to comment out the region, run this page and press the commandButton, we would only see the initialized message once, as the session bean lives for the life of the user's session.

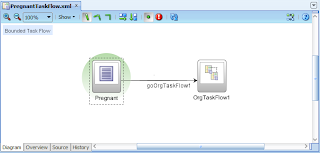

Now let's move onto considering the region and embedded Bounded Task Flow (BTF) called FragmentBTF.xml. Points of note for the BTF are:

a) The Task Flow binding has its Refresh property = ifNeeded

b) And the BTF expects a parameter entitled btfParameterString, which takes its value from our SessionScope beans variable:

In terms of the FragmentBTF (as separate to the region/task flow binding) it has the following characteristics:

In terms of the FragmentBTF (as separate to the region/task flow binding) it has the following characteristics:

a) The BTFs has its initializers and finalizers set to call a "none" scope TaskFlowUtilsBean to simply print a message when the task flow is initialized and finalized. This will help us to understand when the BTF restarts and terminates.

b) The BTF has one incoming parameter btfParameterString. To store this value the BTF has its own PageFlowScope bean called MyPageFlowBean, with a variable myPageFlowBeanString to carry the parameter value.

c) The BTF contains a single fragment MyFragment.jsff with the following code:

c.1) An inputText to output the current value for the MyPageFlowBean.myPageFlowBeanString. Remember this value is indirectly derived from the btfParamaterString of the BTF.

c.2) A second inputText to output the value from another bean, this time a BackingScopeBean which we consider next.

d) The BackingBeanScope bean is where we'll see some interesting behaviour. Let's explain its characteristics first:

d.1) The BackingBeanScope bean entitled MyBackingBean is managed by the BTF.

d.2) It is only referenced by the MyFragment.jsff within the BTF, via the inputText above in c.2.

d.3) The BackingBeanScope bean has the following code which includes the usual System.out.println on the constructor:

d.5) However note that the constructor of the bean grabs a reference to the PageFlowScope bean and uses that to access the myPageFlowBeanString value, writing the value to the myBackingBeanString.

While this example is abstract for purposes of this blog post, it's not uncommon in context of a BTF for a backing bean to want to access state from the BTF's page flow scope bean. As such the technique is to use the JSF classes to evaluate an EL expression to return the page flow scope bean. This is what the resolveELExpression method in the backing bean does, called via the constructor and given to a by-reference-variable in the backing bean to hold for its life/scope.

At this point we have all the moving parts of our very small application.

Scenario One - Initialization

Let's run through the sequence of events we expect to occur when this page renders for the first time agaom, this time including the BTF-region processing as well as the parent page's processing:

1) From earlier we know that when the page first renders we'll see the SessionScope bean's constructor logged as the inputText in MyPage.jspx accesses mySessionBeanString.

MySessionBean initialized